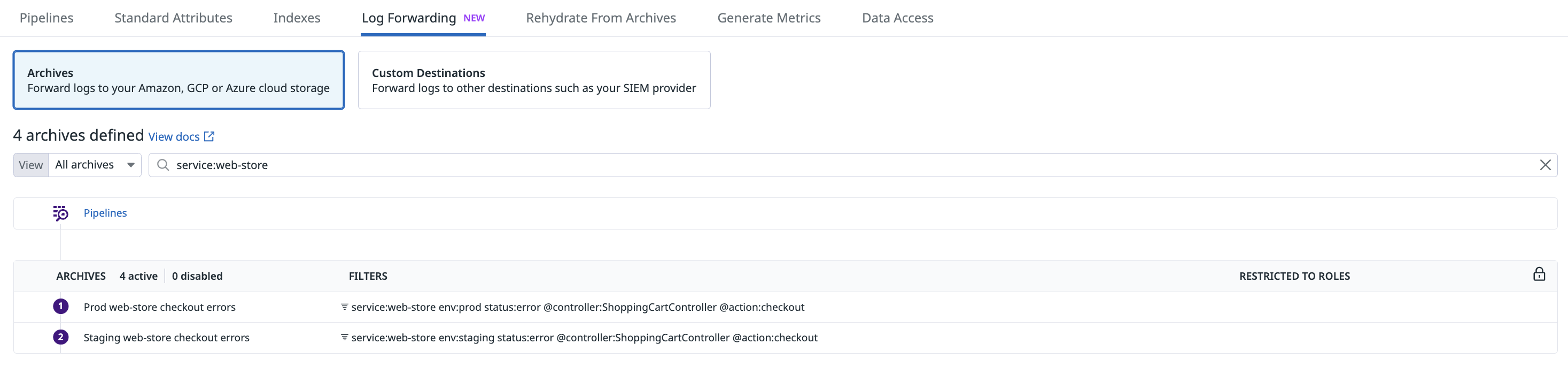

Log Archives

Overview

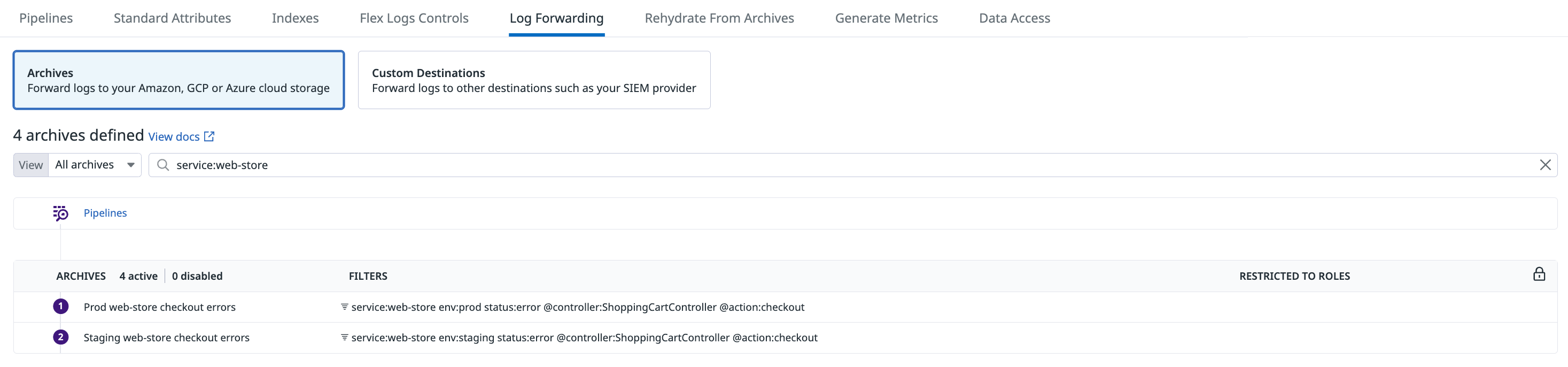

Configure your Datadog account to forward all the logs ingested—whether indexed or not—to a cloud storage system of your own. Keep your logs in a storage-optimized archive for longer periods of time and meet compliance requirements while also keeping auditability for ad-hoc investigations, with Rehydration.

Navigate to the Log Forwarding page to set up an archive for forwarding ingested logs to your own cloud-hosted storage bucket.

- If you haven’t already, set up a Datadog integration for your cloud provider.

- Create a storage bucket.

- Set permissions to `read` and/or `write` on that archive.

- Route your logs to and from that archive.

- Configure advanced settings such as encryption, storage class, and tags.

- Validate your setup and check for possible misconfigurations that Datadog can detect for you.

See how to archive your logs with Observability Pipelines if you want to route your logs to a storage-optimized archive directly from your environment.

Configure an archive

Set up an integration

If not already configured, set up the AWS integration for the AWS account that holds your S3 bucket.

- In the general case, this involves creating a role that Datadog can use to integrate with AWS S3.

- Specifically for AWS China accounts, use access keys as an alternative to role delegation.

Set up the Azure integration within the subscription that holds your new storage account, if you haven’t already. This involves creating an app registration that Datadog can use to integrate with Azure.

Note: Archiving to Azure ChinaCloud and Azure GermanyCloud is not supported. Archiving to Azure GovCloud is supported in Preview. To request access, contact Datadog support.

Set up the Google Cloud integration for the project that holds your GCS storage bucket, if you haven’t already. This involves creating a Google Cloud service account that Datadog can use to integrate with Google Cloud.

Create a storage bucket

Sending logs to an archive is outside of the Datadog GovCloud environment, which is outside the control of Datadog. Datadog shall not be responsible for any logs that have left the Datadog GovCloud environment, including without limitation, any obligations or requirements that the user may have related to FedRAMP, DoD Impact Levels, ITAR, export compliance, data residency or similar regulations applicable to such logs.

Go into your AWS console and create an S3 bucket to send your archives to.

Datadog Archives do not support bucket names with dots (.) when integrated with an S3 FIPS endpoint, which relies on virtual-host style addressing. Learn more in the AWS documentation on AWS FIPS and AWS Virtual Hosting.
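A quick pre-flight check can catch this restriction before an archive is configured. The following is a minimal sketch and not part of any Datadog or AWS tooling: the function name `is_fips_safe_bucket_name` is hypothetical, and it checks only for dots, not the full set of S3 bucket naming rules.

```python
def is_fips_safe_bucket_name(bucket_name: str) -> bool:
    """Return True if the name contains no dots.

    Dots are the only thing checked here; other S3 naming rules
    (length, allowed characters) are out of scope for this sketch.
    """
    return "." not in bucket_name


# Example bucket names are placeholders, not real buckets.
for name in ("my-log-archive", "my.log.archive"):
    verdict = "ok" if is_fips_safe_bucket_name(name) else "contains dots"
    print(f"{name}: {verdict}")
```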

Notes:

- Do not make your bucket publicly readable.

- For US1, US3, and US5 sites, see AWS Pricing for inter-region data transfer fees and how cloud storage costs may be impacted. Consider creating your storage bucket in `us-east-1` to manage your inter-region data transfer fees.

- Go to your Azure Portal and create a storage account to send your archives to. Give your storage account a name, select either standard performance or Block blobs premium account type, and select the hot or cool access tier.

- Create a container service in that storage account. Take note of the container name, because you need to enter it on the Datadog Archive page.

Note: Do not set immutability policies because the last data needs to be rewritten in some rare cases (typically a timeout).

Go to your Google Cloud account and create a GCS bucket to send your archives to. Under Choose how to control access to objects, select Set object-level and bucket-level permissions.

Note: Do not add a retention policy because the last data needs to be rewritten in some rare cases (typically a timeout).

Set permissions

Only Datadog users with the logs_write_archive permission can create, modify, or delete log archive configurations.

Create a policy with the following permission statements:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "DatadogUploadAndRehydrateLogArchives",
          "Effect": "Allow",
          "Action": ["s3:PutObject", "s3:GetObject"],
          "Resource": [
            "arn:aws:s3:::<MY_BUCKET_NAME_1_/_MY_OPTIONAL_BUCKET_PATH_1>/*",
            "arn:aws:s3:::<MY_BUCKET_NAME_2_/_MY_OPTIONAL_BUCKET_PATH_2>/*"
          ]
        },
        {
          "Sid": "DatadogRehydrateLogArchivesListBucket",
          "Effect": "Allow",
          "Action": "s3:ListBucket",
          "Resource": [
            "arn:aws:s3:::<MY_BUCKET_NAME_1>",
            "arn:aws:s3:::<MY_BUCKET_NAME_2>"
          ]
        }
      ]
    }

- The `GetObject` and `ListBucket` permissions allow for rehydrating from archives.

- The `PutObject` permission is sufficient for uploading archives.

- Ensure that the resource value under the `s3:PutObject` and `s3:GetObject` actions ends with `/*` because these permissions are applied to objects within the buckets.

Edit the bucket names.

Optionally, specify the paths that contain your log archives.

Attach the new policy to the Datadog integration role.

- Navigate to Roles in the AWS IAM console.

- Locate the role used by the Datadog integration. By default it is named DatadogIntegrationRole, but the name may vary if your organization has renamed it. Click the role name to open the role summary page.

- Click Add permissions, and then Attach policies.

- Enter the name of the policy created above.

- Click Attach policies.
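When several buckets or paths are involved, generating the policy document can help avoid a missing `/*` suffix. The following is a minimal sketch of that idea: `build_archive_policy` is a hypothetical helper, the bucket names and path are placeholders, and the output should still be reviewed before it is attached to the Datadog integration role.

```python
import json


def build_archive_policy(targets: list[tuple[str, str]]) -> dict:
    """Build the S3 policy for (bucket, optional_path) pairs.

    Object-level actions get resources ending in /*, while
    s3:ListBucket gets the bare bucket ARN.
    """
    object_arns = []
    bucket_arns = []
    for bucket, path in targets:
        path = path.strip("/")
        scope = f"{bucket}/{path}" if path else bucket
        object_arns.append(f"arn:aws:s3:::{scope}/*")
        bucket_arn = f"arn:aws:s3:::{bucket}"
        if bucket_arn not in bucket_arns:
            bucket_arns.append(bucket_arn)
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DatadogUploadAndRehydrateLogArchives",
                "Effect": "Allow",
                "Action": ["s3:PutObject", "s3:GetObject"],
                "Resource": object_arns,
            },
            {
                "Sid": "DatadogRehydrateLogArchivesListBucket",
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": bucket_arns,
            },
        ],
    }


# Placeholder bucket names and path, for illustration only.
policy = build_archive_policy(
    [("example-logs-a", "datadog/archives"), ("example-logs-b", "")]
)
print(json.dumps(policy, indent=2))
```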

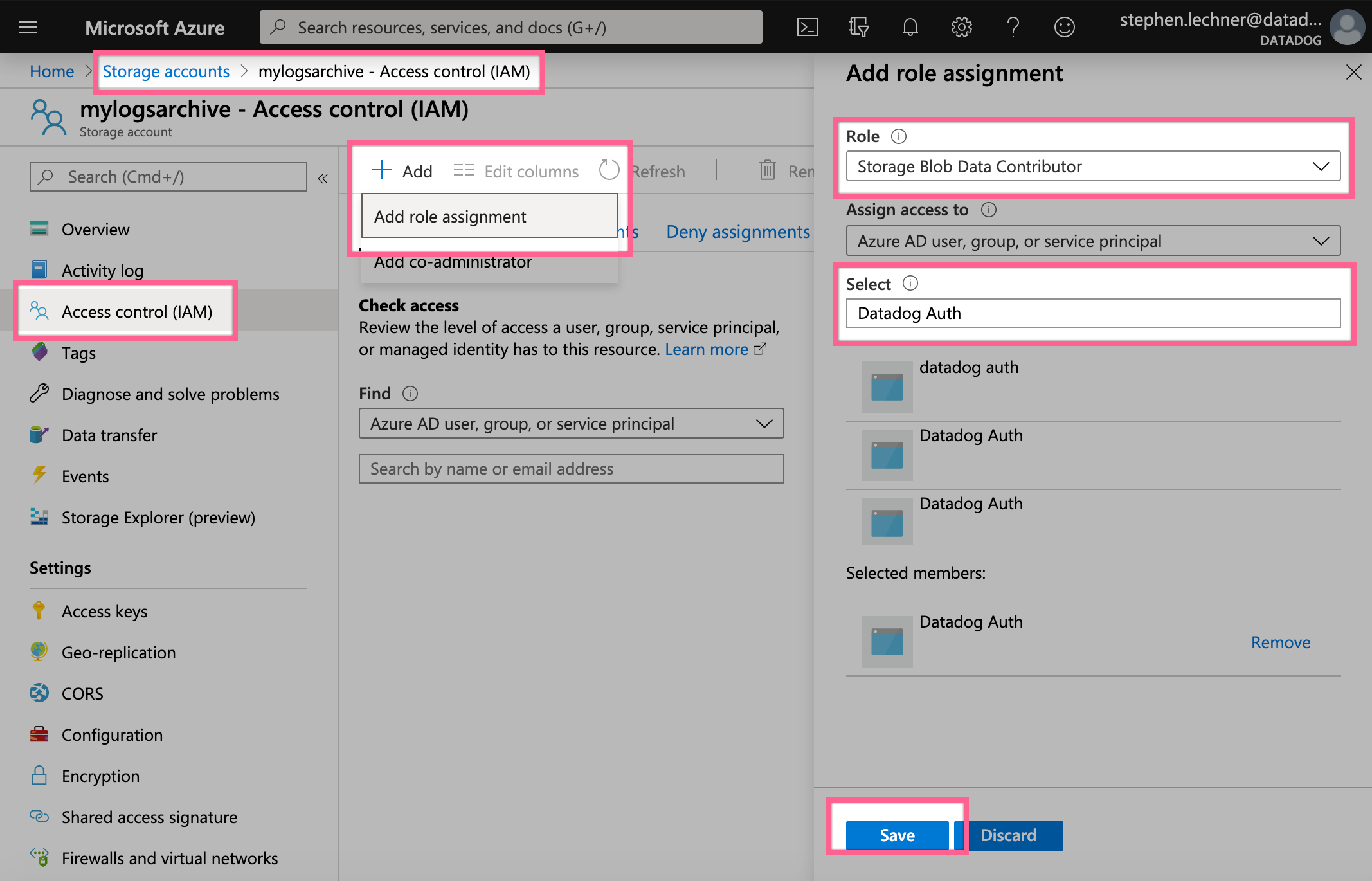

- Grant the Datadog app permission to write to and rehydrate from your storage account.

- Select your storage account from the Storage Accounts page, go to Access Control (IAM), and select Add -> Add Role Assignment.

- Input the Role called Storage Blob Data Contributor, select the Datadog app which you created to integrate with Azure, and save.

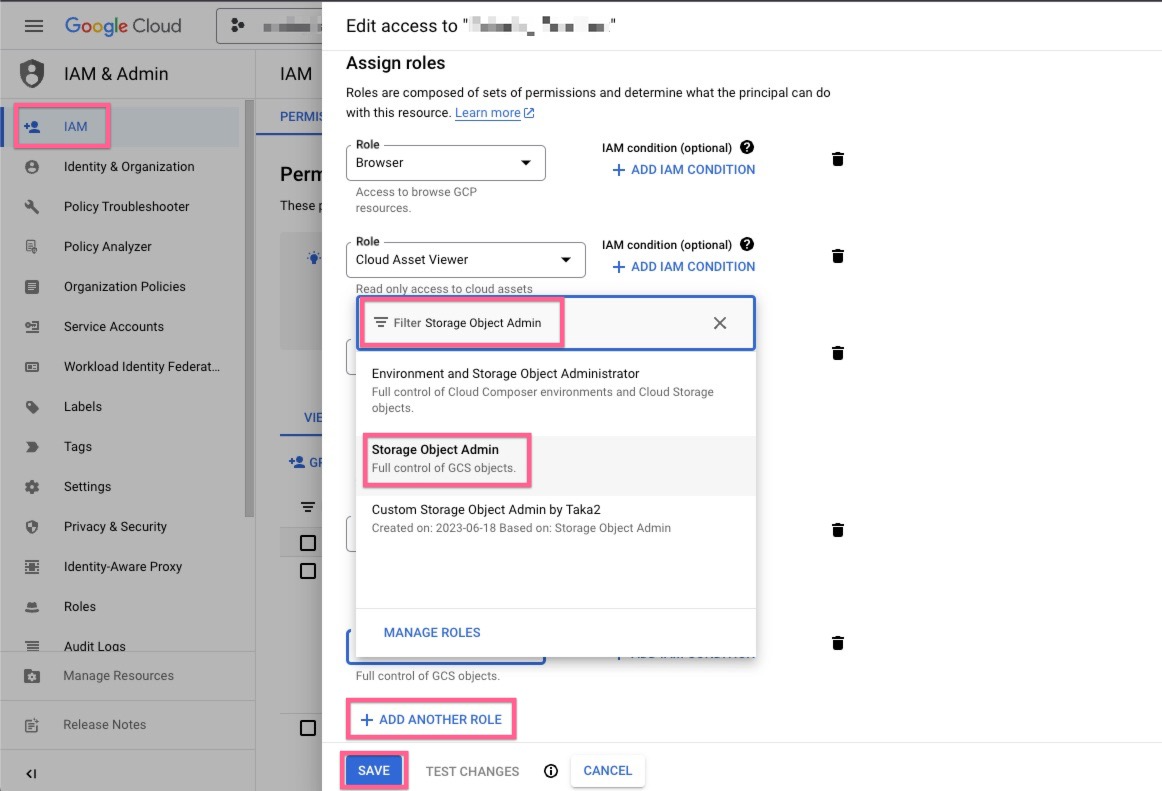

Grant your Datadog Google Cloud service account permissions to write your archives to your bucket.

Select your Datadog Google Cloud service account principal from the Google Cloud IAM Admin page and select Edit principal.

Click ADD ANOTHER ROLE, select the Storage Object Admin role, and save.

Route your logs to a bucket

Navigate to the Log Forwarding page and select Add a new archive on the Archives tab.

Notes:

- Only Datadog users with the `logs_write_archive` permission can complete this and the following step.

- Archiving logs to Azure Blob Storage requires an App Registration. See instructions on the Azure integration page, and set the “site” on the right-hand side of the documentation page to “US.” App Registration(s) created for archiving purposes only need the “Storage Blob Data Contributor” role. If your storage bucket is in a subscription being monitored through a Datadog Resource, a warning is displayed about the App Registration being redundant. You can ignore this warning.

- If your bucket restricts network access to specified IPs, add the webhook IPs from the IP ranges list to the allowlist.

- For the US1-FED site, you can configure Datadog to send logs to a destination outside the Datadog GovCloud environment. Datadog is not responsible for any logs that leave the Datadog GovCloud environment. Additionally, Datadog is not responsible for any obligations or requirements you might have concerning FedRAMP, DoD Impact Levels, ITAR, export compliance, data residency, or similar regulations applicable to these logs after they leave the GovCloud environment.

| Service | Steps |
|---|---|
| Amazon S3 | 1. Select the appropriate AWS account and role combination for your S3 bucket. 2. Input your bucket name. Optional: Input a prefix directory for all the content of your log archives. |
| Azure Storage | 1. Select the Azure Storage archive type, and the Azure tenant and client for the Datadog App that has the Storage Blob Data Contributor role on your storage account. 2. Input your storage account name and the container name for your archive. Optional: Input a prefix directory for all the content of your log archives. |
| Google Cloud Storage | 1. Select the Google Cloud Storage archive type, and the GCS Service Account that has permissions to write on your storage bucket. 2. Input your bucket name. Optional: Input a prefix directory for all the content of your log archives. |
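The same S3 settings can also be expressed as a request body for teams that manage archives programmatically. The sketch below only builds a dictionary and sends nothing. The field names (`destination`, `integration`, `include_tags`, `rehydration_max_scan_size_in_gb`) are assumptions based on the public Logs Archives API and should be verified against the API reference before use. The helper name and all example values are hypothetical.

```python
import json


def build_s3_archive_payload(name, query, bucket, account_id, role_name,
                             path="", include_tags=True, max_scan_gb=None):
    """Assemble a request body for an S3 archive.

    Field names are assumptions to check against the Logs Archives
    API reference; nothing here contacts Datadog.
    """
    attributes = {
        "name": name,
        "query": query,  # inclusion filter for the archive
        "destination": {
            "type": "s3",
            "bucket": bucket,
            "path": path,  # optional prefix directory
            "integration": {
                "account_id": account_id,
                "role_name": role_name,
            },
        },
        "include_tags": include_tags,
    }
    if max_scan_gb is not None:
        attributes["rehydration_max_scan_size_in_gb"] = max_scan_gb
    return {"data": {"type": "archives", "attributes": attributes}}


# All values below are placeholders.
payload = build_s3_archive_payload(
    name="prod-archive",
    query="env:prod",
    bucket="example-logs-a",
    account_id="123456789012",
    role_name="DatadogIntegrationRole",
    path="datadog/archives",
)
print(json.dumps(payload, indent=2))
```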

Advanced settings

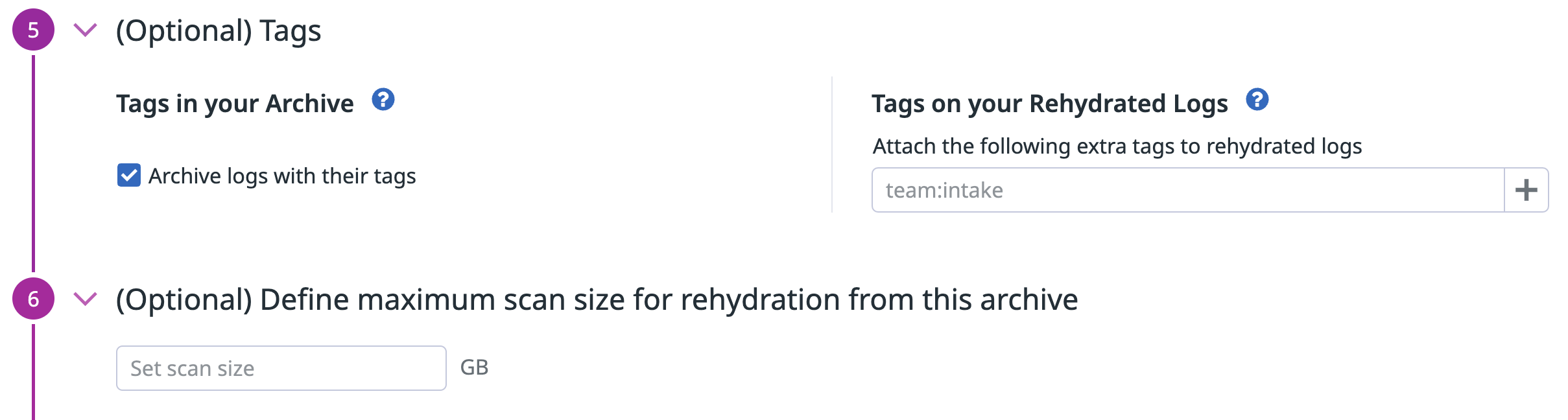

Datadog tags

Use this optional configuration step to:

- Include all log tags in your archives (activated by default on all new archives). Note: This increases the size of resulting archives.

- Add tags on rehydrated logs according to your Restriction Queries policy. See the `logs_read_data` permission.

Define maximum scan size

Use this optional configuration step to define the maximum volume of log data (in GB) that can be scanned for Rehydration on your Log Archives.

For Archives with a maximum scan size defined, all users need to estimate the scan size before they are allowed to start a Rehydration. If the estimated scan size is greater than what is permitted for that Archive, users must reduce the time range over which they are requesting the Rehydration. Reducing the time range will reduce the scan size and allow the user to start a Rehydration.
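The relationship between time range and scan size can be illustrated with a rough calculation. This is a sketch under a strong assumption, namely that log volume is uniform over the requested range, so the scan size shrinks in proportion to the range. Datadog computes the actual estimate, and `largest_allowed_range` is a hypothetical helper.

```python
from datetime import datetime


def largest_allowed_range(start, end, estimated_gb, max_scan_gb):
    """Return a (start, end) range whose estimated scan fits the limit.

    Assumes uniform log volume over time, which is a simplification;
    the real estimate comes from Datadog.
    """
    if estimated_gb <= max_scan_gb:
        return start, end
    fraction = max_scan_gb / estimated_gb
    return start, start + (end - start) * fraction


# A two-day request estimated at 200 GB against a 100 GB limit.
new_start, new_end = largest_allowed_range(
    datetime(2024, 1, 1), datetime(2024, 1, 3), 200, 100
)
print(new_start, "to", new_end)
```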

Firewall rules

Firewall rules are not supported.

Storage class

You can either select a storage class for your archive or set a lifecycle configuration on your S3 bucket to automatically transition your log archives to optimal storage classes.

Rehydration only supports the following storage classes:

- S3 Standard

- S3 Standard-IA

- S3 One Zone-IA

- S3 Glacier Instant Retrieval

- S3 Intelligent-Tiering, only if the optional asynchronous archive access tiers are both disabled.

If you wish to rehydrate from archives in another storage class, you must first move them to one of the supported storage classes above.
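The list above can be encoded as a simple lookup when auditing a bucket. The sketch below uses the storage class identifiers that the S3 API reports for objects (for example `STANDARD_IA` and `GLACIER_IR`). The function name and the `async_archive_tiers_enabled` flag are hypothetical, and how that flag is determined for a given bucket is left out.

```python
# S3 API identifiers for the storage classes listed above.
REHYDRATABLE_S3_CLASSES = {
    "STANDARD",
    "STANDARD_IA",
    "ONEZONE_IA",
    "GLACIER_IR",
    "INTELLIGENT_TIERING",
}


def can_rehydrate(storage_class, async_archive_tiers_enabled=False):
    """Return True if objects in this storage class can be rehydrated.

    Intelligent-Tiering qualifies only when the optional asynchronous
    archive access tiers are disabled.
    """
    if storage_class == "INTELLIGENT_TIERING":
        return not async_archive_tiers_enabled
    return storage_class in REHYDRATABLE_S3_CLASSES


for storage_class in ("STANDARD_IA", "GLACIER_IR", "DEEP_ARCHIVE"):
    print(storage_class, can_rehydrate(storage_class))
```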

For Azure Storage, archiving and Rehydration support only the following access tiers:

- Hot access tier

- Cool access tier

If you wish to rehydrate from archives in another access tier, you must first move them to one of the supported tiers above.

For Google Cloud Storage, archiving and Rehydration support the following storage classes:

- Standard

- Nearline

- Coldline

- Archive

Server-side encryption (SSE) for S3 archives

When creating or updating an S3 archive in Datadog, you can optionally configure Advanced Encryption. Three options are available under the Encryption Type dropdown:

- Default S3 Bucket-Level Encryption (Default): Datadog does not override your S3 bucket’s default encryption settings.

- Amazon S3 managed keys: Forces server-side encryption using Amazon S3 managed keys (SSE-S3), regardless of the S3 bucket’s default encryption.

- AWS Key Management Service: Forces server-side encryption using a customer-managed key (CMK) from AWS KMS, regardless of the S3 bucket’s default encryption. You will need to provide the CMK ARN.

When Default S3 Bucket-Level Encryption is selected, Datadog does not specify any encryption headers in the upload request. The default encryption from your S3 bucket applies.

To set or check your S3 bucket’s encryption configuration:

- Navigate to your S3 bucket.

- Click the Properties tab.

- In the Default Encryption section, configure or confirm the encryption type. If your encryption uses AWS KMS, ensure that you have a valid CMK and CMK policy attached to your CMK.

The Amazon S3 managed keys option ensures that all archive objects are uploaded with SSE-S3, using Amazon S3 managed keys. This overrides any default encryption setting on the S3 bucket.

The AWS Key Management Service option ensures that all archive objects are uploaded using a customer-managed key (CMK) from AWS KMS. This overrides any default encryption setting on the S3 bucket.

Ensure that you have completed the following steps to create a valid CMK and CMK policy. You will need to provide the CMK ARN to successfully configure this encryption type.

- Create your CMK.

- Attach a CMK policy to your CMK with the following content, replacing the AWS account number and Datadog IAM role name appropriately:

    {
      "Id": "key-consolepolicy-3",
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "Enable IAM User Permissions",
          "Effect": "Allow",
          "Principal": {
            "AWS": "arn:aws:iam::<MY_AWS_ACCOUNT_NUMBER>:root"
          },
          "Action": "kms:*",
          "Resource": "*"
        },
        {
          "Sid": "Allow use of the key",
          "Effect": "Allow",
          "Principal": {
            "AWS": "arn:aws:iam::<MY_AWS_ACCOUNT_NUMBER>:role/<MY_DATADOG_IAM_ROLE_NAME>"
          },
          "Action": [
            "kms:Encrypt",
            "kms:Decrypt",
            "kms:ReEncrypt*",
            "kms:GenerateDataKey*",
            "kms:DescribeKey"
          ],
          "Resource": "*"
        },
        {
          "Sid": "Allow attachment of persistent resources",
          "Effect": "Allow",
          "Principal": {
            "AWS": "arn:aws:iam::<MY_AWS_ACCOUNT_NUMBER>:role/<MY_DATADOG_IAM_ROLE_NAME>"
          },
          "Action": [
            "kms:CreateGrant",
            "kms:ListGrants",
            "kms:RevokeGrant"
          ],
          "Resource": "*",
          "Condition": {
            "Bool": {
              "kms:GrantIsForAWSResource": "true"
            }
          }
        }
      ]
    }

- After selecting AWS Key Management Service as your Encryption Type in Datadog, input your AWS KMS key ARN.
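Filling in the two placeholders of the CMK policy by hand is error-prone, so the substitution can be scripted. The following is a minimal sketch that reproduces the policy statements shown above. `build_cmk_policy` is a hypothetical helper, and the account number and role name in the example are placeholders.

```python
import json


def build_cmk_policy(account_number: str, role_name: str) -> dict:
    """Return the CMK policy with the account and role filled in."""
    root = f"arn:aws:iam::{account_number}:root"
    role = f"arn:aws:iam::{account_number}:role/{role_name}"
    return {
        "Id": "key-consolepolicy-3",
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "Enable IAM User Permissions",
                "Effect": "Allow",
                "Principal": {"AWS": root},
                "Action": "kms:*",
                "Resource": "*",
            },
            {
                "Sid": "Allow use of the key",
                "Effect": "Allow",
                "Principal": {"AWS": role},
                "Action": [
                    "kms:Encrypt",
                    "kms:Decrypt",
                    "kms:ReEncrypt*",
                    "kms:GenerateDataKey*",
                    "kms:DescribeKey",
                ],
                "Resource": "*",
            },
            {
                "Sid": "Allow attachment of persistent resources",
                "Effect": "Allow",
                "Principal": {"AWS": role},
                "Action": ["kms:CreateGrant", "kms:ListGrants", "kms:RevokeGrant"],
                "Resource": "*",
                "Condition": {"Bool": {"kms:GrantIsForAWSResource": "true"}},
            },
        ],
    }


# Placeholder account number and role name.
print(json.dumps(build_cmk_policy("123456789012", "DatadogIntegrationRole"), indent=2))
```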

Validation

Once your archive settings are successfully configured in your Datadog account, your processing pipelines begin to enrich all logs ingested into Datadog. These logs are subsequently forwarded to your archive.

However, after creating or updating your archive configurations, it can take several minutes before the next archive upload is attempted. The frequency at which archives are uploaded can vary. Check back on your storage bucket in 15 minutes to make sure the archives are successfully being uploaded from your Datadog account.

After that, if the archive is still in a pending state, check your inclusion filters to make sure the query is valid and matches log events in Live Tail. When Datadog fails to upload logs to an external archive, due to unintentional changes in settings or permissions, the corresponding Log Archive is highlighted in the configuration page.

Hover over the archive to view the error details and the actions to take to resolve the issue. An event is also generated in the Events Explorer. You can create a monitor for these events to detect and remediate failures quickly.

Multiple archives

If multiple archives are defined, each log enters the first archive whose filter it matches.

It is important to order your archives carefully. For example, if you create a first archive filtered to the env:prod tag and a second archive without any filter (the equivalent of *), all your production logs would go to one storage bucket or path, and the rest would go to the other.
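The first-match behavior can be modeled in a few lines. This is a simplified sketch and not Datadog's implementation: real archive filters are full log search queries, while the hypothetical `route` function below understands only a single tag or `*`.

```python
def matches(filter_tag, log_tags):
    """A filter of '*' matches every log; otherwise the tag must be present."""
    return filter_tag == "*" or filter_tag in log_tags


def route(log_tags, archives):
    """Return the name of the first archive whose filter matches.

    `archives` is an ordered list of (archive_name, filter_tag) pairs.
    """
    for name, filter_tag in archives:
        if matches(filter_tag, log_tags):
            return name
    return None


ordered = [("prod-archive", "env:prod"), ("catch-all", "*")]
print(route(["env:prod", "team:acme"], ordered))  # prod-archive
print(route(["env:staging"], ordered))            # catch-all

# With the order reversed, the unfiltered archive captures everything.
reversed_order = [("catch-all", "*"), ("prod-archive", "env:prod")]
print(route(["env:prod", "team:acme"], reversed_order))  # catch-all
```

In this model, placing the unfiltered archive first leaves the `env:prod` archive empty, which is why the order matters.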

Format of the archives

The log archives that Datadog forwards to your storage bucket are in compressed JSON format (.json.gz). Using the prefix you indicate (or / if there is none), the archives are stored in a directory structure that indicates on what date and at what time the archive files were generated, such as the following:

/my/bucket/prefix/dt=20180515/hour=14/archive_143201.1234.7dq1a9mnSya3bFotoErfxl.json.gz

/my/bucket/prefix/dt=<YYYYMMDD>/hour=<HH>/archive_<HHmmss.SSSS>.<DATADOG_ID>.json.gz

This directory structure simplifies the process of querying your historical log archives based on their date.
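As an illustration, the directory prefixes covering a time window can be computed from the pattern above and then passed to whatever tool lists the bucket. This is a minimal sketch with hypothetical helper names. It assumes the datetimes are expressed in the same time zone the archive paths use, which the text above does not specify. Because directories reflect when files were generated, events near an hour boundary may sit in a neighboring directory.

```python
from datetime import datetime, timedelta


def archive_prefix(prefix: str, ts: datetime) -> str:
    """Build the dt=<YYYYMMDD>/hour=<HH> directory for one timestamp."""
    cleaned = prefix.strip("/")
    base = f"/{cleaned}" if cleaned else ""
    return f"{base}/dt={ts:%Y%m%d}/hour={ts:%H}/"


def hourly_prefixes(prefix: str, start: datetime, end: datetime) -> list[str]:
    """List every hourly directory between start and end, inclusive."""
    current = start.replace(minute=0, second=0, microsecond=0)
    prefixes = []
    while current <= end:
        prefixes.append(archive_prefix(prefix, current))
        current += timedelta(hours=1)
    return prefixes


for p in hourly_prefixes(
    "/my/bucket/prefix",
    datetime(2018, 5, 15, 23, 30),
    datetime(2018, 5, 16, 0, 10),
):
    print(p)
```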

Within the zipped JSON file, each event’s content is formatted as follows:

    {
      "_id": "123456789abcdefg",
      "date": "2018-05-15T14:31:16.003Z",
      "host": "i-12345abced6789efg",
      "source": "source_name",
      "service": "service_name",
      "status": "status_level",
      "message": "2018-05-15T14:31:16.003Z INFO rid='abc-123' status=403 method=PUT",
      "attributes": { "rid": "abc-123", "http": { "status_code": 403, "method": "PUT" } },
      "tags": [ "env:prod", "team:acme" ]
    }
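A downloaded archive file can be inspected with the Python standard library. The sketch below assumes each event is stored as one JSON object per line inside the gzip file, which the format description above does not state explicitly. The function names are hypothetical.

```python
import gzip
import json


def read_archive(path):
    """Yield events from a .json.gz archive file.

    Assumes one JSON object per line; blank lines are skipped.
    """
    with gzip.open(path, "rt", encoding="utf-8") as handle:
        for line in handle:
            line = line.strip()
            if line:
                yield json.loads(line)


def http_status_code(event):
    """Return attributes.http.status_code, or None if it is absent."""
    return event.get("attributes", {}).get("http", {}).get("status_code")
```

For example, `[e for e in read_archive("archive.json.gz") if http_status_code(e) == 403]` would collect the events with a 403 status code, where `archive.json.gz` stands for a locally downloaded archive file.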


*Logging without Limits is a trademark of Datadog, Inc.