Google Cloud Platform

Overview

Use this guide to get started monitoring your Google Cloud environment. This approach simplifies the setup for Google Cloud environments with multiple projects, allowing you to maximize your monitoring coverage.

See the full list of Google Cloud integrations


Datadog's Google Cloud integration collects all Google Cloud metrics. Datadog continually updates the docs to show every dependent integration, but the list below sometimes lags behind the latest Google Cloud services and metrics.

If you don’t see an integration for a specific Google Cloud service, reach out to Datadog Support.

| Integration | Description |
|---|---|
| App Engine | PaaS (platform as a service) to build scalable applications |
| BigQuery | Enterprise data warehouse |
| Bigtable | NoSQL Big Data database service |
| Cloud SQL | Managed MySQL, PostgreSQL, and SQL Server database service |
| Cloud APIs | Programmatic interfaces for all Google Cloud Platform services |
| Cloud Armor | Network security service to help protect against denial-of-service and web attacks |
| Cloud Composer | A fully managed workflow orchestration service |
| Cloud Dataproc | A cloud service for running Apache Spark and Apache Hadoop clusters |
| Cloud Dataflow | A fully managed service for transforming and enriching data in stream and batch modes |
| Cloud Filestore | High-performance, fully managed file storage |
| Cloud Firestore | A flexible, scalable database for mobile, web, and server development |
| Cloud Interconnect | Hybrid connectivity |
| Cloud IoT | Secure device connection and management |
| Cloud Load Balancing | Distribute load-balanced compute resources |
| Cloud Logging | Real-time log management and analysis |
| Cloud Memorystore for Redis | A fully managed in-memory data store service |
| Cloud Router | Exchange routes between your VPC and on-premises networks by using BGP |
| Cloud Run | Managed compute platform that runs stateless containers over HTTP |
| Cloud Security Command Center | Security Command Center is a threat reporting service |
| Cloud Tasks | Distributed task queues |
| Cloud TPU | Train and run machine learning models |
| Compute Engine | High performance virtual machines |
| Container Engine | Kubernetes, managed by Google |
| Datastore | NoSQL database |
| Firebase | Mobile platform for application development |
| Functions | Serverless platform for building event-based microservices |
| Kubernetes Engine | Cluster manager and orchestration system |
| Machine Learning | Machine learning services |
| Private Service Connect | Access managed services with private VPC connections |
| Pub/Sub | Real-time messaging service |
| Spanner | Horizontally scalable, globally consistent, relational database service |
| Storage | Unified object storage |
| Vertex AI | Build, train, and deploy custom machine learning (ML) models |
| VPN | Managed network functionality |

Setup

Set up Datadog’s Google Cloud integration to collect metrics and logs from your Google Cloud services.

Prerequisites

1. If your organization restricts identities by domain, you must add Datadog’s customer identity as an allowed value in your policy. Datadog’s customer identity depends on your Datadog site: C0147pk0i or C03lf3ewa.

2. Enable the following APIs for every project you want to monitor, including the project where the service account has been created.

   Service account impersonation and automatic project discovery rely on certain roles and APIs being enabled in the projects you monitor. Complete this step to avoid integration issues.

   - Cloud Monitoring API: Allows Datadog to query your Google Cloud metric data.
   - Compute Engine API: Allows Datadog to discover compute instance data.
   - Cloud Asset API: Allows Datadog to request Google Cloud resources and link relevant labels to metrics as tags.
   - Cloud Resource Manager API: Allows Datadog to append metrics with the correct resources and tags.
   - IAM API: Allows Datadog to authenticate with Google Cloud.
   - Cloud Billing API: Allows developers to manage billing for their Google Cloud Platform projects programmatically. See the Cloud Cost Management (CCM) documentation for more information.

3. Ensure that any projects being monitored are not configured as scoping projects that pull in metrics from multiple other projects.
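The API step can also be scripted. The following Python sketch only builds `gcloud services enable` command lines (it does not run them) so you can review them first. The `*.googleapis.com` service names are the standard names for the APIs listed above, and the project IDs in the usage example are hypothetical.

```python
from __future__ import annotations

import shlex

# Standard service names for the APIs listed above (confirm them in the
# APIs & Services page of your Google Cloud console).
REQUIRED_SERVICES = [
    "monitoring.googleapis.com",            # Cloud Monitoring API
    "compute.googleapis.com",               # Compute Engine API
    "cloudasset.googleapis.com",            # Cloud Asset API
    "cloudresourcemanager.googleapis.com",  # Cloud Resource Manager API
    "iam.googleapis.com",                   # IAM API
    "cloudbilling.googleapis.com",          # Cloud Billing API
]


def enable_apis_command(project_id: str) -> list[str]:
    """Build the argv for one `gcloud services enable` call scoped to a project."""
    return ["gcloud", "services", "enable", *REQUIRED_SERVICES, f"--project={project_id}"]


def enable_apis_commands(project_ids: list[str]) -> list[list[str]]:
    """Build one command per project, including the service account's own project."""
    return [enable_apis_command(pid) for pid in project_ids]


if __name__ == "__main__":
    # Hypothetical project IDs; the commands are printed, not executed.
    for argv in enable_apis_commands(["my-sa-project", "my-app-project"]):
        print(shlex.join(argv))
```

Printing the commands instead of executing them keeps the sketch side-effect free; run the printed lines yourself once you have checked the project list.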

Metric collection

Installation

Organization-level (or folder-level) monitoring is recommended for comprehensive coverage of all projects, including any future projects that may be created in an org or folder.

Note: Your Google Cloud Identity user account must have the Admin role assigned to it at the desired scope to complete the setup in Google Cloud (for example, Organization Admin).

1. Create a Google Cloud service account in the default project


- Open your Google Cloud console.

- Navigate to IAM & Admin > Service Accounts.

- Click Create service account at the top.

- Give the service account a unique name.

- Click Done to complete creating the service account.

2. Add the service account at the organization or folder level


- In the Google Cloud console, go to the IAM page.

- Select a folder or organization.

- To grant a role to a principal that does not already have other roles on the resource, click Grant Access.

- Enter the service account’s email address.

- Assign the following roles:

  - Compute Viewer provides read-only access to get and list Compute Engine resources.
  - Monitoring Viewer provides read-only access to the monitoring data available in your Google Cloud environment.
  - Cloud Asset Viewer provides read-only access to cloud assets metadata.
  - Browser provides read-only access to browse the hierarchy of a project.
  - Service Usage Consumer (optional, for multi-project environments) provides per-project cost and API quota attribution after this feature has been enabled by Datadog Support.

- Click Save.

Note: The Browser role is only required in the default project of the service account. Other projects require only the other listed roles.

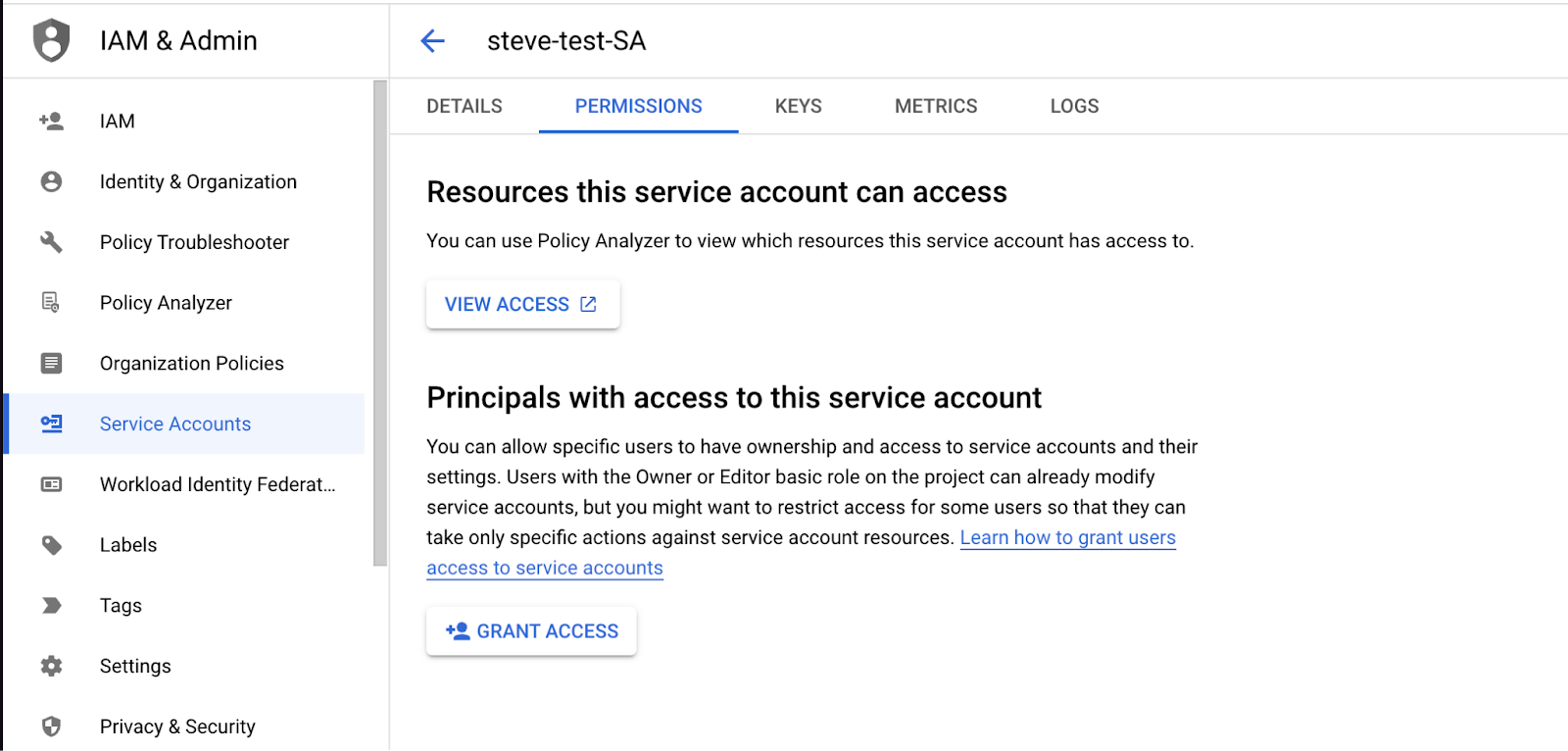
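If you prefer the CLI to the console, the role grants above map to `gcloud organizations add-iam-policy-binding` or `gcloud resource-manager folders add-iam-policy-binding`. This Python sketch builds one command per role. The role IDs are the predefined IDs for the roles listed above; the organization ID and email in the usage example are hypothetical.

```python
from __future__ import annotations

import shlex

# Predefined role IDs for the roles listed above.
REQUIRED_ROLES = [
    "roles/compute.viewer",     # Compute Viewer
    "roles/monitoring.viewer",  # Monitoring Viewer
    "roles/cloudasset.viewer",  # Cloud Asset Viewer
    "roles/browser",            # Browser
]
QUOTA_ROLE = "roles/serviceusage.serviceUsageConsumer"  # Service Usage Consumer (optional)


def role_binding_commands(scope: str, scope_id: str, sa_email: str,
                          include_quota_role: bool = False) -> list[list[str]]:
    """Build one add-iam-policy-binding command per role at the organization or folder level."""
    if scope == "organization":
        base = ["gcloud", "organizations", "add-iam-policy-binding", scope_id]
    elif scope == "folder":
        base = ["gcloud", "resource-manager", "folders", "add-iam-policy-binding", scope_id]
    else:
        raise ValueError("scope must be 'organization' or 'folder'")
    roles = REQUIRED_ROLES + ([QUOTA_ROLE] if include_quota_role else [])
    return [base + [f"--member=serviceAccount:{sa_email}", f"--role={role}"] for role in roles]


if __name__ == "__main__":
    # Hypothetical organization ID and service account email.
    for argv in role_binding_commands("organization", "123456789012",
                                      "datadog-sa@my-sa-project.iam.gserviceaccount.com"):
        print(shlex.join(argv))
```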

3. Add the Datadog principal to your service account


Note: If you previously configured access using a shared Datadog principal, you can revoke the permission for that principal after you complete these steps.

- In Datadog, navigate to Integrations > Google Cloud Platform.

- Click Add Google Cloud Account. If you have no configured projects, you are automatically redirected to this page.

- Copy your Datadog principal and keep it for the next section.

Note: Keep this window open for Section 4.

- In the Google Cloud console, under the Service Accounts menu, find the service account you created in Section 1.

- Go to the Permissions tab and click Grant Access.

- Paste your Datadog principal into the New principals text box.

- Assign the role of Service Account Token Creator.

- Click Save.
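A CLI equivalent of the Token Creator grant, as a Python sketch that builds the `gcloud iam service-accounts add-iam-policy-binding` command. It assumes the Datadog principal you copied is a service account email, so it uses the `serviceAccount:` member prefix; adjust the prefix if your principal has a different form.

```python
from __future__ import annotations

import shlex


def token_creator_command(sa_email: str, datadog_principal: str) -> list[str]:
    """Grant the Datadog principal Service Account Token Creator on your service account.

    Assumption: the Datadog principal is a service account email, so it is
    passed with the `serviceAccount:` member prefix.
    """
    return [
        "gcloud", "iam", "service-accounts", "add-iam-policy-binding", sa_email,
        f"--member=serviceAccount:{datadog_principal}",
        "--role=roles/iam.serviceAccountTokenCreator",
    ]


if __name__ == "__main__":
    # Both values are hypothetical; use the principal copied from Datadog.
    print(shlex.join(token_creator_command(
        "datadog-sa@my-sa-project.iam.gserviceaccount.com",
        "<DATADOG_PRINCIPAL>",
    )))
```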

4. Complete the integration setup in Datadog


- In your Google Cloud console, navigate to the Service Account > Details tab. On this page, find the email associated with this Google service account. It has the format `<SA_NAME>@<PROJECT_ID>.iam.gserviceaccount.com`.

- Copy this email.

- Return to the integration configuration tile in Datadog (where you copied your Datadog principal in the previous section).

- Paste the email you copied into the Add Service Account Email field.

- Click Verify and Save Account.

Metrics appear in Datadog approximately 15 minutes after setup.
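Before pasting the email into Datadog, you can sanity-check its shape. The following Python sketch assumes simplified ID rules (6 to 30 lowercase letters, digits, or hyphens, starting with a letter and not ending with a hyphen) and splits an address of the form `<SA_NAME>@<PROJECT_ID>.iam.gserviceaccount.com` into its parts. It intentionally rejects Google-managed accounts, such as the Compute Engine default service account, which use a different domain.

```python
from __future__ import annotations

import re

# Simplified ID rule: 6-30 chars, lowercase letters/digits/hyphens,
# starts with a letter, does not end with a hyphen.
_ID = r"[a-z][a-z0-9-]{4,28}[a-z0-9]"
_SA_EMAIL = re.compile(rf"(?P<name>{_ID})@(?P<project>{_ID})\.iam\.gserviceaccount\.com")


def parse_service_account_email(email: str) -> tuple[str, str]:
    """Split a user-managed service account email into (SA_NAME, PROJECT_ID)."""
    match = _SA_EMAIL.fullmatch(email.strip())
    if match is None:
        raise ValueError(f"not a user-managed service account email: {email!r}")
    return match.group("name"), match.group("project")
```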

Best practices for monitoring multiple projects

Enable per-project cost and API quota attribution

By default, Google Cloud attributes the cost of monitoring API calls, as well as API quota usage, to the project containing the service account for this integration. As a best practice for Google Cloud environments with multiple projects, enable per-project cost attribution of monitoring API calls and API quota usage. With this enabled, costs and quota usage are attributed to the project being queried, rather than the project containing the service account. This provides visibility into the monitoring costs incurred by each project, and also helps to prevent reaching API rate limits.

To enable this feature:

- Ensure that the Datadog service account has the Service Usage Consumer role at the desired scope (folder or organization).

- Click the Enable Per Project Quota toggle in the Projects tab of the Google Cloud integration page.

You can use service account impersonation and automatic project discovery to integrate Datadog with Google Cloud.

This method enables you to monitor all projects visible to a service account by assigning IAM roles in the relevant projects. You can assign these roles to projects individually, or you can configure Datadog to monitor groups of projects by assigning these roles at the organization or folder level. Assigning roles in this way allows Datadog to automatically discover and monitor all projects in the given scope, including any new projects that may be added to the group in the future.

1. Create a Google Cloud service account


- Open your Google Cloud console.

- Navigate to IAM & Admin > Service Accounts.

- Click Create service account at the top.

- Give the service account a unique name, then click Create and continue.

- Add the following roles to the service account:

  - Monitoring Viewer provides read-only access to the monitoring data available in your Google Cloud environment.
  - Compute Viewer provides read-only access to get and list Compute Engine resources.
  - Cloud Asset Viewer provides read-only access to cloud assets metadata.
  - Browser provides read-only access to discover accessible projects.

- Click Continue, then Done to complete creating the service account.

2. Add the Datadog principal to your service account


- In Datadog, navigate to Integrations > Google Cloud Platform.

- Click Add GCP Account. If you have no configured projects, you are automatically redirected to this page.

- If you have not generated a Datadog principal for your org, click the Generate Principal button.

- Copy your Datadog principal and keep it for the next section.

Note: Keep this window open for the next section.

- In the Google Cloud console, under the Service Accounts menu, find the service account you created in the first section.

- Go to the Permissions tab and click Grant Access.

- Paste your Datadog principal into the New principals text box.

- Assign the role of Service Account Token Creator and click SAVE.

Note: If you previously configured access using a shared Datadog principal, you can revoke the permission for that principal after you complete these steps.

3. Complete the integration setup in Datadog


- In your Google Cloud console, navigate to the Service Account > Details tab. There, you can find the email associated with this Google service account. It resembles `<sa-name>@<project-id>.iam.gserviceaccount.com`.

- Copy this email.

- Return to the integration configuration tile in Datadog (where you copied your Datadog principal in the previous section).

- In the box under Add Service Account Email, paste the email you previously copied.

- Click Verify and Save Account.

In approximately fifteen minutes, metrics appear in Datadog.

Validation

To view your metrics, use the left menu to navigate to Metrics > Summary and search for `gcp`.

Configuration

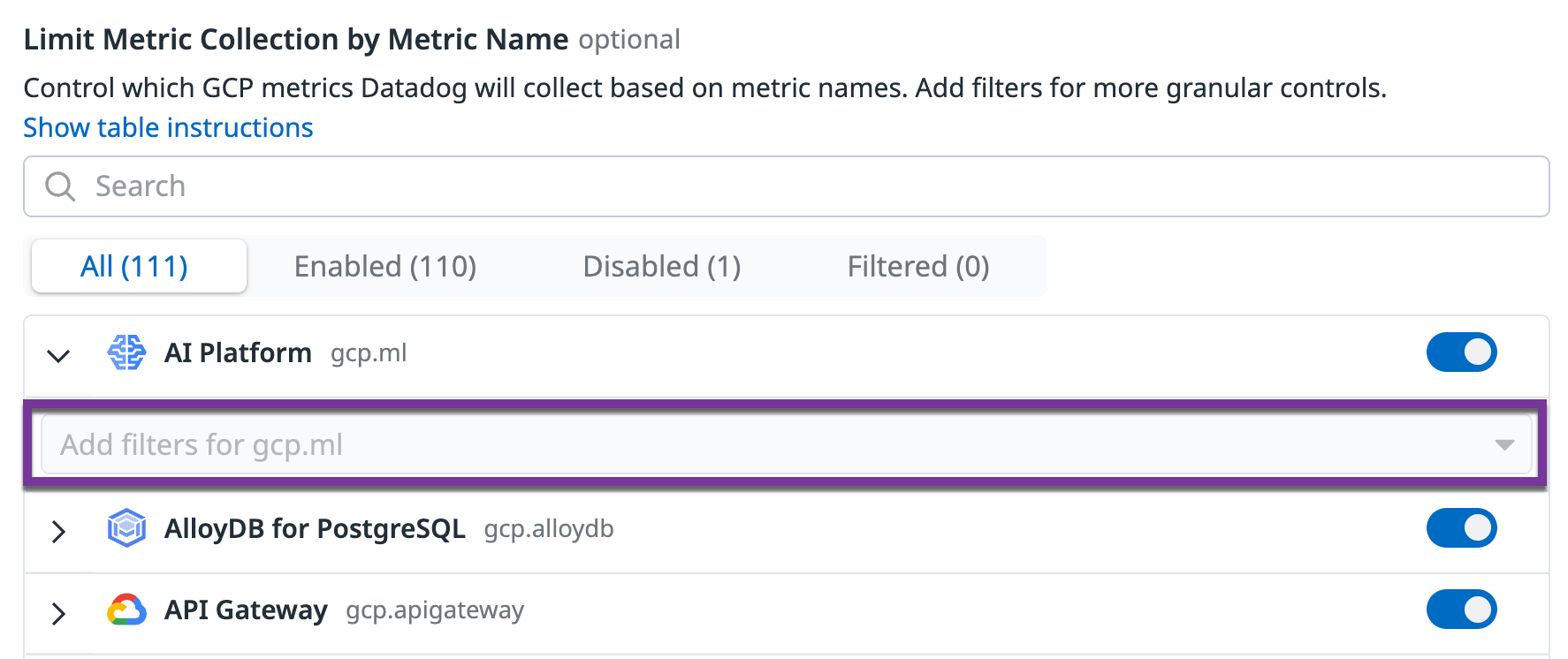

Limit metric collection by metric namespace, and by granular metric filters


Optionally, you can choose which Google Cloud services you monitor with Datadog, and further define which specific metrics you want to collect from each individual service. Configuring metric collection lets you optimize your Google Cloud Monitoring API costs, while retaining visibility into your critical services.

For granular control of metric collection from a service, define inclusion and exclusion filters for that service. A metric is collected only if it matches at least one inclusion filter, and does not match any exclusion filters.

Under the Metric Collection tab in Datadog’s Google Cloud integration page, unselect the metric namespaces to exclude. To apply granular filtering for enabled services, click the service in question and apply your filters in the Add filters for `gcp.<service>` field.

Example filters:

- `subscription.*` `topic.*`: Limit collection to metrics matching either `gcp.<service>.subscription.*` or `gcp.<service>.topic.*`.
- `!*_cost` `!*_count`: Limit collection to metrics matching neither `gcp.<service>.*_cost` nor `gcp.<service>.*_count`.
- `snapshot.*` `!*_by_region`: Limit collection to metrics matching `gcp.<service>.snapshot.*` but not matching `gcp.<service>.*_by_region`.
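The filter semantics described above can be modeled in a few lines of Python. This is an illustrative sketch, not Datadog's implementation: it uses `fnmatch` glob matching against the part of the metric name after `gcp.<service>.`, and it assumes that when only exclusion filters are set, every other metric is included, which is how the second example reads. The metric names in the test are examples only.

```python
from __future__ import annotations

from fnmatch import fnmatchcase


def is_collected(metric: str, service: str, filters: list[str]) -> bool:
    """Return True if `metric` passes the filters configured for `gcp.<service>`.

    Filters are matched against the part of the name after `gcp.<service>.`.
    A filter starting with `!` is an exclusion; any other filter is an inclusion.
    """
    prefix = f"gcp.{service}."
    if not metric.startswith(prefix):
        return False
    name = metric[len(prefix):]
    includes = [f for f in filters if not f.startswith("!")]
    excludes = [f[1:] for f in filters if f.startswith("!")]
    # Assumption: with no inclusion filters, every metric counts as included.
    if includes and not any(fnmatchcase(name, pattern) for pattern in includes):
        return False
    # A single exclusion match drops the metric.
    return not any(fnmatchcase(name, pattern) for pattern in excludes)
```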

Limit metric collection by tag


By default, you’ll see all your Google Compute Engine (GCE) instances in Datadog’s infrastructure overview. Datadog automatically tags them with GCE host tags and any GCE labels you may have added.

Optionally, you can use tags to limit the instances that are pulled into Datadog. Under a project’s Metric Collection tab, enter the tags in the Limit Metric Collection Filters textbox. Only hosts that match one of the defined tags are imported into Datadog. You can use wildcards (? for single character, * for multi-character) to match many hosts, or ! to exclude certain hosts. This example includes all c1* sized instances, but excludes staging hosts:

```
datadog:monitored,env:production,!env:staging,instance-type:c1.*
```

See Google’s Organize resources using labels page for more details.
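The following Python sketch models the tag filter above. It assumes a host is imported when at least one of its tags matches a non-negated term and none of its tags matches a `!` term, and that a filter with only `!` terms imports every other host. `fnmatch` provides the `?` and `*` wildcards; note it also treats `[...]` as a character class, which Datadog's filter may not.

```python
from __future__ import annotations

from fnmatch import fnmatchcase


def host_is_imported(host_tags: set[str], filter_string: str) -> bool:
    """Apply a comma-separated Limit Metric Collection filter to one host's tags."""
    terms = [term.strip() for term in filter_string.split(",") if term.strip()]
    includes = [term for term in terms if not term.startswith("!")]
    excludes = [term[1:] for term in terms if term.startswith("!")]

    def has_tag_matching(pattern: str) -> bool:
        return any(fnmatchcase(tag, pattern) for tag in host_tags)

    # Any negated match excludes the host outright.
    if any(has_tag_matching(pattern) for pattern in excludes):
        return False
    # Otherwise the host needs at least one positive match (or no positive terms at all).
    return not includes or any(has_tag_matching(pattern) for pattern in includes)
```

With the example filter, a host tagged `instance-type:c1.xlarge` is imported, while a host tagged `datadog:monitored` and `env:staging` is not, because the exclusion wins.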

Leveraging the Datadog Agent

Use the Datadog Agent to collect the most granular, low-latency metrics from your infrastructure. Install the Agent on any host, including GKE, to get deeper insights from the traces and logs it can collect. For more information, see Why should I install the Datadog Agent on my cloud instances?

Log collection

See the Google Cloud Log Forwarding Setup page for log forwarding setup options and instructions.

Expanded BigQuery monitoring

Join the Preview!

Expanded BigQuery monitoring is in Preview. Use this form to sign up to start gaining insights into your query performance.

Expanded BigQuery monitoring provides granular visibility into your BigQuery environments.

BigQuery jobs performance monitoring

To monitor the performance of your BigQuery jobs, grant the BigQuery Resource Viewer role to the Datadog service account for each Google Cloud project.

Notes:

- You need to have verified your Google Cloud service account in Datadog, as outlined in the setup section.

- You do not need to set up Dataflow to collect logs for expanded BigQuery monitoring.

- In the Google Cloud console, go to the IAM page.

- Click Grant access.

- Enter the email of your service account in New principals.

- Assign the BigQuery Resource Viewer role.

- Click SAVE.

- In Datadog’s Google Cloud integration page, click into the BigQuery tab.

- Click the Enable Query Performance toggle.
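The BigQuery Resource Viewer grant can also be scripted for each project. This Python sketch builds `gcloud projects add-iam-policy-binding` commands using the predefined role ID `roles/bigquery.resourceViewer`; the project IDs and service account email in the usage example are hypothetical.

```python
from __future__ import annotations

import shlex

BIGQUERY_RESOURCE_VIEWER = "roles/bigquery.resourceViewer"


def bigquery_resource_viewer_commands(project_ids: list[str], sa_email: str) -> list[list[str]]:
    """Build one project-level role binding per monitored project."""
    return [
        ["gcloud", "projects", "add-iam-policy-binding", project_id,
         f"--member=serviceAccount:{sa_email}", f"--role={BIGQUERY_RESOURCE_VIEWER}"]
        for project_id in project_ids
    ]


if __name__ == "__main__":
    # Hypothetical project IDs and service account email.
    for argv in bigquery_resource_viewer_commands(
        ["analytics-prod", "analytics-dev"],
        "datadog-sa@my-sa-project.iam.gserviceaccount.com",
    ):
        print(shlex.join(argv))
```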

BigQuery data quality monitoring

BigQuery data quality monitoring provides quality metrics from your BigQuery tables (such as freshness and updates to row count and size). Explore the data from your tables in depth on the Data Quality Monitoring page.

To collect quality metrics, grant the BigQuery Metadata Viewer role to the Datadog Service Account for each BigQuery table you are using.

Note: BigQuery Metadata Viewer can be applied at a BigQuery table, dataset, project, or organization level.

- For Data Quality Monitoring of all tables within a dataset, grant access at the dataset level.

- For Data Quality Monitoring of all datasets within a project, grant access at the project level.

- Navigate to BigQuery.

- In the Explorer, search for the desired BigQuery resource.

- Click the three-dot menu next to the resource, then click Share -> Manage Permissions.

- Click ADD PRINCIPAL.

- In the new principals box, enter the Datadog service account set up for the Google Cloud integration.

- Assign the BigQuery Metadata Viewer role.

- Click SAVE.

- In Datadog’s Google Cloud integration page, click into the BigQuery tab.

- Click the Enable Data Quality toggle.

BigQuery jobs log retention

Datadog recommends setting up a new logs index called `data-observability-queries` and retaining your BigQuery job logs in it for 15 days. Use the following index filter to pull in the logs:

```
service:data-observability @platform:*
```

See the Log Management pricing page for cost estimation.

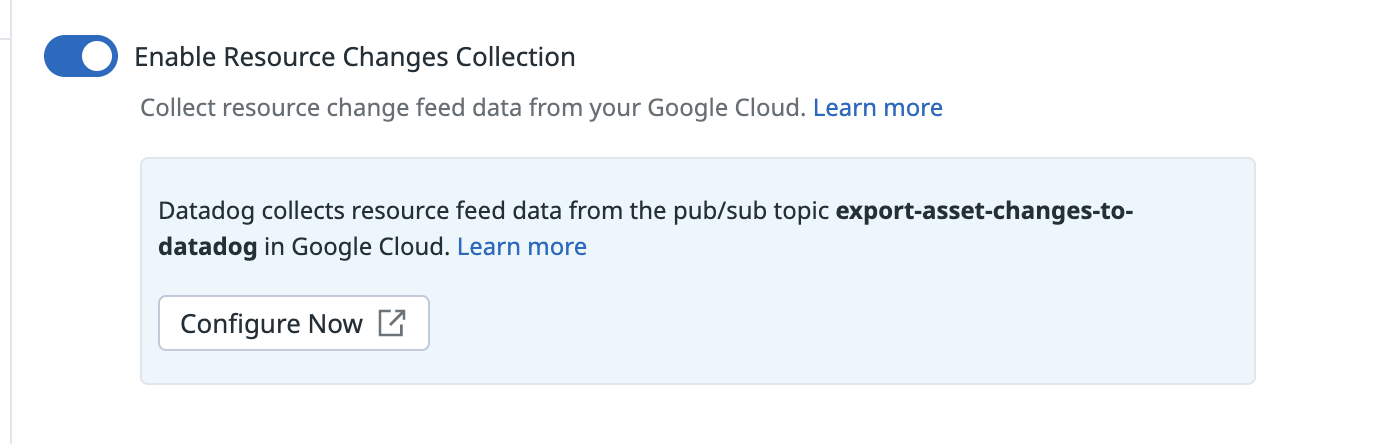

Resource changes collection

Select Enable Resource Collection in the Resource Collection tab of the Google Cloud integration page. This lets you receive resource events in Datadog when Google’s Cloud Asset Inventory detects changes in your cloud resources.

Then, follow the steps below to forward change events from a Pub/Sub topic to the Datadog Event Explorer.

Google Cloud CLI


Create a Cloud Pub/Sub topic and subscription

Create a topic

- In the Google Cloud Pub/Sub topics page, click CREATE TOPIC.

- Give the topic a descriptive name.

- Uncheck the option to add a default subscription.

- Click CREATE.

Create a subscription

- In the Google Cloud Pub/Sub subscriptions page, click CREATE SUBSCRIPTION.

- Enter `export-asset-changes-to-datadog` for the subscription name.

- Select the Cloud Pub/Sub topic previously created.

- Select Pull as the delivery type.

- Click CREATE.

Grant access

To read from this Pub/Sub subscription, the Google Cloud service account used by the integration needs the `pubsub.subscriptions.consume` permission on the subscription. The predefined Pub/Sub Subscriber role grants this permission with minimal additional access. Follow the steps below to grant this role:

- In the Google Cloud Pub/Sub subscriptions page, click the `export-asset-changes-to-datadog` subscription.

- In the info panel on the right of the page, click the Permissions tab. If you don’t see the info panel, click SHOW INFO PANEL.

- Click ADD PRINCIPAL.

- Enter the service account email used by the Datadog Google Cloud integration. You can find your service accounts listed on the left of the Configuration tab in the Google Cloud integration page in Datadog.

- Assign the Pub/Sub Subscriber role.

- Click SAVE.
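The console steps for the topic, subscription, and subscriber role can also be expressed as gcloud commands. This Python sketch builds them without running them; the topic name, project ID, and service account email are values you supply, and the ones in the test are hypothetical.

```python
from __future__ import annotations

import shlex

SUBSCRIPTION = "export-asset-changes-to-datadog"


def pubsub_setup_commands(project_id: str, topic_name: str, sa_email: str) -> list[list[str]]:
    """Build the commands for the topic, pull subscription, and subscriber binding."""
    project_flag = f"--project={project_id}"
    return [
        # Create the topic. gcloud creates only the topic, with no default subscription.
        ["gcloud", "pubsub", "topics", "create", topic_name, project_flag],
        # Create the subscription; pull delivery is used when no push endpoint is set.
        ["gcloud", "pubsub", "subscriptions", "create", SUBSCRIPTION,
         f"--topic={topic_name}", project_flag],
        # Let the integration's service account consume from the subscription.
        ["gcloud", "pubsub", "subscriptions", "add-iam-policy-binding", SUBSCRIPTION,
         f"--member=serviceAccount:{sa_email}", "--role=roles/pubsub.subscriber", project_flag],
    ]


if __name__ == "__main__":
    for argv in pubsub_setup_commands("my-sa-project", "asset-changes",
                                      "datadog-sa@my-sa-project.iam.gserviceaccount.com"):
        print(shlex.join(argv))
```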

Create an asset feed

Run one of the commands below in Cloud Shell or the gcloud CLI to create a Cloud Asset Inventory feed at the project, folder, or organization level that sends change events to the Pub/Sub topic created above.

For a project-level feed:

```shell
gcloud asset feeds create <FEED_NAME> \
  --project=<PROJECT_ID> \
  --pubsub-topic=projects/<PROJECT_ID>/topics/<TOPIC_NAME> \
  --asset-names=<ASSET_NAMES> \
  --asset-types=<ASSET_TYPES> \
  --content-type=<CONTENT_TYPE>
```

Update the placeholder values as indicated:

- `<FEED_NAME>`: A descriptive name for the Cloud Asset Inventory feed.
- `<PROJECT_ID>`: Your Google Cloud project ID.
- `<TOPIC_NAME>`: The name of the Pub/Sub topic linked with the `export-asset-changes-to-datadog` subscription.
- `<ASSET_NAMES>`: Comma-separated list of resource full names to receive change events from. Optional if specifying `asset-types`.
- `<ASSET_TYPES>`: Comma-separated list of asset types to receive change events from. Optional if specifying `asset-names`.
- `<CONTENT_TYPE>`: Optional asset content type to receive change events from.

For a folder-level feed:

```shell
gcloud asset feeds create <FEED_NAME> \
  --folder=<FOLDER_ID> \
  --pubsub-topic=projects/<PROJECT_ID>/topics/<TOPIC_NAME> \
  --asset-names=<ASSET_NAMES> \
  --asset-types=<ASSET_TYPES> \
  --content-type=<CONTENT_TYPE>
```

Update the placeholder values as indicated:

- `<FEED_NAME>`: A descriptive name for the Cloud Asset Inventory feed.
- `<FOLDER_ID>`: Your Google Cloud folder ID.
- `<PROJECT_ID>`: The ID of the project that contains the Pub/Sub topic.
- `<TOPIC_NAME>`: The name of the Pub/Sub topic linked with the `export-asset-changes-to-datadog` subscription.
- `<ASSET_NAMES>`: Comma-separated list of resource full names to receive change events from. Optional if specifying `asset-types`.
- `<ASSET_TYPES>`: Comma-separated list of asset types to receive change events from. Optional if specifying `asset-names`.
- `<CONTENT_TYPE>`: Optional asset content type to receive change events from.

For an organization-level feed:

```shell
gcloud asset feeds create <FEED_NAME> \
  --organization=<ORGANIZATION_ID> \
  --pubsub-topic=projects/<PROJECT_ID>/topics/<TOPIC_NAME> \
  --asset-names=<ASSET_NAMES> \
  --asset-types=<ASSET_TYPES> \
  --content-type=<CONTENT_TYPE>
```

Update the placeholder values as indicated:

- `<FEED_NAME>`: A descriptive name for the Cloud Asset Inventory feed.
- `<ORGANIZATION_ID>`: Your Google Cloud organization ID.
- `<PROJECT_ID>`: The ID of the project that contains the Pub/Sub topic.
- `<TOPIC_NAME>`: The name of the Pub/Sub topic linked with the `export-asset-changes-to-datadog` subscription.
- `<ASSET_NAMES>`: Comma-separated list of resource full names to receive change events from. Optional if specifying `asset-types`.
- `<ASSET_TYPES>`: Comma-separated list of asset types to receive change events from. Optional if specifying `asset-names`.
- `<CONTENT_TYPE>`: Optional asset content type to receive change events from.

Terraform


Create an asset feed

Copy the following Terraform template and substitute the necessary arguments:

For a project-level feed:

```hcl
locals {
  project_id = "<PROJECT_ID>"
}

resource "google_pubsub_topic" "pubsub_topic" {
  project = local.project_id
  name    = "<TOPIC_NAME>"
}

resource "google_pubsub_subscription" "pubsub_subscription" {
  project = local.project_id
  name    = "export-asset-changes-to-datadog"
  topic   = google_pubsub_topic.pubsub_topic.id
}

resource "google_pubsub_subscription_iam_member" "subscriber" {
  project      = local.project_id
  subscription = google_pubsub_subscription.pubsub_subscription.id
  role         = "roles/pubsub.subscriber"
  member       = "serviceAccount:<SERVICE_ACCOUNT_EMAIL>"
}

resource "google_cloud_asset_project_feed" "project_feed" {
  project      = local.project_id
  feed_id      = "<FEED_NAME>"
  content_type = "<CONTENT_TYPE>"  # Optional. Remove if unused.
  asset_names  = ["<ASSET_NAMES>"] # Optional if specifying asset_types. Remove if unused.
  asset_types  = ["<ASSET_TYPES>"] # Optional if specifying asset_names. Remove if unused.

  feed_output_config {
    pubsub_destination {
      topic = google_pubsub_topic.pubsub_topic.id
    }
  }
}
```

Update the placeholder values as indicated:

- `<PROJECT_ID>`: Your Google Cloud project ID.
- `<TOPIC_NAME>`: The name of the Pub/Sub topic to be linked with the `export-asset-changes-to-datadog` subscription.
- `<SERVICE_ACCOUNT_EMAIL>`: The service account email used by the Datadog Google Cloud integration.
- `<FEED_NAME>`: A descriptive name for the Cloud Asset Inventory feed.
- `<ASSET_NAMES>`: List of resource full names to receive change events from. Optional if specifying `asset_types`.
- `<ASSET_TYPES>`: List of asset types to receive change events from. Optional if specifying `asset_names`.
- `<CONTENT_TYPE>`: Optional asset content type to receive change events from.

For a folder-level feed:

```hcl
locals {
  project_id = "<PROJECT_ID>"
}

resource "google_pubsub_topic" "pubsub_topic" {
  project = local.project_id
  name    = "<TOPIC_NAME>"
}

resource "google_pubsub_subscription" "pubsub_subscription" {
  project = local.project_id
  name    = "export-asset-changes-to-datadog"
  topic   = google_pubsub_topic.pubsub_topic.id
}

resource "google_pubsub_subscription_iam_member" "subscriber" {
  project      = local.project_id
  subscription = google_pubsub_subscription.pubsub_subscription.id
  role         = "roles/pubsub.subscriber"
  member       = "serviceAccount:<SERVICE_ACCOUNT_EMAIL>"
}

resource "google_cloud_asset_folder_feed" "folder_feed" {
  billing_project = local.project_id
  folder          = "<FOLDER_ID>"
  feed_id         = "<FEED_NAME>"
  content_type    = "<CONTENT_TYPE>"  # Optional. Remove if unused.
  asset_names     = ["<ASSET_NAMES>"] # Optional if specifying asset_types. Remove if unused.
  asset_types     = ["<ASSET_TYPES>"] # Optional if specifying asset_names. Remove if unused.

  feed_output_config {
    pubsub_destination {
      topic = google_pubsub_topic.pubsub_topic.id
    }
  }
}
```

Update the placeholder values as indicated:

- `<PROJECT_ID>`: Your Google Cloud project ID. This project hosts the Pub/Sub topic and subscription and is used as the feed's billing project.
- `<FOLDER_ID>`: The ID of the folder this feed should be created in.
- `<TOPIC_NAME>`: The name of the Pub/Sub topic to be linked with the `export-asset-changes-to-datadog` subscription.
- `<SERVICE_ACCOUNT_EMAIL>`: The service account email used by the Datadog Google Cloud integration.
- `<FEED_NAME>`: A descriptive name for the Cloud Asset Inventory feed.
- `<ASSET_NAMES>`: List of resource full names to receive change events from. Optional if specifying `asset_types`.
- `<ASSET_TYPES>`: List of asset types to receive change events from. Optional if specifying `asset_names`.
- `<CONTENT_TYPE>`: Optional asset content type to receive change events from.

For an organization-level feed:

```hcl
locals {
  project_id = "<PROJECT_ID>"
}

resource "google_pubsub_topic" "pubsub_topic" {
  project = local.project_id
  name    = "<TOPIC_NAME>"
}

resource "google_pubsub_subscription" "pubsub_subscription" {
  project = local.project_id
  name    = "export-asset-changes-to-datadog"
  topic   = google_pubsub_topic.pubsub_topic.id
}

resource "google_pubsub_subscription_iam_member" "subscriber" {
  project      = local.project_id
  subscription = google_pubsub_subscription.pubsub_subscription.id
  role         = "roles/pubsub.subscriber"
  member       = "serviceAccount:<SERVICE_ACCOUNT_EMAIL>"
}

resource "google_cloud_asset_organization_feed" "organization_feed" {
  billing_project = local.project_id
  org_id          = "<ORGANIZATION_ID>"
  feed_id         = "<FEED_NAME>"
  content_type    = "<CONTENT_TYPE>"  # Optional. Remove if unused.
  asset_names     = ["<ASSET_NAMES>"] # Optional if specifying asset_types. Remove if unused.
  asset_types     = ["<ASSET_TYPES>"] # Optional if specifying asset_names. Remove if unused.

  feed_output_config {
    pubsub_destination {
      topic = google_pubsub_topic.pubsub_topic.id
    }
  }
}
```

Update the placeholder values as indicated:

- `<PROJECT_ID>`: Your Google Cloud project ID. This project hosts the Pub/Sub topic and subscription and is used as the feed's billing project.
- `<TOPIC_NAME>`: The name of the Pub/Sub topic to be linked with the `export-asset-changes-to-datadog` subscription.
- `<SERVICE_ACCOUNT_EMAIL>`: The service account email used by the Datadog Google Cloud integration.
- `<ORGANIZATION_ID>`: Your Google Cloud organization ID.
- `<FEED_NAME>`: A descriptive name for the Cloud Asset Inventory feed.
- `<ASSET_NAMES>`: List of resource full names to receive change events from. Optional if specifying `asset_types`.
- `<ASSET_TYPES>`: List of asset types to receive change events from. Optional if specifying `asset_names`.
- `<CONTENT_TYPE>`: Optional asset content type to receive change events from.

Datadog recommends setting the `asset-types` parameter to the regular expression `.*` to collect changes for all resources.

Note: You must specify at least one value for either the asset-names or asset-types parameter.

See the gcloud asset feeds create reference for the full list of configurable parameters.
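To catch the at-least-one-of rule before running gcloud, a small Python sketch can build the `gcloud asset feeds create` command for a chosen parent scope and reject calls that set neither asset names nor asset types. The helper name and its arguments are hypothetical; the flags are the ones used in the commands above.

```python
from __future__ import annotations

import shlex

# Maps the feed's parent scope to the gcloud flag that sets it.
PARENT_FLAGS = {"project": "--project", "folder": "--folder", "organization": "--organization"}


def feed_create_command(
    feed_name: str,
    scope: str,
    scope_id: str,
    topic_project_id: str,
    topic_name: str,
    asset_names: list[str] | None = None,
    asset_types: list[str] | None = None,
    content_type: str | None = None,
) -> list[str]:
    """Build a `gcloud asset feeds create` command, enforcing the at-least-one rule."""
    if scope not in PARENT_FLAGS:
        raise ValueError(f"unknown scope: {scope!r}")
    if not asset_names and not asset_types:
        raise ValueError("specify at least one of asset_names or asset_types")
    argv = [
        "gcloud", "asset", "feeds", "create", feed_name,
        f"{PARENT_FLAGS[scope]}={scope_id}",
        f"--pubsub-topic=projects/{topic_project_id}/topics/{topic_name}",
    ]
    if asset_names:
        argv.append("--asset-names=" + ",".join(asset_names))
    if asset_types:
        argv.append("--asset-types=" + ",".join(asset_types))
    if content_type:
        argv.append(f"--content-type={content_type}")
    return argv


if __name__ == "__main__":
    # Hypothetical IDs; ".*" follows the recommendation to collect all resource changes.
    print(shlex.join(feed_create_command(
        "datadog-asset-feed", "organization", "123456789012",
        "my-sa-project", "asset-changes", asset_types=[".*"],
    )))
```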

Enable resource changes collection

Click Enable Resource Changes Collection in the Resource Collection tab of the Google Cloud integration page.

Validation

Find your asset change events in the Datadog Event Explorer.

Private Service Connect

Private Service Connect is only available for the US5 and EU Datadog sites.

Use the Google Cloud Private Service Connect integration to visualize connections, data transferred, and dropped packets through Private Service Connect. This gives you visibility into important metrics from your Private Service Connect connections, for both producers and consumers. Private Service Connect (PSC) is a Google Cloud networking product that enables you to access Google Cloud services, third-party partner services, and company-owned applications directly from your Virtual Private Cloud (VPC).

See Access Datadog privately and monitor your Google Cloud Private Service Connect usage in the Datadog blog for more information.

Data Collected

Metrics

| Metric | Description |
|---|---|
| gcp.gce.instance.cpu.utilization (gauge) | Fraction of the allocated CPU that is currently in use on the instance. Note that some machine types allow bursting above 100% usage. Shown as fraction |

Cumulative metrics

Cumulative metrics are imported into Datadog with a .delta metric for each metric name. A cumulative metric is a metric whose value continually increases over time. For example, a metric for sent bytes might be cumulative: each value records the total number of bytes sent by a service up to that time. The delta value represents the change since the previous measurement.

For example:

gcp.gke.container.restart_count is a CUMULATIVE metric. While importing this metric as a cumulative metric, Datadog adds the gcp.gke.container.restart_count.delta metric which includes the delta values (as opposed to the aggregate value emitted as part of the CUMULATIVE metric). See Google Cloud metric kinds for more information.
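The relationship between a cumulative series and its `.delta` companion is a difference between consecutive points. This Python sketch assumes points sorted by timestamp and does not handle counter resets or Google Cloud interval start times, which a real importer must account for; the sample values are hypothetical.

```python
from __future__ import annotations


def to_delta_points(points: list[tuple[int, float]]) -> list[tuple[int, float]]:
    """Convert (timestamp, cumulative_value) points into (timestamp, delta) points.

    Each delta is the change since the previous point, so the first point has
    no delta and is dropped.
    """
    return [
        (ts, value - prev_value)
        for (_, prev_value), (ts, value) in zip(points, points[1:])
    ]


if __name__ == "__main__":
    # Hypothetical restart_count samples, one per minute.
    print(to_delta_points([(0, 0), (60, 2), (120, 2), (180, 5)]))
```

For the sample series, the cumulative values 0, 2, 2, 5 become the deltas 2, 0, 3 at timestamps 60, 120, and 180.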

Events

All service events generated by your Google Cloud Platform are forwarded to your Datadog Event Explorer.

Service Checks

The Google Cloud Platform integration does not include any service checks.

Tags

Tags are automatically assigned based on a variety of Google Cloud Platform and Google Compute Engine configuration options. The project_id tag is added to all metrics. Additional tags are collected from the Google Cloud Platform when available, and vary based on metric type.

Additionally, Datadog collects the following as tags:

- Any hosts with `<key>:<value>` labels.
- Custom labels from Google Pub/Sub, GCE, Cloud SQL, and Cloud Storage.
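As a rough illustration of the tagging described above, this Python sketch turns a resource's labels into `<key>:<value>` tags and prepends the `project_id` tag. It does not model any normalization Datadog applies to tag keys or values, and the function name and labels are hypothetical.

```python
from __future__ import annotations


def labels_to_tags(project_id: str, labels: dict[str, str]) -> list[str]:
    """Return the project_id tag followed by one `<key>:<value>` tag per label, sorted by key."""
    tags = [f"project_id:{project_id}"]
    tags.extend(f"{key}:{value}" for key, value in sorted(labels.items()))
    return tags


if __name__ == "__main__":
    print(labels_to_tags("my-proj", {"team": "db", "env": "prod"}))
```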

Troubleshooting

Incorrect metadata for user-defined gcp.logging metrics?

For non-standard gcp.logging metrics, such as metrics beyond Datadog’s out-of-the-box logging metrics, the metadata applied may not be consistent with Google Cloud Logging.

In these cases, set the metadata manually: navigate to the metric summary page, search for and select the metric in question, and click the pencil icon next to the metadata.

Need help? Contact Datadog support.
