Monitoring Kafka Queues

Overview

In event-driven pipelines, queuing and streaming technologies such as Kafka are essential to the successful operation of your systems. Ensuring that messages are being reliably and quickly conveyed between services can be difficult due to the many technologies and teams involved in such an environment. The Datadog Kafka integration and APM enable your team to monitor the health and efficiency of your infrastructure and pipelines.

The Kafka integration

Use the Datadog Kafka integration to visualize the performance of your cluster in real time and correlate Kafka performance with the rest of your applications. Datadog also provides an Amazon MSK integration.

Data Streams Monitoring

Datadog Data Streams Monitoring provides a standardized method for your teams to measure pipeline health and end-to-end latencies for events traversing your system. The deep visibility offered by Data Streams Monitoring enables you to pinpoint faulty producers, consumers, or queues driving delays and lag in the pipeline. You can discover hard-to-debug pipeline issues such as blocked messages, hot partitions, or offline consumers, and collaborate across the relevant infrastructure or application teams.

Distributed traces

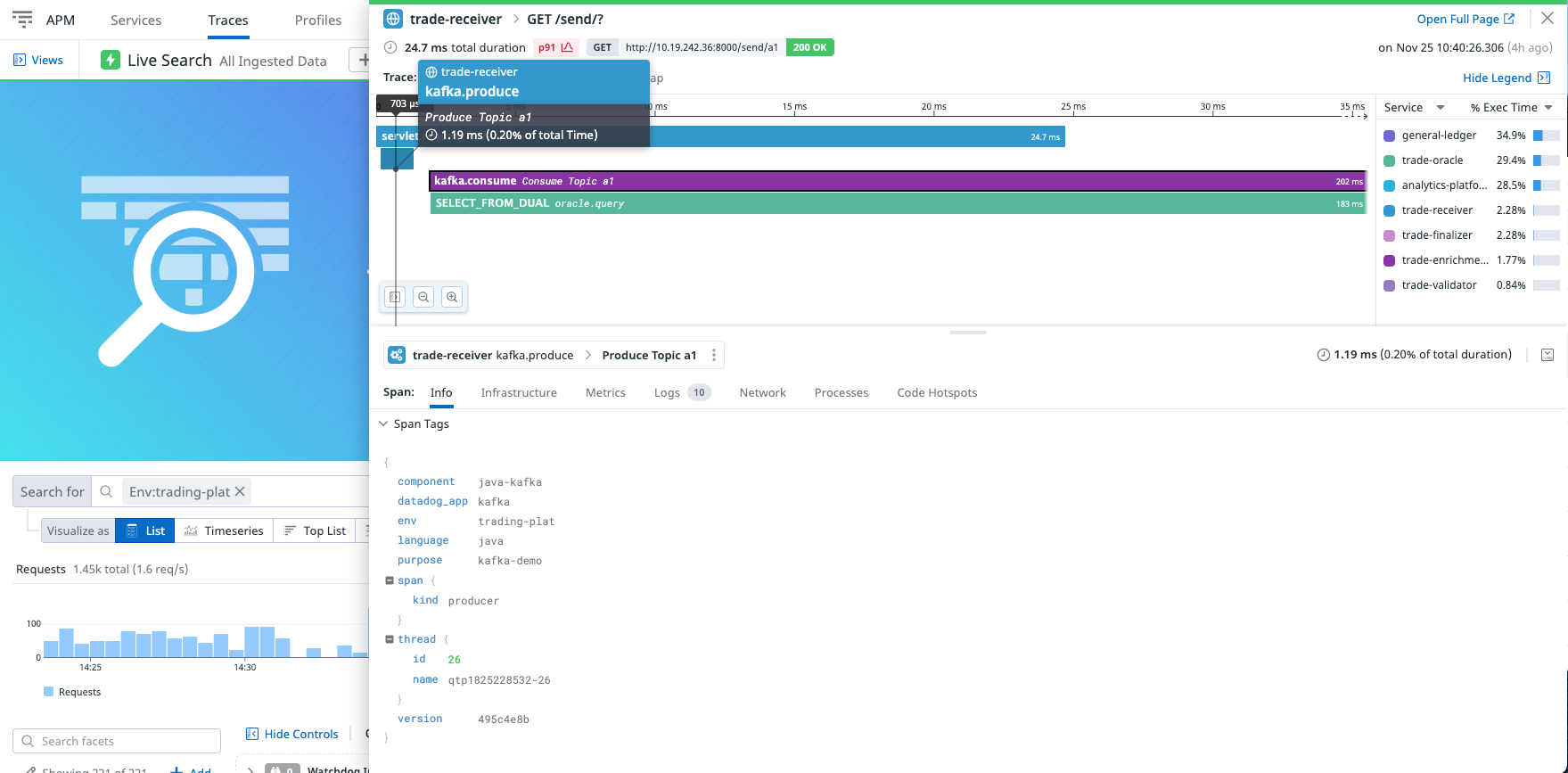

APM’s distributed tracing gives you expanded visibility into the performance of your services by measuring request volume and latency. Create graphs and alerts to monitor your APM data, and visualize the activity of a single request in a flame graph, like the one shown above, to better understand the sources of latency and errors.

APM can automatically trace requests to and from Kafka clients, so you can collect traces without modifying your source code. Datadog injects headers into the Kafka messages to propagate the trace context from the producer to the consumer.
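
To make the header-based propagation concrete, here is a minimal sketch in plain Python. It is not the tracer's implementation: the helper names `inject_context` and `extract_context` are hypothetical, and the two header names are assumed to follow Datadog's propagation style. The headers a given tracer actually writes may differ by language and configuration.

```python
from typing import List, Optional, Tuple

# Kafka message headers are an ordered list of (key, bytes) pairs.
Headers = List[Tuple[str, bytes]]

# Assumed header names, used here for illustration only.
TRACE_ID_HEADER = "x-datadog-trace-id"
PARENT_ID_HEADER = "x-datadog-parent-id"


def inject_context(headers: Headers, trace_id: int, span_id: int) -> Headers:
    """Producer side: return a copy of the headers with the trace context appended."""
    return headers + [
        (TRACE_ID_HEADER, str(trace_id).encode("utf-8")),
        (PARENT_ID_HEADER, str(span_id).encode("utf-8")),
    ]


def extract_context(headers: Headers) -> Optional[Tuple[int, int]]:
    """Consumer side: return (trace_id, parent_span_id), or None if either is missing."""
    found = {key: value.decode("utf-8") for key, value in headers}
    if TRACE_ID_HEADER not in found or PARENT_ID_HEADER not in found:
        return None
    return int(found[TRACE_ID_HEADER]), int(found[PARENT_ID_HEADER])


# The producer adds the context; the consumer reads it back from the same message.
sent = inject_context([("content-type", b"application/json")], trace_id=1234, span_id=5678)
print(extract_context(sent))  # prints (1234, 5678)
```

With automatic instrumentation you do not write this code yourself. The sketch only shows why the consumer span can be attached to the producer's trace: the identifiers travel with the message.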

Check which Kafka libraries are supported in our compatibility pages.

Setup

To trace Kafka applications, Datadog traces the producing and consuming calls within the Kafka SDK, so to monitor Kafka you only need to set up APM on your services. See the APM trace collection documentation for guidance on getting started with APM and distributed tracing.

Monitor your application in APM

A classic Kafka setup shows a trace with a producer span and, as its child, a consumer span. Any work that generates a trace on the consumption side is represented by child spans of the consumer span. Each span has a set of tags with the messaging prefix. The following table describes the tags you can find on Kafka spans.

For a broader understanding of span metadata in Datadog, read Span Tags Semantics.

| Name | Type | Description |
|---|---|---|
| messaging.system | string | Kafka |
| messaging.destination | string | The topic the message is sent to. |
| messaging.destination_kind | string | Queue |
| messaging.protocol | string | The name of the transport protocol. |
| messaging.protocol_version | string | The version of the transport protocol. |
| messaging.url | string | The connection string to the messaging system. |
| messaging.message_id | string | A value used by the messaging system as an identifier for the message, represented as a string. |
| messaging.conversation_id | string | The conversation ID for the conversation that the message belongs to, represented as a string. |
| messaging.message_payload_size | number | The size of the uncompressed message payload in bytes. |
| messaging.operation | string | A string identifying the kind of message consumption. Examples: send (a message is sent by a producer), receive (a message is received by a consumer), or process (a message previously received is processed by a consumer). |
| messaging.consumer_id | string | {messaging.kafka.consumer_group} - {messaging.kafka.client_id} if both are present. messaging.kafka.consumer_group if not. |
| messaging.kafka.message_key | string | Message keys in Kafka are used for grouping similar messages to ensure they’re processed on the same partition. They differ from messaging.message_id in that they’re not unique. |
| messaging.kafka.consumer_group | string | Name of the Kafka Consumer Group that is handling the message. Only applies to consumers, not producers. |
| messaging.kafka.client_id | string | Client ID for the Consumer or Producer that is handling the message. |
| messaging.kafka.partition | string | Partition the message is sent to. |
| messaging.kafka.tombstone | string | A Boolean that is true if the message is a tombstone. |
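
The rule for messaging.consumer_id in the table can be sketched as a small function. This is an illustration of the description above, not code from the tracer; the function name `consumer_id` and the use of a plain dictionary for span tags are assumptions.

```python
from typing import Dict, Optional


def consumer_id(tags: Dict[str, str]) -> Optional[str]:
    """Derive messaging.consumer_id from the Kafka-specific span tags."""
    group = tags.get("messaging.kafka.consumer_group")
    client = tags.get("messaging.kafka.client_id")
    if group and client:
        # Both present: "{consumer_group} - {client_id}"
        return f"{group} - {client}"
    # Otherwise fall back to the consumer group alone (None if it is absent too).
    return group


print(consumer_id({
    "messaging.kafka.consumer_group": "billing",
    "messaging.kafka.client_id": "worker-1",
}))  # prints billing - worker-1
print(consumer_id({"messaging.kafka.consumer_group": "billing"}))  # prints billing
```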

Special use cases

See Java’s tracer documentation for configuration of Kafka.

The Kafka .NET Client documentation states that a typical Kafka consumer application is centered around a consume loop, which repeatedly calls the Consume method to retrieve records one-by-one. The Consume method polls the system for messages. Thus, by default, the consumer span is created when a message is returned and closed before consuming the next message. The span duration is then representative of the computation between one message consumption and the next.

When a message is not processed completely before consuming the next one, or when multiple messages are consumed at once, you can set DD_TRACE_KAFKA_CREATE_CONSUMER_SCOPE_ENABLED to false in your consuming application. When this setting is false, the consumer span is created and immediately closed. If you have child spans to trace, follow the headers extraction and injection documentation for .NET custom instrumentation to extract the trace context.
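
The difference between the two settings can be illustrated with a toy simulation. It is written in Python rather than .NET, uses a logical clock instead of real time, and does not call the Datadog tracer or a Kafka client. The function `trace_consume_loop` and its `scope_enabled` parameter are hypothetical stand-ins for the behavior described above.

```python
from typing import Iterable, List, Tuple

Span = Tuple[str, int, int]  # (message, start_tick, end_tick)


def trace_consume_loop(messages: Iterable[Tuple[str, int]], scope_enabled: bool) -> List[Span]:
    """Simulate consumer spans for (message, processing_ticks) pairs.

    scope_enabled=True mimics the default: the span opens when a message is
    returned and closes just before the next consume call.
    scope_enabled=False mimics the span being created and closed immediately.
    """
    clock = 0
    spans: List[Span] = []
    for message, processing_ticks in messages:
        start = clock              # the consume call returns the message here
        clock += processing_ticks  # application work before the next consume call
        end = clock if scope_enabled else start
        spans.append((message, start, end))
    return spans


work = [("a", 3), ("b", 5)]
print(trace_consume_loop(work, scope_enabled=True))   # prints [('a', 0, 3), ('b', 3, 8)]
print(trace_consume_loop(work, scope_enabled=False))  # prints [('a', 0, 0), ('b', 3, 3)]
```

With the scope enabled, each span's duration covers the work done between two consume calls. With it disabled, the spans have no duration, which is why any processing you want to see in the trace needs its own child spans created from the extracted context.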

The .NET tracer has supported tracing Confluent.Kafka since v1.27.0. The trace context propagation API has been available since v2.7.0.

The Kafka integration provides tracing of the ruby-kafka gem. Follow Ruby’s tracer documentation to enable it.

Disable tracing for Kafka

If you want to disable Kafka tracing on an application, set the appropriate language-specific configuration.
