AI-Enhanced Static Code Analysis

Static Code Analysis (SAST) uses AI to help automate detection, validation, and remediation across the vulnerability management lifecycle. This page provides an overview of these features.

Summary of AI features in SAST

| Step of vulnerability management lifecycle | Feature | Trigger point | Impact |
|---|---|---|---|
| Detection | Malicious PR protection: Detects potentially malicious changes or suspicious diffs | At PR time | Flags PRs that introduce novel risky code |
| Validation | False positive filtering: Deprioritizes low-likelihood findings | After scan | Reduces noise so teams can focus on actual issues |
| Remediation | Batched remediation: Generates suggested fixes (and optionally PRs) for one or multiple vulnerabilities | After scan | Reduces developer effort and accelerates the fix cycle |

Detection

Join the Preview!

Malicious PR protection is in Preview and supports GitHub repositories only. Click Request Access and complete the form.

Malicious PR protection uses LLMs to detect and prevent malicious code changes at scale. It scans pull requests (PRs) submitted to the default branches of your repositories for potentially malicious intent, which helps you:

- Secure code changes from both internal and external contributors

- Scale your code reviews as the volume of AI-assisted code changes increases

- Embed code security into your security incident response workflows

Detection coverage

Malicious code changes come in many different forms. Datadog SAST covers attack vectors such as:

- Malicious code injection

- Attempted secret exfiltration

- Pushing of malicious packages

- CI workflow compromise

Examples include the tj-actions/changed-files breach (March 2025) and obfuscation of malicious code in npm packages (September 2025).

Search and filter results

Datadog SAST detections on potentially malicious PRs appear in Security Signals under the rule ID def-000-wnp.

Each detection has one of two verdicts: malicious or benign. Filter for them with the following queries:

- @malicious_pr_protection.scan.verdict:malicious

- @malicious_pr_protection.scan.verdict:benign

Signals can be triaged directly in Datadog (assign, create a case, or declare an incident), or routed externally using Datadog Workflow Automation.
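
If you script signal searches, the verdict filters above can be composed programmatically. The following is a minimal sketch, not an official client: the helper names `verdict_query` and `search_body` are hypothetical, and the request-body layout (`filter.query`, `filter.from`, `filter.to`, `page.limit`) is an assumption that should be checked against the Datadog API reference before use.

```python
import json

# Verdict values documented for malicious PR protection.
VERDICTS = ("malicious", "benign")


def verdict_query(verdict: str) -> str:
    """Return the Security Signals filter string for one verdict."""
    if verdict not in VERDICTS:
        raise ValueError(f"unknown verdict: {verdict!r}")
    return f"@malicious_pr_protection.scan.verdict:{verdict}"


def search_body(verdict: str, time_from: str = "now-7d", limit: int = 25) -> dict:
    """Build a request body for a signals search call.

    ASSUMPTION: the field layout below is illustrative and may not match
    the actual API schema.
    """
    return {
        "filter": {"query": verdict_query(verdict), "from": time_from, "to": "now"},
        "page": {"limit": limit},
    }


if __name__ == "__main__":
    # Print the body you would send for malicious verdicts from the last 7 days.
    print(json.dumps(search_body("malicious"), indent=2))
```

Validating the verdict before building the query keeps typos from silently returning an empty result set.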

Validation and triage

False positive filtering

For a subset of SAST vulnerabilities, Bits AI reviews the context of the finding, assesses whether it is more likely to be a true or false positive, and provides a short explanation of its reasoning.

To narrow down your initial list for triage, in Vulnerabilities, select Filter out false positives. This option uses the -bitsAssessment:"False Positive" query.
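
As a rough illustration of what the exclusion term does, the sketch below appends it to an existing query string and applies the same logic to a local list of findings. The dictionary shape, the `"True Positive"` value, and the `severity:high` term are hypothetical and used only for illustration; only the `-bitsAssessment:"False Positive"` term comes from this page.

```python
# Exclusion term used by the "Filter out false positives" option.
FALSE_POSITIVE_FILTER = '-bitsAssessment:"False Positive"'


def with_false_positive_filter(query: str = "") -> str:
    """Append the exclusion term to a query unless it is already present."""
    query = query.strip()
    if FALSE_POSITIVE_FILTER in query:
        return query
    return f"{query} {FALSE_POSITIVE_FILTER}".strip()


def drop_false_positives(findings: list[dict]) -> list[dict]:
    """Local equivalent of the negated filter.

    Findings without an assessment are kept, because the query only
    excludes findings explicitly assessed as "False Positive".
    """
    return [f for f in findings if f.get("bitsAssessment") != "False Positive"]


if __name__ == "__main__":
    # Hypothetical findings: one per assessment state.
    sample = [
        {"id": "a", "bitsAssessment": "True Positive"},
        {"id": "b", "bitsAssessment": "False Positive"},
        {"id": "c"},  # not assessed by Bits AI
    ]
    print(with_false_positive_filter("severity:high"))
    print([f["id"] for f in drop_false_positives(sample)])
```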

Each finding includes a section with an explanation of the assessment. You can provide Bits AI with feedback on its assessment using a thumbs up 👍 or thumbs down 👎.

Supported CWEs

False positive filtering is supported for the following CWEs:

Remediation

Join the Preview!

AI-suggested remediation for SAST is powered by the Bits AI Dev Agent and is in Preview. To sign up, click Request Access and complete the form.

Datadog SAST uses the Bits AI Dev Agent to generate single and bulk remediations for vulnerabilities.

Fix a single vulnerability

For each SAST vulnerability, open the side panel to see a pre-generated fix under the Remediation section. For other findings (such as code quality), you can click the Fix with Bits button to generate a fix.

From each remediation, you can modify the fix suggested by Bits AI directly in the session view, or click Create a pull request to apply the remediation back to your source code repository.

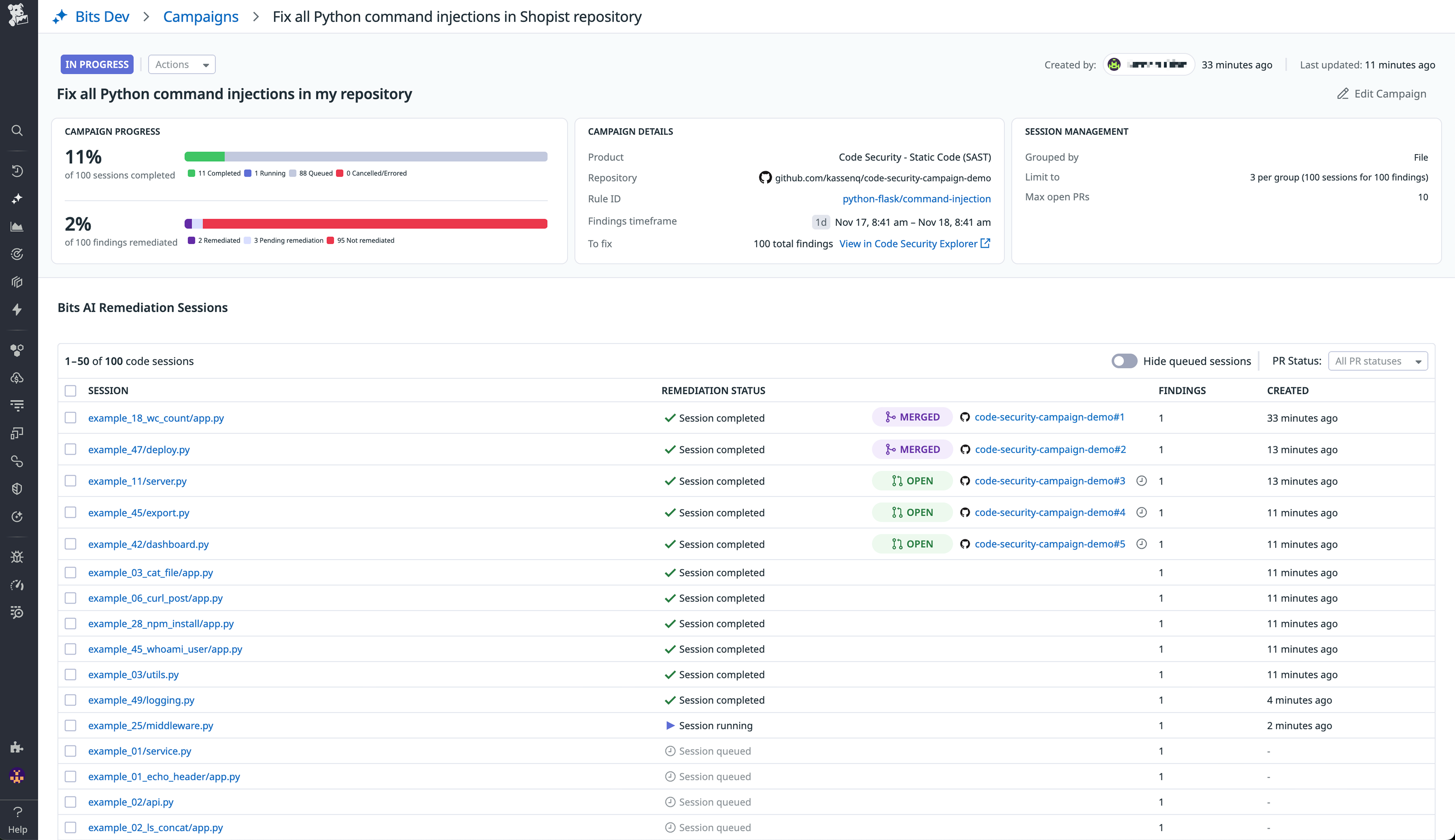

Fix multiple vulnerabilities in batches with campaigns

Instead of filing an individual pull request for each vulnerability, you can use bulk-remediation campaigns in Datadog SAST to fix multiple vulnerabilities at once.

A campaign is how teams in Datadog operationalize remediation at scale. Creating a campaign tells Datadog to generate remediations for a certain subset of vulnerabilities in your codebase. Each campaign can also automatically create pull requests to apply fixes for all vulnerabilities in the scope of the campaign.

A campaign defines the following:

| Section | Description | Options |
|---|---|---|
| Repositories | Defines which repo(s) and paths to scan | - Set the GitHub repo URL.<br>- Use Paths to limit rule scanning to certain directories or files. |
| Rule | Selects which SAST rule to apply | - Select a rule from the dropdown.<br>- View description, code example, and number of matches.<br>- Click Show More to see remediation steps. |
| Session Management | Controls how PRs are grouped and submitted | - Create one PR per:<br>• Repository: One PR for all findings in the repo<br>• File: One PR per file with findings<br>• Finding: One PR per finding (most granular)<br>- Allow [n] open PRs at a time: Prevents too many PRs at once<br>- Limit [n] findings per PR: Prevents overly large PRs |
| Custom Instructions | Customizes how the AI proposes remediations | - Custom Instructions: Guide the AI on how to tweak fixes (for example, Update CHANGELOG.md with a summary of changes, Start all PR titles with [autofix]). |
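
To make the Session Management options concrete, here is a small sketch of how findings could be grouped into PR batches under those settings. It is an illustration only and does not describe how Datadog implements campaigns: the `Finding` fields, the function names, and the choice to split an oversized group into several PRs are all assumptions.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    """Hypothetical finding record; field names are illustrative."""
    repo: str
    path: str
    finding_id: str


def _group_key(finding: Finding, mode: str) -> tuple:
    """Map a finding to its PR group for the chosen 'Create one PR per' mode."""
    if mode == "repository":
        return (finding.repo,)
    if mode == "file":
        return (finding.repo, finding.path)
    if mode == "finding":
        return (finding.repo, finding.path, finding.finding_id)
    raise ValueError(f"unknown grouping mode: {mode!r}")


def plan_prs(findings: list[Finding], mode: str, max_findings_per_pr: int) -> list[list[Finding]]:
    """Group findings, then split each group so no batch exceeds the limit.

    ASSUMPTION: a group larger than the limit becomes several PRs.
    """
    if max_findings_per_pr < 1:
        raise ValueError("max_findings_per_pr must be at least 1")
    groups: dict[tuple, list[Finding]] = defaultdict(list)
    for finding in findings:
        groups[_group_key(finding, mode)].append(finding)
    batches = []
    for group in groups.values():
        for start in range(0, len(group), max_findings_per_pr):
            batches.append(group[start:start + max_findings_per_pr])
    return batches


def next_to_open(batches: list, open_count: int, max_open_prs: int) -> list:
    """Return the batches that can be opened now without exceeding the open-PR cap."""
    slots = max(0, max_open_prs - open_count)
    return batches[:slots]
```

For example, three findings in one file and one finding in another file of the same repository yield two batches under `plan_prs(findings, "repository", 2)`, three under `"file"`, and four under `"finding"`.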

Campaign in progress

After you click Create Campaign, Bits AI Dev Agent does the following:

- Loads SAST findings for the selected repo(s), path(s), and rule.

- Generates patches for each group of findings.

- Creates PRs according to your session rules. Note: Automatic PR creation is opt-in through Settings.

- Lets you review, edit, and merge fixes by interacting directly with the Agent.

The campaign page shows the findings that Bits AI is actively remediating, along with how many have been remediated and how many are pending. Security and development teams can use it to track remediation progress.
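
If you track campaign progress outside Datadog, the counts described above reduce to a simple tally. The sketch below assumes you already have a list of per-finding status strings; the status values `"remediated"` and `"pending"` mirror the wording on this page, but the function and its output shape are hypothetical.

```python
from collections import Counter


def campaign_progress(statuses: list[str]) -> dict:
    """Summarize how many findings are remediated or pending."""
    counts = Counter(statuses)
    total = sum(counts.values())
    remediated = counts.get("remediated", 0)
    return {
        "total": total,
        "remediated": remediated,
        "pending": counts.get("pending", 0),
        # Guard against division by zero for an empty campaign.
        "percent_remediated": round(100 * remediated / total, 1) if total else 0.0,
    }
```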

You can click a session to view the code changes in more detail and chat with the Bits AI Dev Agent to ask for changes.

Session details

A remediation session shows the full lifecycle of an AI-generated fix. It includes the original security finding, a proposed code change, an explanation of how and why the AI made the fix, and, if enabled, CI results from applying the patch.

Session details make each remediation transparent, reviewable, and auditable, helping you safely adopt AI in your secure development workflow.

Session details include the following:

- Header: Identifies the campaign, time of session creation, and affected branch, file, or PR.

- Title: Summarizes the remediation goal based on the vulnerability being fixed.

- Session metadata: Indicates whether the session is a part of a campaign, the AI model used, and related PR metadata.

- Right panel:

  - Suggested code change: Displays a diff of the vulnerable code and the AI-generated patch.

  - Create/View Pull Request: Creates a GitHub PR to apply the remediation, or opens an existing linked GitHub PR for you to review or merge the proposed changes.

- Left panel: Displays the chat message history, for example:

  - Prompt for remediation: Asks for remediation(s) and explains the triggered rule, the security risk, and why the original code is unsafe.

  - Task list: Shows exactly how the AI read the code, understood the context, chose its approach, and applied the fix. This is helpful for auditability, compliance, and trust. You can confirm that the AI isn’t rewriting code blindly, but applying defensible and explainable patterns.

  - CI logs from GitHub: Describes whether the AI-generated patch breaks anything downstream, and includes full error logs. This helps you validate that a fix is not only secure but also safe to deploy, without needing to leave the platform.

  - Summary: Recaps the impact of the fix and provides next steps or guidance if tests failed or the PR needs to be rebased.

  - Bits AI chat field: Lets you interactively refine the fix or ask the AI follow-up questions. This makes remediation collaborative and tunable, giving security engineers and developers control without needing to write the patch themselves.
