Set Up Pipelines

Overview

The pipelines and processors outlined in this documentation are specific to on-premises logging environments. To aggregate, process, and route cloud-based logs, see Log Management Pipelines.

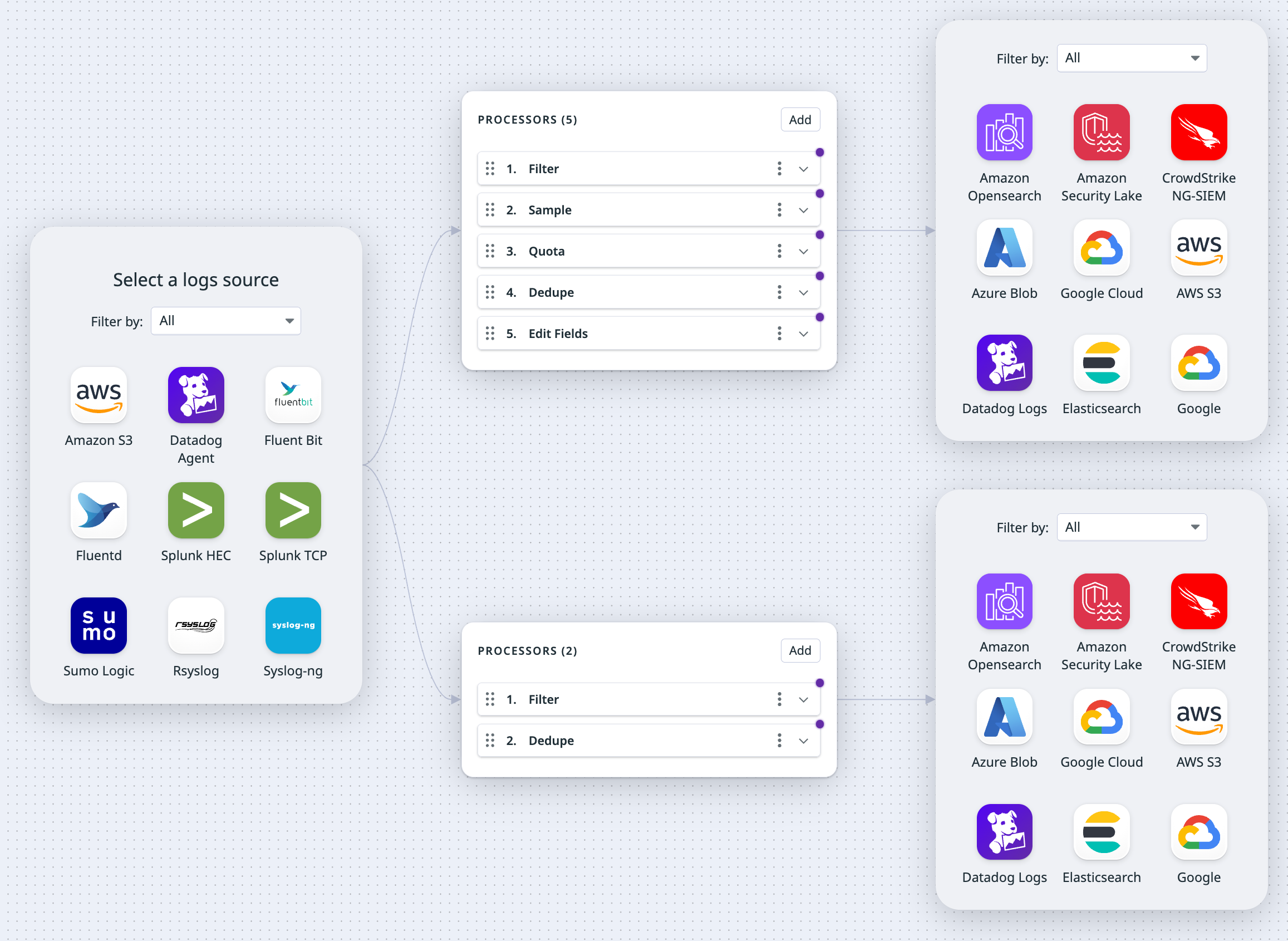

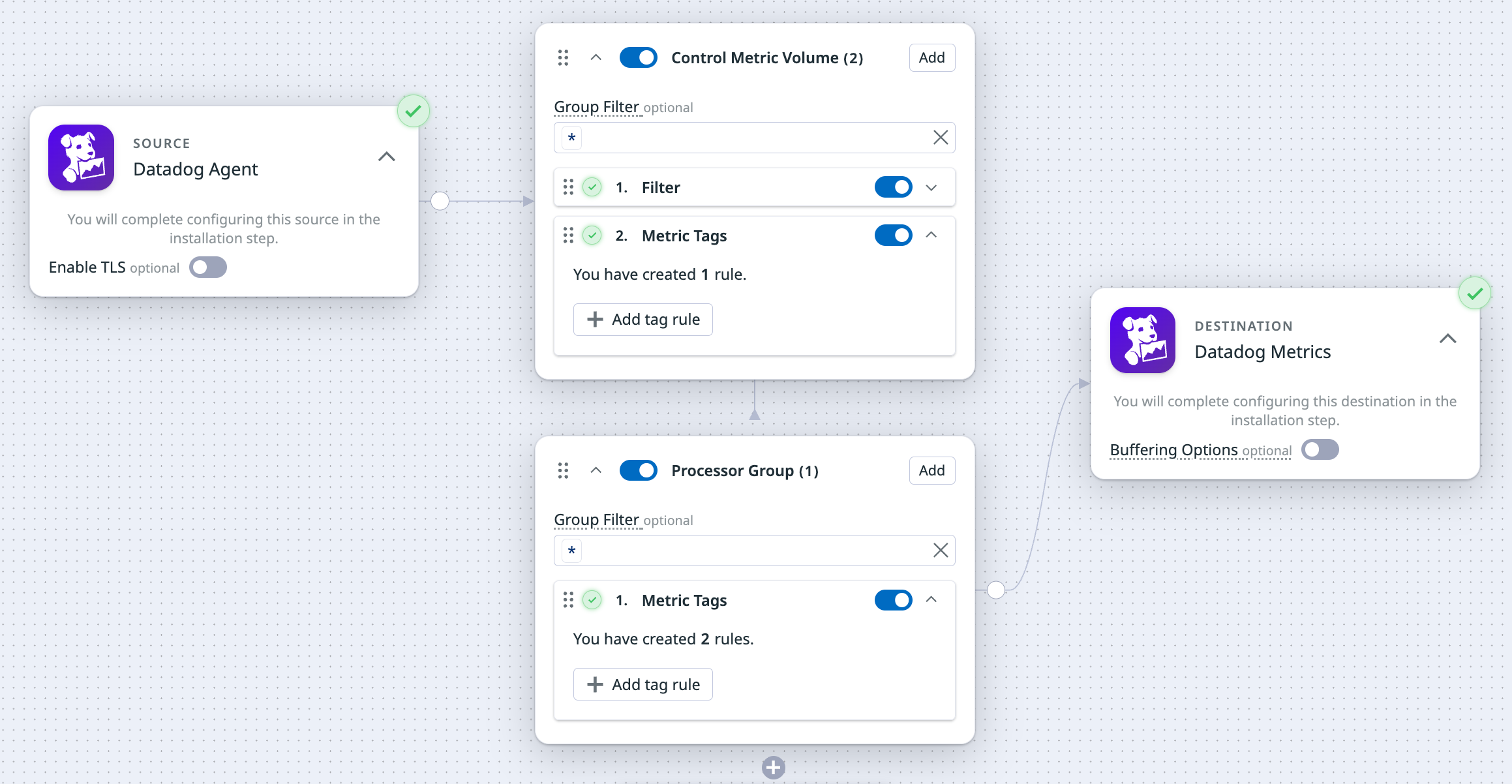

In Observability Pipelines, a pipeline is a sequential path with three types of components:

- Source: Receives data from your data source (for example, the Datadog Agent).

- Processors: Enrich and transform your data.

- Destinations: Where your processed data is sent.
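
The source, processors, destinations flow described above can be pictured with a small sketch. This is only an illustrative model, not how the Observability Pipelines Worker is implemented: the `Pipeline` class, the processor functions, and the use of plain lists as destinations are all hypothetical stand-ins.

```python
from dataclasses import dataclass, field
from typing import Callable, Iterable, Optional

Event = dict
# Hypothetical processor type: takes one event, returns a new event or None to drop it.
Processor = Callable[[Event], Optional[Event]]


@dataclass
class Pipeline:
    """Illustrative model: one source, ordered processors, one or more destinations."""
    source: Iterable[Event]
    processors: list[Processor] = field(default_factory=list)
    # Plain lists stand in for real destinations in this sketch.
    destinations: list[list[Event]] = field(default_factory=list)

    def run(self) -> None:
        for event in self.source:
            current: Optional[Event] = dict(event)
            # Processors are applied in order; a None result drops the event.
            for process in self.processors:
                current = process(current)
                if current is None:
                    break
            if current is not None:
                for destination in self.destinations:
                    destination.append(current)


def drop_debug(event: Event) -> Optional[Event]:
    """Filter step: discard events whose status is 'debug'."""
    return None if event.get("status") == "debug" else event


def add_env_tag(event: Event) -> Optional[Event]:
    """Enrichment step: attach an environment attribute."""
    return {**event, "env": "prod"}


archive: list[Event] = []
pipeline = Pipeline(
    source=[
        {"message": "started", "status": "info"},
        {"message": "tick", "status": "debug"},
    ],
    processors=[drop_debug, add_env_tag],
    destinations=[archive],
)
pipeline.run()
```

After `pipeline.run()`, `archive` holds only the enriched `info` event, because the `debug` event was dropped before reaching the destination.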

You can create a pipeline with one of the following methods: the UI, the API, or Terraform.

Set up a pipeline in the UI

- Navigate to Observability Pipelines.

- Select a template based on your use case.

- Select and set up your source.

- Add processors to transform, redact, and enrich your log data.

- If you want to copy a processor, click the copy icon for that processor and then use Command+V to paste it.

- Select and set up destinations for your processed logs.

Add or remove components

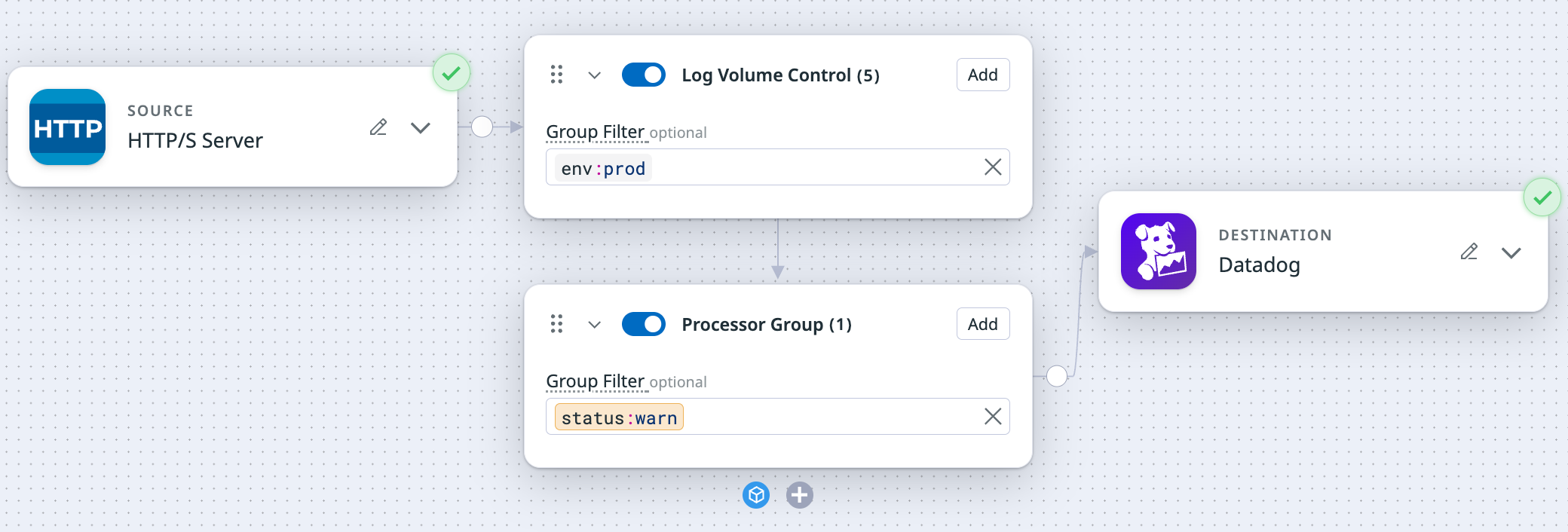

Add another processor group

If you want to add another group of processors for a destination:

- Click the plus sign (+) at the bottom of the existing processor group.

- Click the name of the processor group to update it.

- Optionally, enter a group filter. See Search Syntax for more information.

- Click Add to add processors to the group.

- If you want to copy all processors in a group and paste them into the same processor group or a different group:

- Click the three dots on the processor group.

- Select Copy all processors.

- Select the desired processor group, and then paste the processors into it.

- Use the toggle to enable or disable the processor group, or any individual processor within it.

Notes:

- There is a limit of 10 processor groups for a pipeline canvas.

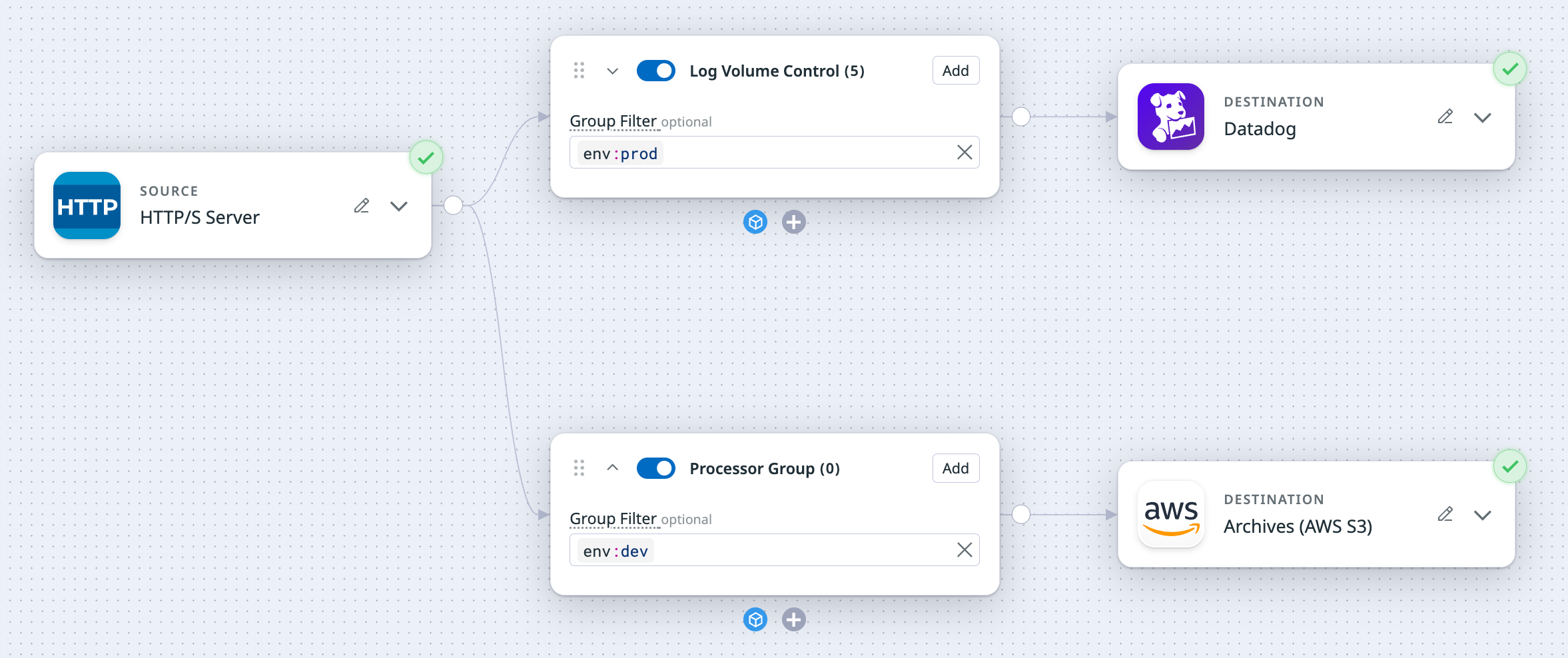

Add another set of processors and destinations

To add another set of processors and destinations to the source, click the plus sign (+) to the left of the processor group.

To delete a processor group, you need to delete all destinations linked to that processor group. When the last destination is deleted, the processor group is removed with it.

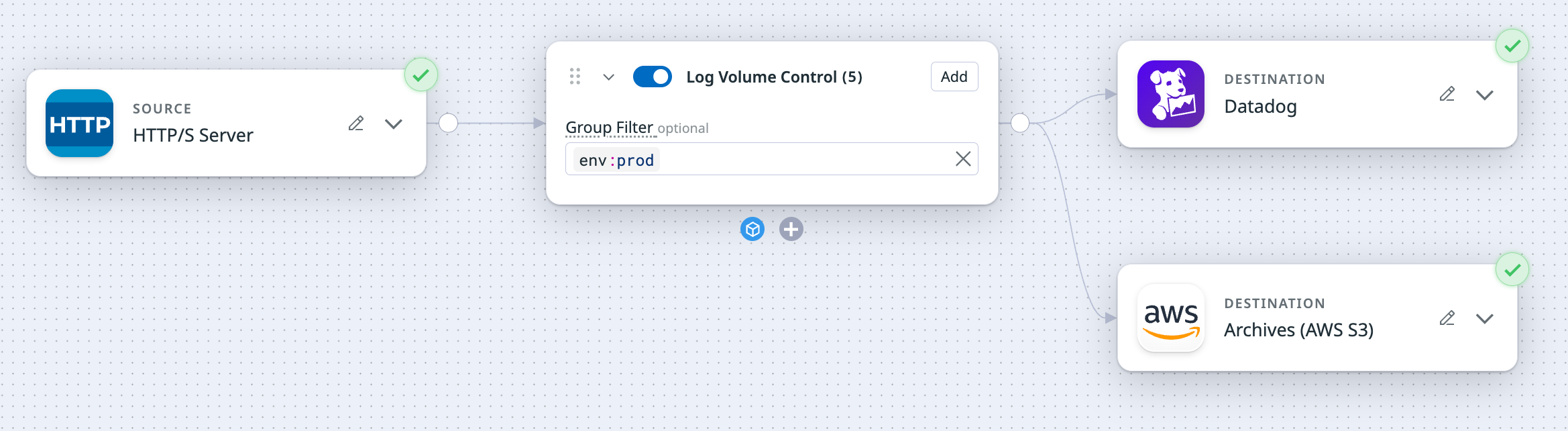

Add another destination to a processor group

If you want to add an additional destination to a processor group, click the plus sign (+) to the right of the processor group.

To delete a destination, click the pencil icon at the top right of the destination, and select Delete node.

- If you delete a destination from a processor group that has multiple destinations, only the deleted destination is removed.

- If you delete a destination from a processor group that only has one destination, both the destination and the processor group are removed.

Notes:

- A pipeline must have at least one destination. If a processor group only has one destination, that destination cannot be deleted.

- You can add a total of three destinations for a pipeline.

- A specific destination can only be added once. For example, you cannot add multiple Splunk HEC destinations.
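
The deletion behavior above can be sketched as follows. This is one possible reading, not the product's implementation: it assumes the "cannot be deleted" rule applies only when the destination is the last one in the whole pipeline, and the `ProcessorGroup` class and `delete_destination` function are hypothetical names used for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ProcessorGroup:
    """Hypothetical model of a processor group and the destinations linked to it."""
    name: str
    destinations: list[str] = field(default_factory=list)


def delete_destination(
    groups: list[ProcessorGroup], group_name: str, destination: str
) -> list[ProcessorGroup]:
    """Return the groups that remain after deleting one destination."""
    total = sum(len(group.destinations) for group in groups)
    # Assumption: the last destination in the pipeline cannot be deleted.
    if total <= 1:
        raise ValueError("a pipeline must have at least one destination")

    remaining_groups = []
    for group in groups:
        if group.name != group_name:
            remaining_groups.append(group)
            continue
        remaining = [d for d in group.destinations if d != destination]
        if remaining:
            # Other destinations remain, so the group is kept.
            remaining_groups.append(ProcessorGroup(group.name, remaining))
        # Otherwise the group had only this destination and is removed with it.
    return remaining_groups


groups = [
    ProcessorGroup("parse", ["datadog_logs", "splunk_hec"]),
    ProcessorGroup("redact", ["amazon_s3"]),
]
after_first = delete_destination(groups, "parse", "splunk_hec")
after_second = delete_destination(after_first, "redact", "amazon_s3")
```

Here `after_first` still contains both groups, while `after_second` contains only the `parse` group, because `redact` lost its only destination.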

Set up pipeline components

- Navigate to Observability Pipelines.

- Select the Metric Tag Governance template.

- Set up the Datadog Agent source.

- Add processors to filter and transform your metrics.

- If you want to copy a processor, click the copy icon for that processor and then paste it (Cmd+V on Mac, Ctrl+V on Windows/Linux).

- Set up the Datadog Metrics destination.

Add another processor group

If you want to add another group of processors for a destination:

- Click the plus sign (+) at the bottom of the existing processor group.

- Click the name of the processor group to update it.

- Optionally, enter a group filter. See Search Syntax for more information.

- Click Add to add processors to the group.

- If you want to copy all processors in a group and paste them into the same processor group or a different group:

- Click the three dots on the processor group.

- Select Copy all processors.

- Select the desired processor group, and then paste the processors into it.

- Use the toggle to enable or disable the processor group, or any individual processor within it.

Notes:

- There is a limit of 10 processor groups for a pipeline canvas.

Install the Worker and deploy the pipeline

After you have set up your source, processors, and destinations, click Next: Install. See Install the Worker for instructions on how to install the Worker for your platform. See Advanced Worker Configurations for bootstrapping options.

If you want to make changes to your pipeline after you have deployed it, see Update Existing Pipelines.

Enable out-of-the-box monitors for your pipeline

- Navigate to the Pipelines page and find your pipeline.

- Click Enable monitors in the Monitors column for your pipeline.

- Click Start to set up a monitor for one of the suggested use cases.

- The metric monitor is configured based on the selected use case. You can update the configuration to further customize it. See the Metric monitor documentation for more information.

Set up a pipeline with the API

Use the Observability Pipelines API to create a pipeline. See the API reference for example request payloads.
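
As a rough sketch of what preparing such a request might look like, the snippet below builds (but does not send) an HTTP request using only the Python standard library. The endpoint path, the `data`/`attributes` envelope, and the component fields in `example_config` are assumptions made for illustration; copy the real values from the API reference. The `DD-API-KEY` and `DD-APPLICATION-KEY` headers are the standard Datadog API authentication headers.

```python
import json
import urllib.request


def build_create_pipeline_request(
    api_key: str,
    app_key: str,
    name: str,
    config: dict,
    site: str = "datadoghq.com",
) -> urllib.request.Request:
    """Build a POST request for creating a pipeline, without sending it."""
    # Assumed endpoint path; confirm it against the API reference.
    url = f"https://api.{site}/api/v2/remote_config/products/obs_pipelines/pipelines"
    # Assumed payload envelope; confirm it against the API reference.
    body = {"data": {"type": "pipelines", "attributes": {"name": name, "config": config}}}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "DD-API-KEY": api_key,
            "DD-APPLICATION-KEY": app_key,
        },
        method="POST",
    )


# Illustrative config only: the component ids, types, and fields are placeholders.
example_config = {
    "sources": [{"id": "agent", "type": "datadog_agent"}],
    "processors": [
        {"id": "filter", "type": "filter", "include": "service:web", "inputs": ["agent"]}
    ],
    "destinations": [{"id": "logs", "type": "datadog_logs", "inputs": ["filter"]}],
}

request = build_create_pipeline_request(
    "<API_KEY>", "<APP_KEY>", "example-pipeline", example_config
)
# To send it: urllib.request.urlopen(request)
```

Building the request separately from sending it makes it easy to inspect the URL and payload before any call is made to your Datadog site.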

After creating the pipeline, install the Worker to send data through the pipeline.

- See Environment Variables for the list of environment variables you need for the different sources, processors, and destinations when you install the Worker.

Note: Pipelines created using the API are read-only in the UI. Use the update a pipeline endpoint to make any changes to an existing pipeline.

See Advanced Worker Configurations for bootstrapping options.

Set up a pipeline with Terraform

Creating pipelines using Terraform is in Preview. Fill out the form to request access.

Version 3.84.0 of the Datadog Terraform provider replaces standalone processors with processor groups, which is a breaking change. If you want to upgrade to version 3.84.0, see the PR description for instructions on how to migrate your existing resources.

You can use the datadog_observability_pipeline resource to create a pipeline using Terraform.

After creating the pipeline, install the Worker to send data through the pipeline.

- See Environment Variables for the list of environment variables you need for the different sources, processors, and destinations when you install the Worker.

Note: Pipelines created using Terraform are read-only in the UI. Use the datadog_observability_pipeline resource to make any changes to an existing pipeline.

See Advanced Worker Configurations for bootstrapping options.

Clone a pipeline

To clone a pipeline in the UI:

- Navigate to Observability Pipelines.

- Select the pipeline you want to clone.

- Click the cog at the top right side of the page, then select Clone.

Delete a pipeline

To delete a pipeline in the UI:

- Navigate to Observability Pipelines.

- Select the pipeline you want to delete.

- Click the cog at the top right side of the page, then select Delete.

Note: You cannot delete an active pipeline. You must stop all Workers for a pipeline before you can delete it.

Pipeline requirements and limits

- A pipeline must have at least one destination. If a processor group only has one destination, that destination cannot be deleted.

- For log pipelines:

- You can add a total of three destinations for a log pipeline.

- A specific destination can only be added once. For example, you cannot add multiple Splunk HEC destinations.
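
If you generate pipeline definitions programmatically, a pre-flight check along these lines could catch violations of the limits above before you submit anything. It is a minimal sketch: `check_pipeline_limits` and its arguments are hypothetical, and it also includes the limit of 10 processor groups noted earlier on this page.

```python
from collections import Counter

MAX_PROCESSOR_GROUPS = 10   # limit per pipeline canvas, as noted earlier on this page
MAX_LOG_DESTINATIONS = 3    # limit for log pipelines


def check_pipeline_limits(
    destination_types: list[str],
    processor_group_count: int,
    is_log_pipeline: bool = True,
) -> list[str]:
    """Return a list of human-readable problems; an empty list means no violations."""
    problems = []
    if not destination_types:
        problems.append("a pipeline must have at least one destination")
    if processor_group_count > MAX_PROCESSOR_GROUPS:
        problems.append(f"at most {MAX_PROCESSOR_GROUPS} processor groups are allowed")
    if is_log_pipeline:
        if len(destination_types) > MAX_LOG_DESTINATIONS:
            problems.append(f"at most {MAX_LOG_DESTINATIONS} destinations are allowed")
        # A specific destination type can only be added once.
        counts = Counter(destination_types)
        for dest_type in sorted(t for t, n in counts.items() if n > 1):
            problems.append(f"destination '{dest_type}' can only be added once")
    return problems


problems = check_pipeline_limits(["splunk_hec", "splunk_hec"], processor_group_count=2)
```

In this example, `problems` contains a single message reporting that `splunk_hec` was added more than once.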
