Getting Started with Notebooks Analysis Features

Overview

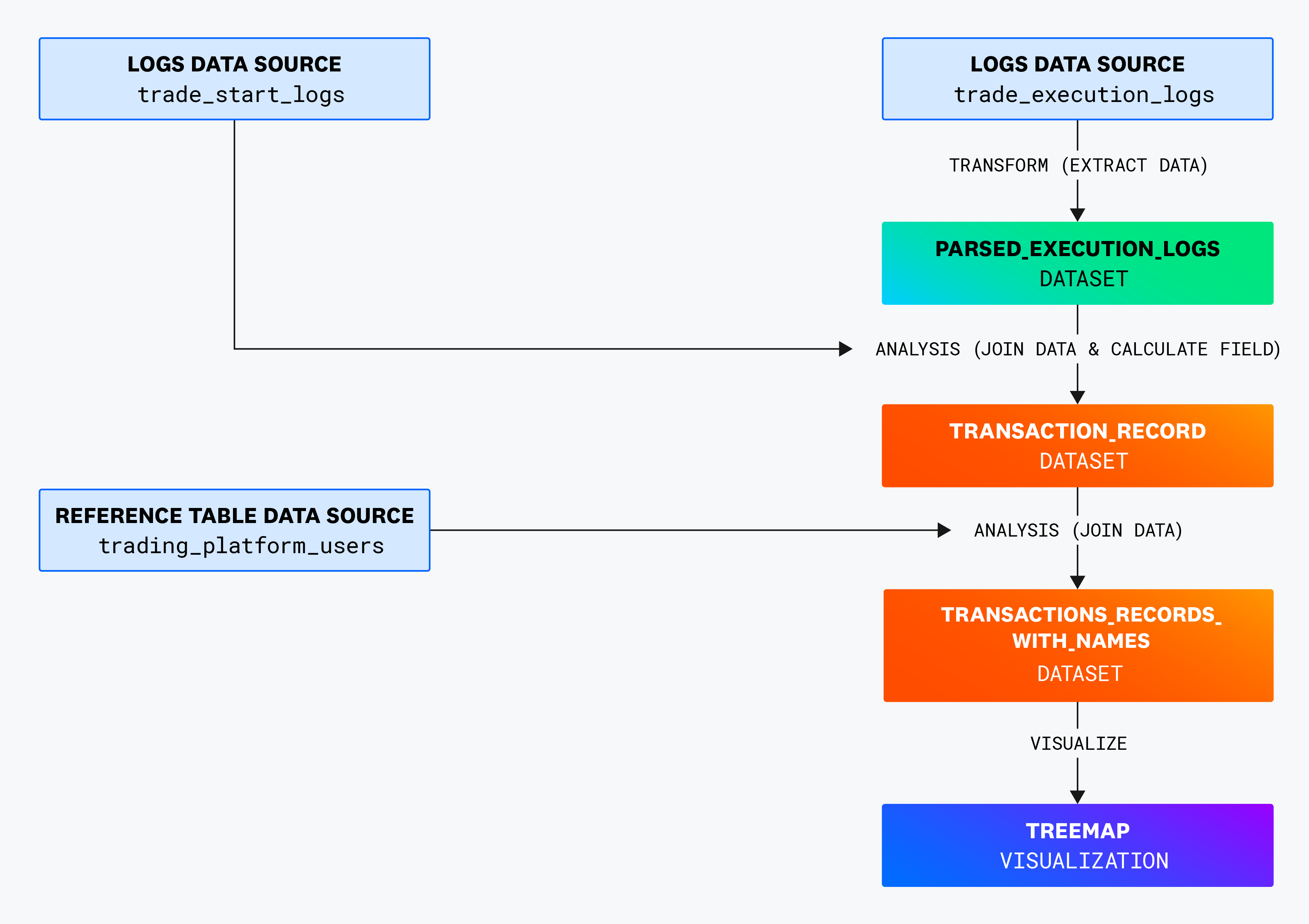

This example notebook walks through a multi-step analysis that transforms and visualizes log and reference data. It begins by importing several data sources, then processes and enriches them through parsing, joins, and transformations to build derived datasets. The walkthrough concludes with a visualization that highlights the final results, helping illustrate the full data journey from raw logs to structured insights.

This diagram shows how the data flows through each transformation and analysis step.

Step-by-step walkthrough of building the analysis

1. Importing data sources

The analysis begins with two primary log data sources:

- trade_start_logs: Contains information about trade initiation

- trade_execution_logs: Contains details about trade execution

2. Extracting transaction IDs

The first transformation uses a transform cell to create parsed_execution_logs. This cell applies grok parsing syntax to extract transaction IDs from the message column of trade_execution_logs, creating a new transaction_id column:

transaction %{notSpace:transaction_id}

An example of the resulting parsed_execution_logs dataset:

| timestamp | host | message | transaction_id |
|---|---|---|---|
| May 29 11:09:28.000 | shopist.internal | Executing trade for transaction 56519 | 56519 |
| May 29 10:59:29.000 | shopist.internal | Executing trade for transaction 23269 | 23269 |
| May 29 10:58:54.000 | shopist.internal | Executing trade for transaction 96870 | 96870 |
| May 31 12:20:01.152 | shopist.internal | Executing trade for transaction 80207 | 80207 |
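To try the extraction outside a notebook, the grok rule above can be approximated with a regular expression. This is a minimal sketch, not the transform cell's actual implementation: it assumes `%{notSpace:...}` behaves like `\S+`, and the names `TRANSACTION_RULE` and `extract_transaction_id` are hypothetical. It also searches anywhere in the message, which may differ from how the grok rule anchors its match.

```python
import re

# Rough regex equivalent of the grok rule `transaction %{notSpace:transaction_id}`:
# the literal word "transaction", one space, then a run of non-whitespace characters.
# Assumption: notSpace corresponds to \S+.
TRANSACTION_RULE = re.compile(r"transaction (?P<transaction_id>\S+)")


def extract_transaction_id(message):
    """Return the transaction ID found in a log message, or None if absent."""
    match = TRANSACTION_RULE.search(message)
    return match.group("transaction_id") if match else None


# Sample rows copied from the parsed_execution_logs table.
execution_logs = [
    {"host": "shopist.internal", "message": "Executing trade for transaction 56519"},
    {"host": "shopist.internal", "message": "Executing trade for transaction 23269"},
]

# Add the derived column without mutating the input rows.
parsed_execution_logs = [
    {**row, "transaction_id": extract_transaction_id(row["message"])}
    for row in execution_logs
]

for row in parsed_execution_logs:
    print(row["message"], "->", row["transaction_id"])
```

Messages that do not contain the word `transaction` followed by a value yield `None` in this sketch, so unparsed rows stay visible instead of being dropped.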

3. Joining trade start and execution logs

The next step uses an analysis cell to create transaction_record. This SQL query selects specific columns from both datasets, transforms the status field (converting ‘INFO’ to ‘OK’), and joins the datasets on transaction_id:

    SELECT
        start_logs.timestamp,
        start_logs.customer_id,
        start_logs.transaction_id,
        start_logs.dollar_value,
        CASE
            WHEN executed_logs.status = 'INFO' THEN 'OK'
            ELSE executed_logs.status
        END AS status
    FROM
        trade_start_logs AS start_logs
    JOIN
        trade_execution_logs AS executed_logs
    ON
        start_logs.transaction_id = executed_logs.transaction_id;

An example of the resulting transaction_record dataset:

| timestamp | customer_id | transaction_id | dollar_value | status |
|---|---|---|---|---|
| May 29 11:09:28.000 | 92446 | 085cc56c-a54f | 838.32 | OK |
| May 29 10:59:29.000 | 78037 | b1fad476-fd4f | 479.96 | OK |
| May 29 10:58:54.000 | 47694 | cb23d1a7-c0cb | 703.71 | OK |
| May 31 12:20:01.152 | 80207 | 2c75b835-4194 | 386.21 | ERROR |
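The join and status rewrite can be reproduced locally to see how the `CASE` expression behaves. The sketch below uses Python's built-in `sqlite3` module as a stand-in for the notebook's query engine. The column layouts of both tables and the raw `status` values (`INFO`, `ERROR`) are assumptions inferred from the example output, and the `ORDER BY` clause is added only to make the result order deterministic.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Assumed schemas: only the columns the query needs.
conn.executescript("""
    CREATE TABLE trade_start_logs (
        timestamp TEXT, customer_id INTEGER, transaction_id TEXT, dollar_value REAL
    );
    CREATE TABLE trade_execution_logs (transaction_id TEXT, status TEXT);
""")

# Two rows taken from the transaction_record example.
conn.executemany("INSERT INTO trade_start_logs VALUES (?, ?, ?, ?)", [
    ("May 29 11:09:28.000", 92446, "085cc56c-a54f", 838.32),
    ("May 31 12:20:01.152", 80207, "2c75b835-4194", 386.21),
])
# Assumed raw statuses before the CASE expression rewrites them.
conn.executemany("INSERT INTO trade_execution_logs VALUES (?, ?)", [
    ("085cc56c-a54f", "INFO"),
    ("2c75b835-4194", "ERROR"),
])

QUERY = """
SELECT
    start_logs.timestamp,
    start_logs.customer_id,
    start_logs.transaction_id,
    start_logs.dollar_value,
    CASE
        WHEN executed_logs.status = 'INFO' THEN 'OK'
        ELSE executed_logs.status
    END AS status
FROM trade_start_logs AS start_logs
JOIN trade_execution_logs AS executed_logs
    ON start_logs.transaction_id = executed_logs.transaction_id
ORDER BY start_logs.timestamp
"""

transaction_record = conn.execute(QUERY).fetchall()
for row in transaction_record:
    print(row)
```

Because this is an inner join, a trade start log with no matching execution log would not appear in the result at all.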

Then the reference table trading_platform_users is added as a data source:

| customer_name | customer_id | account_status |
|---|---|---|
| Meghan Key | 92446 | verified |
| Anthony Gill | 78037 | verified |
| Tanya Mejia | 47694 | verified |
| Michael Kaiser | 80207 | fraudulent |

4. Enriching transaction data with customer information

The analysis cell transaction_record_with_names uses SQL to join the transaction data with customer information. This query selects columns from both datasets, enriching the transaction records with customer names and account status:

    SELECT tr.timestamp, tr.customer_id, tpu.customer_name, tpu.account_status, tr.transaction_id, tr.dollar_value, tr.status
    FROM transaction_record AS tr
    LEFT JOIN trading_platform_users AS tpu ON tr.customer_id = tpu.customer_id;

An example of the resulting transaction_record_with_names dataset:

| timestamp | customer_id | customer_name | account_status | transaction_id | dollar_value | status |
|---|---|---|---|---|---|---|
| May 29 11:09:28.000 | 92446 | Meghan Key | verified | 085cc56c-a54f | 838.32 | OK |
| May 29 10:59:29.000 | 78037 | Anthony Gill | verified | b1fad476-fd4f | 479.96 | OK |
| May 29 10:58:54.000 | 47694 | Tanya Mejia | verified | cb23d1a7-c0cb | 703.71 | OK |
| May 31 12:20:01.152 | 80207 | Michael Kaiser | fraudulent | 2c75b835-4194 | 386.21 | ERROR |
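The enrichment query uses a `LEFT JOIN`, so transactions are kept even when no matching customer exists in the reference table. The following sketch illustrates that behavior with Python's built-in `sqlite3` module standing in for the notebook's query engine. The table schemas are assumptions, and the third transaction (customer `11111`, transaction `hypothetical-0001`) is a hypothetical row added only to show the unmatched case.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Assumed schemas mirroring the columns shown in the example tables.
conn.executescript("""
    CREATE TABLE transaction_record (
        timestamp TEXT, customer_id INTEGER, transaction_id TEXT,
        dollar_value REAL, status TEXT
    );
    CREATE TABLE trading_platform_users (
        customer_name TEXT, customer_id INTEGER, account_status TEXT
    );
""")

conn.executemany("INSERT INTO transaction_record VALUES (?, ?, ?, ?, ?)", [
    ("May 29 11:09:28.000", 92446, "085cc56c-a54f", 838.32, "OK"),
    ("May 31 12:20:01.152", 80207, "2c75b835-4194", 386.21, "ERROR"),
    # Hypothetical row: this customer_id is absent from the reference table.
    ("Jun 01 09:00:00.000", 11111, "hypothetical-0001", 10.0, "OK"),
])
conn.executemany("INSERT INTO trading_platform_users VALUES (?, ?, ?)", [
    ("Meghan Key", 92446, "verified"),
    ("Michael Kaiser", 80207, "fraudulent"),
])

QUERY = """
SELECT tr.customer_id, tpu.customer_name, tpu.account_status, tr.status
FROM transaction_record AS tr
LEFT JOIN trading_platform_users AS tpu ON tr.customer_id = tpu.customer_id
ORDER BY tr.customer_id
"""

enriched = conn.execute(QUERY).fetchall()
for row in enriched:
    print(row)
```

In this sketch the unmatched transaction comes back with `None` for `customer_name` and `account_status`, whereas an inner join would have dropped it.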

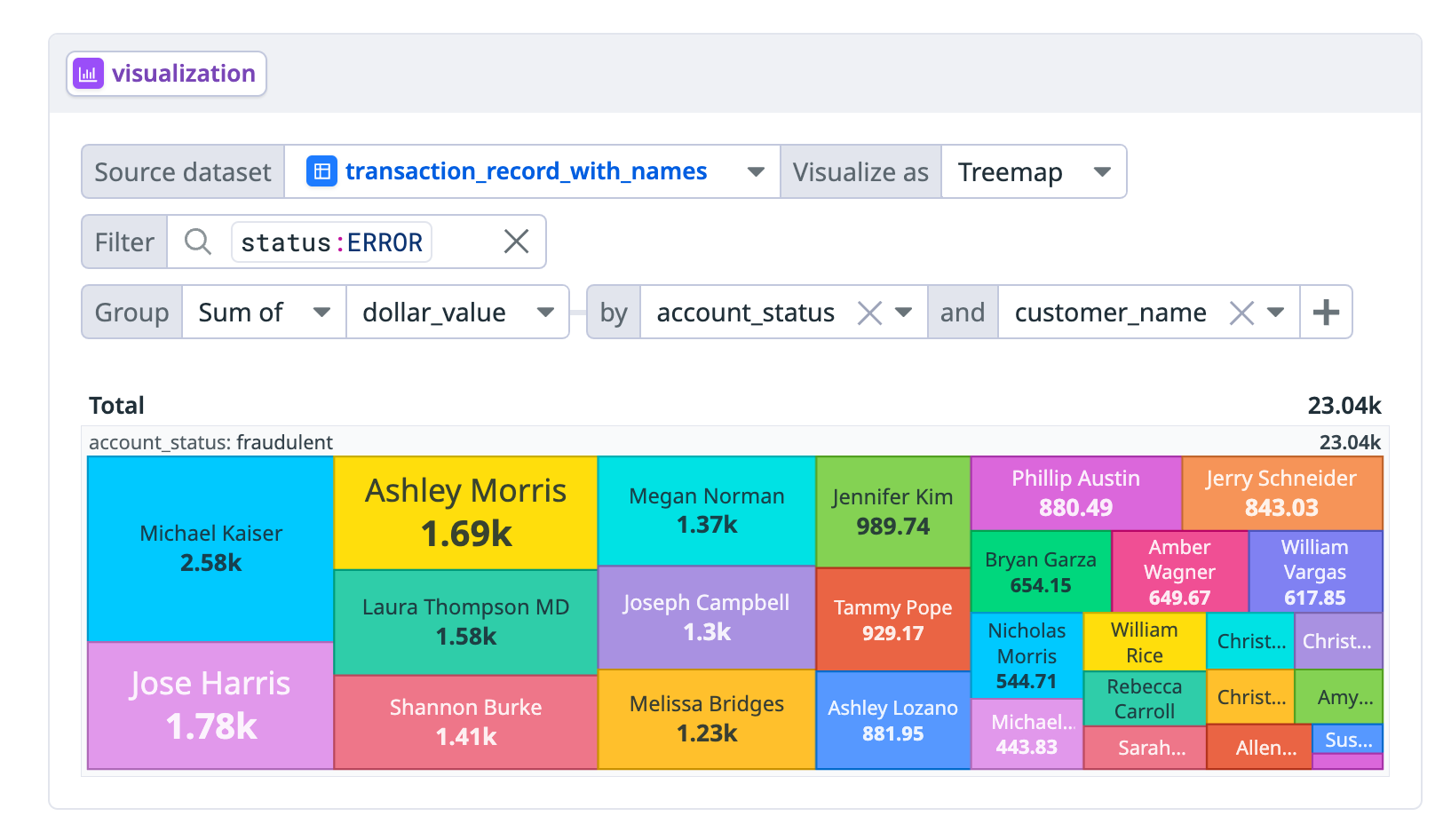

5. Visualizing error transactions

Finally, a treemap visualization cell is created to identify problematic transactions. The visualization uses the transaction_record_with_names dataset with a filter for status:ERROR logs and groups the data by dollar_value, account_status, and customer_name, making it easy to spot patterns in failed transactions.
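The filter and grouping behind the treemap can be approximated in a few lines to check which groups it would show. This is a sketch under assumptions, not the visualization cell's implementation: the function name `error_groups` is hypothetical, and sizing each group by its row count is assumed, since the walkthrough does not state which measure the treemap uses.

```python
from collections import Counter

# Two rows taken from the transaction_record_with_names example.
transaction_record_with_names = [
    {"customer_name": "Meghan Key", "account_status": "verified",
     "dollar_value": 838.32, "status": "OK"},
    {"customer_name": "Michael Kaiser", "account_status": "fraudulent",
     "dollar_value": 386.21, "status": "ERROR"},
]


def error_groups(rows):
    """Count ERROR rows per (dollar_value, account_status, customer_name) group."""
    errors = (row for row in rows if row["status"] == "ERROR")
    return Counter(
        (row["dollar_value"], row["account_status"], row["customer_name"])
        for row in errors
    )


groups = error_groups(transaction_record_with_names)
for key, count in groups.items():
    print(key, count)
```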
