- 重要な情報

- はじめに

- 用語集

- Standard Attributes

- ガイド

- インテグレーション

- エージェント

- OpenTelemetry

- 開発者

- Administrator's Guide

- API

- Partners

- DDSQL Reference

- モバイルアプリケーション

- CoScreen

- CoTerm

- Remote Configuration

- Cloudcraft

- アプリ内

- ダッシュボード

- ノートブック

- DDSQL Editor

- Reference Tables

- Sheets

- Watchdog

- アラート設定

- メトリクス

- Bits AI

- Internal Developer Portal

- Error Tracking

- Change Tracking

- Service Management

- Actions & Remediations

- インフラストラクチャー

- Cloudcraft

- Resource Catalog

- ユニバーサル サービス モニタリング

- Hosts

- コンテナ

- Processes

- サーバーレス

- ネットワークモニタリング

- Cloud Cost

- アプリケーションパフォーマンス

- APM

- Continuous Profiler

- データベース モニタリング

- Data Streams Monitoring

- Data Jobs Monitoring

- Data Observability

- Digital Experience

- RUM & セッションリプレイ

- Synthetic モニタリング

- Continuous Testing

- Product Analytics

- Software Delivery

- CI Visibility (CI/CDの可視化)

- CD Visibility

- Deployment Gates

- Test Visibility

- Code Coverage

- Quality Gates

- DORA Metrics

- Feature Flags

- セキュリティ

- セキュリティの概要

- Cloud SIEM

- Code Security

- クラウド セキュリティ マネジメント

- Application Security Management

- Workload Protection

- Sensitive Data Scanner

- AI Observability

- ログ管理

- Observability Pipelines(観測データの制御)

- ログ管理

- CloudPrem

- 管理

(レガシー) 高可用性と災害復旧

This product is not supported for your selected Datadog site. ().

Observability Pipelines は、US1-FED Datadog サイトでは利用できません。

このガイドは、大規模な本番環境レベルのデプロイメント向けです。

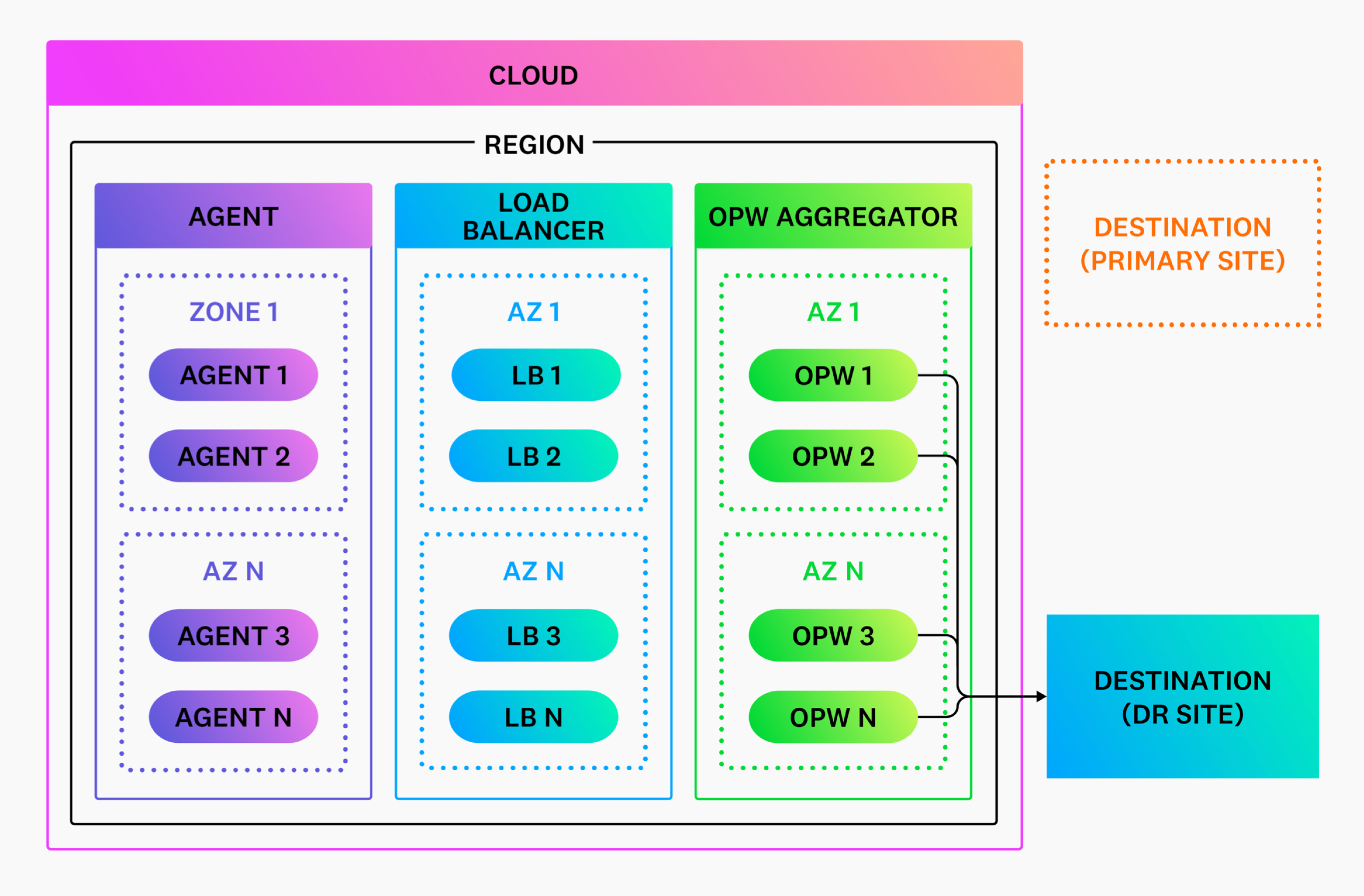

観測可能性パイプラインの文脈では、高可用性とは、システムに問題が発生しても観測可能性パイプラインワーカーが利用可能であることを指します。

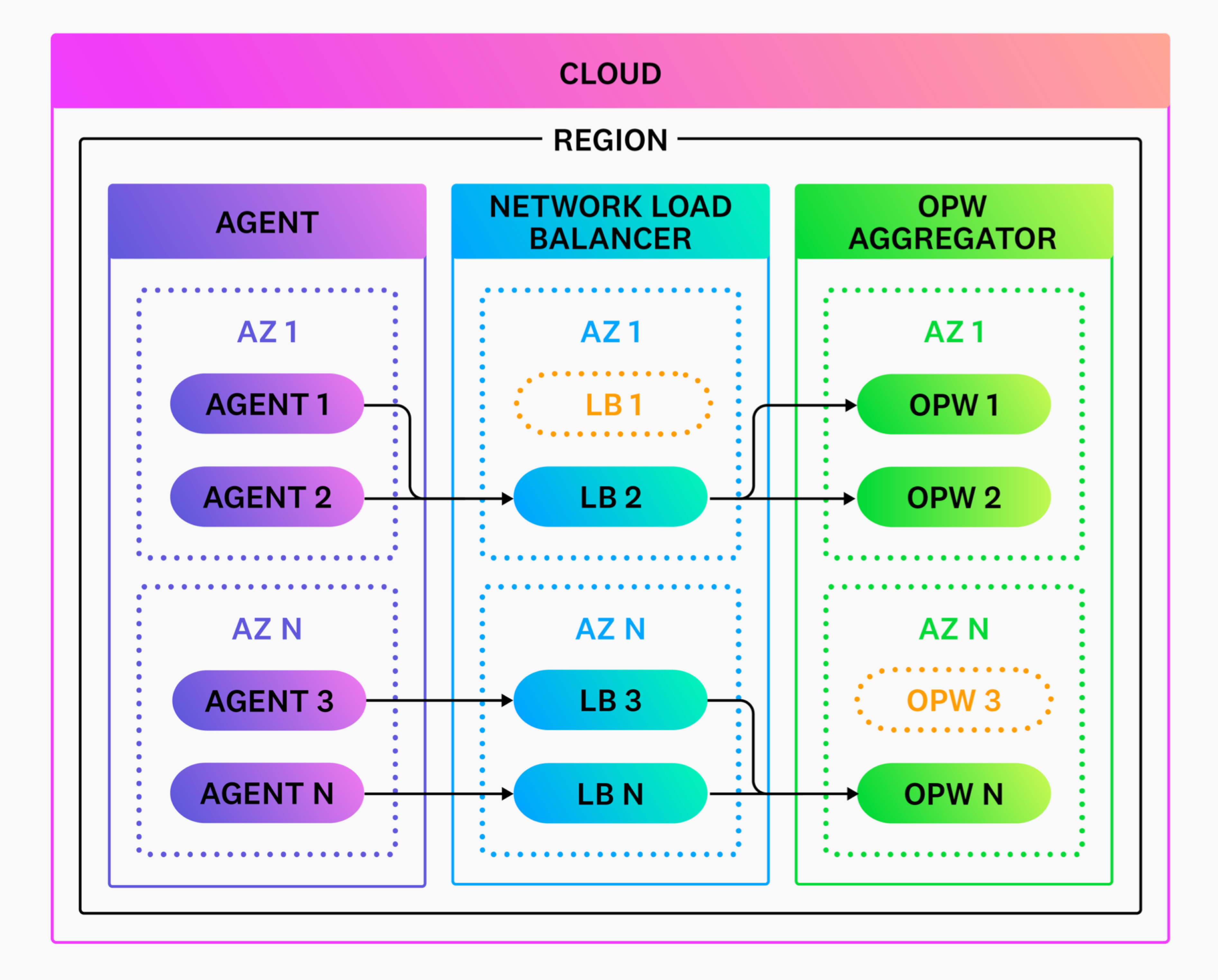

高可用性を実現するには

- 各アベイラビリティゾーンに少なくとも 2 つの観測可能性パイプラインワーカーインスタンスをデプロイします。

- 観測可能性パイプラインワーカーを少なくとも 2 つのアベイラビリティゾーンにデプロイします。

- 観測可能性パイプラインワーカーインスタンス間のトラフィックをバランスさせるロードバランサーで観測可能性パイプラインワーカーインスタンスを前面化します。詳細については、キャパシティプランニングとスケーリングを参照してください。

障害シナリオの軽減

観測可能性パイプラインワーカープロセスの問題への対応

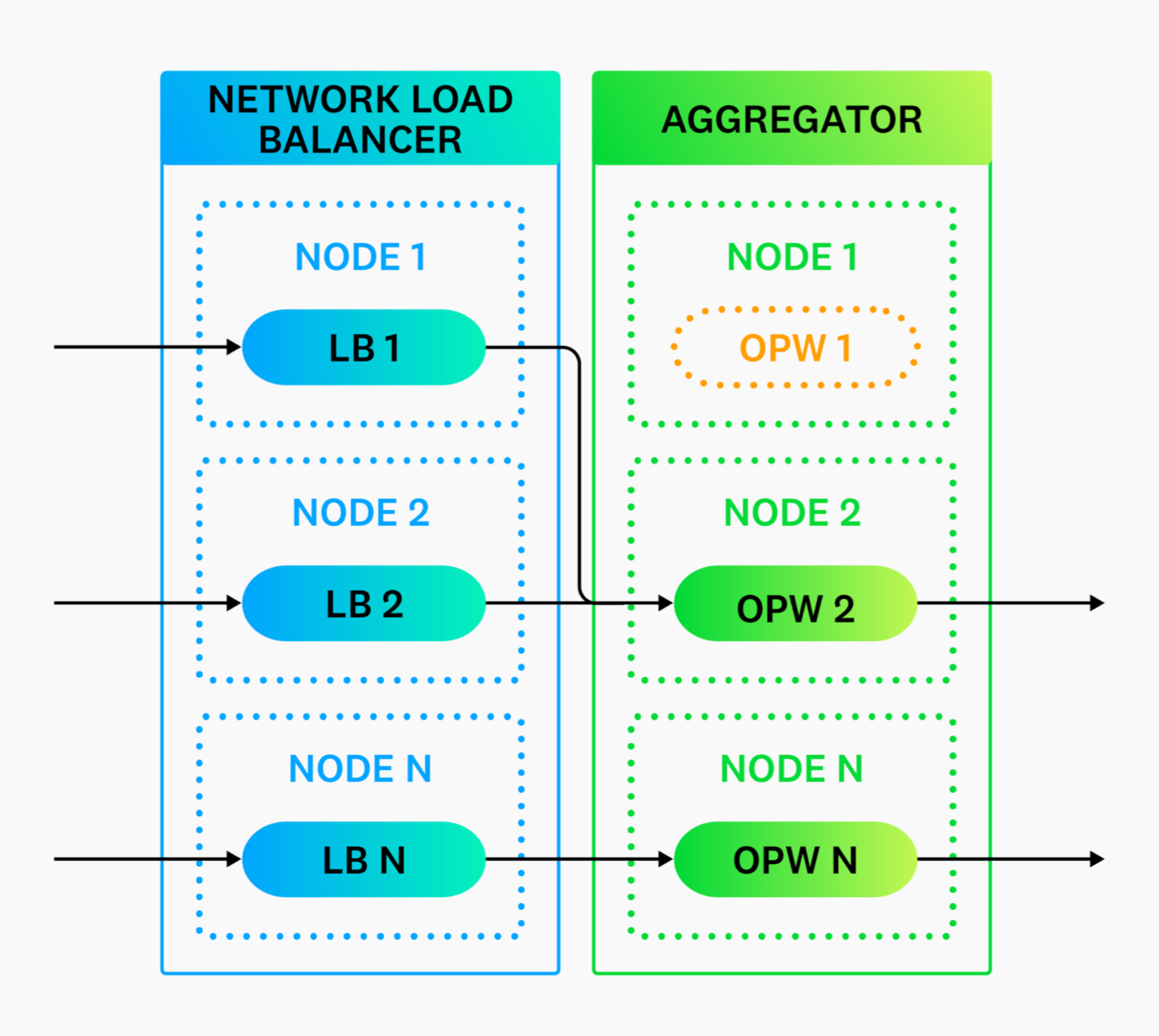

システムプロセスの問題を軽減するには、観測可能性パイプラインワーカーを複数のノードに分散し、必要に応じて別の観測可能性パイプラインワーカーインスタンスにトラフィックをリダイレクトできるネットワークロードバランサーで前面化します。さらに、プラットフォームレベルの自動自己修復機能により、最終的にはプロセスを再起動するか、ノードを交換する必要があります。

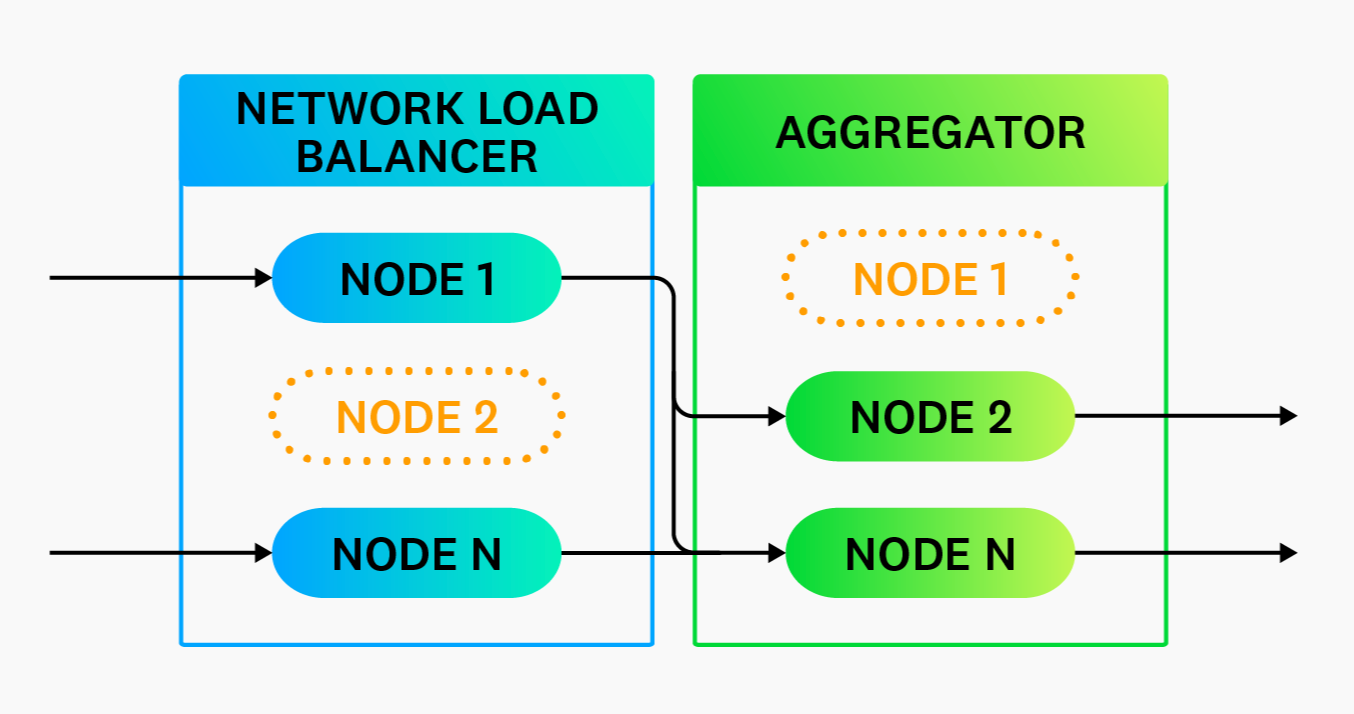

ノード障害の軽減

ノードの問題を軽減するには、観測可能性パイプラインワーカーを複数のノードに分散し、別の観測可能性パイプラインワーカーノードにトラフィックをリダイレクトできるネットワークロードバランサーで前面化します。さらに、プラットフォームレベルの自動自己修復機能により、最終的にはノードを交換する必要があります。

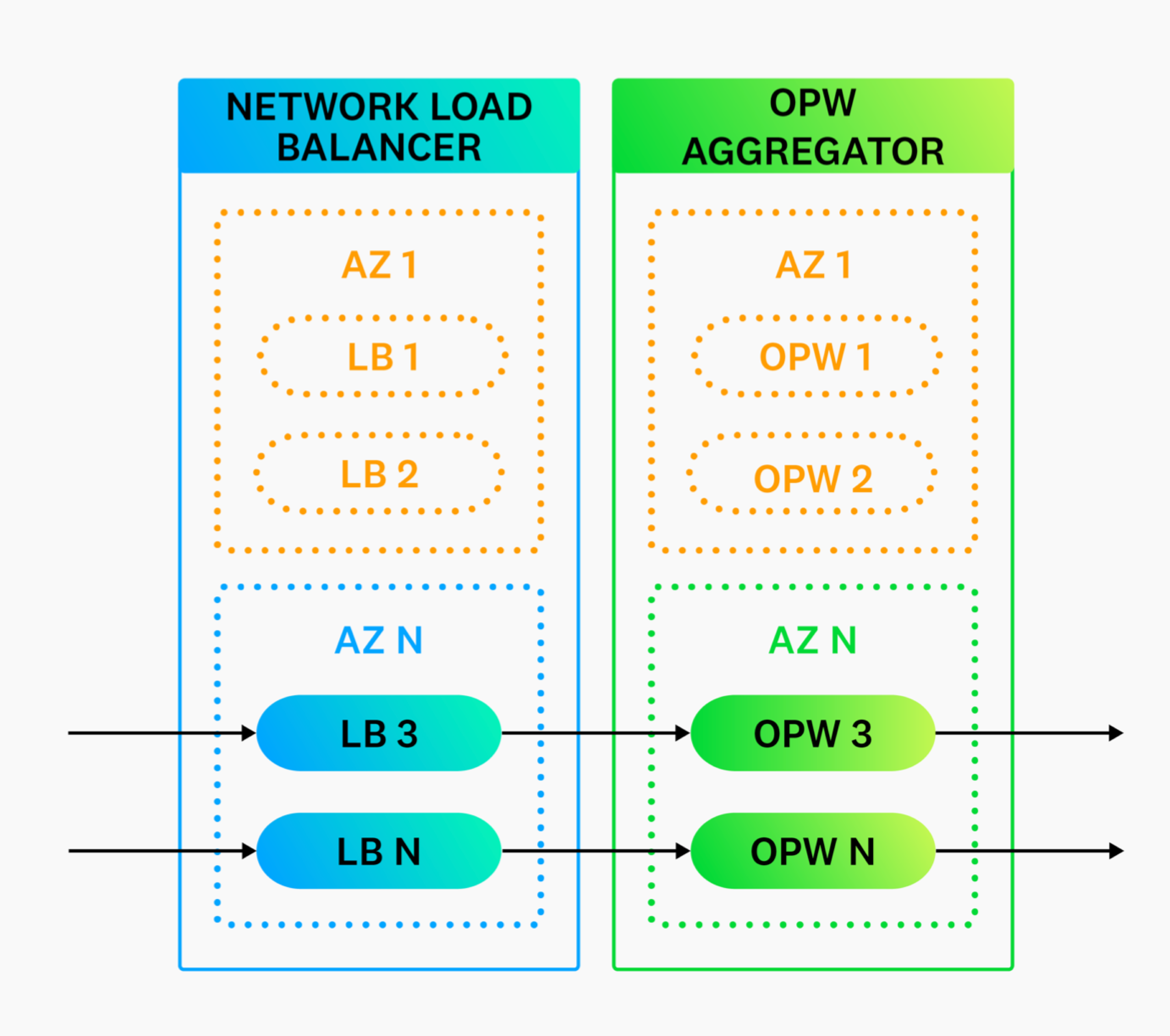

アベイラビリティゾーン障害への対応

アベイラビリティゾーンの問題を軽減するために、複数のアベイラビリティゾーンに観測可能性パイプラインワーカーをデプロイします。

リージョン障害の軽減

観測可能性パイプラインワーカーは、内部の観測可能性データをルーティングするために設計されており、他のリージョンにフェイルオーバーするべきではありません。その代わりに、観測可能性パイプラインワーカーは、全てのリージョンにデプロイされるべきです。そのため、ネットワーク全体やリージョンに障害が発生した場合、観測可能性パイプラインワーカーも一緒に障害になります。詳しくはネットワーキングをご覧ください。

災害復旧

内部災害復旧

観測可能性パイプラインワーカーは、内部の観測可能性データをルーティングするために設計されたインフラストラクチャーレベルのツールです。シェアードナッシングアーキテクチャを実装しており、災害復旧 (DR) サイトに複製または転送されるべき状態を管理しません。そのため、リージョン全体が障害になった場合、観測可能性パイプラインワーカーも一緒に障害になります。したがって、より広範な DR 計画の一環として、DR サイトに観測可能性パイプラインワーカーをインストールする必要があります。

外部災害復旧

Datadog のようなマネージドデスティネーションを使用している場合、観測可能性パイプラインワーカーのサーキットブレーカー機能を使用して、Datadog DR サイトへのデータの自動ルーティングを容易にすることができます。