Test Impact Analysis for JavaScript and TypeScript

Overview

Test Impact Analysis for JavaScript skips entire test suites (test files) rather than individual tests.

Compatibility

Test Impact Analysis is only supported in the following versions and testing frameworks:

- jest>=24.8.0
  - From dd-trace>=4.17.0 or dd-trace>=3.38.0.
  - Only jest-circus/runner is supported as testRunner.
  - Only jsdom and node are supported as test environments.

- mocha>=5.2.0
  - From dd-trace>=4.17.0 or dd-trace>=3.38.0.
  - Run mocha with nyc to enable code coverage.

- cucumber-js>=7.0.0
  - From dd-trace>=4.17.0 or dd-trace>=3.38.0.
  - Run cucumber-js with nyc to enable code coverage.

- cypress>=6.7.0
  - From dd-trace>=4.17.0 or dd-trace>=3.38.0.
  - Instrument your web application with code coverage.
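The Jest constraints above map onto two standard Jest options. The following is a minimal `jest.config.js` sketch, assuming a Node-only test suite (use `jsdom` as the `testEnvironment` for browser-like tests). The values are illustrative, not a required configuration:

```javascript
// jest.config.js (illustrative sketch)
const jestConfig = {
  // jest-circus is the only runner the compatibility list names as supported.
  testRunner: 'jest-circus/runner',
  // 'node' and 'jsdom' are the supported test environments.
  testEnvironment: 'node',
};

module.exports = jestConfig;
```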

Setup

Test Optimization

Before setting up Test Impact Analysis, set up Test Optimization for JavaScript and TypeScript. If you are reporting data through the Agent, use v6.40 or later, or v7.40 or later.

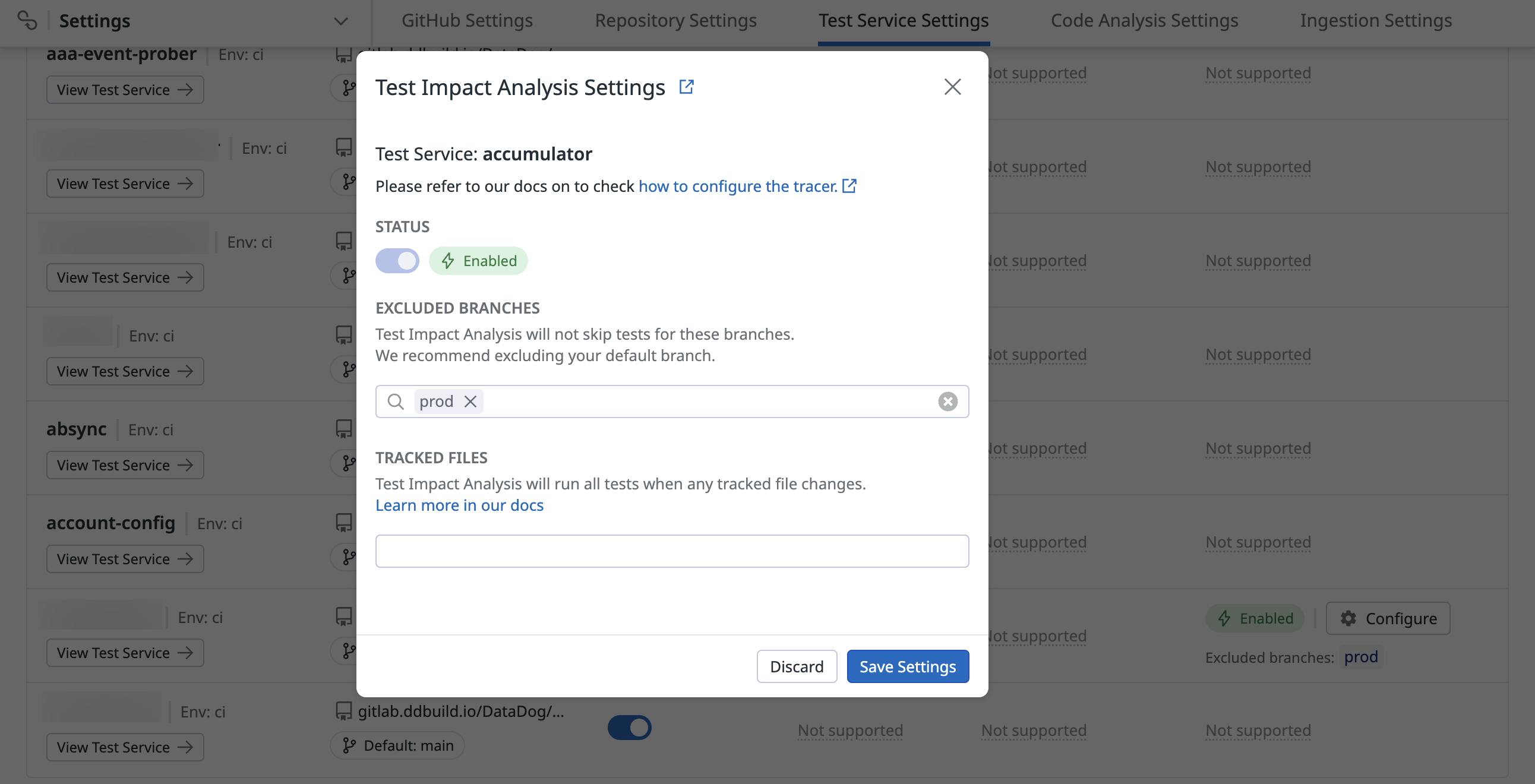

Activate Test Impact Analysis for the test service

You, or a user in your organization with the Intelligent Test Runner Activation (intelligent_test_runner_activation_write) permission, must activate Test Impact Analysis on the Test Service Settings page.

Run tests with Test Impact Analysis enabled

After completing setup, run your tests as you normally do.

If you are reporting data through the Datadog Agent:

NODE_OPTIONS="-r dd-trace/ci/init" DD_ENV=ci DD_SERVICE=my-javascript-app yarn test

If you are reporting data in agentless mode:

NODE_OPTIONS="-r dd-trace/ci/init" DD_ENV=ci DD_SERVICE=my-javascript-app DD_CIVISIBILITY_AGENTLESS_ENABLED=true DD_API_KEY=$DD_API_KEY yarn test

Cypress

For Test Impact Analysis for Cypress to work, you must instrument your web application with code coverage. For more information about enabling code coverage, see the Cypress documentation.
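One common way to instrument a Babel-built application is `babel-plugin-istanbul`, the approach described in the Cypress code coverage guide. The following is a minimal sketch, assuming the app is compiled with Babel and that `@babel/preset-env` and `babel-plugin-istanbul` are installed. The `test` environment name is an assumption, chosen so that production builds stay uninstrumented:

```javascript
// babel.config.js (illustrative sketch)
const babelConfig = {
  presets: ['@babel/preset-env'],
  env: {
    // Applied only when BABEL_ENV (or, failing that, NODE_ENV) is "test".
    test: {
      // Short name for babel-plugin-istanbul, which adds coverage counters.
      plugins: ['istanbul'],
    },
  },
};

module.exports = babelConfig;
```

Other build tools need their own instrumentation step; the requirement is only that the served application exposes Istanbul coverage data.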

To check that you’ve successfully enabled code coverage, navigate to your web app with Cypress and check the window.__coverage__ global variable. This is what dd-trace uses to collect code coverage for Test Impact Analysis.
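This check can also be automated. The sketch below defines a hypothetical helper, `hasCoverage`, that reports whether a window object carries a non-empty `__coverage__` object. The helper name and the Cypress usage shown in the comment are illustrative assumptions, not part of dd-trace:

```javascript
// Returns true when the page exposes a non-empty Istanbul coverage object.
function hasCoverage(win) {
  const coverage = win && win.__coverage__;
  return (
    typeof coverage === 'object' &&
    coverage !== null &&
    Object.keys(coverage).length > 0
  );
}

// Hypothetical usage inside a Cypress spec:
//   cy.visit('/');
//   cy.window().then((win) => {
//     expect(hasCoverage(win)).to.equal(true);
//   });
```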

Inconsistent test durations

In some frameworks, such as jest, there are cache mechanisms that make tests faster after other tests have run (see jest cache docs). If Test Impact Analysis is skipping all but a few test files, these suites might run slower than they usually do. This is because they run with a colder cache. Regardless of this, total execution time for your test command should still be reduced.

Disabling skipping for specific tests

You can override the Test Impact Analysis behavior and prevent specific tests from being skipped. These tests are referred to as unskippable tests.

Why make tests unskippable?

Test Impact Analysis uses code coverage data to determine whether or not tests should be skipped. In some cases, this data may not be sufficient to make this determination.

Examples include:

- Tests that read data from text files

- Tests that interact with APIs outside of the code being tested (such as remote REST APIs)

Designating tests as unskippable ensures that Test Impact Analysis runs them regardless of coverage data.
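To see why coverage data can fall short, consider a deliberately simplified model of coverage-based skipping. This is not Datadog's actual algorithm; the function name, data shapes, and file names are assumptions made for illustration. A suite runs only if a changed file appears in its recorded coverage, or if it is marked unskippable:

```javascript
// Simplified model of coverage-based skipping; not Datadog's actual algorithm.
// `coverageBySuite` maps a test file to the source files it executed on a
// previous run. `changedFiles` lists files modified by the current commit.
function selectSuitesToRun(coverageBySuite, changedFiles, unskippable = []) {
  const changed = new Set(changedFiles);
  const forced = new Set(unskippable);
  return Object.keys(coverageBySuite).filter(
    (suite) =>
      forced.has(suite) ||
      coverageBySuite[suite].some((file) => changed.has(file))
  );
}

const coverageBySuite = {
  'sum.test.js': ['src/sum.js'],
  'payload.test.js': ['src/process-payload.js'],
};

// Only a fixture changed: coverage alone selects no suites.
const byCoverage = selectSuitesToRun(coverageBySuite, ['fixtures/payload.json']);

// Marking the suite unskippable forces it to run.
const withOverride = selectSuitesToRun(
  coverageBySuite,
  ['fixtures/payload.json'],
  ['payload.test.js']
);

console.log(byCoverage, withOverride);
```

In this model, a change to a fixture file is invisible to coverage because no instrumented source file changed, so the suite that reads the fixture would be skipped unless it is forced to run.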

Marking tests as unskippable

You can use the following docblock at the top of your test file to mark a suite as unskippable. This prevents any of the tests defined in the test file from being skipped by Test Impact Analysis. This is similar to jest’s testEnvironmentOptions.

/**

* @datadog {"unskippable": true}

*/

describe('context', () => {

it('can sum', () => {

expect(1 + 2).to.equal(3)

})

})

You can use the @datadog:unskippable tag in your feature file to mark it as unskippable. This prevents any of the scenarios defined in the feature file from being skipped by Test Impact Analysis.

@datadog:unskippable

Feature: Greetings

Scenario: Say greetings

When the greeter says greetings

Then I should have heard "greetings"

Examples of tests that should be unskippable

This section shows some examples of tests that should be marked as unskippable.

Tests that depend on fixtures

/**

* We have a `payload.json` fixture file in `./fixtures/payload`

* that is processed by `processPayload` and put into a snapshot.

* Changes in `payload.json` do not affect the test code coverage but can

* make the test fail.

*/

/**

* @datadog {"unskippable": true}

*/

import processPayload from './process-payload';

import payload from './fixtures/payload';

it('can process payload', () => {

expect(processPayload(payload)).toMatchSnapshot();

});

Tests that communicate with external services

/**

* We query an external service running outside the context of

* the test.

* Changes in this external service do not affect the test code coverage

* but can make the test fail.

*/

/**

* @datadog {"unskippable": true}

*/

it('can query data', (done) => {

fetch('https://www.external-service.com/path')

.then((res) => res.json())

.then((json) => {

expect(json.data[0]).toEqual('value');

done();

});

});

# As in the example above, this scenario calls an external service

@datadog:unskippable

Feature: Process the payload

Scenario: Server responds correctly

When the server responds correctly

Then I should have received "value"
