Introduction to Integrations

Overview

This is a guide for using integrations. If you are looking for information about building a new integration, see the Create a new integration page.

An integration, at the highest level, is when you assemble a unified system from units that are usually considered separately. At Datadog, you can use integrations to bring together all of the metrics and logs from your infrastructure and gain insight into the unified system as a whole—you can see pieces individually and also how individual pieces are impacting the whole.

Note: It’s best to start collecting metrics on your projects as early in the development process as possible, but you can start at any stage.

Datadog provides three main types of integrations:

- Agent-based integrations are installed with the Datadog Agent and use a Python class method called check to define the metrics to collect.

- Authentication (crawler) based integrations are set up in Datadog where you provide credentials for obtaining metrics with the API. These include popular integrations like Slack, AWS, Azure, and PagerDuty.

- Library integrations use the Datadog API to allow you to monitor applications based on the language they are written in, like Node.js or Python.

You can also build a custom check to define and send metrics to Datadog from your unique in-house system.

Setting up an integration

The Datadog Agent package includes integrations officially supported by Datadog, in integrations core. To use those integrations, download the Datadog Agent. Community-based integrations are in integrations extras. For more information on installing or managing these integrations, see the integrations management guide.

Permissions

The Integrations Manage permission is required to interact with an Integration tile. See RBAC roles for more information.

API and application keys

To install the Datadog Agent, you need an API key. If the Agent is already downloaded, make sure to set up the API key in the datadog.yaml file. To use most additional Datadog functionality besides submitting metrics and events, you need an application key. You can manage your account’s API and application keys in the API Settings page.
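As a rough illustration, the API key entry in datadog.yaml might look like the fragment below. The value is a placeholder, not a real key.

```yaml
# datadog.yaml (main Agent configuration file)
# Placeholder value: replace with the API key for your account.
api_key: <YOUR_API_KEY>
```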

Installation

If you want to connect with a crawler or library based integration, navigate to that provider on the Integrations page for specific instructions on how to connect. For other supported integrations, install the Datadog Agent. Most integrations are supported for the containerized Agents: Docker and Kubernetes. After you’ve downloaded the Agent, go to the Integrations page to find specific configuration instructions for individual integrations.

Configuring Agent integrations

Most configuration parameters are specific to the individual integration. Configure Agent integrations by navigating to the conf.d folder at the root of your Agent’s configuration directory. Each integration has a folder named <INTEGRATION_NAME>.d, which contains the file conf.yaml.example. This example file lists all available configuration options for the particular integration.

To activate a given integration:

- Rename the conf.yaml.example file (in the corresponding <INTEGRATION_NAME>.d folder) to conf.yaml.

- Update the required parameters inside the newly created configuration file with the values corresponding to your environment.

- Restart the Datadog Agent.

Note: All configuration files follow the format documented under @param specification.

For example, this is the minimum conf.yaml configuration file needed to collect metrics and logs from the apache integration:

    init_config:
      service: apache

    instances:
      - apache_status_url: http://localhost/server-status?auto

    logs:
      - type: file
        path: /var/log/apache2/access.log
        source: apache
        sourcecategory: http_web_access
      - type: file
        path: /var/log/apache2/error.log
        source: apache
        sourcecategory: http_web_access

To monitor multiple Apache instances in the same Agent check, add additional instances to the instances section:

    init_config:

    instances:
      - apache_status_url: "http://localhost/server-status?auto"
        service: local-apache

      - apache_status_url: "http://<REMOTE_APACHE_ENDPOINT>/server-status?auto"
        service: remote-apache

Collection interval

The default collection interval for all Datadog standard integrations is 15 seconds. To change the collection interval, use the parameter min_collection_interval. For more details, see Updating the collection interval.
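A minimal sketch of a longer interval for the Apache check follows. It assumes the parameter is set per instance, which is where recent Agent versions expect it, and 30 seconds is an arbitrary illustrative value.

```yaml
# conf.d/apache.d/conf.yaml
init_config:

instances:
  - apache_status_url: http://localhost/server-status?auto
    # Illustrative value: ask the Agent to run this instance about every 30 seconds.
    min_collection_interval: 30
```

The value is a minimum. The Agent attempts to run the check at that interval, but a busy collector may run it less often.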

Tagging

Tagging is a key part of filtering and aggregating the data coming into Datadog across many sources. For more information about tagging, see Getting started with tags.

If you define tags in the datadog.yaml file, they are applied to all of your integration data. After you define a tag in datadog.yaml, all new integrations inherit it.

For example, setting service in your config file is the recommended Agent setup for monitoring separate, independent systems.

To better unify your environment, it is also recommended to configure the env tag in the Agent. To learn more, see Unified Service Tagging.
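As a sketch of Agent-level tagging, the fragment below sets the env tag and two host tags in datadog.yaml. The tag names and values are hypothetical; substitute ones that match your environment.

```yaml
# datadog.yaml
# Hypothetical values chosen for illustration only.
env: staging
tags:
  - team:web
  - region:us-east-1
```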

Per-check tag configuration

You can customize tag behavior for individual checks, overriding the global Agent-level settings:

Disable Autodiscovery tags

By default, the metrics reported by integrations include tags automatically detected from the environment. For example, the metrics reported by a Redis check that runs inside a container include tags associated with the container, such as image_name. You can turn this behavior off by setting the ignore_autodiscovery_tags parameter to true.

Set tag cardinality per integration check

You can define the level of tag cardinality (low, orchestrator, or high) on a per-check basis using the check_tag_cardinality parameter. This overrides the global tag cardinality setting defined in the Agent configuration.

    init_config:

    # Ignores tags coming from autodiscovery
    ignore_autodiscovery_tags: true

    # Override global tag cardinality setting
    check_tag_cardinality: low

    # Rest of the config here

For containerized environments, you can also set these parameters through Kubernetes Autodiscovery annotations.
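The following is a sketch only of how such an annotation could look for a Redis container. It assumes the Autodiscovery annotation format that takes a JSON body under a `.checks` key, and the pod name, container name, and image are hypothetical. Confirm the exact annotation layout for your Agent version in the Kubernetes Autodiscovery documentation before relying on it.

```yaml
# Pod manifest excerpt. The container name "redis" is a hypothetical example;
# the annotation key must reference the name of the container to monitor.
apiVersion: v1
kind: Pod
metadata:
  name: redis
  annotations:
    ad.datadoghq.com/redis.checks: |
      {
        "redisdb": {
          "ignore_autodiscovery_tags": true,
          "instances": [
            {"host": "%%host%%", "port": "6379"}
          ]
        }
      }
spec:
  containers:
    - name: redis
      image: redis:latest
```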

Validation

To validate your Agent and integrations configuration, run the Agent’s status subcommand, and look for new configuration under the Checks section.

Installing multiple integrations

Installing more than one integration is a matter of adding the configuration information to a new conf.yaml file in the corresponding <INTEGRATION_NAME>.d folder. Look up the required parameters for the new integration in its conf.yaml.example file, add them to the new conf.yaml file, and then follow the same steps to validate your configuration.
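For instance, adding NGINX next to an existing Apache setup could mean creating a second file like the one below. This is a sketch that assumes the NGINX status page is exposed at the URL shown; check the integration's conf.yaml.example for the parameters your version requires.

```yaml
# conf.d/nginx.d/conf.yaml (a second integration alongside conf.d/apache.d/conf.yaml)
init_config:

instances:
  # Assumed status endpoint: adjust host, port, and path to your NGINX setup.
  - nginx_status_url: http://localhost:81/nginx_status/
```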

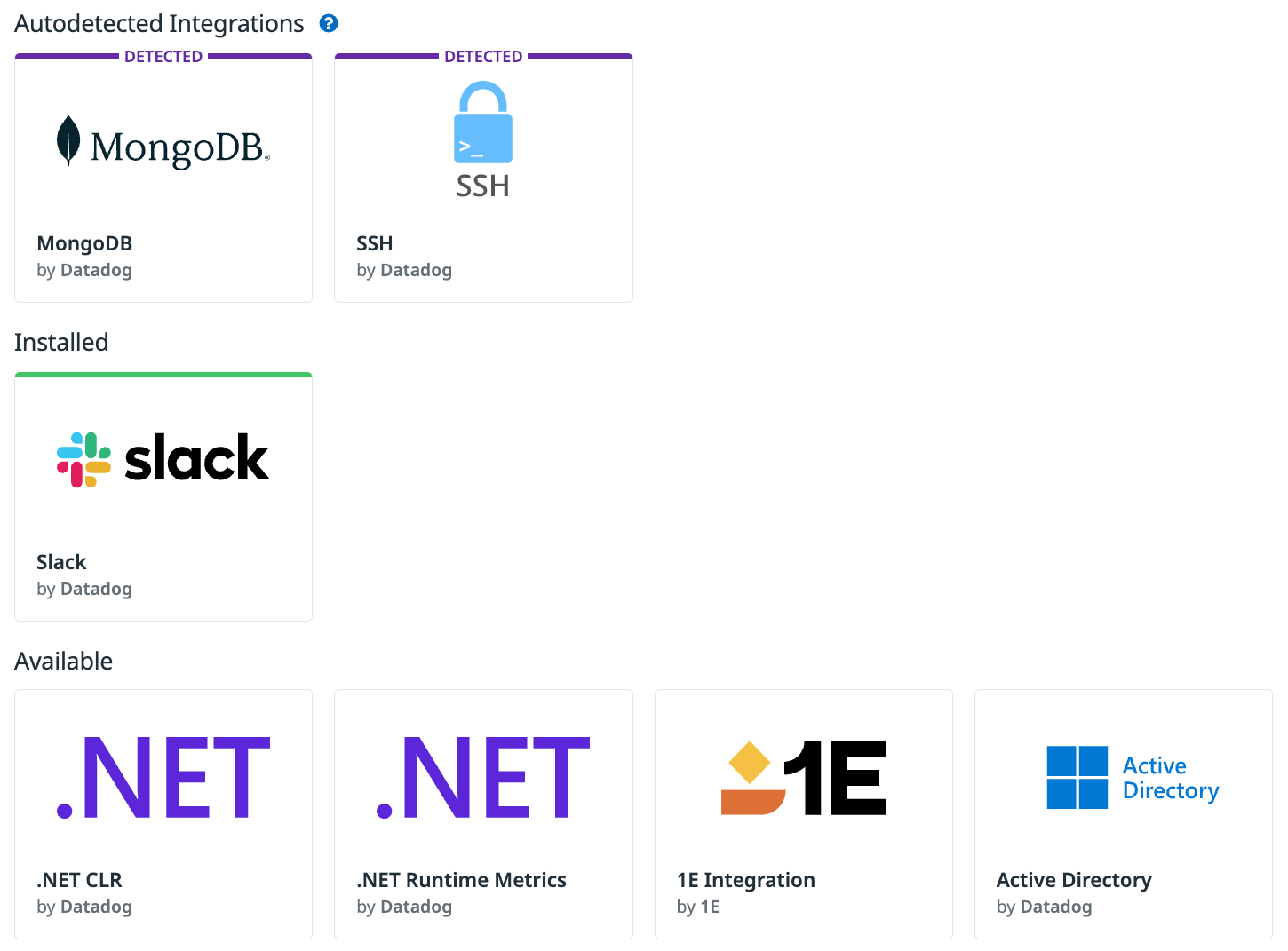

Autodetected integrations

If you set up process collection, Datadog autodetects technologies running on your hosts. This identifies Datadog integrations that can help you monitor these technologies. These auto-detected integrations are displayed in the Integrations search:

Each integration has one of four status types:

- Detected: The technology is running on a host, but the integration has not been installed or configured and only partial metrics are being collected. Configure the integration for full coverage. To find a list of hosts that are running an autodetected technology, open the integrations tile and select the Hosts tab.

- Installed: This integration is installed and configured on a host.

- Available: All integrations that do not fall into the Installed and Detected categories.

- Missing Data: Integration metrics have not been detected in the last 24 hours.

Security practices

For information on how Datadog handles your data, and other security considerations, see the Security documentation.

Granular access control

By default, access to integration resources (accounts, services, webhooks) is unrestricted. Granular access controls can be used to restrict the behavior of users, teams, roles, or your full organization at the integration resource level.

Note: The restricted access option is only visible if the integration supports granular access control. To verify if granular access control is supported for an integration, review that integration’s documentation.

- While viewing an integration, navigate to the Configure tab and locate the resource (account, service, webhook) that should have granular access controls applied.

- Click Set Permissions.

- By default, everyone in your org has full access. Click Restrict Access.

- The dialog box updates to show that members of your organization have Viewer access by default.

- Use the dropdown to select one or more teams, roles, or users that may edit the resource. Note: The Integrations Manage permission is also required to edit individual resources.

- Click Add.

- The dialog box updates to show the updated permissions.

- Click Save. The integration page automatically refreshes with updated permissions.

Note: To maintain edit access to the resource, the system requires you to include at least one role or team that you are a member of before saving.

To restore general access to an integration resource with restricted access, follow the steps below:

- While viewing an integration, navigate to the Configure tab and locate the resource (account, service, webhook) that should have general access restored.

- Click Set Permissions.

- Click Restore Full Access.

- Click Save. The integration page automatically refreshes with updated permissions.

What’s next?

After your first integration is set up, explore all of the metrics being sent to Datadog by your application, and use these metrics to begin setting up dashboards and alerts to monitor your data.

Also check out Datadog’s Log Management, APM, and Synthetic Monitoring solutions.

Troubleshooting

The first step to troubleshooting an integration is to use a plugin in your code editor or use one of the many online tools to verify that the YAML is valid. The next step is to run through all of the Agent troubleshooting steps.

If you continue to have problems, contact Datadog support.

Key terms

- conf.yaml: You create the conf.yaml file in the conf.d/<INTEGRATION_NAME>.d folder at the root of your Agent’s configuration directory. Use this file to connect integrations to your system, as well as configure their settings.

- custom check: If you have a unique system that you want to monitor, or if you’re going to expand the metrics already sent by an integration, you can build a custom check to define and send metrics to Datadog. However, if you want to monitor a generally available application, public service, or an open source project and the integration doesn’t exist, consider building a new integration instead of a custom check.

- datadog.yaml: This is the main configuration file, where you define how the Agent as a whole interacts with its own integrations and with your system. Use this file to update API keys, proxies, host tags, and other global settings.

- event: Events are informational messages about your system that are consumed by the events explorer so that you can build monitors on them.

- instance: You define and map the instance of whatever you are monitoring in the conf.yaml file. For example, in the http_check integration, you define the name associated with the instance of the HTTP endpoint whose uptime and downtime you are monitoring. You can monitor multiple instances in the same integration by defining all of them in the conf.yaml file.

- <INTEGRATION_NAME>.d: If you have a complex configuration, you can break it down into multiple YAML files and store them all in the <INTEGRATION_NAME>.d folder. The Agent loads any valid YAML file in the <INTEGRATION_NAME>.d folder.

- logging: If the system you are monitoring has logs, customize the logs you are sending to Datadog by using the Log Management solution.

- metadata.csv: The file that lists and stores the metrics collected by each integration.

- metrics: The list of what is collected from your system by each integration. You can find the metrics for each integration in that integration’s metadata.csv file. For more information about metrics, see the Metrics developer page. You can also set up custom metrics, so if the integration doesn’t offer a metric out of the box, you can usually add it.

- parameters: Use the parameters in the conf.yaml file to control access between your integration data source and the Agent. Each integration’s conf.yaml.example file lists all of the required and optional parameters.

- service check: Service checks are a type of monitor used to track the uptime status of a service. For more information, see the Service checks guide.

- tagging: Tags are a way to add customization to metrics so that you can filter and visualize them in the most useful way to you.
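To make the instance term concrete, here is a sketch of an http_check configuration that monitors two endpoints from one file. The names and URLs are hypothetical.

```yaml
# conf.d/http_check.d/conf.yaml
init_config:

instances:
  # Each list item is one instance; names and URLs are illustrative.
  - name: Example homepage
    url: https://example.com
  - name: Example API
    url: https://api.example.com/health
```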
