
Test Impact Analysis for Python


Compatibility

Test Impact Analysis is only supported in the following versions and testing frameworks:

- pytest>=7.2.0
  - From ddtrace>=2.1.0.
  - From Python>=3.7.
  - Requires coverage>=5.5.
  - Incompatible with pytest-cov (see known limitations).
- unittest
  - From ddtrace>=2.2.0.
  - From Python>=3.7.
- coverage
  - Incompatible for coverage collection (see known limitations).
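If you want CI to fail fast when the environment does not match the list above, a small pre-flight script can compare installed versions against the pytest minimums. This is a minimal sketch, not a Datadog tool: `check_environment`, `parse_version`, and `meets_minimum` are hypothetical names, the script itself needs Python 3.8+ because it uses `importlib.metadata`, and it only parses plain numeric release numbers (pre-release suffixes such as `rc1` are ignored). For unittest, you would raise `ddtrace` to `(2, 2, 0)` and drop the `pytest` entry.

```python
import sys
from importlib import metadata

# Minimums copied from the pytest entry of the compatibility list.
PYTEST_REQUIREMENTS = {
    "pytest": (7, 2, 0),
    "ddtrace": (2, 1, 0),
    "coverage": (5, 5),
}
MIN_PYTHON = (3, 7)


def parse_version(text):
    """Return the leading numeric release segment: '2.1.0rc1' -> (2, 1, 0)."""
    parts = []
    for piece in text.split("."):
        digits = ""
        for char in piece:
            if not char.isdigit():
                break
            digits += char
        if not digits:
            break
        parts.append(int(digits))
        if len(digits) < len(piece):
            break  # pre-release or local suffix: stop here
    return tuple(parts)


def meets_minimum(installed, minimum):
    """Compare version tuples after padding the shorter one with zeros."""
    width = max(len(installed), len(minimum))

    def pad(version):
        return version + (0,) * (width - len(version))

    return pad(installed) >= pad(minimum)


def _fmt(version):
    return ".".join(str(part) for part in version)


def check_environment(requirements=PYTEST_REQUIREMENTS, min_python=MIN_PYTHON):
    """Return a list of human-readable problems; an empty list means OK."""
    problems = []
    if sys.version_info[:2] < min_python:
        problems.append(f"Python {_fmt(min_python)} or later is required")
    for package, minimum in requirements.items():
        try:
            installed = parse_version(metadata.version(package))
        except metadata.PackageNotFoundError:
            problems.append(f"{package} is not installed")
            continue
        if not meets_minimum(installed, minimum):
            problems.append(f"{package} {_fmt(installed)} is older than {_fmt(minimum)}")
    # pytest-cov is listed as incompatible, so flag it if present.
    try:
        metadata.version("pytest-cov")
        problems.append("pytest-cov is installed (see known limitations)")
    except metadata.PackageNotFoundError:
        pass
    return problems


if __name__ == "__main__":
    for problem in check_environment():
        print("WARNING:", problem)
```

Run it as a step before the test command; an empty output means every listed requirement was met.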

Setup

Test Optimization

Prior to setting up Test Impact Analysis, set up Test Optimization for Python. If you are reporting data through the Agent, use v6.40 and later or v7.40 and later.

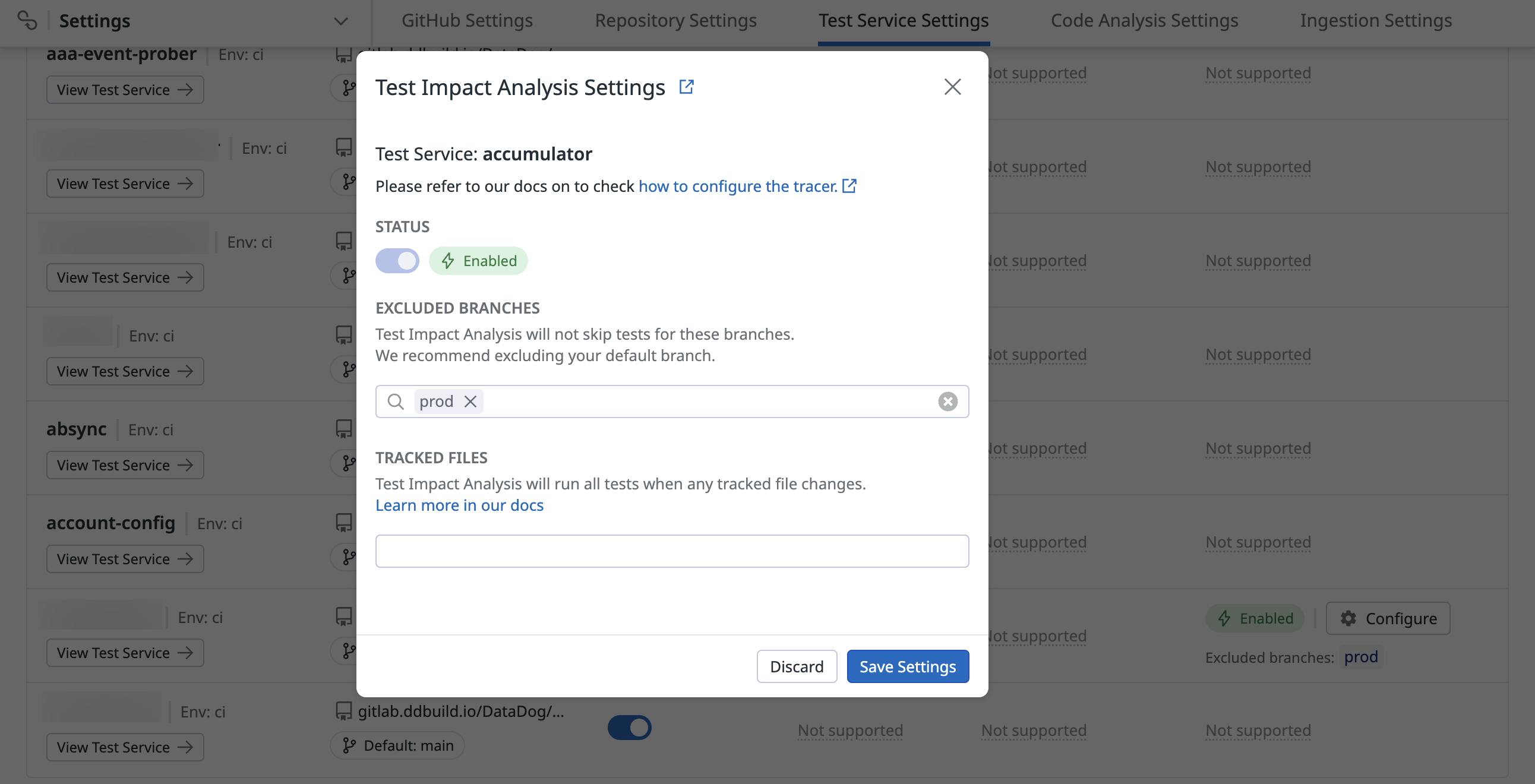

Activate Test Impact Analysis for the test service

You, or a user in your organization with the Intelligent Test Runner Activation (intelligent_test_runner_activation_write) permission, must activate Test Impact Analysis on the Test Service Settings page.

Required dependencies

Test Impact Analysis requires the coverage package.

Install the package in your CI test environment, for example by adding it to the relevant requirements file or by using pip:

```shell
pip install coverage
```

See known limitations if you are already using the coverage package or a plugin like pytest-cov.

Running tests with Test Impact Analysis enabled

Test Impact Analysis is enabled when you run tests with the Datadog integration active. Run your tests with one of the following commands:

pytest:

```shell
DD_ENV=ci DD_SERVICE=my-python-app pytest --ddtrace
```

unittest:

```shell
DD_ENV=ci DD_SERVICE=my-python-app ddtrace-run python -m unittest
```

Temporarily disabling Test Impact Analysis

Test Impact Analysis can be disabled locally by setting the DD_CIVISIBILITY_ITR_ENABLED environment variable to false or 0.

DD_CIVISIBILITY_ITR_ENABLED (optional)
Enables the Test Impact Analysis coverage and test skipping features.
Default: true
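If your CI launches tests from a Python script (for example a nox or invoke task), the environment variables and commands on this page can be reproduced with `subprocess`. This is a minimal sketch; `datadog_test_env`, `build_command`, and `run_tests` are hypothetical helper names. It runs pytest as `python -m pytest`, which behaves like the `pytest` command but also adds the current directory to `sys.path`, and it assumes `ddtrace-run` is on `PATH` for unittest.

```python
import os
import subprocess
import sys


def datadog_test_env(service, env="ci", itr_enabled=True, base=None):
    """Copy the current (or given) environment and add the Datadog variables."""
    result = dict(os.environ if base is None else base)
    result["DD_ENV"] = env
    result["DD_SERVICE"] = service
    # "false" (or "0") disables Test Impact Analysis; "true" matches the default.
    result["DD_CIVISIBILITY_ITR_ENABLED"] = "true" if itr_enabled else "false"
    return result


def build_command(framework):
    """Return the pytest or unittest command line used on this page."""
    if framework == "pytest":
        return [sys.executable, "-m", "pytest", "--ddtrace"]
    if framework == "unittest":
        # ddtrace-run wraps the Python interpreter that runs unittest.
        return ["ddtrace-run", sys.executable, "-m", "unittest"]
    raise ValueError(f"unsupported framework: {framework!r}")


def run_tests(framework, service, itr_enabled=True):
    """Run the test suite with the Datadog variables set; return its exit code."""
    completed = subprocess.run(
        build_command(framework),
        env=datadog_test_env(service, itr_enabled=itr_enabled),
        check=False,
    )
    return completed.returncode
```

For example, `run_tests("pytest", "my-python-app", itr_enabled=False)` corresponds to the shell command that disables Test Impact Analysis for pytest.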

Run one of the following commands to disable Test Impact Analysis:

pytest:

```shell
DD_ENV=ci DD_SERVICE=my-python-app DD_CIVISIBILITY_ITR_ENABLED=false pytest --ddtrace
```

unittest:

```shell
DD_ENV=ci DD_SERVICE=my-python-app DD_CIVISIBILITY_ITR_ENABLED=false ddtrace-run python -m unittest
```

Disabling skipping for specific tests

You can override Test Impact Analysis’s behavior and prevent specific tests from being skipped. These tests are referred to as unskippable tests.

Why make tests unskippable?

Test Impact Analysis uses code coverage data to determine whether or not tests should be skipped. In some cases, this data may not be sufficient to make this determination.

Examples include:

- Tests that read data from text files

- Tests that interact with APIs outside of the code being tested (such as remote REST APIs)

Designating tests as unskippable ensures that Test Impact Analysis runs them regardless of coverage data.

Compatibility

Unskippable tests are supported in the following versions:

- pytest
  - From ddtrace>=1.19.0.

Marking tests as unskippable

You can use pytest’s skipif mark to prevent Test Impact Analysis from skipping individual tests or modules. Specify the condition as False, and the reason as "datadog_itr_unskippable".

Individual tests

Individual tests can be marked as unskippable using the @pytest.mark.skipif decorator as follows:

```python
import pytest


@pytest.mark.skipif(False, reason="datadog_itr_unskippable")
def test_function():
    assert True
```

Modules

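An entire module can be marked as unskippable by setting the pytestmark global variable as follows:

```python
import pytest

# Applies the unskippable mark to every test in this module.
pytestmark = pytest.mark.skipif(False, reason="datadog_itr_unskippable")


def test_function():
    assert True
```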

Note: This does not override any other skip marks, or skipif marks that have a condition evaluating to True.

Compatibility

Unskippable tests are supported in the following versions:

- unittest
  - From ddtrace>=2.2.0.

Marking tests as unskippable in unittest

You can use unittest’s skipIf decorator to prevent Test Impact Analysis from skipping individual tests. Specify the condition as False, and the reason as "datadog_itr_unskippable".

Individual tests

Individual tests can be marked as unskippable using the @unittest.skipIf decorator as follows:

```python
import unittest


class MyTestCase(unittest.TestCase):
    @unittest.skipIf(False, reason="datadog_itr_unskippable")
    def test_function(self):
        assert True
```

Using @unittest.skipIf does not override any other skip decorators, or skipIf decorators that have a condition evaluating to True.
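The sketch below combines the file-reading case from "Why make tests unskippable?" with the unittest marking. `datadog_unskippable` and `count_rows` are hypothetical names. The helper simply calls `unittest.skipIf(False, reason="datadog_itr_unskippable")` for each test it decorates, so it is intended to behave the same as writing the decorator by hand while avoiding typos in the reason string; confirm in your own pipeline that tests decorated this way are reported as unskippable.

```python
import os
import tempfile
import unittest

# Reason string that marks a test as unskippable (from the section above).
UNSKIPPABLE_REASON = "datadog_itr_unskippable"


def datadog_unskippable(test_func):
    """Hypothetical helper: same as @unittest.skipIf(False, reason=UNSKIPPABLE_REASON)."""
    return unittest.skipIf(False, reason=UNSKIPPABLE_REASON)(test_func)


def count_rows(path):
    """Code under test: count the non-empty lines in a text data file."""
    with open(path, encoding="utf-8") as handle:
        return sum(1 for line in handle if line.strip())


class DataFileTests(unittest.TestCase):
    def setUp(self):
        # Stand-in for a checked-in data file. Editing its contents does not
        # change which Python lines run, so coverage data cannot detect it.
        fd, self.path = tempfile.mkstemp(suffix=".txt")
        with os.fdopen(fd, "w", encoding="utf-8") as handle:
            handle.write("alpha\nbeta\n\n")

    def tearDown(self):
        os.remove(self.path)

    @datadog_unskippable
    def test_row_count(self):
        self.assertEqual(count_rows(self.path), 2)
```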

Known limitations

Code coverage collection

Interaction with coverage tools

Coverage data may appear incomplete when Test Impact Analysis is enabled. Lines of code that would normally be covered by tests are not covered when those tests are skipped.
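One way to see the effect is to treat a coverage report as the union of lines executed by the tests that actually ran. The per-test data below is made up for illustration: skipping `test_export` removes the only lines it reaches, so the reported percentage drops even though the code itself did not change.

```python
# Made-up per-test coverage: test name -> set of (file, line) pairs it executes.
PER_TEST_COVERAGE = {
    "test_parse": {("app.py", 1), ("app.py", 2), ("app.py", 3)},
    "test_render": {("app.py", 3), ("app.py", 7), ("app.py", 8)},
    "test_export": {("app.py", 10), ("app.py", 11)},
}
# Every executable line in the (made-up) project, including one no test reaches.
EXECUTABLE_LINES = {("app.py", n) for n in (1, 2, 3, 7, 8, 10, 11, 12)}


def coverage_percent(tests_run):
    """Percentage of executable lines hit by the tests that actually ran."""
    covered = set().union(*(PER_TEST_COVERAGE[name] for name in tests_run))
    return 100 * len(covered & EXECUTABLE_LINES) / len(EXECUTABLE_LINES)


full_run = coverage_percent(PER_TEST_COVERAGE)  # all three tests run
skipped_run = coverage_percent(["test_parse", "test_render"])  # test_export skipped
print(f"full: {full_run}%, with test_export skipped: {skipped_run}%")
# prints "full: 87.5%, with test_export skipped: 62.5%"
```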

Interaction with the coverage package

Test Impact Analysis uses the coverage package’s API to collect code coverage. Data from coverage run or plugins like pytest-cov is incomplete as a result of ddtrace’s use of the Coverage class.

Some race conditions may cause exceptions when using pytest plugins such as pytest-xdist that change test execution order or introduce parallelization.
