(LEGACY) High Availability and Disaster Recovery

This guide is for large-scale production-level deployments.

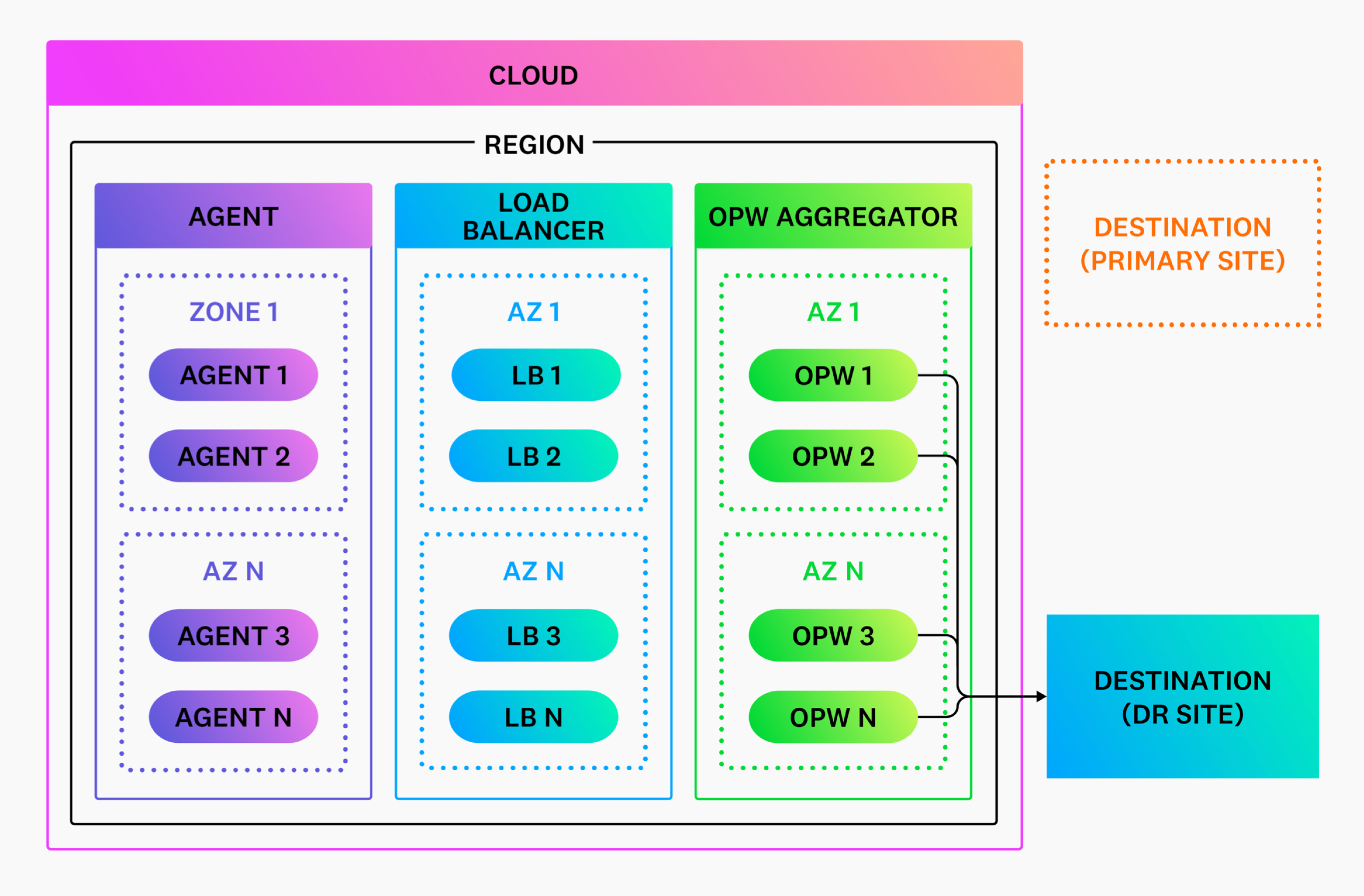

In the context of Observability Pipelines, high availability means that the Observability Pipelines Worker remains available when a system issue occurs, such as a failed process, node, or availability zone.

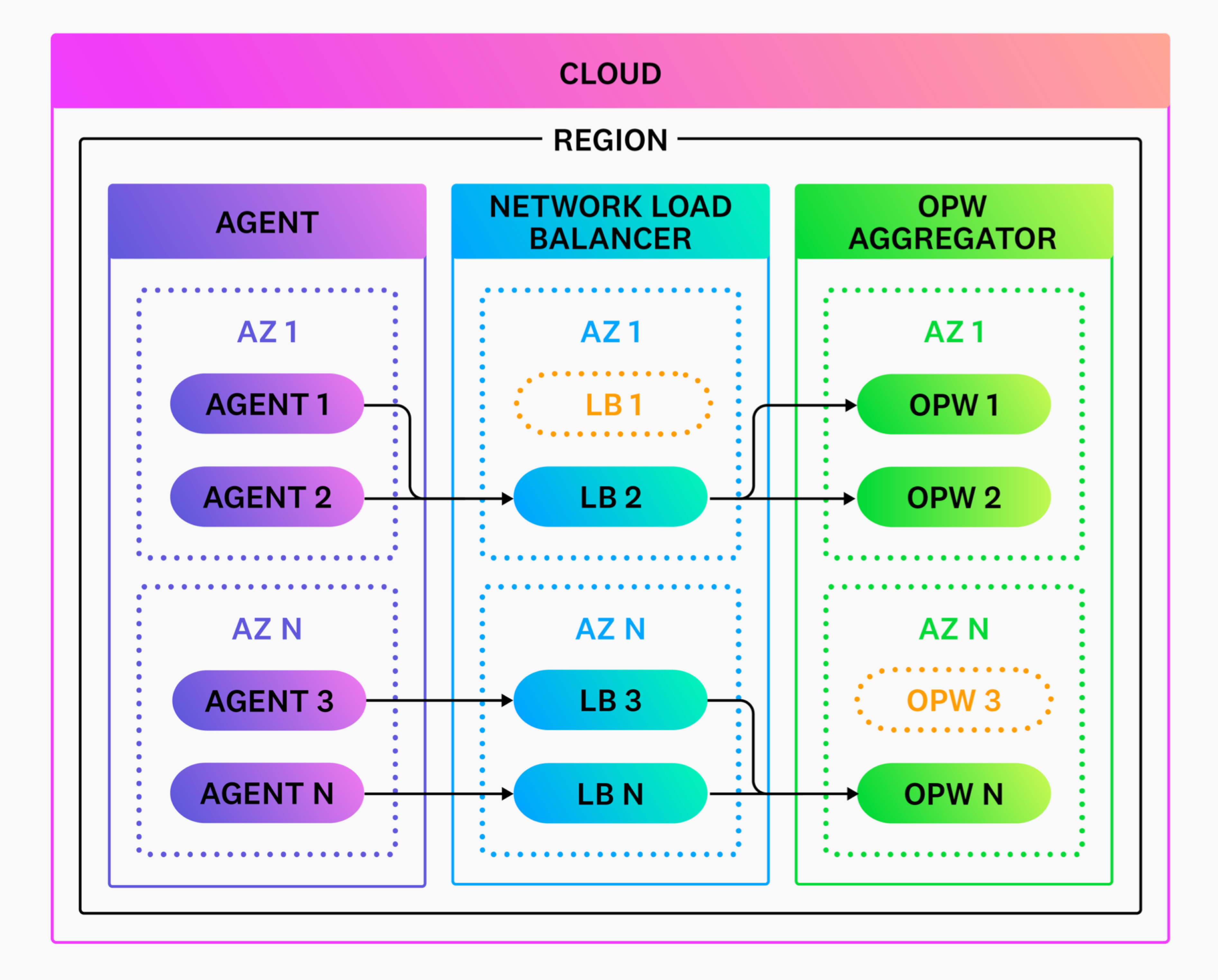

To achieve high availability:

- Deploy at least two Observability Pipelines Worker instances in each Availability Zone.

- Deploy Observability Pipelines Worker in at least two Availability Zones.

- Place a load balancer in front of your Observability Pipelines Worker instances to distribute traffic across them. See Capacity Planning and Scaling for more information.
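
The checklist above can be expressed as a small validation routine. The following is a minimal sketch, not part of Observability Pipelines: the function name `check_high_availability` and its input format (one availability zone name per Worker instance) are assumptions made for illustration.

```python
from collections import Counter


def check_high_availability(worker_zones, min_zones=2, min_per_zone=2):
    """Check a deployment against the high availability checklist.

    worker_zones: one availability zone name per Worker instance
                  (hypothetical input format, for illustration only).
    Returns a list of problems; an empty list means the checklist is met.
    """
    counts = Counter(worker_zones)
    problems = []

    # At least two availability zones must contain Workers.
    if len(counts) < min_zones:
        problems.append(
            f"Workers span {len(counts)} zone(s); need at least {min_zones}"
        )

    # Each zone in use must have at least two Worker instances.
    for zone, count in sorted(counts.items()):
        if count < min_per_zone:
            problems.append(
                f"Zone {zone} has {count} Worker(s); need at least {min_per_zone}"
            )

    return problems


if __name__ == "__main__":
    # Two zones with two Workers each satisfies the checklist.
    print(check_high_availability(["zone-a", "zone-a", "zone-b", "zone-b"]))
    # One zone is short by a Worker.
    print(check_high_availability(["zone-a", "zone-a", "zone-b"]))
```

A check like this could run in a deployment pipeline before rollout. It does not cover the load balancer requirement, which depends on your platform.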

Mitigating failure scenarios

Handling Observability Pipelines Worker process issues

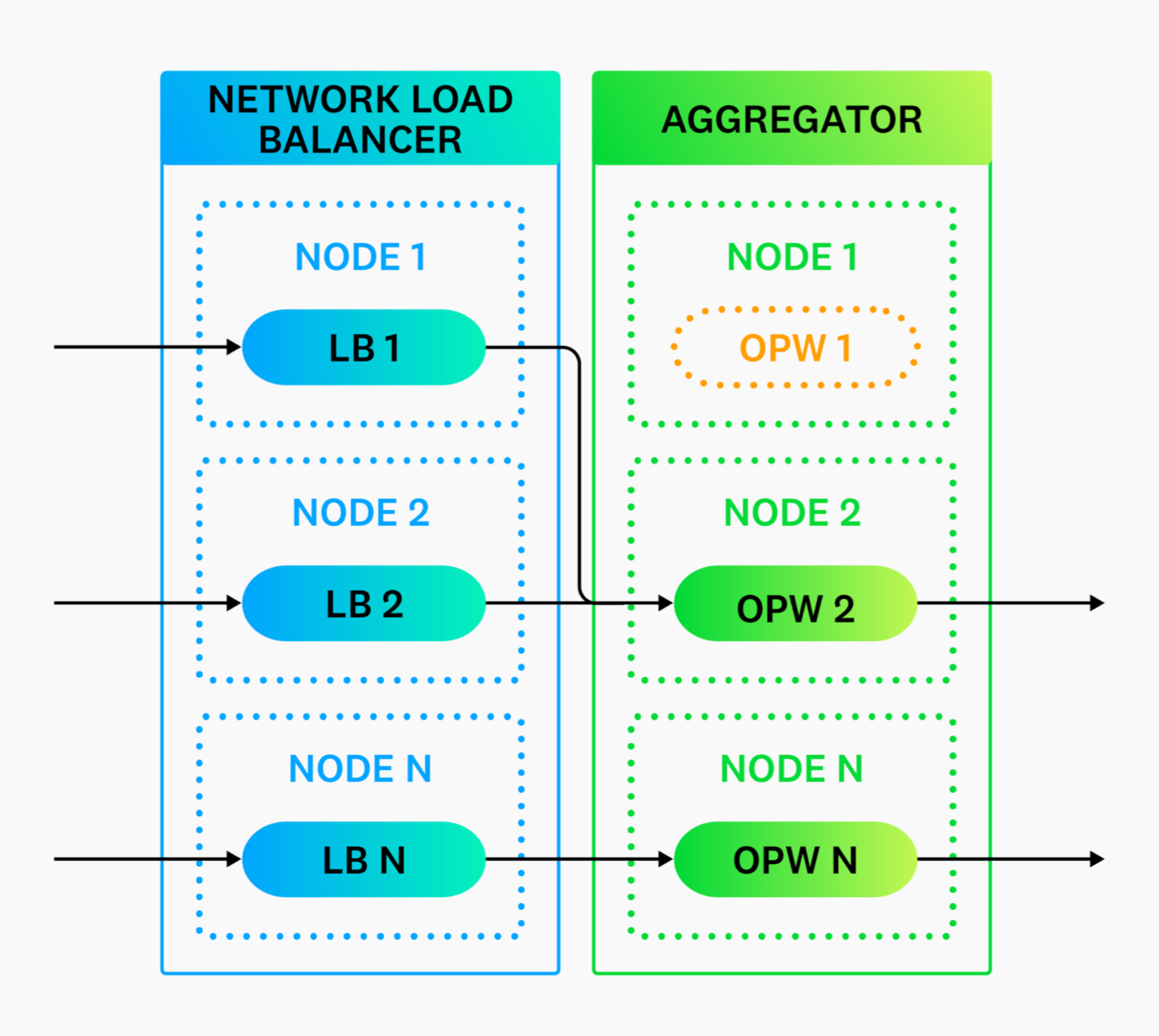

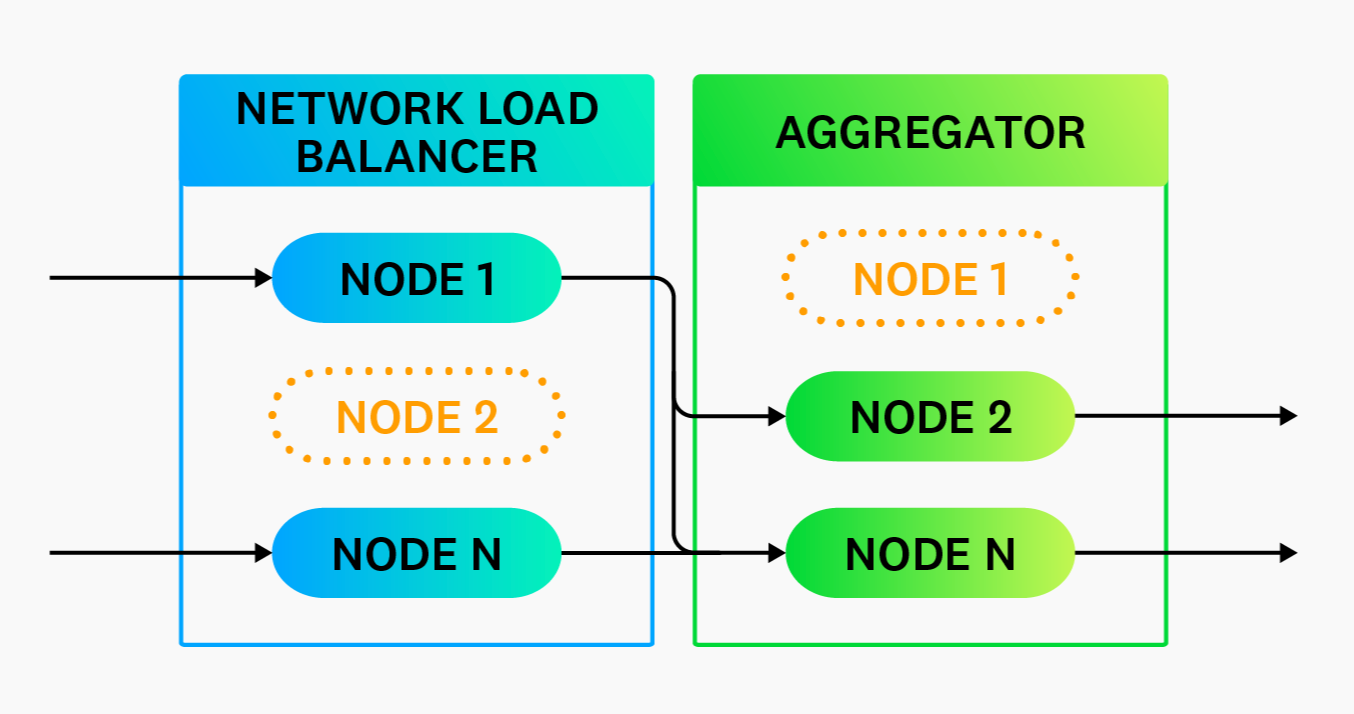

To mitigate a system process issue, distribute the Observability Pipelines Worker across multiple nodes and front them with a network load balancer that can redirect traffic to another Observability Pipelines Worker instance as needed. In addition, platform-level automated self-healing should eventually restart the process or replace the node.

Mitigating node failures

To mitigate node issues, distribute the Observability Pipelines Worker across multiple nodes and front them with a network load balancer that can redirect traffic to another Observability Pipelines Worker node. In addition, platform-level automated self-healing should eventually replace the node.
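
To illustrate how a load balancer redirects traffic away from a failed process or node, here is a minimal sketch of round-robin selection that skips unhealthy targets. The class `RoundRobinBalancer` and the Worker names are hypothetical; a real network load balancer performs its own health checks and is configured through your platform, not through code like this.

```python
class RoundRobinBalancer:
    """Toy round-robin balancer that skips Workers marked as down."""

    def __init__(self, workers):
        self.workers = list(workers)
        self.healthy = set(self.workers)
        self._next = 0  # index of the next Worker to try

    def mark_down(self, worker):
        # Called when a health check fails (process crash or node loss).
        self.healthy.discard(worker)

    def mark_up(self, worker):
        # Called once self-healing has restarted the process or replaced the node.
        if worker in self.workers:
            self.healthy.add(worker)

    def pick(self):
        # Try each Worker at most once, starting from the rotation point.
        for _ in range(len(self.workers)):
            worker = self.workers[self._next]
            self._next = (self._next + 1) % len(self.workers)
            if worker in self.healthy:
                return worker
        raise RuntimeError("no healthy Workers available")


if __name__ == "__main__":
    lb = RoundRobinBalancer(["worker-1", "worker-2", "worker-3"])
    lb.mark_down("worker-2")  # simulate a failed node
    print([lb.pick() for _ in range(4)])
```

While `worker-2` is down, traffic alternates between the remaining two Workers. After `mark_up("worker-2")`, it rejoins the rotation.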

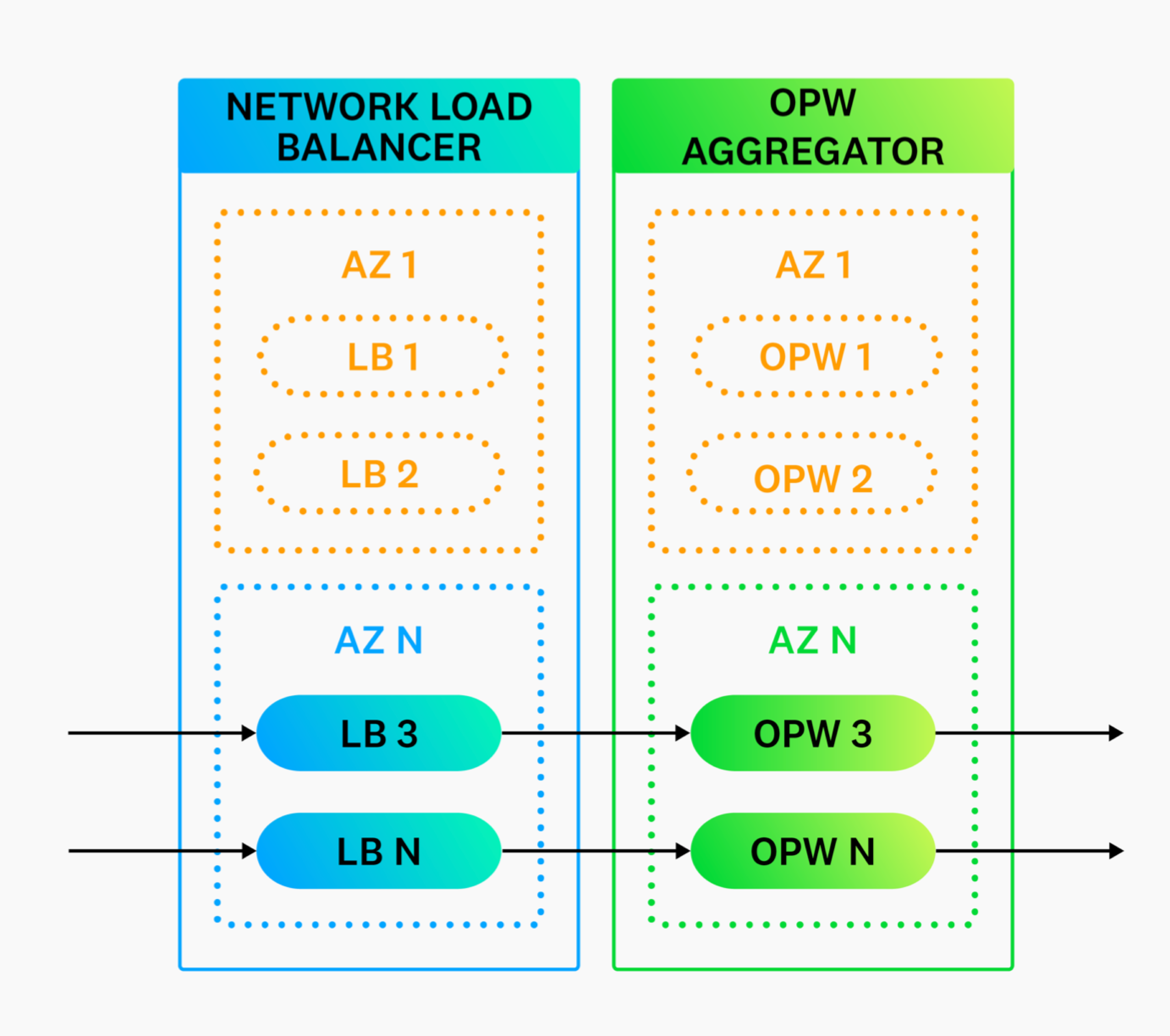

Handling availability zone failures

To mitigate issues with availability zones, deploy the Observability Pipelines Worker across multiple availability zones.

Mitigating region failures

Observability Pipelines Worker is designed to route internal observability data, and it should not fail over to another region. Instead, deploy Observability Pipelines Worker in each of your regions. If an entire network or region fails, the Observability Pipelines Worker in that region fails with it. See Networking for more information.

Disaster recovery

Internal disaster recovery

Observability Pipelines Worker is an infrastructure-level tool designed to route internal observability data. It implements a shared-nothing architecture and does not manage state that should be replicated or transferred to a disaster recovery (DR) site. Therefore, if your entire region fails, Observability Pipelines Worker fails with it. For this reason, install the Observability Pipelines Worker in your DR site as part of your broader DR plan.

External disaster recovery

If you’re using a managed destination, such as Datadog, Observability Pipelines Worker can facilitate automatic routing of data to your Datadog DR site using Observability Pipelines Worker’s circuit breaker feature.
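
This page does not describe how the Worker's circuit breaker operates or how it is configured. As a rough illustration of the general circuit breaker pattern only, the sketch below routes to a DR destination after repeated failures and retries the primary after a cooldown. All names, thresholds, and destination labels here are assumptions, not the Worker's API or configuration.

```python
import time


class CircuitBreaker:
    """Generic circuit breaker (illustrative; not the Worker's implementation)."""

    def __init__(self, failure_threshold=3, reset_after=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_after = reset_after  # seconds before the primary is retried
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def record_success(self):
        # A successful send closes the circuit and clears the failure count.
        self.failures = 0
        self.opened_at = None

    def record_failure(self):
        # Open (or re-open) the circuit once enough failures have accumulated.
        self.failures += 1
        if self.failures >= self.failure_threshold:
            self.opened_at = self.clock()

    def is_open(self):
        if self.opened_at is None:
            return False
        # After the cooldown, allow a trial request to the primary.
        return self.clock() - self.opened_at < self.reset_after


def choose_destination(breaker, primary, dr):
    """Send to the DR destination while the circuit is open."""
    return dr if breaker.is_open() else primary


if __name__ == "__main__":
    breaker = CircuitBreaker(failure_threshold=2, reset_after=10.0)
    breaker.record_failure()
    breaker.record_failure()
    print(choose_destination(breaker, "primary-site", "dr-site"))
```

The design choice worth noting is the cooldown: without it, traffic would never return to the primary destination after it recovers.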