Agent Evaluations

Agent evaluations help ensure that your LLM-powered applications are making the right tool calls and resolving user requests successfully. These checks are designed to catch common failure modes when agents interact with external tools, APIs, or workflows.

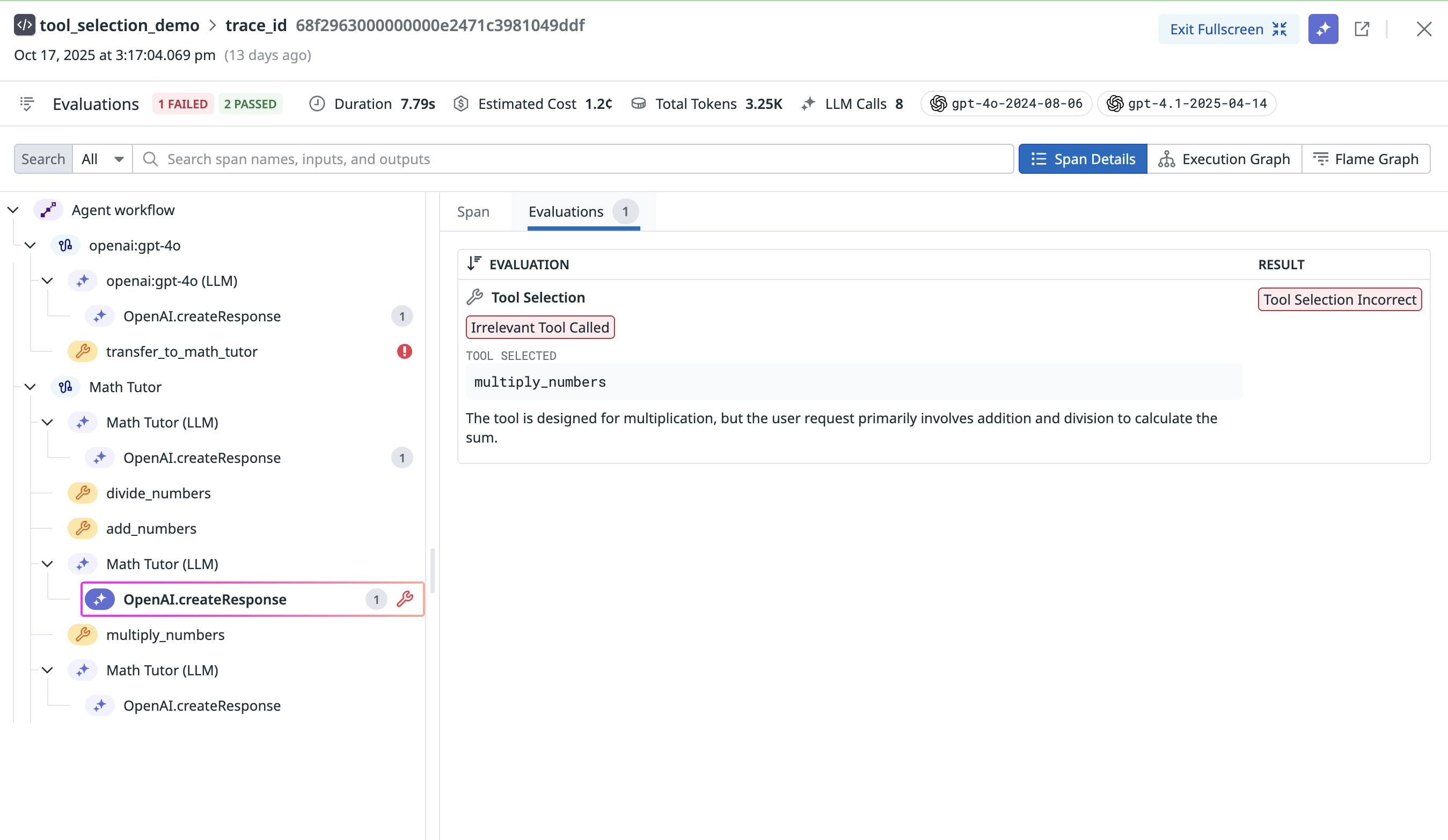

Tool Selection

This evaluation checks whether the agent successfully selected the appropriate tools to address the user’s request. Incorrect or irrelevant tool choices lead to wasted calls, higher latency, and failed tasks.
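Part of this check can be approximated locally before traces reach Datadog. The sketch below is a hypothetical pre-check, not Datadog's evaluator. It only flags calls to tools the agent was never given. The ToolSelection evaluation goes further and uses an LLM to judge whether an available tool was relevant to the user's request. The `ToolCall` type and the `unknown_tool_calls` helper are illustrative names that are not part of `ddtrace` or any agent SDK.

```python
from dataclasses import dataclass


@dataclass
class ToolCall:
    """A tool call emitted by the LLM (hypothetical, simplified shape)."""
    name: str
    arguments: dict


def unknown_tool_calls(calls: list[ToolCall], available: set[str]) -> list[str]:
    """Return the names of calls that target tools the agent was not given."""
    return [call.name for call in calls if call.name not in available]


available_tools = {"add_numbers", "subtract_numbers"}
calls = [
    ToolCall("add_numbers", {"a": 1, "b": 2}),
    ToolCall("multiply_numbers", {"a": 3, "b": 4}),
]
print(unknown_tool_calls(calls, available_tools))  # ['multiply_numbers']
```

A check like this catches only hallucinated tool names. Whether `add_numbers` was the *right* choice for a given request still requires semantic judgment.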

Evaluation summary

| Span kind | Method | Definition |
|---|---|---|
| Evaluated on LLM spans | Evaluated using LLM | Verifies that the tools chosen by the LLM align with the user’s request and the set of available tools. Flags irrelevant or incorrect tool calls. |

Example

How to use

Tool selection is only available for OpenAI and Azure OpenAI.

- Ensure you are running `dd-trace` v3.12+.
- Instrument your agent with available tools. The example below uses the OpenAI Agents SDK to illustrate how tools are made available to the agent and to the evaluation.
- Enable the `ToolSelection` evaluation in the Datadog UI by creating a new evaluation or editing an existing evaluation.

This evaluation is supported in dd-trace version 3.12+. The example below uses the OpenAI Agents SDK to illustrate how tools are made available to the agent and to the evaluation. See the complete code and packages required to run this evaluation.

```python
import os

from ddtrace.llmobs import LLMObs
from agents import Agent, ModelSettings, Runner, function_tool

# Enable LLM Observability in agentless mode (DD_API_KEY and DD_SITE must be set)
LLMObs.enable(
    ml_app="math-tutor",  # placeholder application name
    api_key=os.environ["DD_API_KEY"],
    site=os.environ["DD_SITE"],
    agentless_enabled=True,
)


@function_tool
def add_numbers(a: int, b: int) -> int:
    """
    Adds two numbers together.
    """
    return a + b


@function_tool
def subtract_numbers(a: int, b: int) -> int:
    """
    Subtracts two numbers.
    """
    return a - b


# Specialist agent with the list of tools available to it
math_tutor_agent = Agent(
    name="Math Tutor",
    handoff_description="Specialist agent for math questions",
    instructions="You provide help with math problems. Please use the tools to find the answer.",
    model="o3-mini",
    tools=[add_numbers, subtract_numbers],
)

history_tutor_agent = Agent(
    name="History Tutor",
    handoff_description="Specialist agent for history questions",
    instructions="You provide help with history problems.",
    model="o3-mini",
)

# The triage agent decides which specialized agent to hand off the task to,
# which is another type of tool selection covered by this evaluation.
triage_agent = Agent(
    name="Triage Agent",
    instructions="Determine which agent to use based on the user's question.",
    model="gpt-4o",
    model_settings=ModelSettings(temperature=0),
    handoffs=[math_tutor_agent, history_tutor_agent],
)

# The user's question is passed as run input, not as agent instructions
result = Runner.run_sync(triage_agent, "What is the sum of 1 to 10?")
print(result.final_output)
```

Troubleshooting

- If you frequently see irrelevant tool calls, review your tool descriptions: they may be too vague for the LLM to distinguish between tools.
- Make sure each tool has a description. The SDK automatically parses the docstring under the function name as the tool description.
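Missing descriptions can be caught before deployment. The following is a minimal sketch that assumes you run it on the plain functions, before they are wrapped by a decorator such as `@function_tool`. The decorator returns a tool object whose own `__doc__` is not the function's docstring. The `tools_missing_descriptions` helper is a hypothetical name. It relies only on the standard library's `inspect.getdoc`.

```python
import inspect
from typing import Callable


def add_numbers(a: int, b: int) -> int:
    """
    Adds two numbers together.
    """
    return a + b


def subtract_numbers(a: int, b: int) -> int:
    return a - b  # no docstring: the LLM would see no description for this tool


def tools_missing_descriptions(tools: list[Callable]) -> list[str]:
    """Return the names of tools whose docstring is absent or blank."""
    missing = []
    for tool in tools:
        doc = inspect.getdoc(tool)  # None when the function has no docstring
        if not doc or not doc.strip():
            missing.append(tool.__name__)
    return missing


print(tools_missing_descriptions([add_numbers, subtract_numbers]))  # ['subtract_numbers']
```

Running a check like this in CI keeps undocumented tools from reaching the agent, where the LLM would otherwise have to guess their purpose from the name alone.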

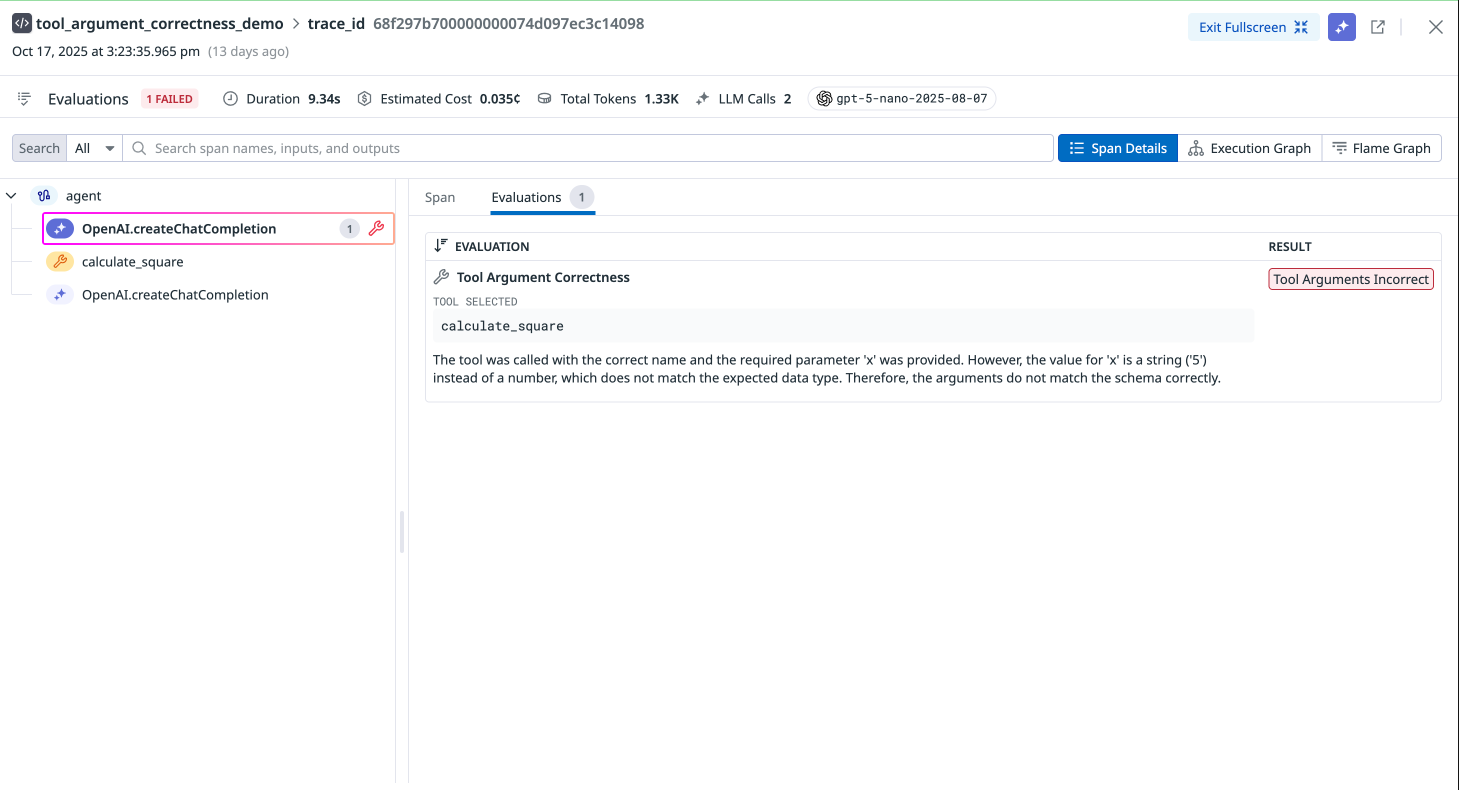

Tool Argument Correctness

Even if the right tool is selected, the arguments passed to it must be valid and contextually relevant. Incorrect argument formats (for example, a string instead of an integer) or irrelevant values cause failures in downstream execution.
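The format half of this check can be illustrated with Python's own type hints. This is a sketch under stated assumptions, not Datadog's implementation. The ToolArgumentCorrectness evaluation is LLM-based and also judges whether values are contextually relevant (for example, whether the numbers come from the user's request), which a type check cannot do. The `argument_errors` helper is hypothetical. It uses only `inspect.signature` and `typing.get_type_hints` from the standard library.

```python
import inspect
from typing import Any, Callable, get_origin, get_type_hints


def add_numbers(a: int, b: int) -> int:
    """Adds two numbers together."""
    return a + b


def argument_errors(tool: Callable, arguments: dict[str, Any]) -> list[str]:
    """Compare proposed arguments with the tool's signature and type hints."""
    errors = []
    params = inspect.signature(tool).parameters
    hints = get_type_hints(tool)
    # Arguments the tool does not accept
    for name in arguments:
        if name not in params:
            errors.append(f"unexpected argument: {name}")
    for name, param in params.items():
        if name not in arguments:
            # Required parameters (no default value) must be supplied
            if param.default is inspect.Parameter.empty:
                errors.append(f"missing argument: {name}")
            continue
        expected = hints.get(name)
        # Only plain classes such as int or str are checked; generics like list[int] are skipped
        if isinstance(expected, type) and get_origin(expected) is None:
            if not isinstance(arguments[name], expected):
                errors.append(
                    f"{name}: expected {expected.__name__}, got {type(arguments[name]).__name__}"
                )
    return errors


print(argument_errors(add_numbers, {"a": 1, "b": 2}))  # []
print(argument_errors(add_numbers, {"a": "1", "c": 2}))
# ['unexpected argument: c', 'a: expected int, got str', 'missing argument: b']
```

The second call shows the failure modes described above: a string passed where an integer is expected, an argument the schema does not define, and a required argument that was omitted.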

Evaluation summary

| Span kind | Method | Definition |
|---|---|---|
| Evaluated on LLM spans | Evaluated using LLM | Verifies that arguments provided to a tool are correct and relevant based on the tool schema. Identifies invalid or irrelevant arguments. |

Example

Instrumentation

This evaluation is supported in `dd-trace` v3.12+. The example below uses Pydantic AI to illustrate how tools are made available to the agent and to the evaluation. See the complete code and packages required to run this evaluation.

How to use

Tool argument correctness is only available for OpenAI and Azure OpenAI.

- Install `dd-trace` v3.12+.
- Instrument your agent with available tools that require arguments. The example below uses Pydantic AI to illustrate how tools are made available to the agent and to the evaluation.
- Enable the `ToolArgumentCorrectness` evaluation in the Datadog UI by creating a new evaluation or editing an existing evaluation.

```python
import os

from ddtrace.llmobs import LLMObs
from pydantic_ai import Agent


# Define tools as regular functions with type hints and docstrings
def add_numbers(a: int, b: int) -> int:
    """
    Adds two numbers together.
    """
    return a + b


def subtract_numbers(a: int, b: int) -> int:
    """
    Subtracts two numbers.
    """
    return a - b


def multiply_numbers(a: int, b: int) -> int:
    """
    Multiplies two numbers.
    """
    return a * b


def divide_numbers(a: int, b: int) -> float:
    """
    Divides two numbers.
    """
    return a / b


# Enable LLM Observability in agentless mode (DD_API_KEY and DD_SITE must be set)
LLMObs.enable(
    ml_app="math-tutor",  # placeholder application name
    api_key=os.environ["DD_API_KEY"],
    site=os.environ["DD_SITE"],
    agentless_enabled=True,
)

# Create the Math Tutor agent with tools
math_tutor_agent = Agent(
    'openai:gpt-5-nano',
    instructions="You provide help with math problems. Please use the tools to find the answer.",
    tools=[add_numbers, subtract_numbers, multiply_numbers, divide_numbers],
)

# Create the History Tutor agent
history_tutor_agent = Agent(
    'openai:gpt-5-nano',
    instructions="You provide help with history problems.",
)

# Create the triage agent.
# Note: Pydantic AI handles handoffs differently from the OpenAI Agents SDK; routing
# between agents typically uses structured output or custom logic, so this agent
# is given the math tools directly.
triage_agent = Agent(
    'openai:gpt-5-nano',
    instructions=(
        'DO NOT RELY ON YOUR OWN MATHEMATICAL KNOWLEDGE, '
        'MAKE SURE TO CALL AVAILABLE TOOLS TO SOLVE EVERY SUBPROBLEM.'
    ),
    tools=[add_numbers, subtract_numbers, multiply_numbers, divide_numbers],
)

# Run the agent synchronously
result = triage_agent.run_sync(
    '''
    Help me solve the following problem:
    What is the sum of the numbers between 1 and 100?
    Make sure you list out all the mathematical operations (addition, subtraction,
    multiplication, division) in order before you start calling tools in that order.
    '''
)
print(result.output)
```

Troubleshooting

- Make sure your tools use type hints. The evaluation relies on the tool's schema definition, which is built from them.
- Make sure to include a tool description (the docstring under the function name). The auto-instrumentation uses it when parsing the tool's schema.
- Validate that your LLM prompt includes enough context for the LLM to construct correct arguments.
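To see why both type hints and docstrings matter, the sketch below builds a simplified, JSON-Schema-style tool definition from a Python function. It is similar in spirit to the function schemas that agent SDKs send to the model. This is an approximation written for illustration. `tool_schema` and `JSON_TYPES` are hypothetical names, only scalar types are mapped, and the exact schema the OpenAI Agents SDK or Pydantic AI generates differs in detail.

```python
import inspect
import json
from typing import Callable, get_type_hints

# Simplified mapping from Python types to JSON Schema types (assumption: scalars only)
JSON_TYPES = {int: "integer", float: "number", str: "string", bool: "boolean"}


def tool_schema(func: Callable) -> dict:
    """Build a simplified JSON-Schema-style tool definition from a function."""
    hints = get_type_hints(func)
    properties = {}
    required = []
    for name, param in inspect.signature(func).parameters.items():
        hint = hints.get(name)
        # Parameters without a (supported) type hint get an empty schema: no type information
        properties[name] = {"type": JSON_TYPES[hint]} if hint in JSON_TYPES else {}
        if param.default is inspect.Parameter.empty:
            required.append(name)
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",  # the docstring becomes the description
        "parameters": {"type": "object", "properties": properties, "required": required},
    }


def multiply_numbers(a: int, b: int) -> int:
    """
    Multiplies two numbers.
    """
    return a * b


def multiply_untyped(a, b):
    return a * b


print(json.dumps(tool_schema(multiply_numbers), indent=2))
print(tool_schema(multiply_untyped)["parameters"]["properties"])  # {'a': {}, 'b': {}}
```

The untyped version produces a schema with no types and an empty description. This leaves both the model and an argument-correctness check with nothing to validate against.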