BlazeMeter

Integration version 1.0.0

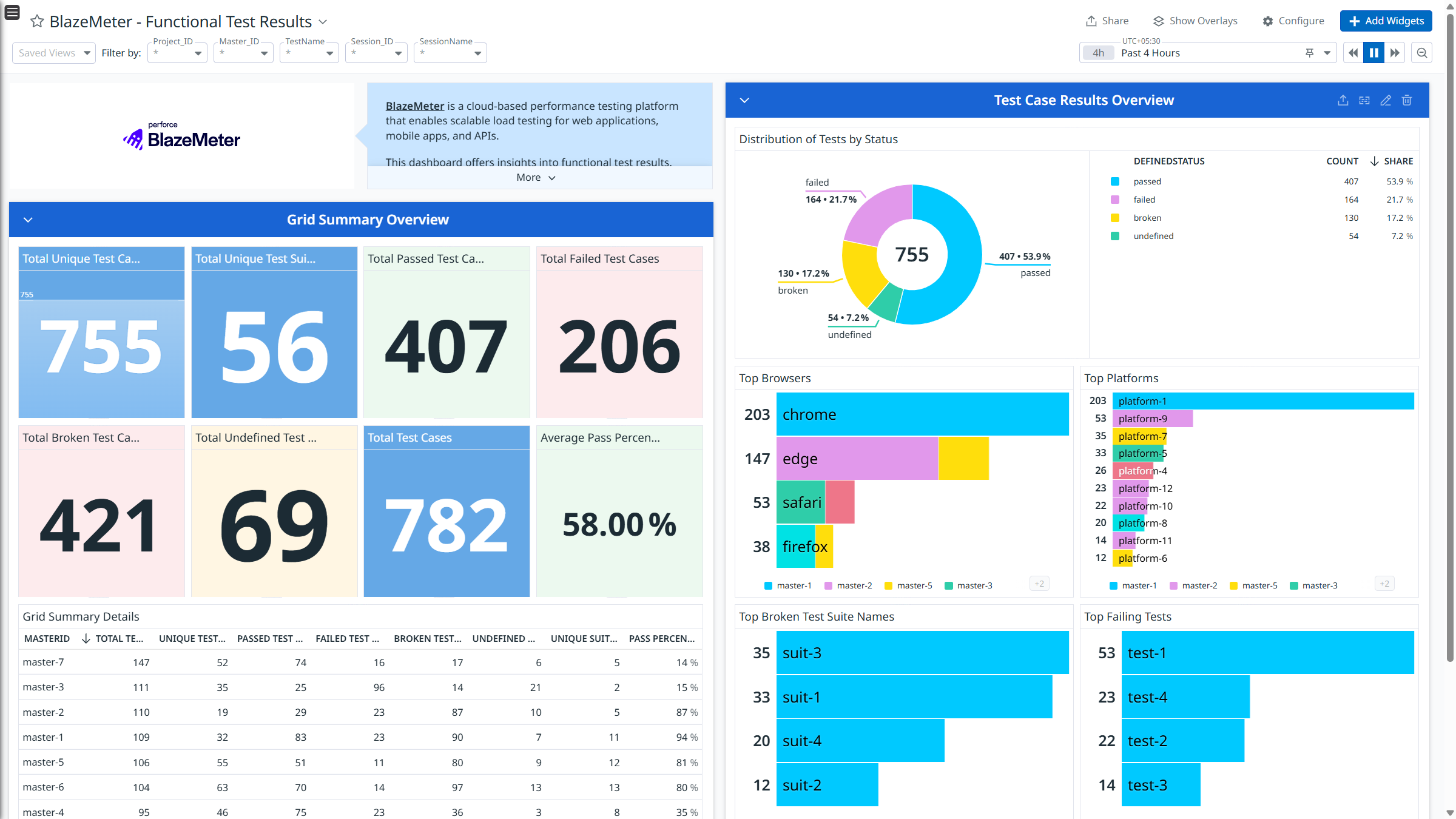

BlazeMeter - Functional Test Results

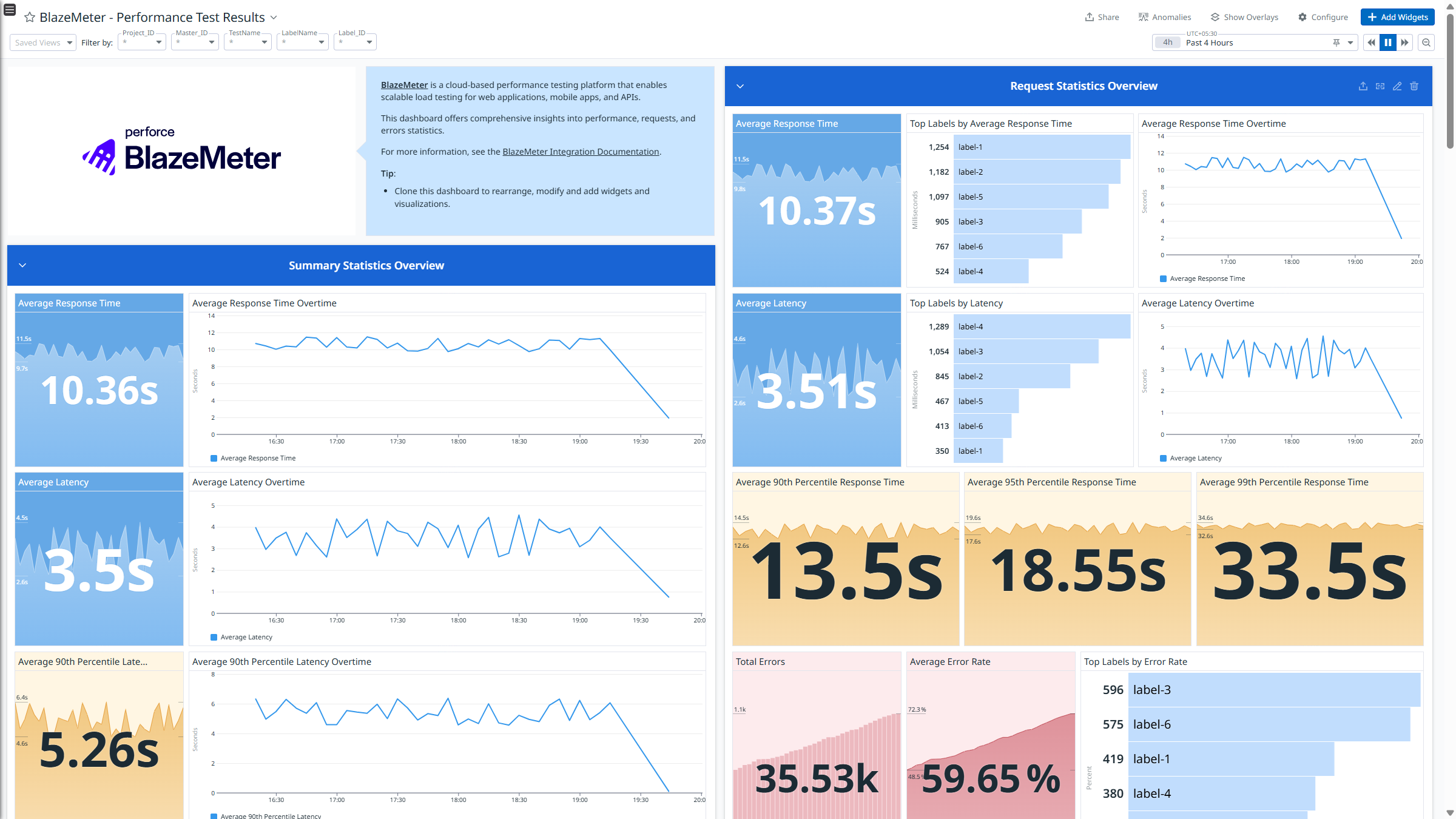

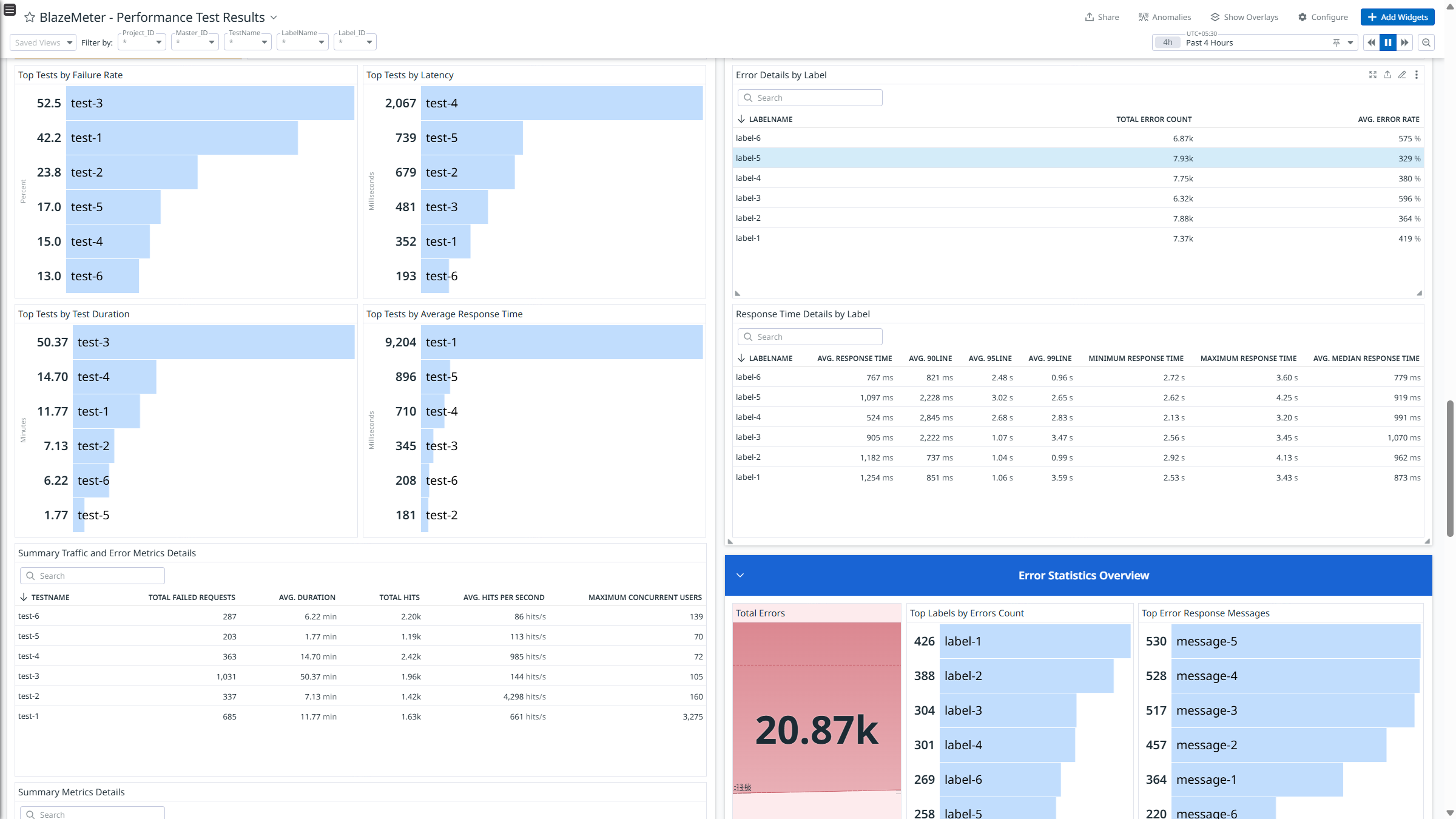

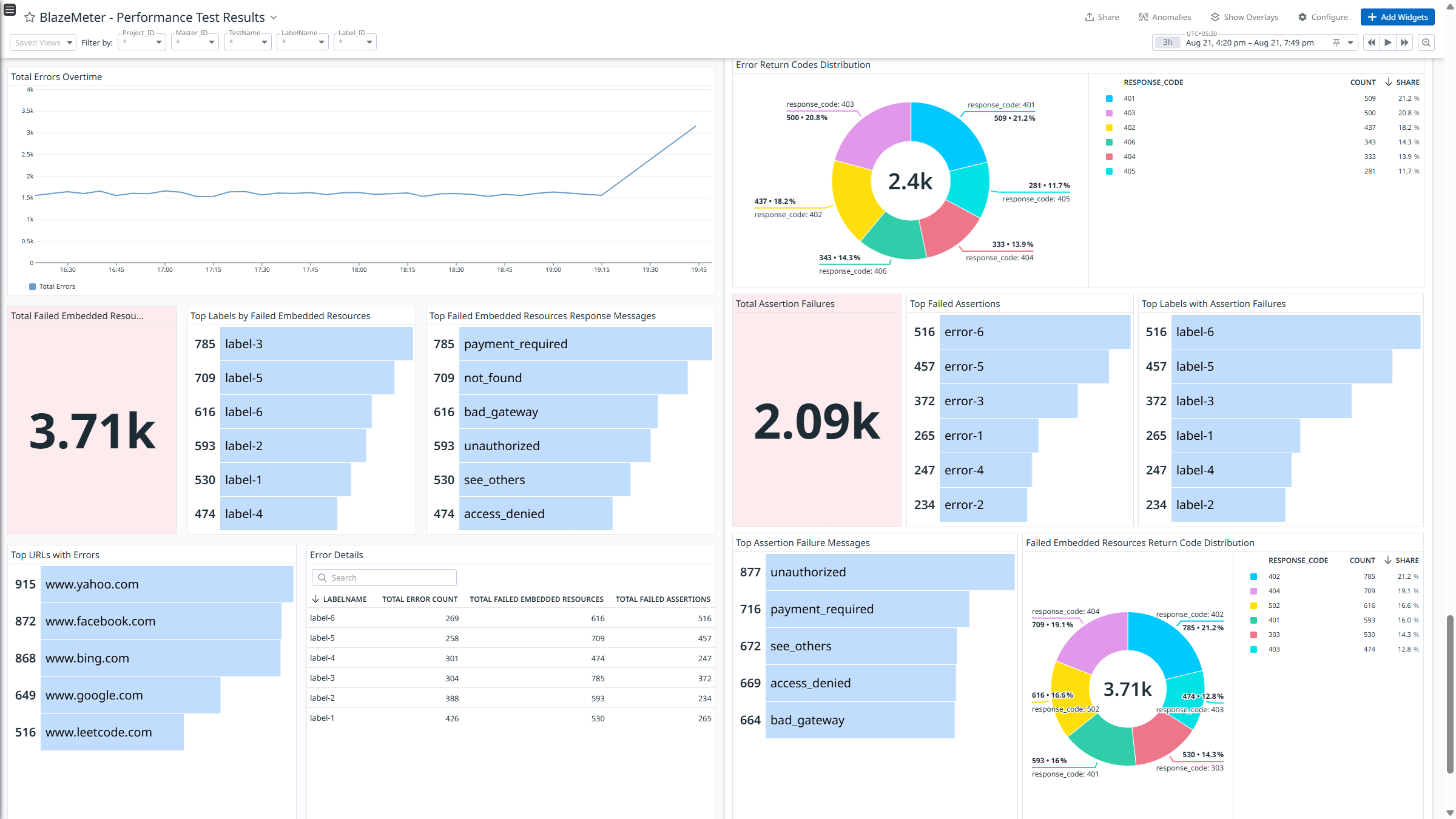

BlazeMeter - Performance Test Results

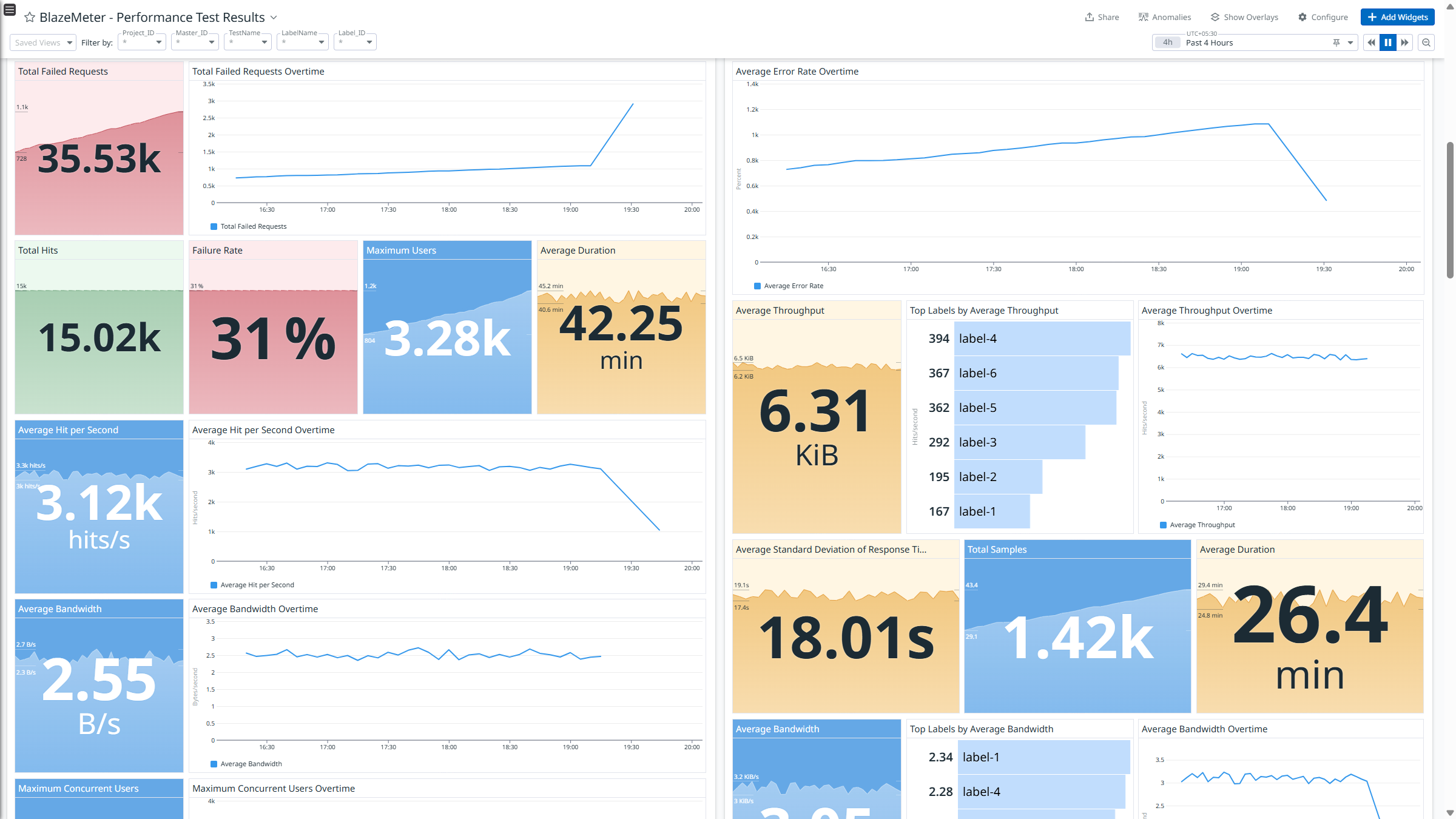

BlazeMeter - Performance Test Results

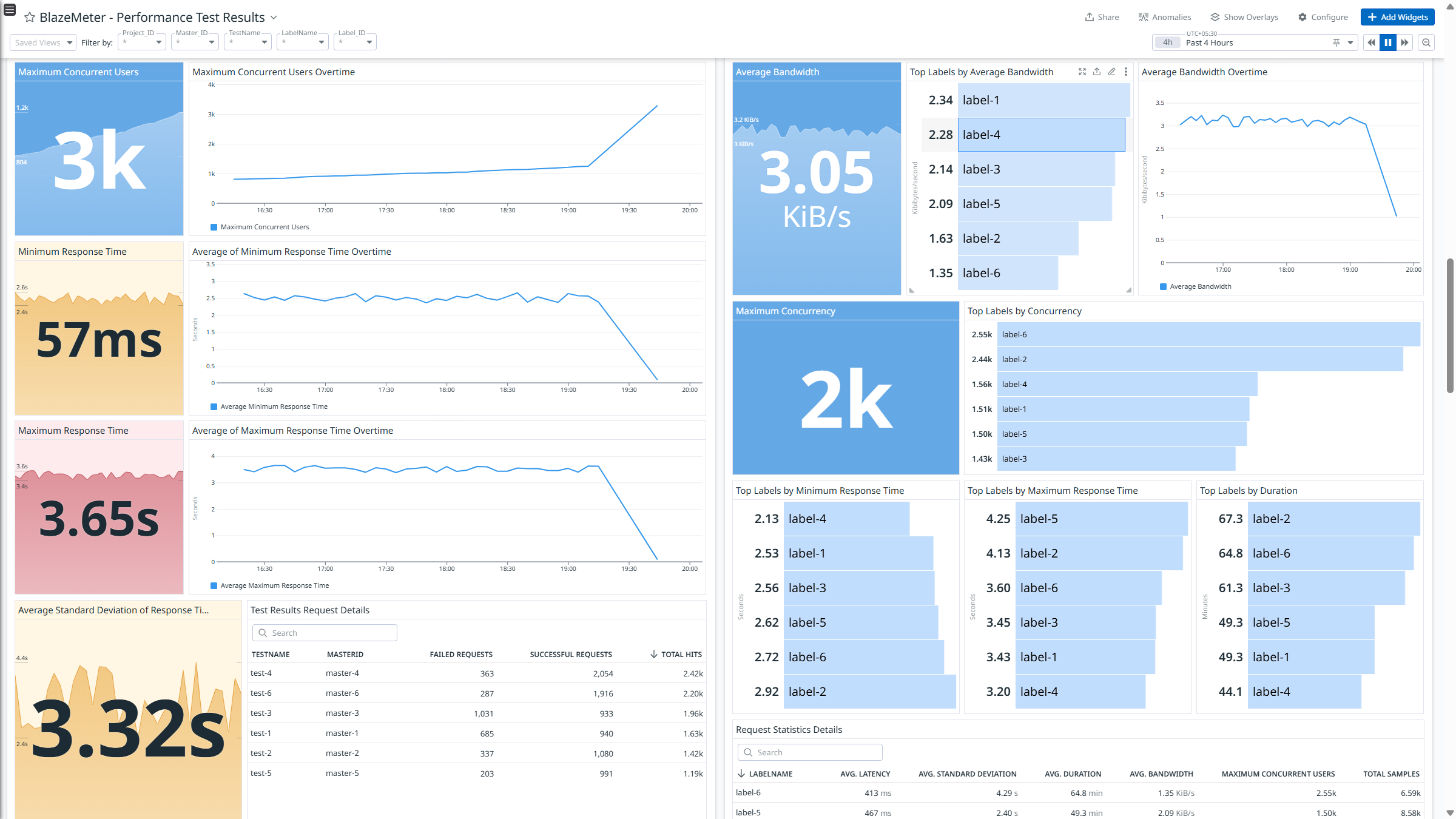

BlazeMeter - Performance Test Results

BlazeMeter - Performance Test Results

BlazeMeter - Performance Test Results

Overview

BlazeMeter is a cloud-based performance testing platform that enables scalable load testing for web applications, mobile apps, and APIs. It offers a range of testing capabilities, including performance testing and functional testing.

Integrate BlazeMeter with Datadog to collect metrics from your performance and functional test results.

Setup

Generate an API Key Id and API Key Secret in BlazeMeter

- Log in to your BlazeMeter account.

- Navigate to the Settings page by clicking the gear icon in the upper-right corner of the page.

- In the left sidebar, under the Personal section, click API Keys.

- Click the + icon to create a new API key.

- In the Generate API Key section, enter a name and select an expiration date.

- Click the Generate button to generate the API Key Id and API Key Secret.

Connect your BlazeMeter account to Datadog

- Add your API Key Id and API Key Secret:

| Parameter | Description |
| --- | --- |
| API Key Id | API Key Id of your BlazeMeter account. |
| API Key Secret | API Key Secret of your BlazeMeter account. |

- Click the Save button to save your settings.
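If the integration does not accept your keys, you can check the pair directly against the BlazeMeter API before saving it in Datadog. The sketch below assumes that the BlazeMeter API v4 accepts the API Key Id as the HTTP Basic username and the API Key Secret as the password, and that `https://a.blazemeter.com/api/v4/user` returns the current user; confirm both in BlazeMeter's API reference. The helper names (`basic_auth_header`, `build_check_request`, `credentials_are_valid`) are hypothetical and are not part of the Datadog integration.

```python
import base64
import urllib.error
import urllib.request

# Assumption: BlazeMeter API v4 "current user" endpoint; verify in BlazeMeter's API docs.
BLAZEMETER_USER_URL = "https://a.blazemeter.com/api/v4/user"


def basic_auth_header(api_key_id: str, api_key_secret: str) -> str:
    """Encode the key pair as an HTTP Basic Authorization value (Id:Secret, base64)."""
    raw = f"{api_key_id}:{api_key_secret}".encode("utf-8")
    return "Basic " + base64.b64encode(raw).decode("ascii")


def build_check_request(api_key_id: str, api_key_secret: str) -> urllib.request.Request:
    """Prepare, but do not send, a GET request that exercises the credentials."""
    req = urllib.request.Request(BLAZEMETER_USER_URL, method="GET")
    req.add_header("Authorization", basic_auth_header(api_key_id, api_key_secret))
    req.add_header("Accept", "application/json")
    return req


def credentials_are_valid(api_key_id: str, api_key_secret: str, timeout: float = 10.0) -> bool:
    """Send the request: HTTP 200 means valid, 401/403 means rejected, anything else raises."""
    req = build_check_request(api_key_id, api_key_secret)
    try:
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            return resp.status == 200
    except urllib.error.HTTPError as err:
        if err.code in (401, 403):
            return False
        raise
```

For example, `credentials_are_valid("<API Key Id>", "<API Key Secret>")` returns `True` when BlazeMeter accepts the pair. Network errors and unexpected HTTP status codes are re-raised rather than reported as invalid credentials, so a BlazeMeter outage is not mistaken for a bad key.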

Data Collected

Metrics

| Metric | Type | Unit | Description |
| --- | --- | --- | --- |
| blazemeter.functional.gridSummary_brokenTestCasesCount | gauge | | Number of test cases in a broken state |
| blazemeter.functional.gridSummary_failedTestCasesCount | gauge | | Number of test cases in a failed state |
| blazemeter.functional.gridSummary_passedPercent | gauge | percent | Percentage of test cases that passed |
| blazemeter.functional.gridSummary_passedTestCasesCount | gauge | | Number of test cases in a passed state |
| blazemeter.functional.gridSummary_testCasesCount | gauge | | Number of test cases in a test |
| blazemeter.functional.gridSummary_undefinedTestCasesCount | gauge | | Number of test cases in an undefined state |
| blazemeter.functional.gridSummary_uniqueSuitesCount | gauge | | Number of unique test suites in a test |
| blazemeter.functional.gridSummary_uniqueTestCasesCount | gauge | | Number of unique test cases in a test |
| blazemeter.functional.individual_testCaseCount | gauge | | Number of individual test cases in a test |
| blazemeter.performance.assertions_failures | gauge | | Number of failures generated with this assertion name and message |
| blazemeter.performance.errors_count | gauge | | Number of errors generated with this response code and message |
| blazemeter.performance.errors_url_count | gauge | | Number of errors generated by this URL |
| blazemeter.performance.failedEmbeddedResources_count | gauge | | Number of errors generated with this response code and message |
| blazemeter.performance.request_90line | gauge | millisecond | Maximum response time for 90% of samples (90th percentile) |
| blazemeter.performance.request_95line | gauge | millisecond | Maximum response time for 95% of samples (95th percentile) |
| blazemeter.performance.request_99line | gauge | millisecond | Maximum response time for 99% of samples (99th percentile) |
| blazemeter.performance.request_avgBytes | gauge | byte | Average size of requests |
| blazemeter.performance.request_avgLatency | gauge | millisecond | Average latency of the label |
| blazemeter.performance.request_avgResponseTime | gauge | millisecond | Average response time of the label |
| blazemeter.performance.request_avgThroughput | gauge | hit | Average number of requests processed per second |
| blazemeter.performance.request_concurrency | gauge | | Maximum number of concurrent users |
| blazemeter.performance.request_duration | gauge | second | Total duration of the test |
| blazemeter.performance.request_errorRate | gauge | percent | Percentage of requests that resulted in errors |
| blazemeter.performance.request_errorsCount | gauge | | Total number of errors |
| blazemeter.performance.request_maxResponseTime | gauge | millisecond | Maximum response time of the label |
| blazemeter.performance.request_medianResponseTime | gauge | millisecond | Maximum response time for 50% of samples (median) |
| blazemeter.performance.request_minResponseTime | gauge | millisecond | Minimum response time of the label |
| blazemeter.performance.request_samples | gauge | | Total number of samples (requests) processed |
| blazemeter.performance.request_stDev | gauge | millisecond | Standard deviation of the response times |
| blazemeter.performance.summary_avg | gauge | millisecond | Average response time of the test run |
| blazemeter.performance.summary_bytes | gauge | byte | Average bandwidth of the test run |
| blazemeter.performance.summary_concurrency | gauge | | Maximum number of concurrent users |
| blazemeter.performance.summary_duration | gauge | second | Duration of the test run |
| blazemeter.performance.summary_failed | gauge | | Total number of errors in the test run |
| blazemeter.performance.summary_hits | gauge | | Total number of successful requests processed |
| blazemeter.performance.summary_hits_avg | gauge | hit | Average number of hits per second |
| blazemeter.performance.summary_latency | gauge | millisecond | Average latency of the test run |
| blazemeter.performance.summary_max | gauge | millisecond | Maximum response time |
| blazemeter.performance.summary_maxUsers | gauge | | Maximum concurrency the test reached |
| blazemeter.performance.summary_min | gauge | millisecond | Minimum response time |
| blazemeter.performance.summary_stDev | gauge | millisecond | Standard deviation of the response times |
| blazemeter.performance.summary_tp90 | gauge | millisecond | Maximum response time for 90% of samples (90th percentile) |
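Most performance metrics are aggregates over the samples (requests) of a test run. To make the descriptions concrete, the sketch below computes several of them from a hypothetical list of samples. It illustrates the definitions only and is not how BlazeMeter or the integration computes the values. It assumes that the `request_NNline` metrics are nearest-rank percentiles, that `request_errorRate` is errors divided by samples, that `request_stDev` is the population standard deviation, and that `gridSummary_passedPercent` is passed test cases divided by all test cases. The `Sample` structure and all function names are hypothetical.

```python
import math
import statistics
from dataclasses import dataclass


@dataclass
class Sample:
    """One request from a test run (hypothetical structure for illustration)."""
    response_time_ms: float
    is_error: bool


def nearest_rank_percentile(values: list[float], pct: int) -> float:
    """Smallest value such that at least pct% of the values are <= it (nearest-rank method)."""
    if not values:
        raise ValueError("no values")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


def summarize_requests(samples: list[Sample]) -> dict[str, float]:
    """Approximate a subset of the blazemeter.performance.request_* metrics (assumed definitions)."""
    if not samples:
        raise ValueError("no samples")
    times = [s.response_time_ms for s in samples]
    errors = sum(1 for s in samples if s.is_error)
    return {
        "request_samples": len(samples),
        "request_errorsCount": errors,
        "request_errorRate": 100.0 * errors / len(samples),  # percent
        "request_avgResponseTime": statistics.fmean(times),
        "request_minResponseTime": min(times),
        "request_maxResponseTime": max(times),
        "request_medianResponseTime": nearest_rank_percentile(times, 50),
        "request_90line": nearest_rank_percentile(times, 90),
        "request_95line": nearest_rank_percentile(times, 95),
        "request_99line": nearest_rank_percentile(times, 99),
        "request_stDev": statistics.pstdev(times),  # population std. dev. (assumption)
    }


def passed_percent(passed: int, total: int) -> float:
    """Assumed relationship behind gridSummary_passedPercent."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100.0 * passed / total
```

For ten samples at 100, 200, ..., 1000 ms with two errors, this sketch reports `request_errorRate` as 20.0, `request_90line` as 900 ms, and `request_95line` as 1000 ms. A percentile definition that interpolates between samples would give different line values, so compare trends rather than exact numbers against BlazeMeter's reports.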

Service Checks

The BlazeMeter integration does not include any service checks.

Events

The BlazeMeter integration does not include any events.

Support

Need help? Contact Datadog support.