
Apache Spark Metrics

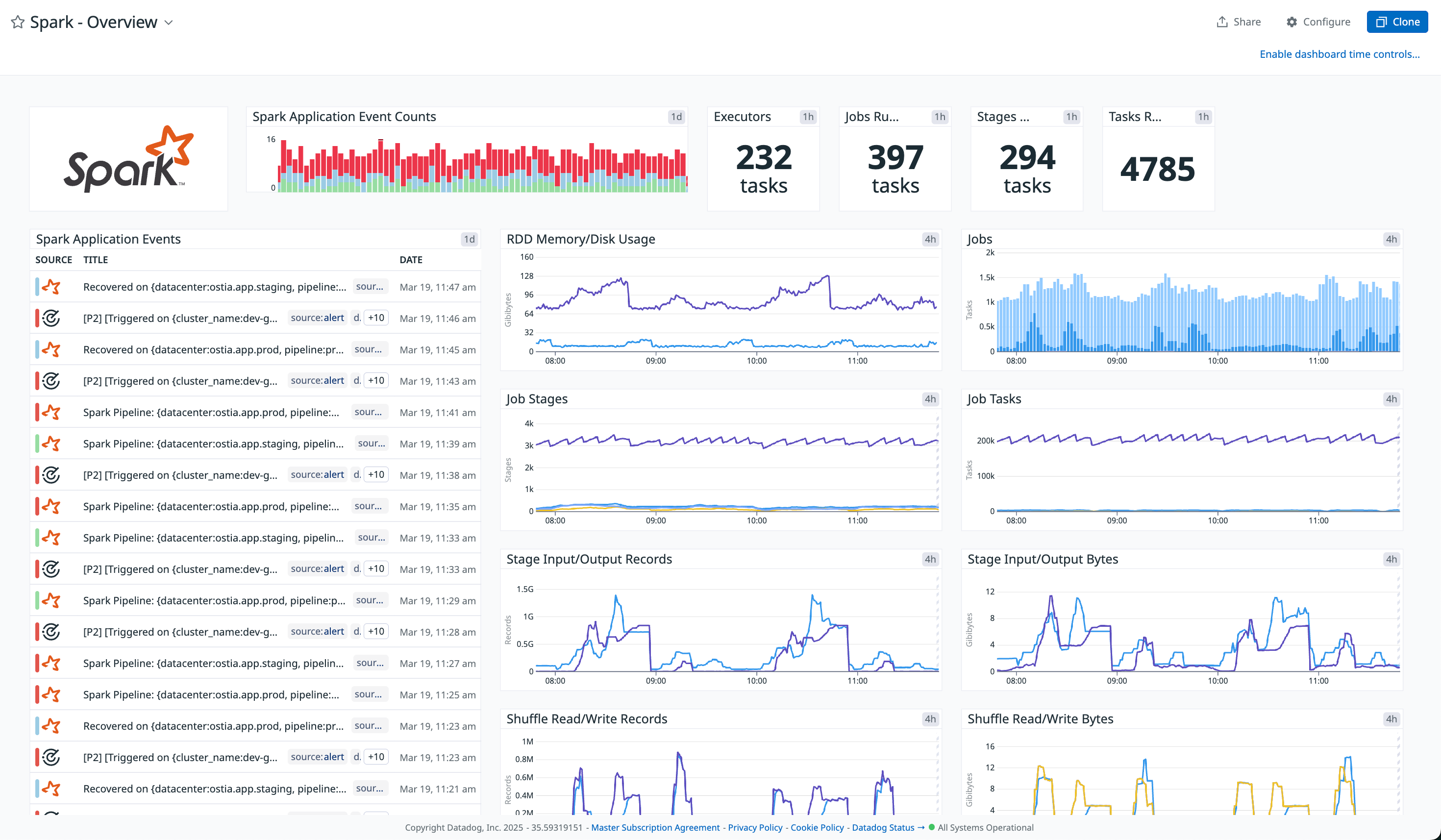

Overview

The Apache Spark receiver collects Apache Spark metrics and gives you access to the Spark Overview dashboard. Configure the receiver according to the specifications of the latest version of the apachesparkreceiver.

For more information, see the OpenTelemetry project documentation for the Apache Spark receiver.

Setup

To collect Apache Spark metrics with OpenTelemetry for use with Datadog:

- Configure the Apache Spark receiver in your OpenTelemetry Collector configuration.

- Make sure the OpenTelemetry Collector is configured to export to Datadog.

See the Apache Spark receiver documentation for detailed information about configuration options and requirements.
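As a rough illustration of the two setup steps, the following Python sketch builds a minimal Collector configuration that wires the apachespark receiver into a metrics pipeline with the Datadog exporter, then prints it as JSON (JSON is also valid YAML, so the output can be saved as the Collector's config file). The endpoint http://localhost:4040, the 60s collection interval, the datadoghq.com site, the DD_API_KEY environment variable, and the helper name build_collector_config are illustrative assumptions; check the receiver and exporter documentation for the options your Collector version supports.

```python
"""Minimal sketch: generate an OpenTelemetry Collector config that scrapes
Apache Spark with the apachespark receiver and exports to Datadog.

Assumptions (adjust to your environment): the Spark REST endpoint is
reachable at http://localhost:4040, the Datadog API key is provided in the
DD_API_KEY environment variable, and the Datadog site is datadoghq.com.
build_collector_config is a hypothetical helper, not part of any library.
"""
import json


def build_collector_config(
    spark_endpoint: str = "http://localhost:4040",
    collection_interval: str = "60s",  # illustrative value, not a documented default
    dd_site: str = "datadoghq.com",
) -> dict:
    return {
        "receivers": {
            # Apache Spark receiver: polls the Spark REST API at `endpoint`.
            "apachespark": {
                "endpoint": spark_endpoint,
                "collection_interval": collection_interval,
            }
        },
        "processors": {
            # Batch metrics before export to reduce the number of requests.
            "batch": {}
        },
        "exporters": {
            "datadog": {
                "api": {
                    # The Collector expands ${env:...} when it loads the config.
                    "key": "${env:DD_API_KEY}",
                    "site": dd_site,
                }
            }
        },
        "service": {
            "pipelines": {
                # Route Spark metrics through the batch processor to Datadog.
                "metrics": {
                    "receivers": ["apachespark"],
                    "processors": ["batch"],
                    "exporters": ["datadog"],
                }
            }
        },
    }


if __name__ == "__main__":
    # JSON output is valid YAML, so it can be saved as config.yaml.
    print(json.dumps(build_collector_config(), indent=2))
```

Saving the output as config.yaml and starting a Collector distribution that includes both components (for example, `otelcol-contrib --config config.yaml`) should then send the Spark metrics to Datadog.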

Data Collected

| OTEL | DATADOG | DESCRIPTION | FILTER | TRANSFORM |
|---|---|---|---|---|
| spark.driver.block_manager.disk.usage | spark.driver.disk_used | Disk space used by the BlockManager. | | × 1048576 |
| spark.driver.block_manager.memory.usage | spark.driver.memory_used | Memory usage for the driver’s BlockManager. | | × 1048576 |
| spark.driver.dag_scheduler.stage.count | spark.stage.count | Number of stages the DAGScheduler is either running or needs to run. | | |
| spark.executor.disk.usage | spark.executor.disk_used | Disk space used by this executor for RDD storage. | | |
| spark.executor.disk.usage | spark.rdd.disk_used | Disk space used by this executor for RDD storage. | | |
| spark.executor.memory.usage | spark.executor.memory_used | Storage memory used by this executor. | | |
| spark.executor.memory.usage | spark.rdd.memory_used | Storage memory used by this executor. | | |
| spark.job.stage.active | spark.job.num_active_stages | Number of active stages in this job. | | |
| spark.job.stage.result | spark.job.num_completed_stages | Number of stages with a specific result in this job. | job_result: completed | |
| spark.job.stage.result | spark.job.num_failed_stages | Number of stages with a specific result in this job. | job_result: failed | |
| spark.job.stage.result | spark.job.num_skipped_stages | Number of stages with a specific result in this job. | job_result: skipped | |
| spark.job.task.active | spark.job.num_tasks{status: running} | Number of active tasks in this job. | | |
| spark.job.task.result | spark.job.num_skipped_tasks | Number of tasks with a specific result in this job. | job_result: skipped | |
| spark.job.task.result | spark.job.num_failed_tasks | Number of tasks with a specific result in this job. | job_result: failed | |
| spark.job.task.result | spark.job.num_completed_tasks | Number of tasks with a specific result in this job. | job_result: completed | |
| spark.stage.io.records | spark.stage.input_records | Number of records written and read in this stage. | direction: in | |
| spark.stage.io.records | spark.stage.output_records | Number of records written and read in this stage. | direction: out | |
| spark.stage.io.size | spark.stage.input_bytes | Amount of data written and read at this stage. | direction: in | |
| spark.stage.io.size | spark.stage.output_bytes | Amount of data written and read at this stage. | direction: out | |
| spark.stage.shuffle.io.read.size | spark.stage.shuffle_read_bytes | Amount of data read in shuffle operations in this stage. | | |
| spark.stage.shuffle.io.records | spark.stage.shuffle_read_records | Number of records written or read in shuffle operations in this stage. | direction: in | |
| spark.stage.shuffle.io.records | spark.stage.shuffle_write_records | Number of records written or read in shuffle operations in this stage. | direction: out | |
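To make the FILTER and TRANSFORM columns concrete, here is a hypothetical Python sketch (not Datadog's actual implementation) that applies a subset of the rows above to a single OTel data point. A row applies only when the point's attributes match every FILTER entry, and its value is then multiplied by the TRANSFORM factor; since 1048576 is 2^20, the number of bytes in a mebibyte, that factor presumably converts a MiB value into bytes. The Mapping class, the MAPPINGS list, and to_datadog are names introduced only for illustration.

```python
"""Hypothetical illustration of how the FILTER and TRANSFORM columns of the
OTel-to-Datadog mapping table combine. Not Datadog's implementation."""
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class Mapping:
    otel_name: str
    datadog_name: str
    # FILTER column: attribute values a data point must carry for this row.
    filter: Dict[str, str] = field(default_factory=dict)
    # TRANSFORM column: multiplier applied to the value (1 = no transform).
    factor: int = 1


# A subset of rows copied from the table above.
MAPPINGS: List[Mapping] = [
    Mapping("spark.driver.block_manager.disk.usage", "spark.driver.disk_used", factor=1048576),
    Mapping("spark.driver.block_manager.memory.usage", "spark.driver.memory_used", factor=1048576),
    Mapping("spark.executor.disk.usage", "spark.executor.disk_used"),
    Mapping("spark.executor.disk.usage", "spark.rdd.disk_used"),
    Mapping("spark.job.stage.result", "spark.job.num_completed_stages", {"job_result": "completed"}),
    Mapping("spark.job.stage.result", "spark.job.num_failed_stages", {"job_result": "failed"}),
    Mapping("spark.job.stage.result", "spark.job.num_skipped_stages", {"job_result": "skipped"}),
    Mapping("spark.stage.io.size", "spark.stage.input_bytes", {"direction": "in"}),
    Mapping("spark.stage.io.size", "spark.stage.output_bytes", {"direction": "out"}),
]


def to_datadog(otel_name: str, value: float, attributes: Dict[str, str]) -> List[Tuple[str, float]]:
    """Return every (datadog_name, value) pair the table yields for one OTel point.

    A list is returned because one OTel metric can feed several Datadog
    metrics (for example, spark.executor.disk.usage).
    """
    results: List[Tuple[str, float]] = []
    for m in MAPPINGS:
        if m.otel_name != otel_name:
            continue
        # The row applies only if every FILTER attribute matches exactly.
        if all(attributes.get(k) == v for k, v in m.filter.items()):
            results.append((m.datadog_name, value * m.factor))
    return results


if __name__ == "__main__":
    print(to_datadog("spark.driver.block_manager.disk.usage", 2, {}))
    # [('spark.driver.disk_used', 2097152)]
    print(to_datadog("spark.job.stage.result", 3, {"job_result": "failed"}))
    # [('spark.job.num_failed_stages', 3)]
```

The same OTel point without the expected attribute (for example, spark.stage.io.size with no direction) matches no filtered row and produces no Datadog metric.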

See OpenTelemetry Metrics Mapping for more information.
