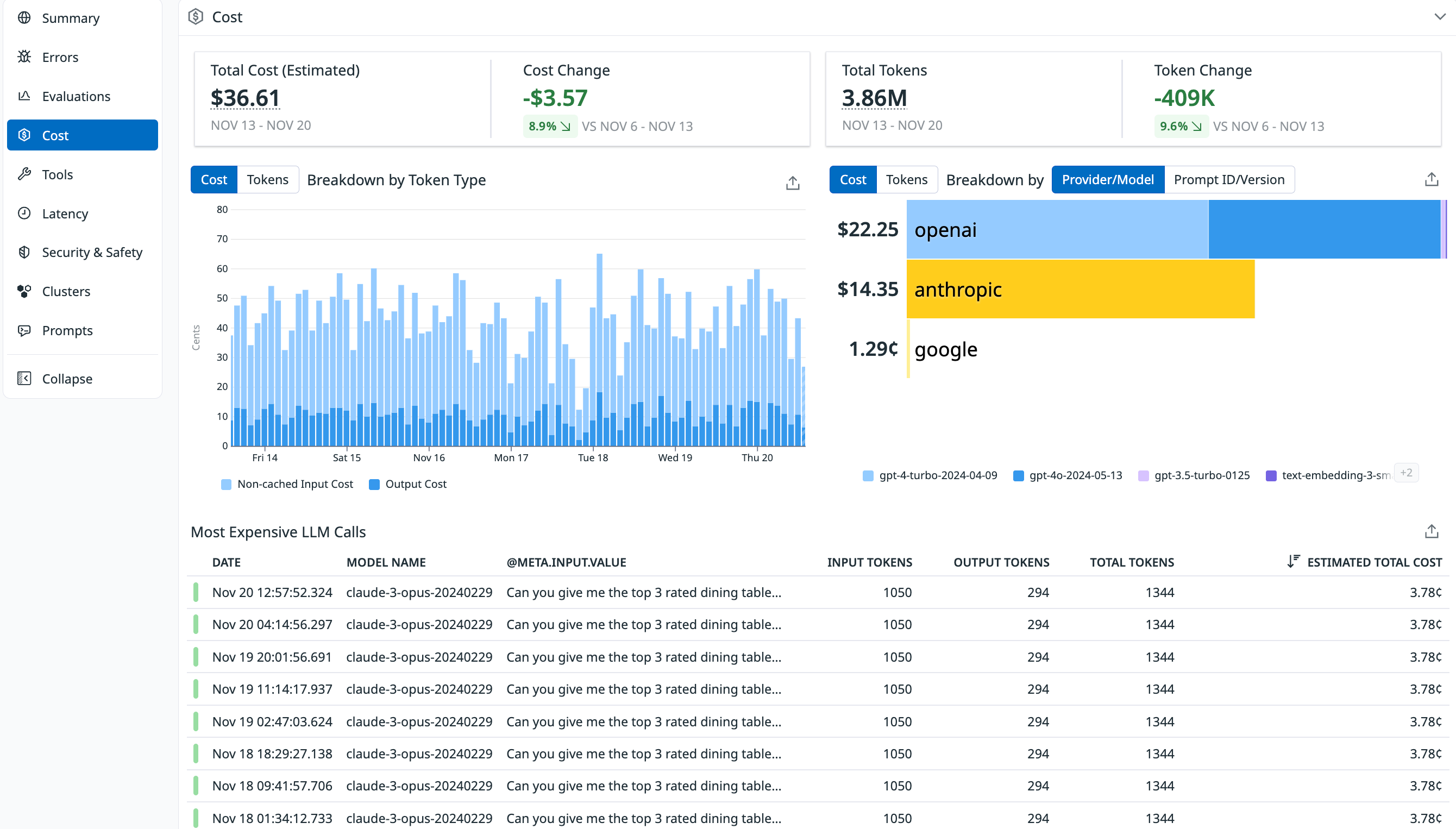

Cost


Datadog LLM Observability automatically calculates an estimated cost for each LLM request, using the providers' public pricing models and the token counts annotated on LLM/embedding spans.

By aggregating this information across traces and applications, you can gain insight into your LLM usage patterns and their effect on overall spend.

Use cases:

- See and understand where LLM spend comes from, at the model, request, and application levels

- Track changes in token usage and cost over time to proactively guard against higher bills in the future

- Correlate LLM cost with overall application performance, model versions, model providers, and request details in a single view

Set up cost monitoring

Datadog offers two ways to monitor your LLM costs:

- Automatic: Use the public pricing rates of supported LLM providers.

- Manual: For custom pricing rates, self-hosted models, or unsupported providers, supply your own cost values manually.

Automatic

If your LLM requests involve any of the supported providers listed under Supported providers, Datadog automatically calculates the cost of each request based on the following:

- Token counts attached to the LLM/embedding span, provided either by auto-instrumentation or by manual user annotation

- The model provider's public pricing rates
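The two inputs above combine in a simple way: each token count is multiplied by the matching rate. The following is a minimal sketch of that arithmetic, not Datadog's implementation. The `Rate` class, the `estimate_cost` function, the model name, and the prices are all hypothetical placeholders chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rate:
    """Hypothetical price per one million tokens, in US dollars."""
    input_per_million: float
    output_per_million: float


# Placeholder rates for illustration only; these are not real provider prices.
EXAMPLE_RATES = {
    "example-model": Rate(input_per_million=2.0, output_per_million=8.0),
}


def estimate_cost(model, input_tokens, output_tokens, rates=EXAMPLE_RATES):
    """Estimate the cost of one request from its token counts and a rate table."""
    rate = rates[model]
    input_cost = input_tokens * rate.input_per_million / 1_000_000
    output_cost = output_tokens * rate.output_per_million / 1_000_000
    return {
        "input_cost": input_cost,
        "output_cost": output_cost,
        "total_cost": input_cost + output_cost,
    }


estimate = estimate_cost("example-model", input_tokens=1_500, output_tokens=500)
print(estimate)
```

Because the estimate depends entirely on the token counts, a span with missing or inaccurate token annotations would produce a missing or inaccurate cost in a calculation of this kind.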

Manual

To supply cost information manually, follow the instrumentation steps described in the SDK Reference or the API.

If you provide partial cost information, Datadog tries to calculate the missing values. For example, if you provide a total cost but no input/output cost values, Datadog uses the provider's pricing and the token values annotated on your span to calculate the input/output costs. This can cause a mismatch between the manually provided total cost and the sum of the input/output costs that Datadog calculates. Datadog always displays the provided total cost as-is, even if these values differ.
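The mismatch described above is easier to see with numbers. The sketch below illustrates the described behavior under stated assumptions; it is not Datadog's code. The function name `fill_missing_costs`, the dictionary keys, and the per-token rates are hypothetical.

```python
def fill_missing_costs(provided, input_tokens, output_tokens,
                       input_rate, output_rate):
    """Fill in cost values that were not provided, leaving provided values untouched.

    `provided` may contain any of "input_cost", "output_cost", "total_cost".
    `input_rate` and `output_rate` are hypothetical prices per single token.
    """
    costs = dict(provided)
    # Missing input/output costs are derived from token counts and rates.
    costs.setdefault("input_cost", input_tokens * input_rate)
    costs.setdefault("output_cost", output_tokens * output_rate)
    # A provided total is kept as-is; it is only computed when absent.
    costs.setdefault("total_cost", costs["input_cost"] + costs["output_cost"])
    return costs


# Only the total is provided, for example from a negotiated custom rate.
costs = fill_missing_costs(
    {"total_cost": 0.01},
    input_tokens=1_000,
    output_tokens=500,
    input_rate=2e-6,
    output_rate=8e-6,
)
calculated_sum = costs["input_cost"] + costs["output_cost"]
print(costs["total_cost"], calculated_sum)
```

In this example the provided total stays at 0.01 while the calculated input and output costs add up to roughly 0.006. Supplying all three values manually is one way to avoid such a discrepancy.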

Supported providers

Datadog automatically calculates the cost of LLM requests made to the following supported providers, using the publicly available pricing information from their official documentation.

Datadog only supports cost monitoring for text-based models.

- OpenAI: OpenAI pricing

- Anthropic: Claude pricing

- Azure OpenAI: Azure OpenAI pricing. For consistency, Datadog uses the US East 2 and Global Standard Deployment rates for all requests.

- Google Gemini: Gemini pricing

Metrics

You can find cost metrics in LLM Observability Metrics.

Cost metrics include a source tag that indicates where the value originated:

- source:auto: calculated automatically

- source:user: provided manually

View costs in LLM Observability

View your application in LLM Observability and select Costs on the left. The Cost view features:

- A high-level overview of your LLM usage over time, including Total Cost, Cost Change, Total Tokens, and Token Change

- Breakdown by token type: A breakdown of token usage, along with the associated costs

- Breakdown by provider/model or prompt ID/version: Cost and token usage broken down by LLM provider and model, or by individual prompts or prompt versions (powered by Prompt Tracking)

- Most expensive LLM calls: A list of your most expensive requests
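The breakdowns in this view are aggregations over per-span cost data. As a rough sketch of what a per-model breakdown and a "most expensive calls" list involve, the following example groups and sorts a few hypothetical span records. The record fields (`span_id`, `model`, `total_tokens`, `total_cost`) and both helper functions are assumptions made for illustration, not a Datadog data format or API.

```python
from collections import defaultdict

# Hypothetical span records; the field names are assumptions for this sketch.
llm_spans = [
    {"span_id": "a1", "model": "model-a", "total_tokens": 1200, "total_cost": 0.5},
    {"span_id": "b2", "model": "model-b", "total_tokens": 300, "total_cost": 0.25},
    {"span_id": "c3", "model": "model-a", "total_tokens": 4000, "total_cost": 1.5},
]


def totals_by_model(spans):
    """Sum token usage and cost per model."""
    totals = defaultdict(lambda: {"total_tokens": 0, "total_cost": 0.0})
    for span in spans:
        entry = totals[span["model"]]
        entry["total_tokens"] += span["total_tokens"]
        entry["total_cost"] += span["total_cost"]
    return dict(totals)


def most_expensive(spans, limit=10):
    """Return the `limit` spans with the highest total cost, highest first."""
    return sorted(spans, key=lambda s: s["total_cost"], reverse=True)[:limit]


by_model = totals_by_model(llm_spans)
top_calls = most_expensive(llm_spans, limit=2)
print(by_model)
print([span["span_id"] for span in top_calls])
```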

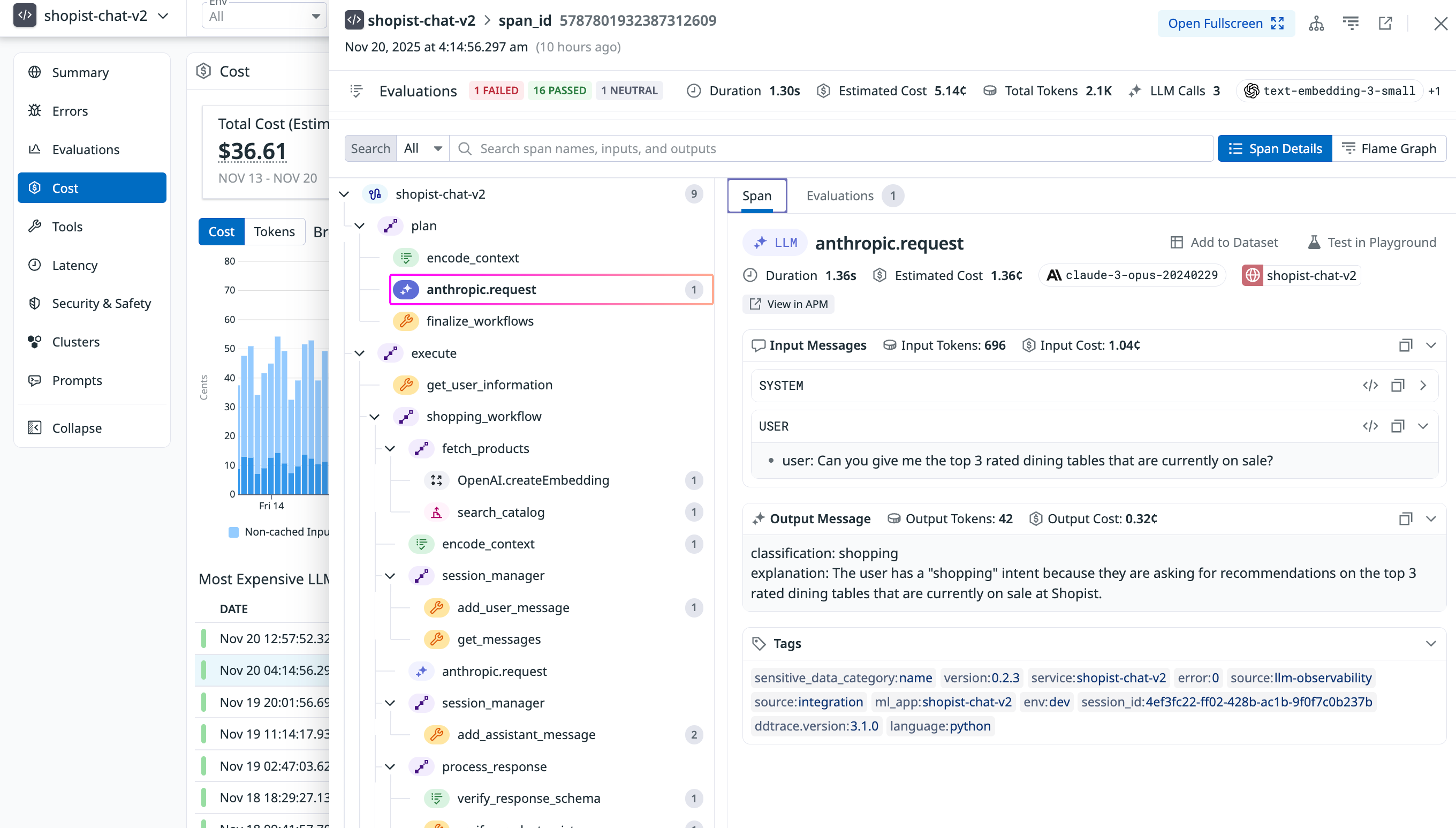

Cost data is also available on your application's traces and spans, so you can understand costs at both the request (trace) level and the operation (span) level. Click any trace or span to open a detailed side panel view that includes cost metrics for the full trace and for each individual LLM call. At the top of the trace view, the banner shows aggregated cost information for the full trace, including the estimated cost and total tokens. Hovering over these values shows a breakdown of input and output costs/tokens.

Selecting an individual LLM span shows similar cost metrics specific to that LLM request.