Quality Evaluations

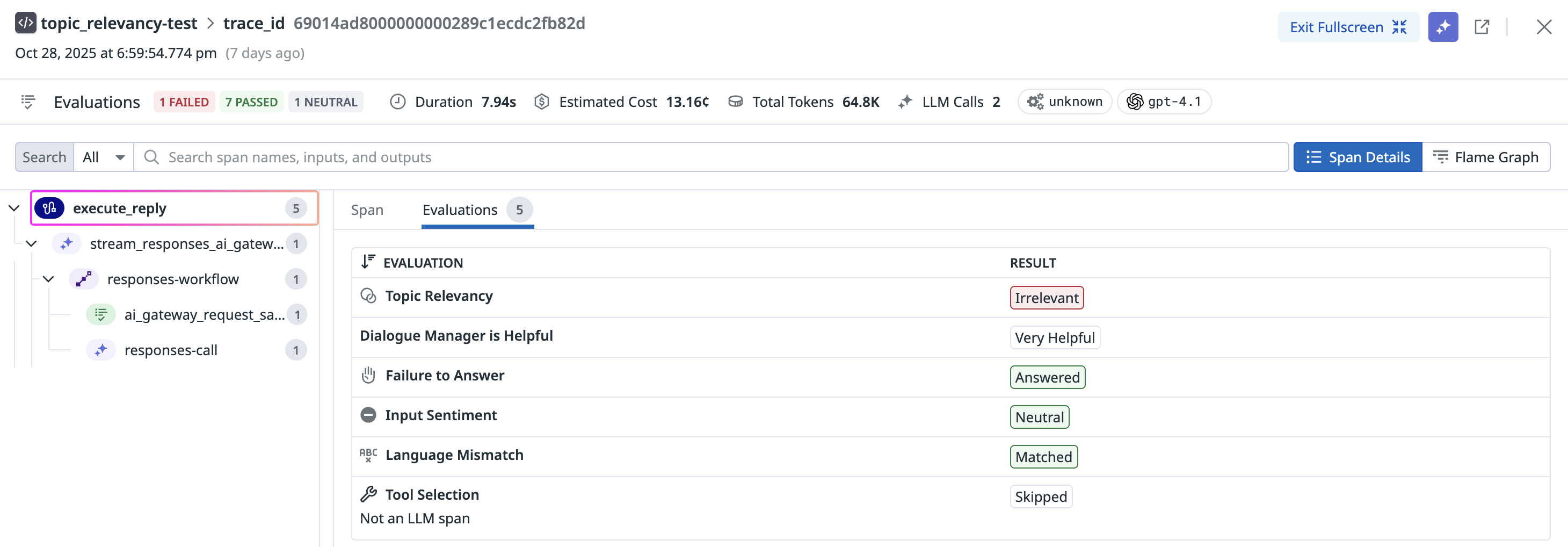

Quality evaluations help ensure your LLM-powered applications generate accurate, relevant, and safe responses. Managed evaluations automatically score model outputs on key quality dimensions and attach results to traces, helping you detect issues, monitor trends, and improve response quality over time.

Topic relevancy

This check identifies and flags user inputs that deviate from the configured acceptable input topics. This ensures that interactions stay pertinent to the LLM’s designated purpose and scope.

| Evaluation Stage | Evaluation Method | Evaluation Definition |
|---|---|---|
| Evaluated on Input | Evaluated using LLM | Topic relevancy assesses whether each prompt-response pair remains aligned with the intended subject matter of the Large Language Model (LLM) application. For instance, an e-commerce chatbot receiving a question about a pizza recipe would be flagged as irrelevant. |

You can provide topics for this evaluation.

- Go to LLM Observability > Applications.

- Select the application you want to add topics for.

- At the right corner of the top panel, select Settings.

- Beside Topic Relevancy, click Configure Evaluation.

- Click the Edit Evaluations icon for Topic Relevancy.

- Add topics on the configuration page.

Topics can contain multiple words and should be as specific and descriptive as possible. For example, for an LLM application that was designed for incident management, add “observability”, “software engineering”, or “incident resolution”. If your application handles customer inquiries for an e-commerce store, you can use “Customer questions about purchasing furniture on an e-commerce store”.

Hallucination

This check identifies instances where the LLM makes a claim that disagrees with the provided input context.

| Evaluation Stage | Evaluation Method | Evaluation Definition |
|---|---|---|
| Evaluated on Output | Evaluated using LLM | Hallucination flags any output that disagrees with the context provided to the LLM. |

Instrumentation

You can use Prompt Tracking annotations to track your prompts and set them up for hallucination configuration. Annotate your LLM spans with the user query and context so hallucination detection can evaluate model outputs against the retrieved data.

```python
from ddtrace.llmobs import LLMObs
from ddtrace.llmobs.decorators import llm
from ddtrace.llmobs.types import Prompt

# user_question, article, and oai_client are defined by your application.

# If your LLM call is auto-instrumented...
with LLMObs.annotation_context(
    prompt=Prompt(
        id="generate_answer_prompt",
        template="Generate an answer to this question :{user_question}. Only answer based on the information from this article : {article}",
        variables={"user_question": user_question, "article": article},
        rag_query_variables=["user_question"],
        rag_context_variables=["article"],
    ),
    name="generate_answer",
):
    oai_client.chat.completions.create(...)  # auto-instrumented LLM call

# If your LLM call is manually instrumented...
@llm(name="generate_answer")
def generate_answer(user_question, article):
    ...
    LLMObs.annotate(
        prompt=Prompt(
            id="generate_answer_prompt",
            template="Generate an answer to this question :{user_question}. Only answer based on the information from this article : {article}",
            variables={"user_question": user_question, "article": article},
            rag_query_variables=["user_question"],
            rag_context_variables=["article"],
        ),
    )
```

The variables dictionary should contain the key-value pairs your app uses to construct the LLM input prompt (for example, the messages for an OpenAI chat completion request). Use rag_query_variables and rag_context_variables to specify which variables represent the user query and which represent the retrieval context. Each accepts a list, to account for cases where multiple variables make up the context (for example, multiple articles retrieved from a knowledge base).

Hallucination detection does not run if the RAG query, the RAG context, or the span output is empty.
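As a rough illustration of the multi-variable case and of the empty-input rule, the sketch below builds the prompt as a plain dictionary with the same keys as the `Prompt` example above and checks the three conditions locally. The helper `hallucination_inputs_present` and the variable names `article_1` and `article_2` are hypothetical and not part of the SDK; the managed evaluation performs its own check, which may differ in detail.

```python
def hallucination_inputs_present(prompt: dict, span_output: str) -> bool:
    """Return True when the query, the context, and the output are all non-empty."""
    variables = prompt.get("variables", {})

    def joined(names):
        # Concatenate the values of the named variables, ignoring missing ones.
        return "".join(str(variables.get(name, "")) for name in names).strip()

    query = joined(prompt.get("rag_query_variables", []))
    context = joined(prompt.get("rag_context_variables", []))
    return bool(query) and bool(context) and bool(span_output.strip())


prompt = {
    "id": "generate_answer_prompt",
    "template": "Question: {user_question}. Sources: {article_1} {article_2}",
    "variables": {
        "user_question": "What is the return policy?",
        "article_1": "Returns are accepted within 30 days.",
        "article_2": "Refunds are issued to the original payment method.",
    },
    "rag_query_variables": ["user_question"],
    # Several variables can make up the retrieval context.
    "rag_context_variables": ["article_1", "article_2"],
}

print(hallucination_inputs_present(prompt, "You can return items within 30 days."))
print(hallucination_inputs_present(prompt, ""))
```

Running a check like this before annotating a span can help explain why some spans carry no hallucination result.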

Prompt Tracking is available in the Python SDK starting from version 3.15. It also requires a prompt ID and a template so that your prompt versions can be monitored and tracked. You can find more examples of prompt tracking and instrumentation in the SDK documentation.

Hallucination configuration

Hallucination detection is only available for OpenAI.

Hallucination detection makes a distinction between two types of hallucinations, which can be configured when Hallucination is enabled.

| Configuration Option | Description |
|---|---|
| Contradiction | Claims made in the LLM-generated response that go directly against the provided context |
| Unsupported Claim | Claims made in the LLM-generated response that are not grounded in the context |

Contradictions are always detected, while Unsupported Claims can be optionally included. For sensitive use cases, we recommend including Unsupported Claims.

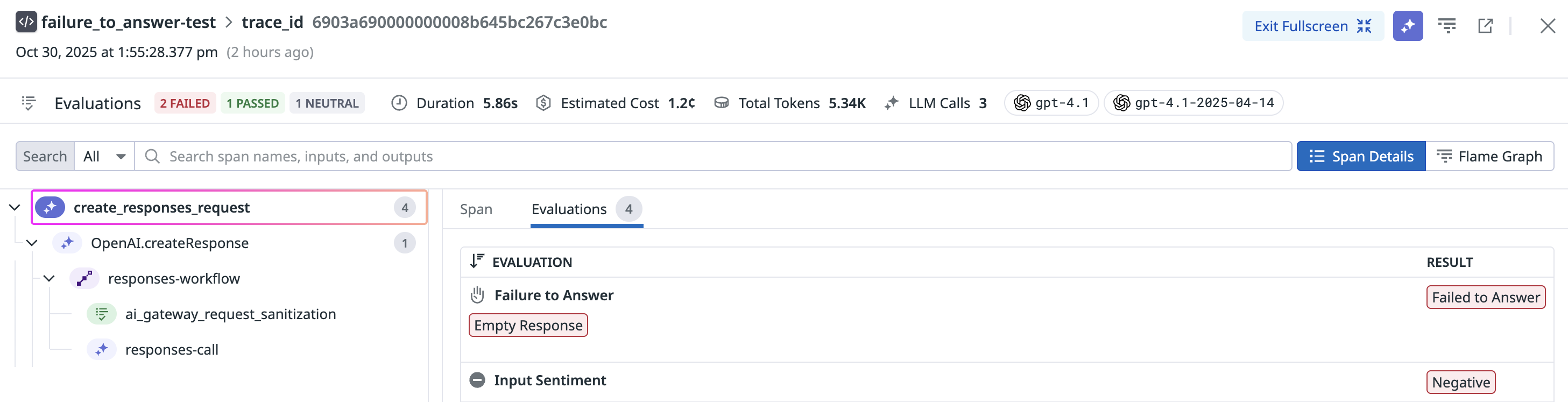

Failure to Answer

This check identifies instances where the LLM fails to deliver an appropriate response, which may occur due to limitations in the LLM’s knowledge or understanding, ambiguity in the user query, or the complexity of the topic.

| Evaluation Stage | Evaluation Method | Evaluation Definition |
|---|---|---|
| Evaluated on Output | Evaluated using LLM | Failure To Answer flags whether each prompt-response pair demonstrates that the LLM application has provided a relevant and satisfactory answer to the user’s question. |

Failure to Answer Configuration

Configuring failure to answer evaluation categories is supported if OpenAI or Azure OpenAI is selected as your LLM provider.

You can configure the Failure to Answer evaluation to use specific categories of failure to answer, listed in the following table.

| Configuration Option | Description | Example(s) |
|---|---|---|
| Empty Code Response | An empty code object, like an empty list or tuple, signifying no data or results | `()`, `[]`, `{}`, `""`, `''` |
| Empty Response | No meaningful response, returning only whitespace | whitespace |
| No Content Response | An empty output accompanied by a message indicating no content is available | Not found, N/A |
| Redirection Response | Redirects the user to another source or suggests an alternative approach | If you have additional details, I’d be happy to include them |
| Refusal Response | Explicitly declines to provide an answer or to complete the request | Sorry, I can’t answer this question |
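To make the two purely structural categories concrete, here is a minimal local sketch that recognizes whitespace-only output and empty code literals. It is only an illustration: the managed evaluation is performed by an LLM, the function `precheck_failure_category` is hypothetical, and the remaining categories (no content, redirection, refusal) need language understanding that a string comparison cannot provide.

```python
from typing import Optional

# Literals taken from the "Empty Code Response" examples in the table above.
EMPTY_CODE_LITERALS = {"()", "[]", "{}", '""', "''"}


def precheck_failure_category(output: str) -> Optional[str]:
    """Return a category name for the two structural cases, otherwise None."""
    stripped = output.strip()
    if stripped == "":
        # Nothing but whitespace was returned.
        return "Empty Response"
    if stripped in EMPTY_CODE_LITERALS:
        # The whole output is an empty tuple, list, dict, or string literal.
        return "Empty Code Response"
    return None


print(precheck_failure_category("   "))
print(precheck_failure_category("[]"))
print(precheck_failure_category("Sorry, I can't answer this question"))
```

A `None` result only means the output is not structurally empty; it may still be a refusal or a redirection.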

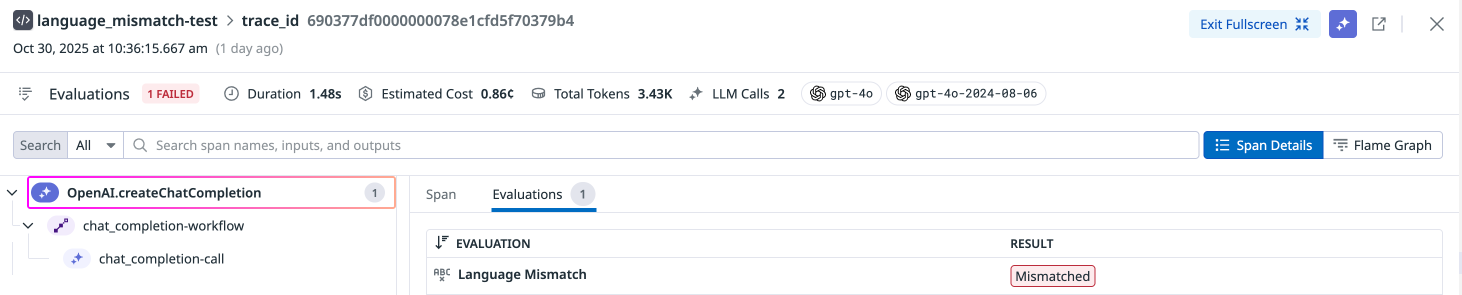

Language Mismatch

This check identifies instances where the LLM generates responses in a different language or dialect than the one used by the user, which can lead to confusion or miscommunication. This check ensures that the LLM’s responses are clear, relevant, and appropriate for the user’s linguistic preferences and needs.

Language mismatch is only supported for natural language prompts. Input and output pairs that mainly consist of structured data such as JSON, code snippets, or special characters are not flagged as a language mismatch.

Supported languages

Afrikaans, Albanian, Arabic, Armenian, Azerbaijani, Belarusian, Bengali, Norwegian Bokmal, Bosnian, Bulgarian, Chinese, Croatian, Czech, Danish, Dutch, English, Estonian, Finnish, French, Georgian, German, Greek, Gujarati, Hebrew, Hindi, Hungarian, Icelandic, Indonesian, Irish, Italian, Japanese, Kazakh, Korean, Latvian, Lithuanian, Macedonian, Malay, Marathi, Mongolian, Norwegian Nynorsk, Persian, Polish, Portuguese, Punjabi, Romanian, Russian, Serbian, Slovak, Slovene, Spanish, Swahili, Swedish, Tamil, Telugu, Thai, Turkish, Ukrainian, Urdu, Vietnamese, Yoruba, Zulu

| Evaluation Stage | Evaluation Method | Evaluation Definition |
|---|---|---|
| Evaluated on Input and Output | Evaluated using Open Source Model | Language Mismatch flags whether each prompt-response pair demonstrates that the LLM application answered the user’s question in the same language that the user used. |

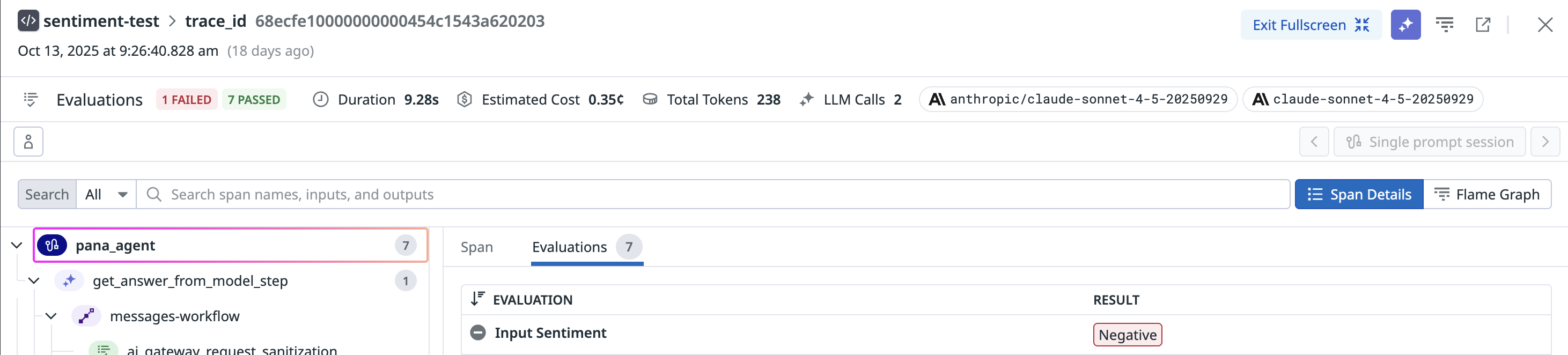

Sentiment

This check helps understand the overall mood of the conversation, gauge user satisfaction, identify sentiment trends, and interpret emotional responses. This check accurately classifies the sentiment of the text, providing insights to improve user experiences and tailor responses to better meet user needs.

| Evaluation Stage | Evaluation Method | Evaluation Definition |
|---|---|---|
| Evaluated on Input and Output | Evaluated using LLM | Sentiment flags the emotional tone or attitude expressed in the text, categorizing it as positive, negative, or neutral. |