Annotation Queues

Overview

Annotation Queues are in Preview. To request access, contact ml-observability-product@datadoghq.com.

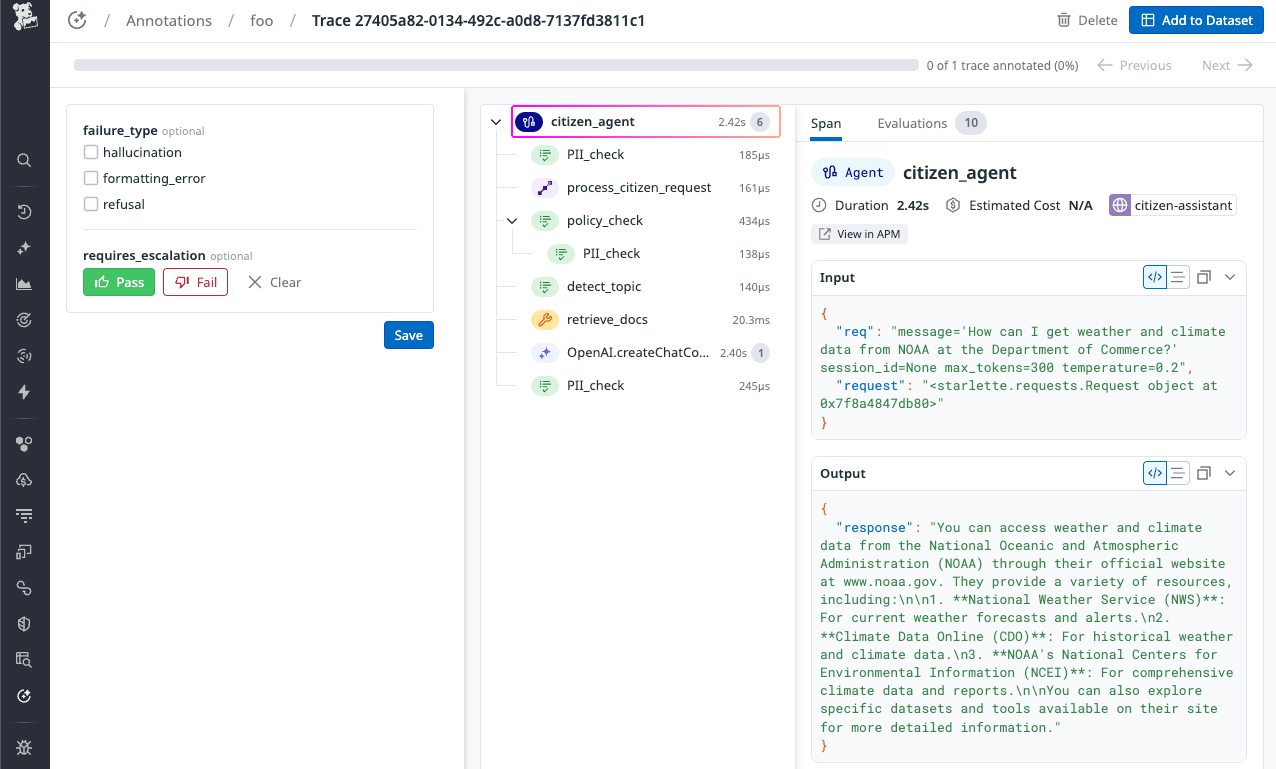

Annotation Queues provide a structured workflow for human review of LLM traces. Use annotation queues to:

- Review traces with complete context including spans, metadata, tool calls, inputs, outputs, and evaluation results

- Apply structured labels and free-form observations to traces

- Identify and categorize failure patterns

- Validate LLM-as-a-Judge evaluation accuracy

- Build golden datasets with human-verified labels for testing and validation

Create and use an annotation queue

Navigate to AI Observability > Experiment > Annotations and select your project.

Click Create Queue.

On the About tab, configure:

- Name: A descriptive name that reflects the queue’s purpose (for example, “Failed Evaluations Review - Q1 2026”)

- Project: The LLM Observability project this queue belongs to

- Description (optional): The queue’s purpose and any special instructions for annotators

Then click Next.

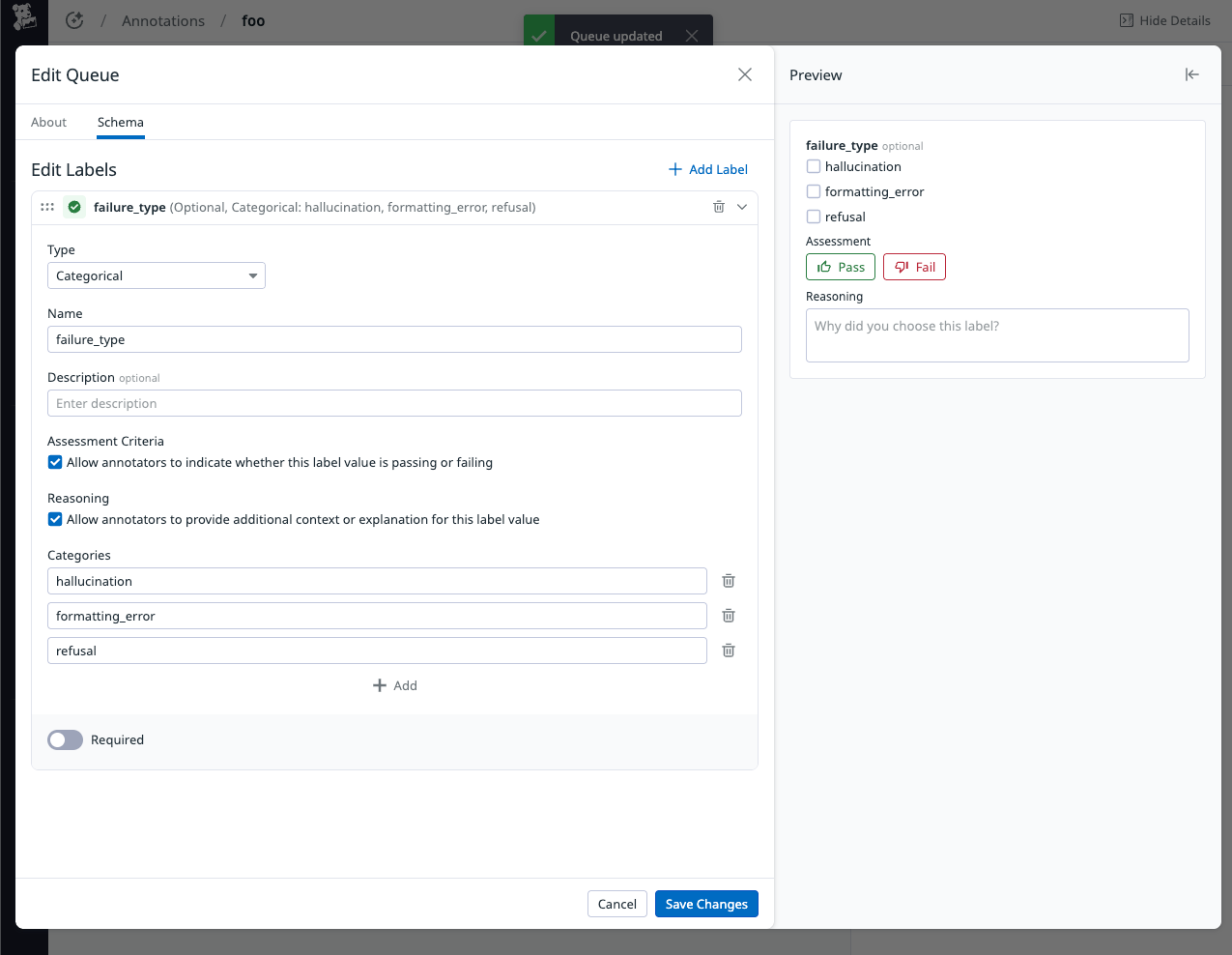

On the Schema tab, define your new queue’s label schema. Use the Preview pane to see how labels appear to annotators as you configure them.

Then click Create.

Add interactions (traces) to the queue:

- Navigate to AI Observability > Traces.

- Filter traces using available facets (evaluation results, error status, application, time range).

- Click an individual trace, or bulk-select multiple traces.

- Click Flag for Annotation.

- Select your queue from the drop-down.

Return to the annotation queue you created, and click Review to begin annotating.
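The label schema defined on the Schema tab constrains what annotators can record. As a rough mental model only, the Python sketch below represents a schema as a list of label definitions and checks one annotation against it. The `LabelDef` class, the `validate` function, the label kinds, and the example label names (`failure_type`, `score`, `pass`, `notes`) are hypothetical illustrations based on the label types mentioned on this page (categorical labels, numeric scores, pass/fail ratings, free-form notes); they are not Datadog's schema format or API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class LabelDef:
    """One label in a hypothetical queue schema (not Datadog's actual format)."""

    name: str
    kind: str  # "categorical", "numeric", "boolean", or "text"
    categories: tuple = ()  # allowed values for categorical labels
    min_value: Optional[float] = None  # inclusive bounds for numeric labels
    max_value: Optional[float] = None


def validate(schema: list, annotation: dict) -> list:
    """Return a list of problems; an empty list means the annotation fits the schema."""
    defs = {d.name: d for d in schema}
    errors = []
    for name, value in annotation.items():
        d = defs.get(name)
        if d is None:
            errors.append(f"unknown label: {name}")
        elif d.kind == "categorical" and value not in d.categories:
            errors.append(f"{name}: {value!r} is not one of {d.categories}")
        elif d.kind == "numeric":
            # bool is a subclass of int in Python, so reject it explicitly.
            if isinstance(value, bool) or not isinstance(value, (int, float)):
                errors.append(f"{name}: expected a number")
            elif (d.min_value is not None and value < d.min_value) or (
                d.max_value is not None and value > d.max_value
            ):
                errors.append(f"{name}: {value} is outside [{d.min_value}, {d.max_value}]")
        elif d.kind == "boolean" and not isinstance(value, bool):
            errors.append(f"{name}: expected true or false")
        elif d.kind == "text" and not isinstance(value, str):
            errors.append(f"{name}: expected free-form text")
    return errors


# Hypothetical schema mirroring the label kinds mentioned on this page.
schema = [
    LabelDef("failure_type", "categorical",
             categories=("hallucination", "formatting", "inappropriate_refusal")),
    LabelDef("score", "numeric", min_value=0, max_value=10),
    LabelDef("pass", "boolean"),
    LabelDef("notes", "text"),
]

print(validate(schema, {"failure_type": "formatting", "score": 7, "pass": False, "notes": "Broken table"}))  # []
print(validate(schema, {"failure_type": "typo", "score": 12}))
```

The second call reports two problems: an unlisted category and a score outside the 0-10 range.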

Managing queues

Editing queue schema

You can modify a queue’s label schema after creation:

- Navigate to AI Observability > Experiment > Annotations

- Open the queue

- If the Details panel is hidden, click View Details

- Click Edit

- Add, remove, or modify labels

- Click Save Changes

Changing the schema doesn't affect already-applied labels, but annotators will see the updated schema going forward.

Exporting annotated data

Export annotated traces for analysis or use in other workflows:

- Navigate to AI Observability > Experiment > Annotations

- Open the queue

- Select traces (or select all)

- Click Export

Adding to datasets

Transfer annotated traces to datasets for experiment evaluation:

- Navigate to AI Observability > Experiment > Annotations

- Open the queue

- Select traces to transfer

- Click Add to Dataset

- Choose an existing dataset, or create a new one

Labels are included with each trace as metadata.

See Datasets for more information about using datasets in experiments.

Deleting queues

To delete a queue:

- Navigate to AI Observability > Experiment > Annotations

- Open the queue

- Click Delete in the Details panel

Deleting a queue removes the queue and label associations, but does not delete the underlying traces from LLM Observability. Traces remain accessible in Trace Explorer.

Data retention

| Data | Retention period |
|---|---|
| Traces in queues | 15 days |
| Annotation labels | Indefinite |
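Because traces in a queue are retained for 15 days while labels are kept indefinitely, it can help to know which queued traces to finish reviewing, export, or add to a dataset first. The sketch below assumes, without confirmation from this page, that the 15-day window starts when a trace is added to the queue. `trace_expiry`, `expiring_soon`, and the trace IDs are hypothetical.

```python
from datetime import datetime, timedelta, timezone

# Retention for traces held in a queue, from the table above.
QUEUE_TRACE_RETENTION = timedelta(days=15)


def trace_expiry(added_at: datetime) -> datetime:
    """Estimated time a queued trace ages out.

    ASSUMPTION: the 15-day window is counted from when the trace was added
    to the queue; this page does not say which timestamp starts the clock.
    """
    return added_at + QUEUE_TRACE_RETENTION


def expiring_soon(added: dict, now: datetime, within: timedelta) -> list:
    """IDs of queued traces that expire between `now` and `now + within`, soonest first."""
    due = [
        (trace_expiry(added_at), trace_id)
        for trace_id, added_at in added.items()
        if now <= trace_expiry(added_at) <= now + within
    ]
    return [trace_id for _, trace_id in sorted(due)]


now = datetime(2026, 1, 20, tzinfo=timezone.utc)
added = {
    "trace-a": datetime(2026, 1, 6, tzinfo=timezone.utc),   # expires 2026-01-21
    "trace-b": datetime(2026, 1, 18, tzinfo=timezone.utc),  # expires 2026-02-02
}
print(expiring_soon(added, now, timedelta(days=2)))  # ['trace-a']
```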

Best practices for annotation

Be consistent:

- Review the queue description and label definitions before starting

- When multiple annotators work on the same queue, establish a shared understanding of the criteria

- Document reasoning in notes for borderline cases
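One common way to check whether multiple annotators share an understanding of the criteria is an inter-annotator agreement statistic such as Cohen's kappa, which corrects raw agreement for agreement expected by chance. This is not a Datadog feature; the sketch assumes you already have two annotators' labels for the same traces (for example, from an export), in the same order. The function and example labels are hypothetical.

```python
from collections import Counter


def cohens_kappa(a: list, b: list) -> float:
    """Cohen's kappa for two annotators who labeled the same traces, in the same order."""
    if len(a) != len(b) or not a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(a)
    # Observed agreement: share of traces where both annotators chose the same label.
    observed = sum(x == y for x, y in zip(a, b)) / n
    # Chance agreement: probability of matching if each annotator picked labels
    # at random with their own label frequencies.
    count_a, count_b = Counter(a), Counter(b)
    expected = sum(count_a[k] * count_b[k] for k in count_a.keys() | count_b.keys()) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label for every trace
    return (observed - expected) / (1 - expected)


annotator_1 = ["pass", "pass", "fail", "fail", "pass", "fail"]
annotator_2 = ["pass", "fail", "fail", "fail", "pass", "pass"]
print(round(cohens_kappa(annotator_1, annotator_2), 3))  # 0.333
```

Values near 1 indicate agreement well beyond chance; values near 0 suggest annotators are reading the criteria differently and the queue description or label definitions may need clarifying.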

Provide reasoning:

- Use free-form notes to document why you applied specific labels

- Note patterns you observe across multiple traces

- Recorded reasoning helps refine evaluation criteria and clarify failure modes

Example workflows

Error analysis and failure mode discovery

Review failed traces to identify recurring patterns and categorize how your application fails in production.

- Filter traces in Trace Explorer for failed evaluations or specific error patterns

- Manually select traces and add them to an annotation queue

- Annotators review traces and document failure types in free-form notes

- Common patterns emerge: hallucinations in specific contexts, formatting issues, inappropriate refusals

- Create categorical labels for the identified failure modes and re-label the traces with them

- Use the resulting failure mode distribution to prioritize fixes
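Once traces carry a categorical failure label, the distribution is a simple count. The sketch below assumes annotations are available as dicts with hypothetical keys `pass` and `failure_type` (for example, after exporting a queue); neither the shape nor the function is a Datadog API.

```python
from collections import Counter


def failure_mode_distribution(annotations: list) -> list:
    """Count failure_type labels among failing traces, most frequent first.

    Each annotation is a dict with hypothetical keys "pass" (bool) and
    "failure_type" (str or None). A missing "pass" is treated as passing.
    Returns (failure_type, count, share_of_failures) tuples.
    """
    failures = [
        a["failure_type"]
        for a in annotations
        if not a.get("pass", True) and a.get("failure_type")
    ]
    total = len(failures)
    # most_common() sorts by count; ties keep first-seen order.
    return [(mode, count, count / total) for mode, count in Counter(failures).most_common()]


annotations = [
    {"pass": False, "failure_type": "hallucination"},
    {"pass": False, "failure_type": "formatting"},
    {"pass": True, "failure_type": None},
    {"pass": False, "failure_type": "hallucination"},
    {"pass": False, "failure_type": "inappropriate_refusal"},
]
for mode, count, share in failure_mode_distribution(annotations):
    print(f"{mode:<22} {count} ({share:.0%})")
```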

Queue configuration

Labels: Free-form notes, categorical `failure_type` label, pass/fail rating

Annotators: Product managers, engineers, domain experts

Validating LLM-as-a-Judge evaluations

Find traces where automated evaluators may be uncertain or incorrect, then have humans provide ground truth.

- Sample evaluation results: either all results, or only those at a given score or threshold

- Add selected traces to an annotation queue

- Annotators review traces and provide human scores for the same criteria

- Compare human labels to automated evaluation scores

- Identify systematic disagreements (judge too strict, too lenient, or misunderstanding criteria)

- Refine evaluation prompts based on disagreements
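The sampling and comparison steps above can be sketched in a few lines. This assumes judge and human scores share the 0-10 scale from the queue configuration below and that higher scores mean better responses; the functions, the `tolerance` and `band` values, and the trace IDs are hypothetical, not part of Datadog's product.

```python
from statistics import mean


def near_threshold(judge_scores: dict, threshold: float, band: float) -> list:
    """Trace IDs whose judge score is within `band` of `threshold`,
    where the judge is more likely to be uncertain."""
    return sorted(tid for tid, score in judge_scores.items() if abs(score - threshold) <= band)


def compare_judge_to_humans(pairs: list, tolerance: float = 1.0) -> dict:
    """Summarize judge vs. human scores on the same 0-10 scale.

    pairs: (judge_score, human_score) for each reviewed trace.
    tolerance: largest absolute gap still counted as agreement (hypothetical choice).
    """
    diffs = [judge - human for judge, human in pairs]
    return {
        "agreement_rate": sum(abs(d) <= tolerance for d in diffs) / len(diffs),
        # Positive bias: the judge scores higher than humans (too lenient).
        # Negative bias: the judge scores lower than humans (too strict).
        "mean_bias": mean(diffs),
        "mean_abs_error": mean(abs(d) for d in diffs),
    }


judge_scores = {"t1": 6.5, "t2": 9.0, "t3": 7.2, "t4": 2.0}
print(near_threshold(judge_scores, threshold=7.0, band=1.0))  # ['t1', 't3']

pairs = [(8.0, 6.0), (9.0, 7.0), (5.0, 5.0), (7.0, 4.0)]
print(compare_judge_to_humans(pairs))
```

In this made-up sample, the judge scores 1.75 points higher than humans on average, a systematic disagreement that would suggest the judge is too lenient.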

Queue configuration

Labels: Numeric scores matching evaluation criteria (0-10), categorical `judge_accuracy` label, reasoning notes

Annotators: Subject matter experts who understand evaluation criteria

Golden dataset creation

Build benchmark datasets with human-verified labels for regression testing and continuous validation.

- Sample diverse production traces from Trace Explorer (both good and bad examples)

- Add traces to an annotation queue

- Annotators review and label traces across multiple quality dimensions

- Add high-confidence, well-labeled examples to the golden dataset

- Use the dataset for CI/CD regression testing of prompt changes

- Continuously expand the dataset with new edge cases
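One way to decide which examples count as "high-confidence" is to require that independent annotators agree. The sketch below keeps only traces with unanimous labels and attaches those labels as metadata, echoing how labels travel with traces added to a dataset. The input shape, the `min_annotators` cutoff, the record layout, and `golden_candidates` itself are hypothetical, not Datadog's dataset format.

```python
def golden_candidates(labels_by_trace: dict, min_annotators: int = 2) -> list:
    """Keep traces on which all annotators agree, as dataset-style records.

    labels_by_trace maps a trace ID to one label dict per annotator, with
    hypothetical keys "pass" (bool) and optional "failure_type" (str).
    """
    records = []
    for trace_id, labels in sorted(labels_by_trace.items()):
        if len(labels) < min_annotators:
            continue  # too few independent reviews to treat as high-confidence
        verdicts = {(lab["pass"], lab.get("failure_type")) for lab in labels}
        if len(verdicts) == 1:  # every annotator gave the same verdict
            passed, failure_type = verdicts.pop()
            records.append({
                "trace_id": trace_id,
                "metadata": {"pass": passed, "failure_type": failure_type,
                             "annotators": len(labels)},
            })
    return records


labels_by_trace = {
    "t1": [{"pass": True}, {"pass": True}],
    "t2": [{"pass": False, "failure_type": "formatting"},
           {"pass": False, "failure_type": "hallucination"}],  # disagreement
    "t3": [{"pass": False, "failure_type": "formatting"}],      # single review
    "t4": [{"pass": False, "failure_type": "formatting"},
           {"pass": False, "failure_type": "formatting"}],
}
print([r["trace_id"] for r in golden_candidates(labels_by_trace)])  # ['t1', 't4']
```

Traces that annotators disagree on (like `t2`) are often worth a second look as edge cases rather than being discarded.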

Queue configuration

Labels: Multiple categorical labels covering quality dimensions, numeric scores, pass/fail rating, notes

Annotators: Team of domain experts for consistency
