Hugging Face TGI

Integration version: 1.4.0

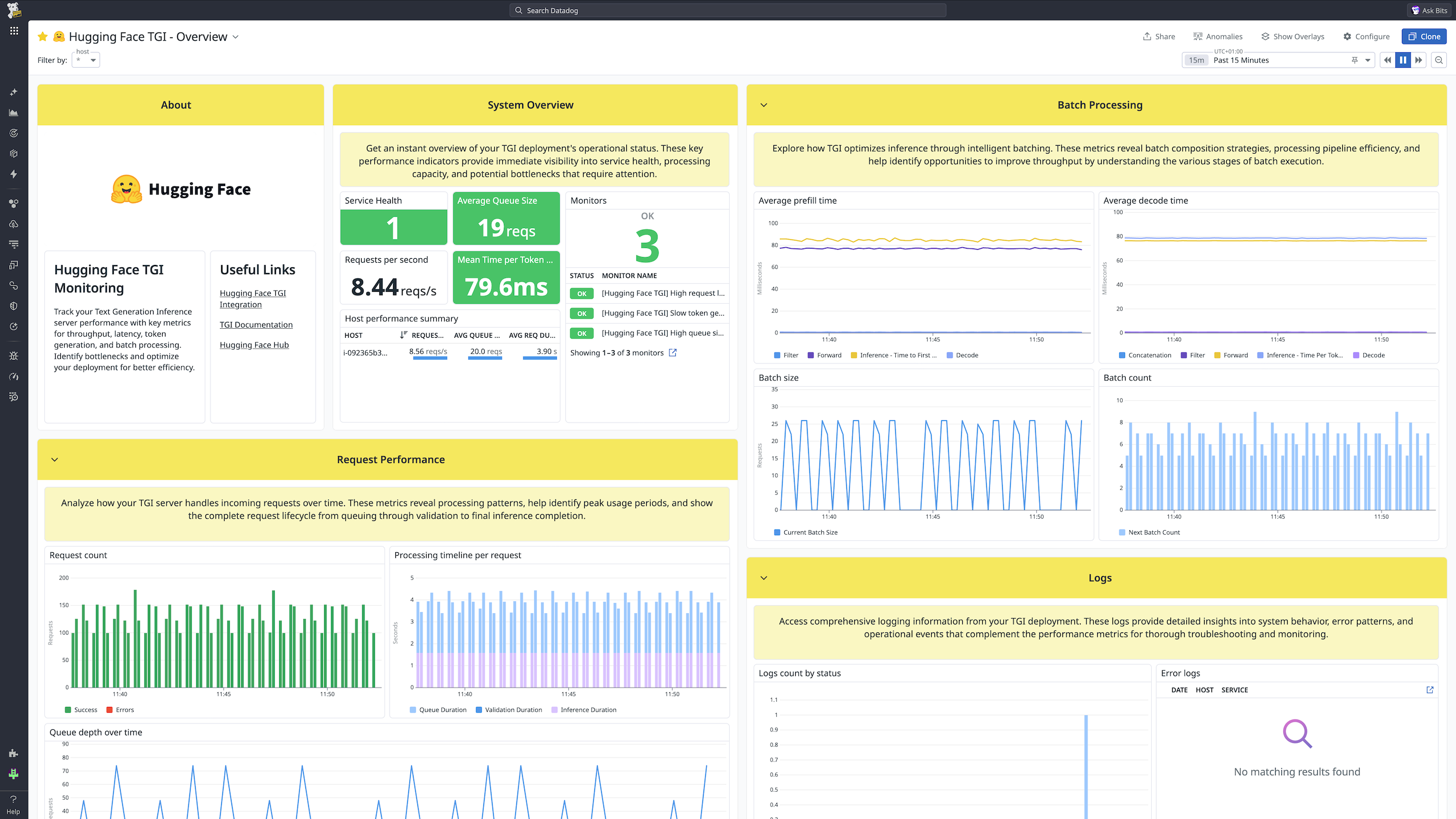

Hugging Face TGI - Overview

Esta página aún no está disponible en español. Estamos trabajando en su traducción.

Si tienes alguna pregunta o comentario sobre nuestro actual proyecto de traducción, no dudes en ponerte en contacto con nosotros.

Si tienes alguna pregunta o comentario sobre nuestro actual proyecto de traducción, no dudes en ponerte en contacto con nosotros.

Overview

This check monitors Hugging Face Text Generation Inference (TGI) through the Datadog Agent. TGI is a library for deploying and serving large language models (LLMs) optimized for text generation. It provides features such as continuous batching, tensor parallelism, token streaming, and optimizations for production use.

The integration monitors your TGI servers by collecting:

- Request performance metrics, including latency, throughput, and token generation rates

- Batch processing metrics for inference optimization insights

- Queue depth and request flow monitoring

- Model serving health and operational metrics

This enables teams to optimize LLM inference performance, track resource utilization, troubleshoot bottlenecks, and ensure reliable model serving at scale.

Minimum Agent version: 7.70.1

Setup

Follow these instructions to install and configure this check for an Agent running on a host. For containerized environments, see the Autodiscovery Integration Templates for guidance on applying these instructions.

Installation

The Hugging Face TGI check is included in the Datadog Agent package. No additional installation is needed on your server.

Configuration

Metrics

Ensure that your TGI server exposes Prometheus metrics on the default /metrics endpoint. For more information, see the TGI monitoring documentation.

Edit the hugging_face_tgi.d/conf.yaml file, located in the conf.d/ folder at the root of your Agent’s configuration directory, to start collecting Hugging Face TGI performance data. See the sample configuration file for all available options.

```yaml
instances:
  - openmetrics_endpoint: http://localhost:80/metrics
```
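Before enabling the check, it can help to confirm that the endpoint actually serves TGI metrics from the machine where the Agent runs. The following is a minimal Python sketch using only the standard library. It assumes the endpoint http://localhost:80/metrics from the example configuration and assumes that TGI prefixes its Prometheus metric names with tgi_; verify that prefix against your own server's output. The function names are hypothetical.

```python
import urllib.request


def metric_families(exposition: str, prefix: str = "tgi_") -> set[str]:
    """Return metric names in Prometheus text exposition that start with prefix.

    The "tgi_" default is an assumption about TGI's metric naming.
    """
    names = set()
    for line in exposition.splitlines():
        line = line.strip()
        # Skip blank lines and "# HELP" / "# TYPE" comment lines.
        if not line or line.startswith("#"):
            continue
        # The metric name ends at the first label brace or whitespace.
        name = line.split("{", 1)[0].split()[0]
        if name.startswith(prefix):
            names.add(name)
    return names


def fetch_metrics(url: str = "http://localhost:80/metrics", timeout: float = 5.0) -> str:
    """Fetch the raw metrics text; raises URLError if the endpoint is unreachable."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8")
```

On a host that can reach the TGI server, print(sorted(metric_families(fetch_metrics()))) lists the metric names the check would scrape. An empty result or a connection error suggests the endpoint URL in conf.yaml needs adjusting.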

Logs

The Hugging Face TGI integration can collect logs from the server container and forward them to Datadog. For Datadog to parse the logs correctly, start the TGI server container with the environment variable NO_COLOR=1 and the --json-output option. After changing these settings, restart the server to enable log ingestion.
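After restarting the server, one way to spot-check its output is to confirm that each log line is a JSON object and contains no ANSI color codes. A minimal Python sketch follows; the helper name is hypothetical, and the field names in the sample line used for testing (timestamp, level, fields, target) are an assumption based on typical structured tracing output rather than a documented TGI schema.

```python
import json
import re

# ANSI color escape sequences (for example "\x1b[32m") that appear when NO_COLOR is not set.
ANSI_ESCAPE = re.compile(r"\x1B\[[0-9;]*m")


def is_parseable_log_line(line: str) -> bool:
    """Return True if line is a JSON object containing no ANSI color codes."""
    if ANSI_ESCAPE.search(line):
        return False
    try:
        record = json.loads(line)
    except json.JSONDecodeError:
        return False
    # A JSON array or bare string is valid JSON but not a structured log record.
    return isinstance(record, dict)
```

Running this over a few lines copied from the container's output gives a quick signal that both NO_COLOR=1 and --json-output took effect.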

For an Agent running on a host:

Collecting logs is disabled by default in the Datadog Agent. Enable it in your datadog.yaml file:

```yaml
logs_enabled: true
```

Uncomment and edit the logs configuration block in your hugging_face_tgi.d/conf.yaml file. Here’s an example:

```yaml
logs:
  - type: docker
    source: hugging_face_tgi
    service: text-generation-inference
    auto_multi_line_detection: true
```

In Kubernetes, collecting logs is also disabled by default in the Datadog Agent. To enable it, see Kubernetes Log Collection.

Then, set Log Integrations as pod annotations. This can also be configured with a file, a configmap, or a key-value store. For more information, see the configuration section of Kubernetes Log Collection.
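The pod annotation value is a JSON list of log configurations. The following Python sketch builds one, assuming a container named tgi (hypothetical; use the container name from your pod spec) and the same source and service values as the host example. The ad.datadoghq.com/&lt;container&gt;.logs key follows Datadog's Autodiscovery annotation convention; the helper function itself is illustrative only.

```python
import json


def logs_annotation(container: str, source: str = "hugging_face_tgi",
                    service: str = "text-generation-inference") -> dict[str, str]:
    """Build an Autodiscovery logs annotation for a single container."""
    config = [{"source": source, "service": service}]
    # The annotation key embeds the container name; the value is a JSON string.
    return {f"ad.datadoghq.com/{container}.logs": json.dumps(config)}


# "tgi" is a hypothetical container name.
for key, value in logs_annotation("tgi").items():
    print(f"{key}: '{value}'")
```

The printed line can be pasted under metadata.annotations in the pod template. Generating the value with json.dumps avoids hand-quoting mistakes in the embedded JSON.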

Validation

Run the Agent’s status subcommand and look for hugging_face_tgi under the Checks section.

Data Collected

Metrics

| Metric | Type | Description | Unit |
| --- | --- | --- | --- |
| hugging_face_tgi.batch.concat.count | count | Number of batch concatenations | |
| hugging_face_tgi.batch.concat.duration.bucket | count | Batch concatenation duration distribution | |
| hugging_face_tgi.batch.concat.duration.count | count | Number of batch concatenation duration measurements | |
| hugging_face_tgi.batch.concat.duration.sum | count | Total batch concatenation duration | second |
| hugging_face_tgi.batch.current.max_tokens | gauge | Maximum number of tokens the current batch will grow to | token |
| hugging_face_tgi.batch.current.size | gauge | Current batch size | request |
| hugging_face_tgi.batch.decode.duration.bucket | count | Batch decode duration distribution | |
| hugging_face_tgi.batch.decode.duration.count | count | Number of batch decode duration measurements | |
| hugging_face_tgi.batch.decode.duration.sum | count | Total batch decode duration | second |
| hugging_face_tgi.batch.filter.duration.bucket | count | Batch filter duration distribution | |
| hugging_face_tgi.batch.filter.duration.count | count | Number of batch filter duration measurements | |
| hugging_face_tgi.batch.filter.duration.sum | count | Total batch filter duration | second |
| hugging_face_tgi.batch.forward.duration.bucket | count | Batch forward duration distribution | |
| hugging_face_tgi.batch.forward.duration.count | count | Number of batch forward duration measurements | |
| hugging_face_tgi.batch.forward.duration.sum | count | Total batch forward duration | second |
| hugging_face_tgi.batch.inference.count | count | Total number of batch inferences | |
| hugging_face_tgi.batch.inference.duration.bucket | count | Batch inference duration distribution | |
| hugging_face_tgi.batch.inference.duration.count | count | Number of batch inference duration measurements | |
| hugging_face_tgi.batch.inference.duration.sum | count | Total batch inference duration | second |
| hugging_face_tgi.batch.inference.success.count | count | Number of successful batch inferences | |
| hugging_face_tgi.batch.next.size.bucket | count | Next batch size distribution | |
| hugging_face_tgi.batch.next.size.count | count | Number of next batch size measurements | |
| hugging_face_tgi.batch.next.size.sum | count | Total next batch size | request |
| hugging_face_tgi.queue.size | gauge | Number of requests waiting in the internal queue | request |
| hugging_face_tgi.request.count | count | Total number of requests received | request |
| hugging_face_tgi.request.duration.bucket | count | Request duration distribution | |
| hugging_face_tgi.request.duration.count | count | Number of request duration measurements | |
| hugging_face_tgi.request.duration.sum | count | Total request duration | second |
| hugging_face_tgi.request.failure.count | count | Number of failed requests | request |
| hugging_face_tgi.request.generated_tokens.bucket | count | Distribution of generated tokens per request | |
| hugging_face_tgi.request.generated_tokens.count | count | Number of generated token measurements | |
| hugging_face_tgi.request.generated_tokens.sum | count | Total generated tokens | token |
| hugging_face_tgi.request.inference.duration.bucket | count | Request inference duration distribution | |
| hugging_face_tgi.request.inference.duration.count | count | Number of request inference duration measurements | |
| hugging_face_tgi.request.inference.duration.sum | count | Total request inference duration | second |
| hugging_face_tgi.request.input_length.bucket | count | Distribution of input token length per request | |
| hugging_face_tgi.request.input_length.count | count | Number of input length measurements | |
| hugging_face_tgi.request.input_length.sum | count | Total input length | token |
| hugging_face_tgi.request.max_new_tokens.bucket | count | Distribution of maximum new tokens per request | |
| hugging_face_tgi.request.max_new_tokens.count | count | Number of max new tokens measurements | |
| hugging_face_tgi.request.max_new_tokens.sum | count | Total max new tokens | token |
| hugging_face_tgi.request.mean_time_per_token.duration.bucket | count | Mean time per token duration distribution | |
| hugging_face_tgi.request.mean_time_per_token.duration.count | count | Number of mean time per token measurements | |
| hugging_face_tgi.request.mean_time_per_token.duration.sum | count | Total mean time per token duration | second |
| hugging_face_tgi.request.queue.duration.bucket | count | Request queue duration distribution | |
| hugging_face_tgi.request.queue.duration.count | count | Number of request queue duration measurements | |
| hugging_face_tgi.request.queue.duration.sum | count | Total request queue duration | second |
| hugging_face_tgi.request.skipped_tokens.count | count | Number of skipped token measurements | |
| hugging_face_tgi.request.skipped_tokens.quantile | gauge | Skipped tokens per request quantile | token |
| hugging_face_tgi.request.skipped_tokens.sum | count | Total skipped tokens | token |
| hugging_face_tgi.request.success.count | count | Number of successful requests | request |
| hugging_face_tgi.request.validation.duration.bucket | count | Request validation duration distribution | |
| hugging_face_tgi.request.validation.duration.count | count | Number of request validation duration measurements | |
| hugging_face_tgi.request.validation.duration.sum | count | Total request validation duration | second |

Key metrics include:

- Request metrics: Total requests, successful requests, failed requests, and request duration

- Queue metrics: Queue size and queue duration for monitoring throughput bottlenecks

- Token metrics: Generated tokens, input length, and mean time per token for performance analysis

- Batch metrics: Batch size, batch concatenation, and batch processing durations for optimization insights

- Inference metrics: Forward pass duration, decode duration, and filter duration for model performance monitoring
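Several of these key metrics are derived from the histogram series in the table. Dividing a .sum delta by the matching .count delta gives an average (for example, mean request latency from hugging_face_tgi.request.duration.sum and hugging_face_tgi.request.duration.count). Dividing hugging_face_tgi.request.failure.count by hugging_face_tgi.request.count gives an error rate. The .bucket series can approximate percentiles. The following Python sketch shows that arithmetic. It assumes you already have per-interval counter deltas and cumulative bucket counts keyed by upper bound (as with Prometheus le buckets). The function names are hypothetical, and the quantile estimate uses linear interpolation in the style of Prometheus histogram_quantile, which is not necessarily how Datadog computes percentiles.

```python
import math


def mean(sum_delta: float, count_delta: float) -> float:
    """Average over an interval, e.g. request.duration.sum / request.duration.count."""
    return sum_delta / count_delta if count_delta else 0.0


def error_rate(failures: float, total: float) -> float:
    """Fraction of failed requests, e.g. request.failure.count / request.count."""
    return failures / total if total else 0.0


def approx_quantile(q: float, buckets: list[tuple[float, float]]) -> float:
    """Estimate quantile q (0..1) from cumulative (upper_bound, count) buckets.

    Buckets must be sorted by upper bound; the last bound may be math.inf.
    The estimate interpolates linearly inside the bucket containing the target rank.
    """
    total = buckets[-1][1]
    if total == 0:
        return math.nan
    rank = q * total
    lower_bound, lower_count = 0.0, 0.0
    for upper_bound, count in buckets:
        if count >= rank:
            if math.isinf(upper_bound):
                # Values above the last finite bound cannot be interpolated.
                return lower_bound
            in_bucket = count - lower_count
            if in_bucket == 0:
                return upper_bound
            fraction = (rank - lower_count) / in_bucket
            return lower_bound + (upper_bound - lower_bound) * fraction
        lower_bound, lower_count = upper_bound, count
    return lower_bound
```

For example, with buckets [(0.1, 10), (0.5, 60), (1.0, 90), (math.inf, 100)], the median falls in the 0.1 to 0.5 second bucket and is estimated at about 0.42 seconds. Coarse bucket boundaries limit how precise such estimates can be.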

Events

The Hugging Face TGI integration does not include any events.

Service Checks

See service_checks.json for a list of service checks provided by this integration.

Troubleshooting

In containerized environments, ensure that the Agent has network access to the TGI metrics endpoint specified in hugging_face_tgi.d/conf.yaml.

To ingest non-JSON TGI logs, use the following logs configuration, which strips ANSI color codes from each line:

```yaml
logs:
  - type: docker
    source: hugging_face_tgi
    service: text-generation-inference
    auto_multi_line_detection: true
    log_processing_rules:
      - type: mask_sequences
        name: strip_ansi
        pattern: "\\x1B\\[[0-9;]*m"
        replace_placeholder: ""
```
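The mask_sequences rule replaces every match of its pattern with the replace_placeholder value, here an empty string. In the YAML double-quoted string, "\\x1B\\[[0-9;]*m" decodes to the regular expression \x1B\[[0-9;]*m, which matches the ESC character, an opening bracket, any digits or semicolons, and a final m. The following Python sketch applies the same expression to a sample colored line to preview what the rule removes. It uses Python's re module as a stand-in for the Agent's own regex engine, which is assumed to treat this simple pattern the same way. The sample line and helper name are illustrative.

```python
import re

# Same expression as the strip_ansi rule: ESC, "[", digits or semicolons, then "m".
STRIP_ANSI = re.compile(r"\x1B\[[0-9;]*m")


def strip_ansi(line: str) -> str:
    """Remove ANSI color sequences, mirroring replace_placeholder: ""."""
    return STRIP_ANSI.sub("", line)


colored = "\x1b[2m2024-01-01T00:00:00Z\x1b[0m \x1b[32m INFO\x1b[0m text_generation_router: Connected"
print(strip_ansi(colored))
```

Only the color escape sequences are removed. The surrounding text, including spaces, is left untouched.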

Need help? Contact Datadog support.