- Esenciales

- Empezando

- Agent

- API

- Rastreo de APM

- Contenedores

- Dashboards

- Monitorización de bases de datos

- Datadog

- Sitio web de Datadog

- DevSecOps

- Gestión de incidencias

- Integraciones

- Internal Developer Portal

- Logs

- Monitores

- OpenTelemetry

- Generador de perfiles

- Session Replay

- Security

- Serverless para Lambda AWS

- Software Delivery

- Monitorización Synthetic

- Etiquetas (tags)

- Workflow Automation

- Centro de aprendizaje

- Compatibilidad

- Glosario

- Atributos estándar

- Guías

- Agent

- Arquitectura

- IoT

- Plataformas compatibles

- Recopilación de logs

- Configuración

- Automatización de flotas

- Solucionar problemas

- Detección de nombres de host en contenedores

- Modo de depuración

- Flare del Agent

- Estado del check del Agent

- Problemas de NTP

- Problemas de permisos

- Problemas de integraciones

- Problemas del sitio

- Problemas de Autodiscovery

- Problemas de contenedores de Windows

- Configuración del tiempo de ejecución del Agent

- Consumo elevado de memoria o CPU

- Guías

- Seguridad de datos

- Integraciones

- Desarrolladores

- Autorización

- DogStatsD

- Checks personalizados

- Integraciones

- Build an Integration with Datadog

- Crear una integración basada en el Agent

- Crear una integración API

- Crear un pipeline de logs

- Referencia de activos de integración

- Crear una oferta de mercado

- Crear un dashboard de integración

- Create a Monitor Template

- Crear una regla de detección Cloud SIEM

- Instalar la herramienta de desarrollo de integraciones del Agente

- Checks de servicio

- Complementos de IDE

- Comunidad

- Guías

- OpenTelemetry

- Administrator's Guide

- API

- Partners

- Aplicación móvil de Datadog

- DDSQL Reference

- CoScreen

- CoTerm

- Remote Configuration

- Cloudcraft

- En la aplicación

- Dashboards

- Notebooks

- Editor DDSQL

- Reference Tables

- Hojas

- Monitores y alertas

- Watchdog

- Métricas

- Bits AI

- Internal Developer Portal

- Error Tracking

- Explorador

- Estados de problemas

- Detección de regresión

- Suspected Causes

- Error Grouping

- Bits AI Dev Agent

- Monitores

- Issue Correlation

- Identificar confirmaciones sospechosas

- Auto Assign

- Issue Team Ownership

- Rastrear errores del navegador y móviles

- Rastrear errores de backend

- Manage Data Collection

- Solucionar problemas

- Guides

- Change Tracking

- Gestión de servicios

- Objetivos de nivel de servicio (SLOs)

- Gestión de incidentes

- De guardia

- Status Pages

- Gestión de eventos

- Gestión de casos

- Actions & Remediations

- Infraestructura

- Cloudcraft

- Catálogo de recursos

- Universal Service Monitoring

- Hosts

- Contenedores

- Processes

- Serverless

- Monitorización de red

- Cloud Cost

- Rendimiento de las aplicaciones

- APM

- Términos y conceptos de APM

- Instrumentación de aplicación

- Recopilación de métricas de APM

- Configuración de pipelines de trazas

- Correlacionar trazas (traces) y otros datos de telemetría

- Trace Explorer

- Recommendations

- Code Origin for Spans

- Observabilidad del servicio

- Endpoint Observability

- Instrumentación dinámica

- Live Debugger

- Error Tracking

- Seguridad de los datos

- Guías

- Solucionar problemas

- Límites de tasa del Agent

- Métricas de APM del Agent

- Uso de recursos del Agent

- Logs correlacionados

- Stacks tecnológicos de llamada en profundidad PHP 5

- Herramienta de diagnóstico de .NET

- Cuantificación de APM

- Go Compile-Time Instrumentation

- Logs de inicio del rastreador

- Logs de depuración del rastreador

- Errores de conexión

- Continuous Profiler

- Database Monitoring

- Gastos generales de integración del Agent

- Arquitecturas de configuración

- Configuración de Postgres

- Configuración de MySQL

- Configuración de SQL Server

- Configuración de Oracle

- Configuración de MongoDB

- Setting Up Amazon DocumentDB

- Conexión de DBM y trazas

- Datos recopilados

- Explorar hosts de bases de datos

- Explorar métricas de consultas

- Explorar ejemplos de consulta

- Exploring Database Schemas

- Exploring Recommendations

- Solucionar problemas

- Guías

- Data Streams Monitoring

- Data Jobs Monitoring

- Data Observability

- Experiencia digital

- Real User Monitoring

- Pruebas y monitorización de Synthetics

- Continuous Testing

- Análisis de productos

- Entrega de software

- CI Visibility

- CD Visibility

- Deployment Gates

- Test Visibility

- Configuración

- Network Settings

- Tests en contenedores

- Repositories

- Explorador

- Monitores

- Test Health

- Flaky Test Management

- Working with Flaky Tests

- Test Impact Analysis

- Flujos de trabajo de desarrolladores

- Cobertura de código

- Instrumentar tests de navegador con RUM

- Instrumentar tests de Swift con RUM

- Correlacionar logs y tests

- Guías

- Solucionar problemas

- Code Coverage

- Quality Gates

- Métricas de DORA

- Feature Flags

- Seguridad

- Información general de seguridad

- Cloud SIEM

- Code Security

- Cloud Security Management

- Application Security Management

- Workload Protection

- Sensitive Data Scanner

- Observabilidad de la IA

- Log Management

- Observability Pipelines

- Gestión de logs

- CloudPrem

- Administración

- Gestión de cuentas

- Seguridad de los datos

- Ayuda

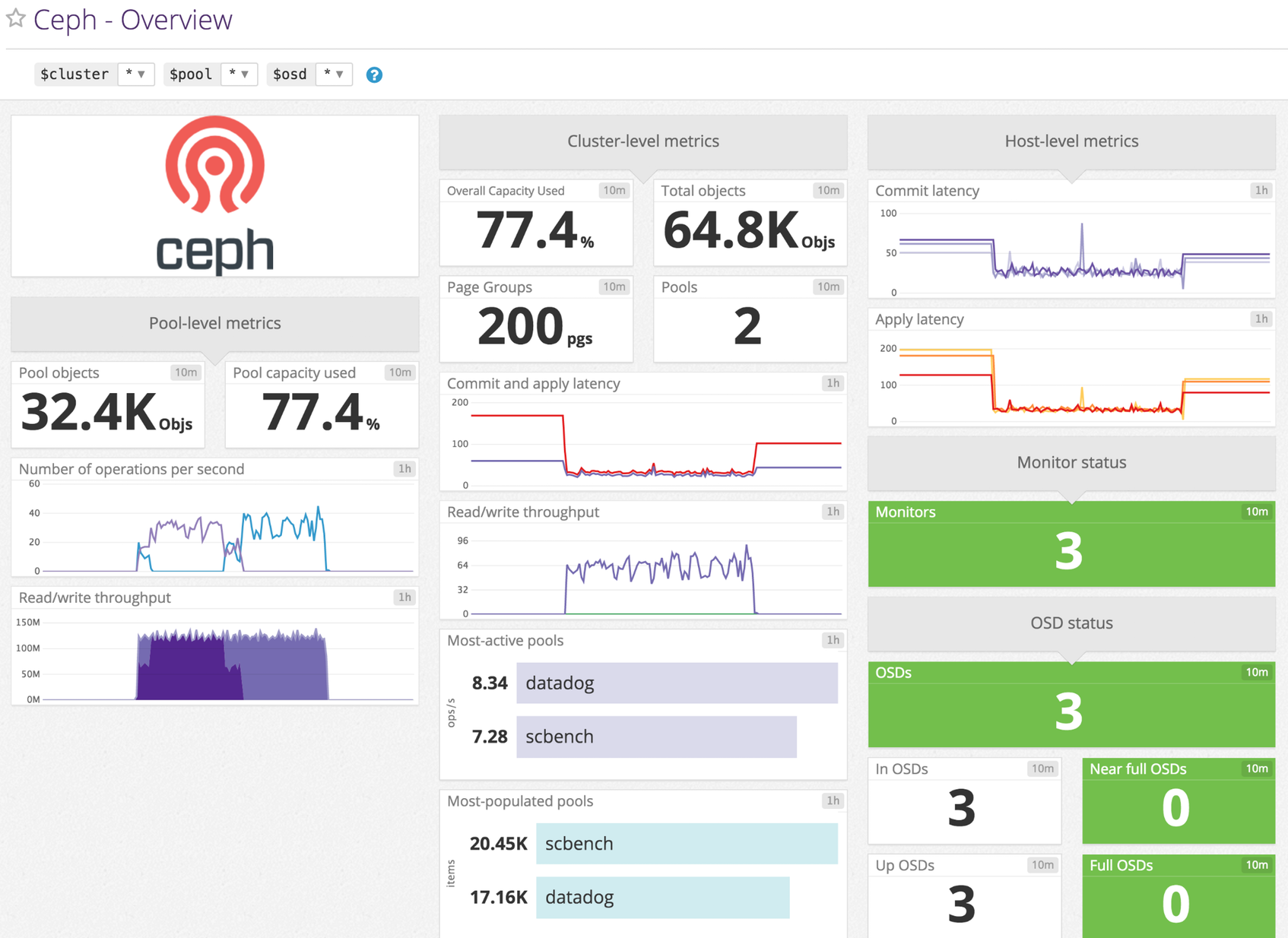

Ceph


Integration version: 4.1.0

Overview

Enable the Datadog-Ceph integration to:

- Track disk usage across storage pools

- Receive service checks in case of issues

- Monitor I/O performance metrics

Setup

Installation

The Ceph check is included in the Datadog Agent package, so you don't need to install anything else on your Ceph servers.

Configuration

Edit the `ceph.d/conf.yaml` file in the `conf.d/` folder at the root of your Agent's configuration directory. See the sample `ceph.d/conf.yaml` for all available configuration options.

```yaml
init_config:

instances:
  - ceph_cmd: /path/to/your/ceph # default is /usr/bin/ceph
    use_sudo: true # only if the ceph binary needs sudo on your nodes
```
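As a rough illustration of how the two instance options combine, the Python sketch below resolves an instance dictionary against defaults. The `/usr/bin/ceph` default comes from the comment in the configuration above; treating `use_sudo` as `False` when it is omitted, rejecting relative paths, and the helper name `resolve_instance` are assumptions made for this example, not the check's actual code.

```python
from typing import Any, Dict

# Default taken from the sample config comment; the use_sudo default is an assumption.
DEFAULT_CEPH_CMD = "/usr/bin/ceph"


def resolve_instance(instance: Dict[str, Any]) -> Dict[str, Any]:
    """Hypothetical helper: fill in defaults for one ceph.d/conf.yaml instance."""
    ceph_cmd = instance.get("ceph_cmd") or DEFAULT_CEPH_CMD
    use_sudo = bool(instance.get("use_sudo", False))
    if not ceph_cmd.startswith("/"):
        # This sketch insists on an absolute path so a sudoers rule naming
        # the full binary path can match it unambiguously.
        raise ValueError(f"ceph_cmd should be an absolute path, got {ceph_cmd!r}")
    return {"ceph_cmd": ceph_cmd, "use_sudo": use_sudo}


print(resolve_instance({}))
print(resolve_instance({"ceph_cmd": "/opt/ceph/bin/ceph", "use_sudo": True}))
```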

If you enabled `use_sudo`, add a line like the following to your `sudoers` file:

```text
dd-agent ALL=(ALL) NOPASSWD:/path/to/your/ceph
```
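The sudoers rule only helps if the command the Agent runs starts with exactly the binary path it names. The Python sketch below assumes the check invokes something like `sudo -n <ceph_cmd> <subcommand> --format json`; that invocation and the helpers `build_argv` and `sudoers_allows` are hypothetical. The matcher is deliberately simplified: it compares only the user and the binary path, relying on the sudoers rule that a command listed without arguments permits any arguments.

```python
import shlex
from typing import List


def build_argv(ceph_cmd: str, use_sudo: bool, subcommand: str) -> List[str]:
    """Hypothetical: argv for one ceph query, optionally prefixed with sudo."""
    argv = [ceph_cmd, *shlex.split(subcommand), "--format", "json"]
    if use_sudo:
        # -n makes sudo fail instead of prompting when no NOPASSWD rule applies.
        argv = ["sudo", "-n", *argv]
    return argv


def sudoers_allows(rule: str, user: str, argv: List[str]) -> bool:
    """Greatly simplified check of a 'user ALL=(ALL) NOPASSWD:/path' rule."""
    rule_user, _, spec = rule.partition(" ")
    allowed_path = spec.split("NOPASSWD:", 1)[-1].strip()
    if rule_user != user or not argv or argv[0] != "sudo":
        return False
    args = argv[1:]
    while args and args[0].startswith("-"):  # skip sudo's own options such as -n
        args = args[1:]
    return bool(args) and args[0] == allowed_path


rule = "dd-agent ALL=(ALL) NOPASSWD:/path/to/your/ceph"
argv = build_argv("/path/to/your/ceph", True, "status")
print(argv, sudoers_allows(rule, "dd-agent", argv))
```

If `ceph_cmd` and the path in the sudoers line differ, the second check returns `False`, which corresponds to sudo refusing to run the command without a password.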

Log collection

Available for Agent versions 6.0 and later

Collecting logs is disabled by default in the Datadog Agent. Enable it in your `datadog.yaml` file:

```yaml
logs_enabled: true
```

Then edit `ceph.d/conf.yaml` by uncommenting the `logs` lines at the bottom. Update the logs `path` with the correct path to your Ceph log files:

```yaml
logs:
  - type: file
    path: /var/log/ceph/*.log
    source: ceph
    service: "<APPLICATION_NAME>"
```
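To see which files a `path` glob like `/var/log/ceph/*.log` would pick up, the Python sketch below uses `pathlib.PurePosixPath.match`. With an absolute pattern the whole path must match, and `*` does not cross directory separators. The Agent uses its own glob implementation, so edge cases may differ; the file names are hypothetical examples.

```python
from pathlib import PurePosixPath

LOG_PATTERN = "/var/log/ceph/*.log"  # path value from the logs configuration above


def is_tailed(path: str, pattern: str = LOG_PATTERN) -> bool:
    """Approximate whether a file matches the configured logs path glob."""
    return PurePosixPath(path).match(pattern)


for candidate in [
    "/var/log/ceph/ceph.log",
    "/var/log/ceph/ceph-osd.0.log",
    "/var/log/ceph/archive/ceph.log",  # subdirectory: not matched by '*'
    "/var/log/ceph/ceph.log.1.gz",     # rotated file: does not end in .log
]:
    print(candidate, is_tailed(candidate))
```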

Validation

Run the Agent's status subcommand and look for `ceph` under the Checks section.
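To script this validation, you could capture the output of `datadog-agent status` (the usual command name on Linux hosts) and search it. The Python sketch below assumes a simplified layout in which check names appear as indented lines after a line containing `Checks`. The real status output has more structure and can change between Agent versions, so treat both the parser and the sample text as hypothetical.

```python
import re
from typing import Optional


def find_check(status_text: str, check: str = "ceph") -> Optional[str]:
    """Return the first line naming `check` after a 'Checks' heading, else None."""
    in_checks = False
    pattern = re.compile(rf"^\s*{re.escape(check)}\b")
    for line in status_text.splitlines():
        if "Checks" in line:
            in_checks = True
            continue
        if in_checks and pattern.match(line):
            return line.strip()
    return None


# Hypothetical, simplified excerpt of status output.
sample = """
  Running Checks
  ==============

    ceph (4.1.0)
    ------------
"""
print(find_check(sample))
```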

Data Collected

Metrics

| Metric | Type | Description | Unit |
| --- | --- | --- | --- |
| ceph.aggregate_pct_used | gauge | Overall capacity usage metric | percent |
| ceph.apply_latency_ms | gauge | Time taken to flush an update to disks | millisecond |
| ceph.class_pct_used | gauge | Per-class percentage of raw storage used | percent |
| ceph.commit_latency_ms | gauge | Time taken to commit an operation to the journal | millisecond |
| ceph.misplaced_objects | gauge | Number of misplaced objects | item |
| ceph.misplaced_total | gauge | Total number of objects if there are misplaced objects | item |
| ceph.num_full_osds | gauge | Number of full OSDs | item |
| ceph.num_in_osds | gauge | Number of participating storage daemons | item |
| ceph.num_mons | gauge | Number of monitor daemons | item |
| ceph.num_near_full_osds | gauge | Number of nearly full OSDs | item |
| ceph.num_objects | gauge | Object count for a given pool | item |
| ceph.num_osds | gauge | Number of known storage daemons | item |
| ceph.num_pgs | gauge | Number of available placement groups | item |
| ceph.num_pools | gauge | Number of pools | item |
| ceph.num_up_osds | gauge | Number of online storage daemons | item |
| ceph.op_per_sec | gauge | I/O operations per second for a given pool | operation |
| ceph.osd.pct_used | gauge | Percentage used of full or nearly full OSDs | percent |
| ceph.pgstate.active_clean | gauge | Number of active+clean placement groups | item |
| ceph.read_bytes | gauge | Per-pool read bytes | byte |
| ceph.read_bytes_sec | gauge | Bytes read per second | byte |
| ceph.read_op_per_sec | gauge | Per-pool read operations per second | operation |
| ceph.recovery_bytes_per_sec | gauge | Rate of recovered bytes | byte |
| ceph.recovery_keys_per_sec | gauge | Rate of recovered keys | item |
| ceph.recovery_objects_per_sec | gauge | Rate of recovered objects | item |
| ceph.total_objects | gauge | Object count from the underlying object store [v<=3 only] | item |
| ceph.write_bytes | gauge | Per-pool write bytes | byte |
| ceph.write_bytes_sec | gauge | Bytes written per second | byte |
| ceph.write_op_per_sec | gauge | Per-pool write operations per second | operation |
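Several metrics above are reported per pool. As a sketch of how such values could become tagged gauges, the Python below reads a dictionary shaped like the JSON from `ceph df detail --format json`. Only the `pools`, `name`, and `stats.objects` fields are used; that shape is an assumption that may vary between Ceph releases, and the `pool:<name>` tag format and the helper `pool_gauges` are illustrative, not the integration's code.

```python
from typing import Any, Dict, List, Tuple

Gauge = Tuple[str, float, List[str]]  # (metric name, value, tags)


def pool_gauges(df_output: Dict[str, Any]) -> List[Gauge]:
    """Hypothetical: derive ceph.num_pools and per-pool ceph.num_objects."""
    pools = df_output.get("pools", [])
    gauges: List[Gauge] = [("ceph.num_pools", float(len(pools)), [])]
    for pool in pools:
        tags = [f"pool:{pool['name']}"]
        objects = pool.get("stats", {}).get("objects")
        if objects is not None:  # skip pools whose stats lack an object count
            gauges.append(("ceph.num_objects", float(objects), tags))
    return gauges


sample = {
    "pools": [
        {"name": "rbd", "stats": {"objects": 120}},
        {"name": "cephfs_data", "stats": {"objects": 0}},
    ]
}
for gauge in pool_gauges(sample):
    print(gauge)
```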

Note: If you are running Ceph Luminous or later, the `ceph.osd.pct_used` metric is not included.
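Ceph Luminous is the 12.x release series. A minimal sketch of the version gate this note implies, assuming the version is available as text containing a dotted number, as printed by `ceph version`. The regular expression and the function name `reports_osd_pct_used` are illustrative.

```python
import re

LUMINOUS_MAJOR = 12  # Ceph Luminous is the 12.x release series


def reports_osd_pct_used(version_text: str) -> bool:
    """Hypothetical gate: True only for Ceph releases older than Luminous."""
    match = re.search(r"(\d+)\.(\d+)\.(\d+)", version_text)
    if match is None:
        raise ValueError(f"no version number in {version_text!r}")
    return int(match.group(1)) < LUMINOUS_MAJOR


print(reports_osd_pct_used("ceph version 10.2.11 jewel (stable)"))
print(reports_osd_pct_used("ceph version 12.2.13 luminous (stable)"))
```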

Events

The Ceph check does not include any events.

Service Checks

ceph.overall_status

Returns OK if your Ceph cluster status is HEALTH_OK, WARNING if it is HEALTH_WARN, and CRITICAL otherwise.

Statuses: ok, warning, critical
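A minimal Python sketch of the mapping described for `ceph.overall_status`, assuming Ceph's standard cluster health strings `HEALTH_OK`, `HEALTH_WARN`, and `HEALTH_ERR`. The numeric values follow the convention used for Datadog service checks (OK 0, WARNING 1, CRITICAL 2); the `Status` enum and `overall_status` function are illustrative, not the integration's code.

```python
from enum import Enum


class Status(Enum):
    OK = 0
    WARNING = 1
    CRITICAL = 2


def overall_status(health: str) -> Status:
    """Map a Ceph cluster health string to a ceph.overall_status result."""
    if health == "HEALTH_OK":
        return Status.OK
    if health == "HEALTH_WARN":
        return Status.WARNING
    # HEALTH_ERR, or any unexpected value, is treated as critical.
    return Status.CRITICAL


for health in ("HEALTH_OK", "HEALTH_WARN", "HEALTH_ERR"):
    print(health, overall_status(health).name)
```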

ceph.osd_down

Returns OK if you have no OSD that is down. Otherwise, returns WARNING if the severity is HEALTH_WARN, else CRITICAL.

Statuses: ok, warning, critical

ceph.osd_orphan

Returns OK if you have no orphan OSD. Otherwise, returns WARNING if the severity is HEALTH_WARN, else CRITICAL.

Statuses: ok, warning, critical

ceph.osd_full

Returns OK if your OSDs are not full. Otherwise, returns WARNING if the severity is HEALTH_WARN, else CRITICAL.

Statuses: ok, warning, critical

ceph.osd_nearfull

Returns OK if your OSDs are not nearly full. Otherwise, returns WARNING if the severity is HEALTH_WARN, else CRITICAL.

Statuses: ok, warning, critical

ceph.pool_full

Returns OK if your pools have not reached their quota. Otherwise, returns WARNING if the severity is HEALTH_WARN, else CRITICAL.

Statuses: ok, warning, critical

ceph.pool_near_full

Returns OK if your pools are not close to reaching their quota. Otherwise, returns WARNING if the severity is HEALTH_WARN, else CRITICAL.

Statuses: ok, warning, critical

ceph.pg_availability

Returns OK if there is full data availability. Otherwise, returns WARNING if the severity is HEALTH_WARN, else CRITICAL.

Statuses: ok, warning, critical

ceph.pg_degraded

Returns OK if there is full data redundancy. Otherwise, returns WARNING if the severity is HEALTH_WARN, else CRITICAL.

Statuses: ok, warning, critical

ceph.pg_degraded_full

Returns OK if there is enough space in the cluster for data redundancy. Otherwise, returns WARNING if the severity is HEALTH_WARN, else CRITICAL.

Statuses: ok, warning, critical

ceph.pg_damaged

Returns OK if there are no inconsistencies after data scrubbing. Otherwise, returns WARNING if the severity is HEALTH_WARN, else CRITICAL.

Statuses: ok, warning, critical

ceph.pg_not_scrubbed

Returns OK if the PGs were scrubbed recently. Otherwise, returns WARNING if the severity is HEALTH_WARN, else CRITICAL.

Statuses: ok, warning, critical

ceph.pg_not_deep_scrubbed

Returns OK if the PGs were deep scrubbed recently. Otherwise, returns WARNING if the severity is HEALTH_WARN, else CRITICAL.

Statuses: ok, warning, critical

ceph.cache_pool_near_full

Returns OK if cache pools are not nearly full. Otherwise, returns WARNING if the severity is HEALTH_WARN, else CRITICAL.

Statuses: ok, warning, critical

ceph.too_few_pgs

Returns OK if the number of PGs is above the minimum threshold. Otherwise, returns WARNING if the severity is HEALTH_WARN, else CRITICAL.

Statuses: ok, warning, critical

ceph.too_many_pgs

Returns OK if the number of PGs is below the maximum threshold. Otherwise, returns WARNING if the severity is HEALTH_WARN, else CRITICAL.

Statuses: ok, warning, critical

ceph.object_unfound

Returns OK if all objects can be found. Otherwise, returns WARNING if the severity is HEALTH_WARN, else CRITICAL.

Statuses: ok, warning, critical

ceph.request_slow

Returns OK if requests are taking a normal amount of time to process. Otherwise, returns WARNING if the severity is HEALTH_WARN, else CRITICAL.

Statuses: ok, warning, critical

ceph.request_stuck

Returns OK if requests are taking a normal amount of time to process. Otherwise, returns WARNING if the severity is HEALTH_WARN, else CRITICAL.

Statuses: ok, warning, critical
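The checks from `ceph.osd_down` onward share one rule: OK when the condition is absent, otherwise WARNING for `HEALTH_WARN` severity and CRITICAL for anything else. Their names appear to mirror Ceph's health check codes in lowercase (for example, `OSD_DOWN` and `ceph.osd_down`). The Python sketch below applies that rule to a dictionary shaped like the `checks` object in `ceph health detail --format json` on Luminous and later. The naming convention, the input shape, and the helper `service_check_statuses` are assumptions, not the integration's confirmed implementation.

```python
from typing import Any, Dict

# Ceph health check codes whose lowercase names match the service checks above.
KNOWN_CODES = [
    "OSD_DOWN", "OSD_ORPHAN", "OSD_FULL", "OSD_NEARFULL",
    "POOL_FULL", "POOL_NEAR_FULL", "PG_AVAILABILITY", "PG_DEGRADED",
    "PG_DEGRADED_FULL", "PG_DAMAGED", "PG_NOT_SCRUBBED", "PG_NOT_DEEP_SCRUBBED",
    "CACHE_POOL_NEAR_FULL", "TOO_FEW_PGS", "TOO_MANY_PGS", "OBJECT_UNFOUND",
    "REQUEST_SLOW", "REQUEST_STUCK",
]


def service_check_statuses(checks: Dict[str, Any]) -> Dict[str, str]:
    """Hypothetical: one status per service check from a health 'checks' dict."""
    statuses = {}
    for code in KNOWN_CODES:
        name = "ceph." + code.lower()
        detail = checks.get(code)
        if detail is None:
            statuses[name] = "ok"  # Ceph did not raise this condition
        elif detail.get("severity") == "HEALTH_WARN":
            statuses[name] = "warning"
        else:
            statuses[name] = "critical"  # e.g. HEALTH_ERR
    return statuses


sample_checks = {
    "OSD_DOWN": {"severity": "HEALTH_WARN"},
    "PG_DAMAGED": {"severity": "HEALTH_ERR"},
}
result = service_check_statuses(sample_checks)
print(result["ceph.osd_down"], result["ceph.pg_damaged"], result["ceph.osd_full"])
```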

Troubleshooting

Need help? Contact Datadog support.