Set Up Data Streams Monitoring for Go

The following instrumentation types are available:

- Automatic instrumentation for Kafka-based workloads

- Manual instrumentation for Kafka-based workloads

- Manual instrumentation for other queuing technologies or protocols

Prerequisites

To start with Data Streams Monitoring, you need recent versions of the Datadog Agent and Data Streams Monitoring libraries.

Note: This documentation uses v2 of the Go tracer, which Datadog recommends for all users. If you are using v1, see the migration guide to upgrade to v2.

Data Streams Monitoring has not been changed between v1 and v2 of the tracer.

Supported libraries

| Technology | Library | Minimum tracer version | Recommended tracer version |
|---|---|---|---|
| Kafka | confluent-kafka-go | 1.56.1 | 1.66.0 or later |
| Kafka | Sarama | 1.56.1 | 1.66.0 or later |
| Kafka | kafka-go | 1.63.0 | 1.63.0 or later |

Installation

Monitoring Kafka Pipelines

Data Streams Monitoring uses message headers to propagate context through Kafka streams. If `log.message.format.version` is set in the Kafka broker configuration, it must be set to `0.11.0.0` or higher. Data Streams Monitoring is not supported for lower versions.
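The version requirement above can be checked with a small helper. This is a minimal sketch, not part of any Datadog or Kafka library: `supportsHeaders` is a hypothetical name, and it assumes the configured value is a dotted numeric string such as `0.10.2` or `0.11.0.0`.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// supportsHeaders is a hypothetical helper. It reports whether a dotted
// message format version is at least 0.11.0.0, the minimum stated above.
// Missing trailing components are treated as zero ("0.11" equals "0.11.0.0").
func supportsHeaders(version string) (bool, error) {
	minimum := []int{0, 11, 0, 0}
	parts := strings.Split(version, ".")
	for i, want := range minimum {
		got := 0
		if i < len(parts) {
			n, err := strconv.Atoi(parts[i])
			if err != nil {
				return false, fmt.Errorf("invalid version component %q: %w", parts[i], err)
			}
			got = n
		}
		// The first component that differs decides the comparison.
		if got != want {
			return got > want, nil
		}
	}
	return true, nil
}

func main() {
	for _, v := range []string{"0.10.2", "0.11.0.0", "2.8"} {
		ok, err := supportsHeaders(v)
		fmt.Println(v, ok, err)
	}
}
```

A check like this could run in a deployment script before enabling Data Streams Monitoring; it does not read the broker configuration itself.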

Monitoring RabbitMQ pipelines

The RabbitMQ integration can provide detailed monitoring and metrics of your RabbitMQ deployments. For full compatibility with Data Streams Monitoring, Datadog recommends configuring the integration as follows:

    instances:
      - prometheus_plugin:
          url: http://<HOST>:15692
          unaggregated_endpoint: detailed?family=queue_coarse_metrics&family=queue_consumer_count&family=channel_exchange_metrics&family=channel_queue_exchange_metrics&family=node_coarse_metrics

This ensures that all RabbitMQ graphs populate, and that you see detailed metrics for individual exchanges as well as queues.
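If you generate this configuration from code, the `unaggregated_endpoint` value can be assembled from the list of metric families rather than typed by hand. The following is a sketch using only the Go standard library; `unaggregatedEndpoint` is a hypothetical helper name, and the family names are the ones from the configuration above.

```go
package main

import (
	"fmt"
	"net/url"
)

// unaggregatedEndpoint is a hypothetical helper that builds the
// "detailed?family=..." value from a list of metric families,
// keeping the families in the order given.
func unaggregatedEndpoint(families []string) string {
	q := url.Values{}
	for _, f := range families {
		q.Add("family", f)
	}
	return "detailed?" + q.Encode()
}

func main() {
	families := []string{
		"queue_coarse_metrics",
		"queue_consumer_count",
		"channel_exchange_metrics",
		"channel_queue_exchange_metrics",
		"node_coarse_metrics",
	}
	fmt.Println(unaggregatedEndpoint(families))
}
```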

Automatic Instrumentation

Automatic instrumentation uses Orchestrion to install dd-trace-go and supports both the Sarama and Confluent Kafka libraries.

To automatically instrument your service:

- Follow the Orchestrion Getting Started guide to compile or run your service using Orchestrion.

- Set the `DD_DATA_STREAMS_ENABLED=true` environment variable.
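A service can log a warning at startup when the variable is missing, which makes a misconfigured deployment easier to spot. This is a minimal sketch using only the standard library; `dataStreamsEnabled` is a hypothetical helper, and its boolean parsing (`strconv.ParseBool`) is an assumption that may not match how the tracer itself reads the variable.

```go
package main

import (
	"fmt"
	"os"
	"strconv"
)

// dataStreamsEnabled is a hypothetical startup check. It takes the lookup
// function as a parameter so it can be exercised without touching the
// real process environment.
func dataStreamsEnabled(getenv func(string) string) bool {
	enabled, err := strconv.ParseBool(getenv("DD_DATA_STREAMS_ENABLED"))
	return err == nil && enabled
}

func main() {
	if !dataStreamsEnabled(os.Getenv) {
		fmt.Fprintln(os.Stderr, "warning: DD_DATA_STREAMS_ENABLED is not set to true")
	}
}
```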

Manual instrumentation

Sarama Kafka client

To manually instrument the Sarama Kafka client with Data Streams Monitoring:

1. Import the `ddsarama` Go library:

       import (
           ddsarama "github.com/DataDog/dd-trace-go/contrib/IBM/sarama/v2"
       )

2. Wrap the producer with `ddsarama.WrapAsyncProducer`:

       ...
       config := sarama.NewConfig()
       producer, err := sarama.NewAsyncProducer([]string{bootStrapServers}, config)

       // ADD THIS LINE
       producer = ddsarama.WrapAsyncProducer(config, producer, ddsarama.WithDataStreams())

Confluent Kafka client

To manually instrument Confluent Kafka with Data Streams Monitoring:

1. Import the `ddkafka` Go library:

       import (
           ddkafka "github.com/DataDog/dd-trace-go/contrib/confluentinc/confluent-kafka-go/kafka.v2/v2"
       )

2. Wrap the producer creation with `ddkafka.NewProducer` and use the `ddkafka.WithDataStreams()` configuration:

       // CREATE PRODUCER WITH THIS WRAPPER
       producer, err := ddkafka.NewProducer(&kafka.ConfigMap{
           "bootstrap.servers": bootStrapServers,
       }, ddkafka.WithDataStreams())

If a service consumes data from one point and produces to another point, propagate context between the two places using the Go context structure:

1. Extract the context from headers:

       ctx = datastreams.ExtractFromBase64Carrier(ctx, ddsarama.NewConsumerMessageCarrier(message))

2. Inject it into the header before producing downstream:

       datastreams.InjectToBase64Carrier(ctx, ddsarama.NewProducerMessageCarrier(message))

Other queuing technologies or protocols

You can also use manual instrumentation for queuing technologies or protocols other than Kafka. For example, you can propagate context through Kinesis.

Instrumenting the produce call

- Ensure your message supports the TextMapWriter interface.

- Inject the context into your message and instrument the produce call by calling:

      ctx, ok := tracer.SetDataStreamsCheckpointWithParams(
          ctx,
          options.CheckpointParams{PayloadSize: getProducerMsgSize(message)},
          "direction:out", "type:kinesis", "topic:kinesis_arn",
      )
      if ok {
          datastreams.InjectToBase64Carrier(ctx, message)
      }
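The produce-side requirements can be sketched with a plain Go type. In this example, `kinesisRecord` is a hypothetical message type, its `Set(key, val string)` method is what lets it act as a text map writer (this mirrors the `TextMapWriter` method set as I understand it, so verify it against the tracer version you use), and `getProducerMsgSize` is one possible way to compute a payload size. Counting attribute bytes in the size is an assumption, not a documented rule.

```go
package main

import "fmt"

// kinesisRecord is a hypothetical message type: a payload plus string
// attributes that travel with it and can carry propagation context.
type kinesisRecord struct {
	Data       []byte
	Attributes map[string]string
}

// Set stores a propagation key on the record. Providing this method is
// what allows the record to be passed where a text map writer is expected.
func (r *kinesisRecord) Set(key, val string) {
	if r.Attributes == nil {
		r.Attributes = make(map[string]string)
	}
	r.Attributes[key] = val
}

// getProducerMsgSize returns the payload size in bytes plus the size of
// all attribute keys and values (an assumed definition of "payload size").
func getProducerMsgSize(r *kinesisRecord) int64 {
	size := int64(len(r.Data))
	for k, v := range r.Attributes {
		size += int64(len(k) + len(v))
	}
	return size
}

func main() {
	rec := &kinesisRecord{Data: []byte("hello")}
	rec.Set("example-key", "example-value")
	fmt.Println(getProducerMsgSize(rec))
}
```

If the size is computed before the context is injected, the propagation header is not counted; whether to include it is a choice to make consistently on both the produce and consume sides.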

Instrumenting the consume call

- Ensure your message supports the TextMapReader interface.

- Extract the context from your message and instrument the consume call by calling:

      ctx, ok := tracer.SetDataStreamsCheckpointWithParams(
          datastreams.ExtractFromBase64Carrier(context.Background(), message),
          options.CheckpointParams{PayloadSize: payloadSize},
          "direction:in", "type:kinesis", "topic:kinesis_arn",
      )

Monitoring connectors

Confluent Cloud connectors

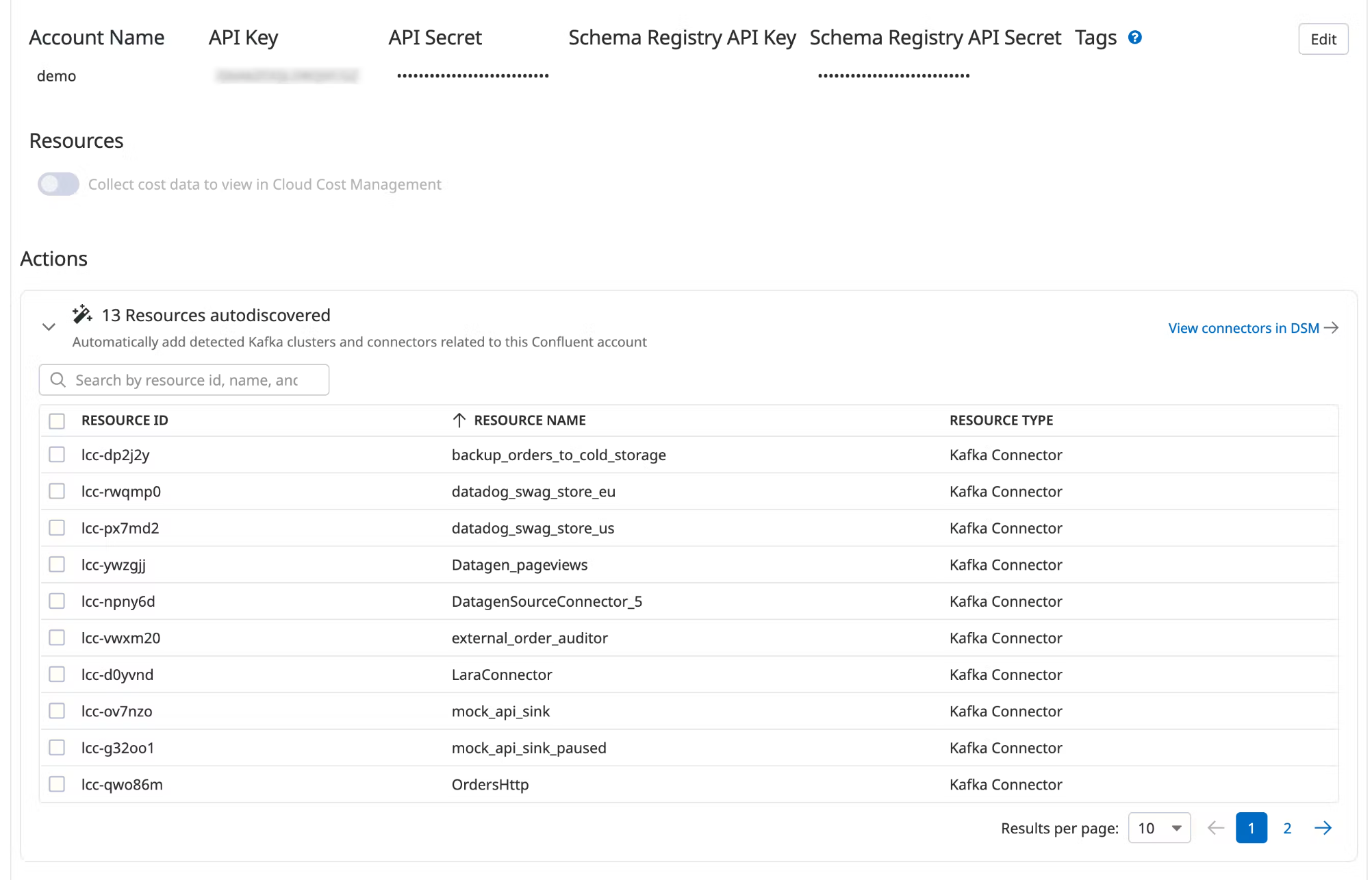

Data Streams Monitoring can automatically discover your Confluent Cloud connectors and visualize them within the context of your end-to-end streaming data pipeline.

Setup

1. Install and configure the Datadog-Confluent Cloud integration.

2. In Datadog, open the Confluent Cloud integration tile.

3. Under Actions, a list of resources populates with detected clusters and connectors. Datadog attempts to discover new connectors every time you view this integration tile.

4. Select the resources you want to add.

5. Click Add Resources.

6. Navigate to Data Streams Monitoring to visualize the connectors and track connector status and throughput.
