Set Up the OpenTelemetry Collector

Overview

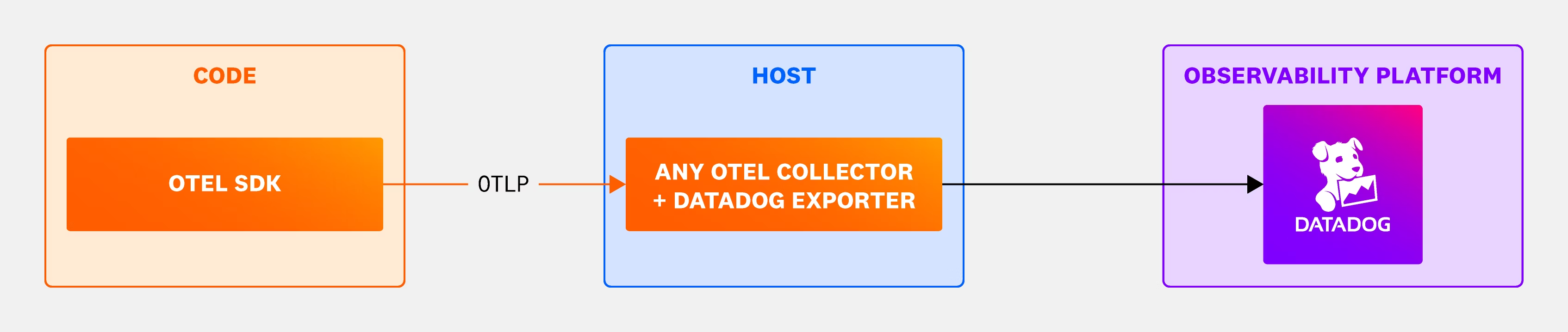

The OpenTelemetry Collector enables you to collect, process, and export telemetry data from your applications in a vendor-neutral way. When configured with the Datadog Exporter and Datadog Connector, you can send your traces, logs, and metrics to Datadog without the Datadog Agent.

- Datadog Exporter: Forwards trace, metric, and logs data from OpenTelemetry SDKs to Datadog (without the Datadog Agent)

- Datadog Connector: Calculates Trace Metrics from collected span data

To see which Datadog features are supported with this setup, see the feature compatibility table under Full OTel.

Install and configure

1 - Download the OpenTelemetry Collector

Download the latest release of the OpenTelemetry Collector Contrib distribution from the project’s repository.

2 - Configure the Datadog Exporter and Connector

To use the Datadog Exporter and Datadog Connector, configure them in your OpenTelemetry Collector configuration:

- Create a configuration file named collector.yaml.

- Use the following example file to get started.

- Set your Datadog API key as the DD_API_KEY environment variable.

The following examples use 0.0.0.0 as the endpoint address for convenience. This allows connections from any network interface. For enhanced security, especially in local deployments, consider using localhost instead.

For more information on secure endpoint configuration, see the OpenTelemetry security documentation.

    receivers:
      otlp:
        protocols:
          http:
            endpoint: 0.0.0.0:4318
          grpc:
            endpoint: 0.0.0.0:4317
      # The hostmetrics receiver is required to get correct infrastructure metrics in Datadog.
      hostmetrics:
        collection_interval: 10s
        scrapers:
          paging:
            metrics:
              system.paging.utilization:
                enabled: true
          cpu:
            metrics:
              system.cpu.utilization:
                enabled: true
          disk:
          filesystem:
            metrics:
              system.filesystem.utilization:
                enabled: true
          load:
          memory:
          network:
          processes:
      # The prometheus receiver scrapes metrics needed for the OpenTelemetry Collector Dashboard.
      prometheus:
        config:
          scrape_configs:
            - job_name: 'otelcol'
              scrape_interval: 10s
              static_configs:
                - targets: ['0.0.0.0:8888']
      filelog:
        include_file_path: true
        poll_interval: 500ms
        include:
          - /var/log/**/*example*/*.log

    processors:
      batch:
        send_batch_max_size: 100
        send_batch_size: 10
        timeout: 10s

    connectors:
      datadog/connector:

    exporters:
      datadog/exporter:
        api:
          site:
          key: ${env:DD_API_KEY}

    service:
      pipelines:
        metrics:
          receivers: [hostmetrics, prometheus, otlp, datadog/connector]
          processors: [batch]
          exporters: [datadog/exporter]
        traces:
          receivers: [otlp]
          processors: [batch]
          exporters: [datadog/connector, datadog/exporter]
        logs:
          receivers: [otlp, filelog]
          processors: [batch]
          exporters: [datadog/exporter]

This basic configuration enables the receiving of OTLP data over HTTP and gRPC, and sets up a batch processor.

For a complete list of configuration options for the Datadog Exporter, see the fully documented example configuration file. Additional options like api::site and host_metadata settings may be relevant depending on your deployment.
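As noted earlier, binding to 0.0.0.0 accepts connections on every network interface. For a Collector that only receives telemetry from applications on the same machine, a minimal sketch of the more restrictive variant might look like the following. Only the otlp receiver is shown, and the rest of the configuration is assumed to stay unchanged.

```yaml
receivers:
  otlp:
    protocols:
      http:
        # Accept OTLP/HTTP only from processes on this host.
        endpoint: localhost:4318
      grpc:
        # Accept OTLP/gRPC only from processes on this host.
        endpoint: localhost:4317
```

This is a reasonable choice for a local deployment. When the Collector runs in its own container or pod and must receive data from other containers, a localhost binding would likely reject that traffic, so the right address depends on where your applications run.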

Batch processor configuration

The batch processor is required for non-development environments. The exact configuration depends on your specific workload and signal types.

Configure the batch processor based on Datadog’s intake limits:

- Trace intake: 3.2MB

- Log intake: 5MB uncompressed

- Metrics V2 intake: 500KB or 5MB after decompression

You may get 413 - Request Entity Too Large errors if you batch too much telemetry data in the batch processor.
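Because the intake limits differ per signal, one option is to define a separate named batch processor for each pipeline instead of sharing one. The following is a sketch under stated assumptions: the instance names (batch/traces, batch/logs, batch/metrics) are illustrative, and the numbers are placeholders to tune against your own payload sizes, not Datadog recommendations. Note that send_batch_size and send_batch_max_size count items (spans, log records, or metric data points), not bytes, so they bound the payload size only indirectly.

```yaml
processors:
  # Placeholder values: lower send_batch_max_size if you see 413 errors.
  batch/traces:
    send_batch_size: 100
    send_batch_max_size: 500
    timeout: 10s
  batch/logs:
    send_batch_size: 100
    send_batch_max_size: 1000
    timeout: 10s
  batch/metrics:
    send_batch_size: 100
    send_batch_max_size: 200
    timeout: 10s

service:
  pipelines:
    metrics:
      receivers: [hostmetrics, prometheus, otlp, datadog/connector]
      processors: [batch/metrics]
      exporters: [datadog/exporter]
    traces:
      receivers: [otlp]
      processors: [batch/traces]
      exporters: [datadog/connector, datadog/exporter]
    logs:
      receivers: [otlp, filelog]
      processors: [batch/logs]
      exporters: [datadog/exporter]
```

Setting send_batch_max_size caps how many items a single export request can carry, which is the setting to reduce first if a particular signal triggers 413 responses.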

3 - Configure your application

To get better metadata for traces and for smooth integration with Datadog:

- Use resource detectors: If they are provided by the language SDK, attach container information as resource attributes. For example, in Go, use the WithContainer() resource option.

- Apply Unified Service Tagging: Make sure you’ve configured your application with the appropriate resource attributes for unified service tagging. This ties Datadog telemetry together with tags for service name, deployment environment, and service version. The application should set these tags using the OpenTelemetry semantic conventions: service.name, deployment.environment, and service.version.
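One SDK-independent way to set these resource attributes is the standard OTEL_RESOURCE_ATTRIBUTES environment variable, which OpenTelemetry SDKs read at startup. The sketch below assumes the application runs as a Kubernetes container. The container name, image, and attribute values are hypothetical.

```yaml
# Excerpt from the application's pod spec (not the Collector's).
containers:
  - name: checkoutservice            # hypothetical application container
    image: example/checkoutservice   # hypothetical image
    env:
      # Comma-separated key=value pairs that become resource attributes.
      - name: OTEL_RESOURCE_ATTRIBUTES
        value: "service.name=checkoutservice,deployment.environment=staging,service.version=1.2.3"
```

Outside Kubernetes, the same variable can be exported in the shell or process manager that launches the application.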

4 - Configure the logger for your application

Since the OpenTelemetry SDKs’ logging functionality is not fully supported (see your specific language in the OpenTelemetry documentation for more information), Datadog recommends using a standard logging library for your application. Follow the language-specific Log Collection documentation to set up the appropriate logger in your application. Datadog strongly encourages setting up your logging library to output your logs in JSON to avoid the need for custom parsing rules.

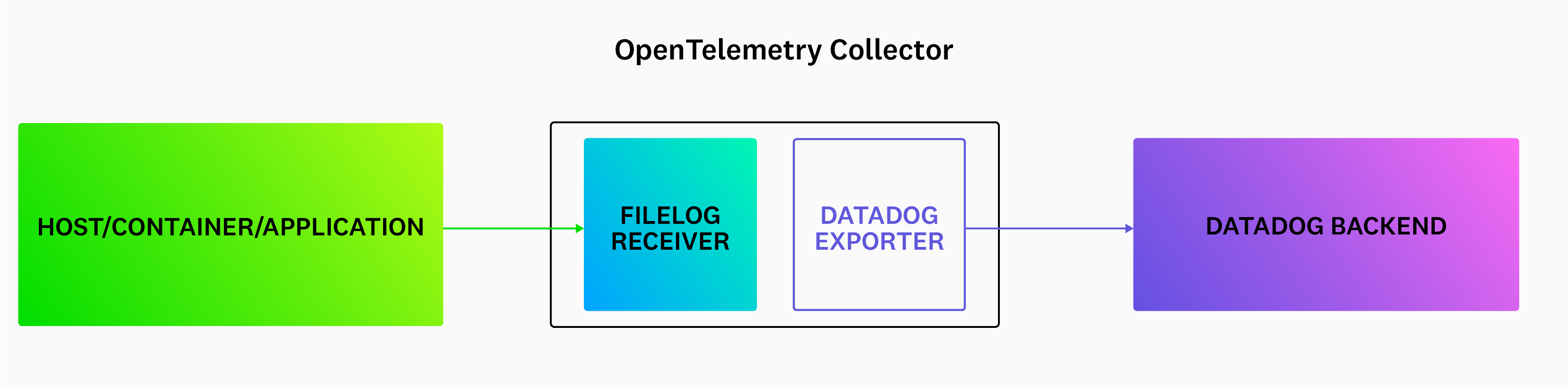

Configure the filelog receiver

Configure the filelog receiver using operators. For example, if there is a service checkoutservice that is writing logs to /var/log/pods/services/checkout/0.log, a sample log might look like this:

{"level":"info","message":"order confirmation email sent to \"jack@example.com\"","service":"checkoutservice","span_id":"197492ff2b4e1c65","timestamp":"2022-10-10T22:17:14.841359661Z","trace_id":"e12c408e028299900d48a9dd29b0dc4c"}

Example filelog configuration:

    filelog:
      include:
        - /var/log/pods/**/*checkout*/*.log
      start_at: end
      poll_interval: 500ms
      operators:
        - id: parse_log
          type: json_parser
          parse_from: body
        - id: trace
          type: trace_parser
          trace_id:
            parse_from: attributes.trace_id
          span_id:
            parse_from: attributes.span_id
      attributes:
        ddtags: env:staging

- include: The list of files the receiver tails

- start_at: end: Indicates to read new content that is being written

- poll_interval: Sets the poll frequency

- Operators:

  - json_parser: Parses JSON logs. By default, the filelog receiver converts each log line into a log record, which is the body of the logs’ data model. Then, the json_parser converts the JSON body into attributes in the data model.

  - trace_parser: Extracts the trace_id and span_id from the log to correlate logs and traces in Datadog.
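To make the effect of the two operators concrete, the following is an illustrative rendering of the sample checkoutservice log line after it passes through the example filelog configuration. The YAML layout is an assumption chosen for readability and is not the Collector's literal output format. Attributes that the receiver may add by default, such as the file name, are omitted.

```yaml
# Illustrative view of one parsed log record (not literal Collector output).
body: "<original JSON log line, unchanged>"
trace_id: "e12c408e028299900d48a9dd29b0dc4c"   # set by trace_parser
span_id: "197492ff2b4e1c65"                    # set by trace_parser
attributes:
  # Keys extracted from the JSON body by json_parser.
  level: info
  message: 'order confirmation email sent to "jack@example.com"'
  service: checkoutservice
  timestamp: "2022-10-10T22:17:14.841359661Z"
  trace_id: "e12c408e028299900d48a9dd29b0dc4c"
  span_id: "197492ff2b4e1c65"
  # Static attribute added by the receiver-level attributes setting.
  ddtags: env:staging
```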

Remap OTel’s service.name attribute to service for logs

For Datadog Exporter versions 0.83.0 and later, the service field of OTel logs is populated as OTel semantic convention service.name. However, service.name is not one of the default service attributes in Datadog’s log preprocessing.

To get the service field correctly populated in your logs, you can specify service.name to be the source of a log’s service by setting a log service remapper processor.

Optional: Using Kubernetes


There are multiple ways to deploy the OpenTelemetry Collector and Datadog Exporter in a Kubernetes infrastructure. For the filelog receiver to work, the Agent/DaemonSet deployment is the recommended deployment method.

In containerized environments, applications write logs to stdout or stderr. Kubernetes collects the logs and writes them to a standard location. You need to mount the location on the host node into the Collector for the filelog receiver. Below is an extension example with the mounts required for sending logs.

    apiVersion: apps/v1
    metadata:
      name: otel-agent
      labels:
        app: opentelemetry
        component: otel-collector
    spec:
      template:
        metadata:
          labels:
            app: opentelemetry
            component: otel-collector
        spec:
          containers:
            - name: collector
              command:
                - "/otelcol-contrib"
                - "--config=/conf/otel-agent-config.yaml"
              image: otel/opentelemetry-collector-contrib:0.71.0
              env:
                - name: POD_IP
                  valueFrom:
                    fieldRef:
                      fieldPath: status.podIP
                # The k8s.pod.ip is used to associate pods for k8sattributes
                - name: OTEL_RESOURCE_ATTRIBUTES
                  value: "k8s.pod.ip=$(POD_IP)"
              ports:
                - containerPort: 4318 # default port for OpenTelemetry HTTP receiver.
                  hostPort: 4318
                - containerPort: 4317 # default port for OpenTelemetry gRPC receiver.
                  hostPort: 4317
                - containerPort: 8888 # Default endpoint for querying metrics.
              volumeMounts:
                - name: otel-agent-config-vol
                  mountPath: /conf
                - name: varlogpods
                  mountPath: /var/log/pods
                  readOnly: true
                - name: varlibdockercontainers
                  mountPath: /var/lib/docker/containers
                  readOnly: true
          volumes:
            - name: otel-agent-config-vol
              configMap:
                name: otel-agent-conf
                items:
                  - key: otel-agent-config
                    path: otel-agent-config.yaml
            # Mount nodes log file location.
            - name: varlogpods
              hostPath:
                path: /var/log/pods
            - name: varlibdockercontainers
              hostPath:
                path: /var/lib/docker/containers
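The manifest above mounts a ConfigMap named otel-agent-conf and expects a key named otel-agent-config. A minimal sketch of such a ConfigMap, limited to the logs pipeline, might look like the following. The Collector configuration inside it is an assumption for illustration. It also assumes that DD_API_KEY is made available to the collector container (for example, from a Secret), which the manifest above does not show.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: otel-agent-conf
data:
  # This key is projected to /conf/otel-agent-config.yaml by the volume above.
  otel-agent-config: |
    receivers:
      filelog:
        # Pod logs mounted from the host node.
        include:
          - /var/log/pods/*/*/*.log
        start_at: end
    processors:
      batch:
    exporters:
      datadog/exporter:
        api:
          key: ${env:DD_API_KEY}
    service:
      pipelines:
        logs:
          receivers: [filelog]
          processors: [batch]
          exporters: [datadog/exporter]
```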

Out-of-the-box Datadog Exporter configuration

You can find working examples of out-of-the-box configuration for Datadog Exporter in the exporter/datadogexporter/examples folder in the OpenTelemetry Collector Contrib project. See the full configuration example file, ootb-ec2.yaml. Configure each component in that file to suit your needs.
