
Trace Sampling and Storage

This page describes deprecated features, with configuration information relevant to legacy App Analytics. It is useful for troubleshooting or modifying older setups. For full control over your traces, use ingestion controls and retention filters instead.

Trace sampling

Trace sampling applies to high-volume, web-scale applications, where only a sampled proportion of traces is kept in Datadog based on the rules below.

Statistics (requests, errors, latency, etc.) are calculated from the full volume of traces at the Agent level, and are therefore always accurate.

Statistics

Datadog APM computes the following aggregate statistics over all instrumented traces, regardless of sampling:

- Total requests and requests per second

- Total errors and errors per second

- Latency

- Breakdown of time spent by service/type

- Apdex score (web services only)
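The following minimal sketch shows why these statistics are unaffected by sampling: they are aggregated over every span before any keep or drop decision is made. It assumes a hypothetical `Span` record carrying only a duration and an error flag, and a hypothetical Apdex threshold `apdex_t_s`. The Apdex formula is the standard one (satisfied requests plus half of tolerating requests, divided by total requests); how Datadog treats errored requests in Apdex is not modeled, and this is not the Agent's actual implementation.

```python
from dataclasses import dataclass


@dataclass
class Span:
    """Hypothetical, simplified span record with only the fields the stats need."""
    duration_s: float
    is_error: bool


def compute_stats(spans, window_s, apdex_t_s):
    """Aggregate statistics over ALL spans, before any sampling decision."""
    total = len(spans)
    errors = sum(1 for s in spans if s.is_error)
    # Standard Apdex: satisfied (<= T) counts fully, tolerating (<= 4T) counts half,
    # anything slower is frustrated and counts zero.
    satisfied = sum(1 for s in spans if s.duration_s <= apdex_t_s)
    tolerating = sum(1 for s in spans if apdex_t_s < s.duration_s <= 4 * apdex_t_s)
    return {
        "requests": total,
        "requests_per_s": total / window_s,
        "errors": errors,
        "errors_per_s": errors / window_s,
        "max_latency_s": max((s.duration_s for s in spans), default=0.0),
        "apdex": (satisfied + tolerating / 2) / total if total else None,
    }


spans = [Span(0.1, False), Span(0.2, False), Span(0.6, False), Span(3.0, True)]
stats = compute_stats(spans, window_s=2.0, apdex_t_s=0.5)
print(stats["apdex"])  # 0.625
```

Because a sampler in this model only runs after the aggregation, dropping any of these spans afterward would not change `stats`.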

Goal of sampling

The goal of sampling is to keep the traces that matter most:

- Distributed traces

- Low-QPS services

- A representative variety of traces

Sampling rules

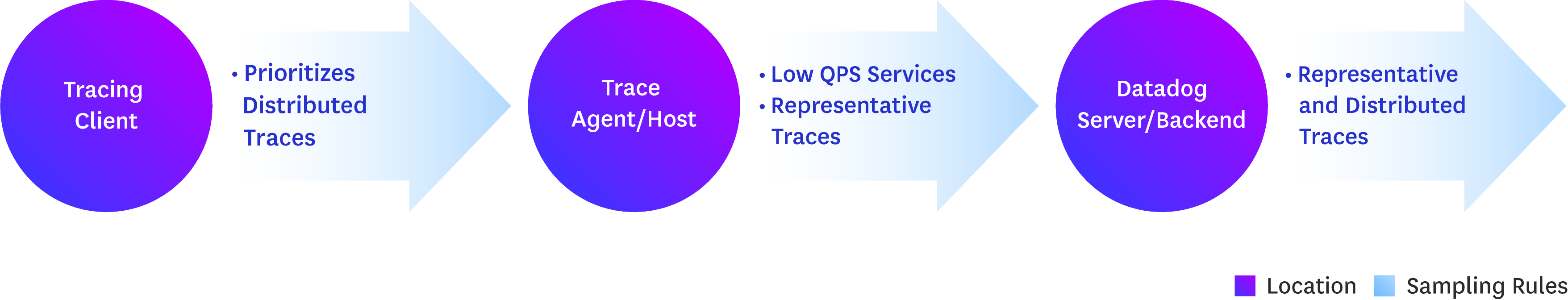

Over the lifecycle of a trace, sampling decisions are made at the tracing client, Agent, and backend levels, in the following order.

Tracing Client: The tracing client adds a context attribute, `sampling.priority`, to traces, allowing a single trace to be propagated in a distributed architecture through language-agnostic request headers. The `sampling.priority` attribute is a hint to the Datadog Agent to do its best to prioritize the trace or drop unimportant ones.

| Value | Type | Action |
|---|---|---|
| MANUAL_DROP | User input | The Agent drops the trace. |
| AUTO_DROP | Automatic sampling decision | The Agent drops the trace. |
| AUTO_KEEP | Automatic sampling decision | The Agent keeps the trace. |
| MANUAL_KEEP | User input | The Agent keeps the trace, and the backend only applies sampling if the volume is above the maximum allowed. When used with App Analytics filtering, all spans marked for MANUAL_KEEP are counted as billable spans. |

Traces are automatically assigned a priority of AUTO_DROP or AUTO_KEEP, in proportions that ensure the Agent won't have to sample more than it is allowed. Users can manually adjust this attribute to prioritize specific types of traces, or drop uninteresting ones entirely.
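The priority values described above can be summarized as a small model. The numeric values (-1, 0, 1, 2) are the ones Datadog tracing libraries use for sampling priority; the `agent_keeps` and `assign_auto_priority` helpers are hypothetical illustrations, and the `draw` argument stands in for whatever per-trace randomness a real tracer uses.

```python
from enum import IntEnum


class SamplingPriority(IntEnum):
    """Sampling priority values as propagated by Datadog tracers."""
    MANUAL_DROP = -1  # user input: drop
    AUTO_DROP = 0     # automatic decision: drop
    AUTO_KEEP = 1     # automatic decision: keep
    MANUAL_KEEP = 2   # user input: keep


def agent_keeps(priority: SamplingPriority) -> bool:
    """Positive priorities are kept; zero or negative priorities are dropped."""
    return priority > 0


def assign_auto_priority(rate: float, draw: float) -> SamplingPriority:
    """Hypothetical automatic assignment that keeps a `rate` proportion of traces.

    `draw` is a number in [0, 1) standing in for the tracer's per-trace randomness.
    """
    return SamplingPriority.AUTO_KEEP if draw < rate else SamplingPriority.AUTO_DROP


print(assign_auto_priority(0.25, 0.1).name)  # AUTO_KEEP
print(agent_keeps(SamplingPriority.MANUAL_DROP))  # False
```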

Trace Agent (host or container level): The Agent receives traces from various tracing clients and filters them based on two rules:

- Keep a variety of traces (across services, resources, HTTP status codes, and errors).

- Keep traces for low-volume resources (web endpoints, DB queries).

The Agent computes a signature for every reported trace, based on its services, resources, errors, and so on. Traces with the same signature are considered similar. For example, signatures could be:

- `env=prod,my_web_service,is_error=true,resource=/login`

- `env=staging,my_database_service,is_error=false,query=SELECT...`

A proportion of traces with each signature is then kept, so you get full visibility into all the different kinds of traces happening in your system. This method ensures traces for resources with low volumes are still kept.
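A minimal sketch of signature-based sampling, assuming a hypothetical signature built from the environment, service, error flag, and resource, and a hypothetical deterministic policy: always keep the first `min_keep` traces of each signature, then one in every `stride`. The Agent's real signature and rate computation differ; the point is only that each signature gets its own budget, so rare kinds of traces survive alongside common ones.

```python
from collections import Counter


def signature(trace: dict) -> tuple:
    """Hypothetical signature: traces with equal tuples are considered similar."""
    return (trace["env"], trace["service"], trace["is_error"], trace["resource"])


class SignatureSampler:
    """Illustrative per-signature sampler; not the Agent's actual algorithm."""

    def __init__(self, min_keep: int = 2, stride: int = 10):
        self.min_keep = min_keep
        self.stride = stride
        self.seen = Counter()

    def keep(self, trace: dict) -> bool:
        sig = signature(trace)
        self.seen[sig] += 1
        n = self.seen[sig]
        if n <= self.min_keep:
            return True  # low-volume signatures are always kept
        return (n - self.min_keep) % self.stride == 0  # then 1 in `stride`


home = {"env": "prod", "service": "my_web_service", "is_error": False, "resource": "/home"}
login_err = {"env": "prod", "service": "my_web_service", "is_error": True, "resource": "/login"}

sampler = SignatureSampler()
kept = Counter()
for trace in [home] * 100 + [login_err]:
    if sampler.keep(trace):
        kept[trace["resource"]] += 1
print(dict(kept))  # {'/home': 11, '/login': 1}
```

The single erroring `/login` trace is kept even though it is outnumbered 100 to 1, because it has its own signature.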

In addition, the Agent provides service-based rates to the tracing client for prioritized traces, ensuring that traces from low-QPS services are prioritized to be kept.
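One way to picture a service-based rate is a per-(service, env) ratio between a target throughput and the observed throughput, capped at 1. This is a hypothetical sketch: the `target_tps` value and the formula are assumptions for illustration, not the Agent's implementation. In this model a low-QPS service ends up with a rate of 1.0, so all of its traces are kept.

```python
def rates_by_service(observed_tps: dict, target_tps: float) -> dict:
    """Hypothetical per-(service, env) sampling rates, capped at 1.0.

    observed_tps maps (service, env) to the traces per second the Agent sees.
    """
    rates = {}
    for key, tps in observed_tps.items():
        # Services at or below the target keep everything; busier ones are scaled down.
        rates[key] = 1.0 if tps <= target_tps else target_tps / tps
    return rates


rates = rates_by_service(
    {("checkout", "prod"): 2.0, ("web", "prod"): 200.0},
    target_tps=10.0,
)
print(rates)  # {('checkout', 'prod'): 1.0, ('web', 'prod'): 0.05}
```

In this model, the tracing client would then use the rate for its own service when choosing between AUTO_KEEP and AUTO_DROP.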

Users can manually drop entire uninteresting resource endpoints at the Agent level by using resource filtering.
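Resource filtering in the Agent is configured with regular expressions matched against resource names (the `ignore_resources` option under `apm_config` in `datadog.yaml`). The sketch below only models the idea with Python's `re` module; the pattern list is hypothetical, and the Agent's own regex engine and matching semantics may differ.

```python
import re

# Hypothetical patterns, in the spirit of apm_config.ignore_resources.
IGNORE_RESOURCES = [r"^GET /health$", r"^GET /static/"]
_compiled = [re.compile(p) for p in IGNORE_RESOURCES]


def is_filtered(resource: str) -> bool:
    """Return True if traces for this resource would be dropped entirely."""
    return any(p.search(resource) for p in _compiled)


for resource in ["GET /health", "GET /static/app.js", "GET /posts"]:
    print(resource, "->", "dropped" if is_filtered(resource) else "kept")
```

Anchoring the patterns with `^` and `$` keeps a filter such as `GET /health` from accidentally matching a different endpoint like `GET /healthz`.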

Datadog backend/server: The server receives traces from the Agents running on various hosts and applies sampling to ensure representation from every reporting Agent. It does so by keeping traces based on the signatures computed by the Agent.

Manually control trace priority

APM enables distributed tracing by default, so traces propagate through tracing headers across multiple services and hosts. Tracing headers include a priority tag to ensure complete traces between upstream and downstream services during trace propagation. You can override this tag to manually keep a trace (critical transactions, debug mode, etc.) or drop a trace (health checks, static assets, etc.).

Manually keep a trace (Java):

```java
import datadog.trace.api.DDTags;
import datadog.trace.api.interceptor.MutableSpan;
import datadog.trace.api.Trace;
import io.opentracing.util.GlobalTracer;

public class MyClass {
    @Trace
    public static void myMethod() {
        // Grab the active span out of the traced method
        MutableSpan ddspan = (MutableSpan) GlobalTracer.get().activeSpan();
        // Always keep the trace
        ddspan.setTag(DDTags.MANUAL_KEEP, true);
        // Method implementation follows
    }
}
```

Manually drop a trace (Java):

```java
import datadog.trace.api.DDTags;
import datadog.trace.api.interceptor.MutableSpan;
import datadog.trace.api.Trace;
import io.opentracing.util.GlobalTracer;

public class MyClass {
    @Trace
    public static void myMethod() {
        // Grab the active span out of the traced method
        MutableSpan ddspan = (MutableSpan) GlobalTracer.get().activeSpan();
        // Always drop the trace
        ddspan.setTag(DDTags.MANUAL_DROP, true);
        // Method implementation follows
    }
}
```

Manually keep a trace (Python):

```python
from ddtrace import tracer
from ddtrace.constants import MANUAL_KEEP_KEY

@tracer.wrap()
def handler():
    span = tracer.current_span()
    # Always keep the trace
    span.set_tag(MANUAL_KEEP_KEY)
    # Method implementation follows
```

Manually drop a trace (Python):

```python
from ddtrace import tracer
from ddtrace.constants import MANUAL_DROP_KEY

@tracer.wrap()
def handler():
    span = tracer.current_span()
    # Always drop the trace
    span.set_tag(MANUAL_DROP_KEY)
    # Method implementation follows
```

Manually keep a trace (Ruby):

```ruby
Datadog::Tracing.trace(name, options) do |span|
  Datadog::Tracing.keep! # Affects the active span
  # Method implementation follows
end
```

Manually drop a trace (Ruby):

```ruby
Datadog::Tracing.trace(name, options) do |span|
  Datadog::Tracing.reject! # Affects the active span
  # Method implementation follows
end
```

Note: This documentation uses v2 of the Go tracer, which Datadog recommends for all users. If you are using v1, see the migration guide to upgrade to v2.

Manually keep a trace (Go):

```go
package main

import (
	"net/http"

	"github.com/DataDog/dd-trace-go/v2/ddtrace/ext"
	"github.com/DataDog/dd-trace-go/v2/ddtrace/tracer"
)

func handler(w http.ResponseWriter, r *http.Request) {
	// Create a span for a web request at the /posts URL.
	span := tracer.StartSpan("web.request", tracer.ResourceName("/posts"))
	defer span.Finish()

	// Always keep this trace:
	span.SetTag(ext.ManualKeep, true)
	// Method implementation follows
}
```

Manually drop a trace (Go):

```go
package main

import (
	"net/http"

	"github.com/DataDog/dd-trace-go/v2/ddtrace/ext"
	"github.com/DataDog/dd-trace-go/v2/ddtrace/tracer"
)

func handler(w http.ResponseWriter, r *http.Request) {
	// Create a span for a web request at the /posts URL.
	span := tracer.StartSpan("web.request", tracer.ResourceName("/posts"))
	defer span.Finish()

	// Always drop this trace:
	span.SetTag(ext.ManualDrop, true)
	// Method implementation follows
}
```

Manually keep a trace (Node.js):

```javascript
const tracer = require('dd-trace')
const tags = require('dd-trace/ext/tags')

const span = tracer.startSpan('web.request')

// Always keep the trace
span.setTag(tags.MANUAL_KEEP)
// Method implementation follows
```

Manually drop a trace (Node.js):

```javascript
const tracer = require('dd-trace')
const tags = require('dd-trace/ext/tags')

const span = tracer.startSpan('web.request')

// Always drop the trace
span.setTag(tags.MANUAL_DROP)
// Method implementation follows
```

Manually keep a trace (.NET):

```csharp
using Datadog.Trace;

using (var scope = Tracer.Instance.StartActive(operationName))
{
    var span = scope.Span;

    // Always keep this trace
    span.SetTag(Tags.ManualKeep, "true");
    // Method implementation follows
}
```

Manually drop a trace (.NET):

```csharp
using Datadog.Trace;

using (var scope = Tracer.Instance.StartActive(operationName))
{
    var span = scope.Span;

    // Always drop this trace
    span.SetTag(Tags.ManualDrop, "true");
    // Method implementation follows
}
```

Manually keep a trace (PHP):

```php
<?php
$tracer = \OpenTracing\GlobalTracer::get();
$span = $tracer->getActiveSpan();

if (null !== $span) {
    // Always keep this trace
    $span->setTag(\DDTrace\Tag::MANUAL_KEEP, true);
    // Method implementation follows
}
?>
```

Manually drop a trace (PHP):

```php
<?php
$tracer = \OpenTracing\GlobalTracer::get();
$span = $tracer->getActiveSpan();

if (null !== $span) {
    // Always drop this trace
    $span->setTag(\DDTrace\Tag::MANUAL_DROP, true);
    // Method implementation follows
}
?>
```

Manually keep a trace (C++):

```cpp
...
#include <datadog/tags.h>
...
auto tracer = ...
auto span = tracer->StartSpan("operation_name");

// Always keep this trace
span->SetTag(datadog::tags::manual_keep, {});
// Method implementation follows
```

Manually drop a trace (C++):

```cpp
...
#include <datadog/tags.h>
...
auto tracer = ...
auto another_span = tracer->StartSpan("operation_name");

// Always drop this trace
another_span->SetTag(datadog::tags::manual_drop, {});
// Method implementation follows
```

Note: Set trace priority manually only before any context propagation. If the priority is changed after a context has been propagated, the system can't ensure that the entire trace is kept across services. Because manually controlled trace priority is set in the tracing client, the trace can still be dropped at the Agent or server level based on the sampling rules.
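The ordering constraint exists because the priority travels with the trace context. The sketch below models this with the Datadog propagation headers `x-datadog-trace-id`, `x-datadog-parent-id`, and `x-datadog-sampling-priority`; the `Context` class and the `inject`/`extract` helpers are hypothetical stand-ins, not a tracer API. A priority change made after `inject` never reaches the downstream service.

```python
from dataclasses import dataclass

MANUAL_KEEP = 2  # sampling priority value for a user "keep" decision
AUTO_DROP = 0    # sampling priority value for an automatic "drop" decision


@dataclass
class Context:
    """Hypothetical, minimal trace context."""
    trace_id: int
    span_id: int
    sampling_priority: int


def inject(ctx: Context) -> dict:
    """Copy the context into outgoing request headers (values are decimal strings)."""
    return {
        "x-datadog-trace-id": str(ctx.trace_id),
        "x-datadog-parent-id": str(ctx.span_id),
        "x-datadog-sampling-priority": str(ctx.sampling_priority),
    }


def extract(headers: dict) -> int:
    """Priority a downstream service would read from the incoming headers."""
    return int(headers["x-datadog-sampling-priority"])


# Correct: set the priority first, then propagate.
ctx = Context(trace_id=1234, span_id=5678, sampling_priority=AUTO_DROP)
ctx.sampling_priority = MANUAL_KEEP
early = inject(ctx)

# Too late: the headers were already built with AUTO_DROP.
ctx2 = Context(trace_id=4321, span_id=8765, sampling_priority=AUTO_DROP)
late = inject(ctx2)
ctx2.sampling_priority = MANUAL_KEEP

print(extract(early), extract(late))  # 2 0
```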

Trace storage

Individual traces are stored for 30 days: all sampled traces are retained for 30 days, and at the end of the 30th day the entire set of expired traces is deleted. In addition, once a trace has been viewed by opening it in a full page, it remains available through its trace ID in the URL `/apm/trace/<TRACE_ID>`, even if it "expires" from the UI. This behavior is independent of the UI retention time buckets.
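As a worked example of the retention rule, assuming a hypothetical trace ingested at a given UTC timestamp and reading "30 days" as a plain 30-day offset, the approximate expiry time and the permalink path can be computed as follows. The exact moment of deletion on the 30th day is not specified, so treat the computed time as approximate; the trace ID is made up.

```python
from datetime import datetime, timedelta, timezone

RETENTION = timedelta(days=30)


def expires_at(ingested_at: datetime) -> datetime:
    """Approximate time a sampled trace ages out under 30-day retention."""
    return ingested_at + RETENTION


def trace_path(trace_id: int) -> str:
    """Path that keeps a previously viewed trace reachable by its ID."""
    return f"/apm/trace/{trace_id}"


ingested = datetime(2024, 3, 1, 12, 0, tzinfo=timezone.utc)
print(expires_at(ingested).isoformat())  # 2024-03-31T12:00:00+00:00
print(trace_path(1234567890))  # /apm/trace/1234567890
```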
