Create a Scheduled Rule

Overview

Scheduled detection rules run at predefined intervals to analyze indexed log data and detect security threats. These rules can identify patterns, anomalies, or specific conditions within a defined time frame, and trigger alerts or reports if the criteria are met.

Scheduled rules complement real-time monitoring by ensuring periodic, in-depth analysis of logs using calculated fields.

Create a rule

- To create a detection rule, navigate to the Create a New Detection page.

- Select Scheduled Rule.

Define your scheduled rule

Select the detection method you want to use for creating signals.

Define search queries

Choose the query language you want to use.

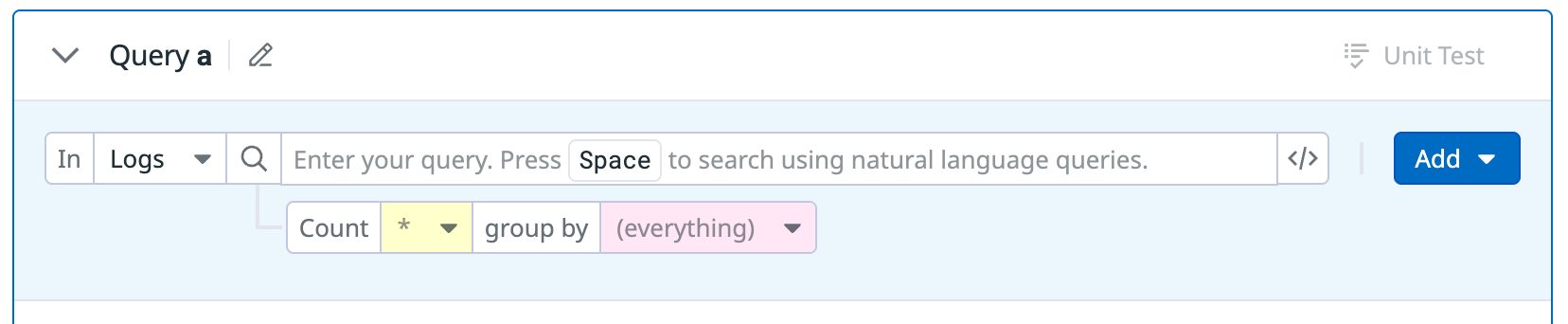

Event Query

To search Audit Trail events or events from Events Management, click the down arrow next to Logs and select Audit Trail or Events.

If you are an add-on and see the Index dropdown menu, select the index of logs you want to analyze.

Construct a search query for your logs or events using the Log Explorer search syntax.

(Optional) In the Count dropdown menu, select attributes whose unique values are counted over the specified time frame.

(Optional) In the group by dropdown menu, select attributes you want to group by.

- The defined `group by` generates a signal for each `group by` value.

- Typically, the `group by` is an entity (like user or IP). The `group by` is also used to join the queries together.

- Joining logs that span a time frame can increase the confidence or severity of the security signal. For example, to detect a successful brute force attack, both successful and unsuccessful authentication logs must be correlated for a user.
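The brute force example can be sketched in a few lines. This is only an illustration of correlating two queries through a shared `group by` attribute, not Datadog's implementation: the log shape (`user`, `outcome`), the function name `brute_force_signals`, and the threshold of five failures are all assumptions. The sketch also ignores the order of events within the time frame.

```python
from collections import Counter


def brute_force_signals(logs, min_failures=5):
    """Return users with at least `min_failures` failed logins and one success.

    `logs` is an iterable of dicts with the hypothetical keys `user` and
    `outcome`. Both counters are keyed by user, mirroring a `group by` on
    the user attribute that joins the two queries.
    """
    failures = Counter()   # query a: failed authentications per user
    successes = Counter()  # query b: successful authentications per user
    for log in logs:
        if log["outcome"] == "failure":
            failures[log["user"]] += 1
        elif log["outcome"] == "success":
            successes[log["user"]] += 1
    # Similar in spirit to a condition such as `a >= 5 && b > 0`, per user.
    return sorted(
        user for user in failures
        if failures[user] >= min_failures and successes[user] > 0
    )


sample_logs = [{"user": "alice", "outcome": "failure"}] * 5
sample_logs += [{"user": "alice", "outcome": "success"}]
sample_logs += [{"user": "bob", "outcome": "failure"}] * 6
print(brute_force_signals(sample_logs))
```

Here only `alice` is reported, because `bob` has failed logins but no successful one to correlate with.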

(Optional) To create calculated fields that transform your logs during query time:

- Click Add and select Calculated fields.

- In Name your field, enter a descriptive name that indicates the purpose of the calculated field.

- For example, if you want to combine users’ first and last name into one field, you might name the calculated field `fullName`.

- In the Define your formula field, enter a formula or expression, which determines the result to be computed and stored as the value of the calculated field for each log event.

- See Calculated Fields Expressions Language for information on syntax and language constructs.

(Optional) To filter logs using reference tables:

- Click the Add button next to the query editor and select Join with Reference Table.

- In the Inner join with reference table dropdown menu, select your reference table.

- In the where field dropdown menu, select the log field to join on.

- Select the IN or NOT IN operator to filter in or filter out matching logs.

- In the column dropdown menu, select the column of the reference table to join on.
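The effect of the IN and NOT IN operators can be pictured with a small sketch. It assumes logs and reference table rows are plain dictionaries; the function name `filter_by_reference_table` and the field names in the example are hypothetical and do not correspond to a Datadog API.

```python
def filter_by_reference_table(logs, log_field, table_rows, column, operator="IN"):
    """Keep logs whose `log_field` value is (IN) or is not (NOT IN) in a table column."""
    if operator not in ("IN", "NOT IN"):
        raise ValueError("operator must be 'IN' or 'NOT IN'")
    # Collect the values of the chosen reference table column once.
    table_values = {row[column] for row in table_rows}
    keep_matches = operator == "IN"
    return [
        log for log in logs
        if (log.get(log_field) in table_values) == keep_matches
    ]


# Hypothetical data: a reference table of known scanner IP addresses.
scanners = [{"ip": "203.0.113.7"}, {"ip": "198.51.100.9"}]
logs = [
    {"client_ip": "203.0.113.7", "path": "/login"},
    {"client_ip": "192.0.2.44", "path": "/login"},
]
print(filter_by_reference_table(logs, "client_ip", scanners, "ip", "NOT IN"))
```

With `NOT IN`, the log from the listed scanner address is filtered out and only the second log remains.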

(Optional) To test your rules against sample logs, click Unit Test.

- To construct a sample log, you can:

- Navigate to Log Explorer in a new window.

- In the search bar, enter the query you are using for the detection rule.

- Select one of the logs.

- Click the export button at the top right side of the log side panel, and then select Copy.

- Navigate back to the Unit Test modal, and then paste the log into the text box. Edit the sample as needed for your use case.

- Toggle the switch for Query is expected to match based on the example event to fit your use case.

- Click Run Query Test.

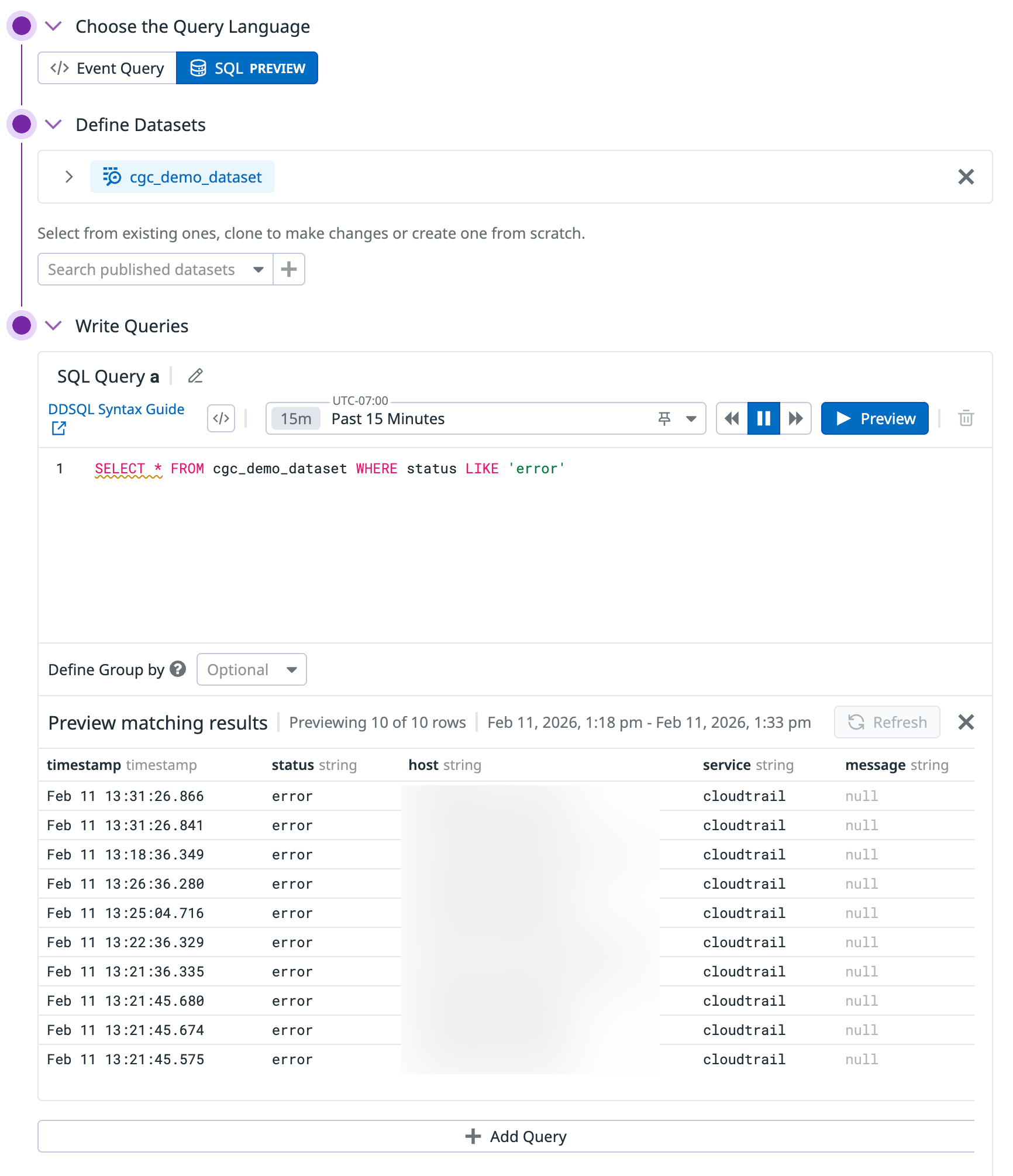

SQL

SQL queries are in Preview.

You can use SQL syntax to write detection rules for additional flexibility, consistency, and portability. For information on the available syntax, see DDSQL Reference.

In Datadog, SQL queries are compatible with data stored in datasets. You can create datasets to format data already stored in tables for the following data types:

- Logs

- Audit Trail logs

- Events

- Security signals

- Spans

- RUM events

- Product Analytics events

- Cloud Network data

- NetFlow data

- Reference tables

- Infrastructure tables

- Under Define Datasets, choose one or more datasets to use in your query. In the dropdown, you can select an existing published dataset to either use or clone, or click the New icon to create a dataset from scratch.

- If you chose an existing dataset and made changes, click Update to apply those changes to that dataset, or Clone With Changes to create a dataset with your changes applied.

- If you created a dataset, click Create so you can use it in your rule.

- Under Write Queries, enter one or more SQL queries. For more information, see DDSQL Reference. Click Preview to see a list of matching results.

To search Audit Trail events or events from Events Management, click the down arrow next to Logs and select Audit Trail or Events.

If you are an add-on and see the Index dropdown menu, select the index of logs you want to analyze.

Construct a search query for your logs or events using the Log Explorer search syntax.

In the Detect new value dropdown menu, select the attributes you want to detect.

- For example, if you create a query for successful user authentication with the following settings:

  - Detect new value is `country`

  - group by is `user`

  - Learning duration is `after 7 days`

  Then, logs coming in over the next 7 days are evaluated with those configured values. If a log comes in with a new value after the learning duration (7 days), a signal is generated, and the new value is learned to prevent future signals with this value.

- You can also identify users and entities using multiple Detect new value attributes in a single query.

  - For example, if you want to detect when a user signs in from a new device and from a country that they’ve never signed in from before, add `device_id` and `country_name` to the Detect new value field.
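The learning behavior in the `country` / `user` example can be sketched as follows. This is a simplified model under stated assumptions, not Datadog's implementation: the class name `NewValueDetector` is hypothetical, and the sketch assumes the learning duration is counted per `group by` value from the first event seen for that value.

```python
from datetime import datetime, timedelta


class NewValueDetector:
    """Toy model of new value detection with a learning duration."""

    def __init__(self, learning_duration=timedelta(days=7)):
        self.learning_duration = learning_duration
        self.first_seen = {}  # group by value -> timestamp of its first event
        self.known = {}       # group by value -> set of learned values

    def observe(self, group, value, timestamp):
        """Record one event and return True if it should generate a signal."""
        start = self.first_seen.setdefault(group, timestamp)
        values = self.known.setdefault(group, set())
        is_new = value not in values
        values.add(value)  # the value is learned whether or not a signal fires
        still_learning = timestamp - start < self.learning_duration
        return is_new and not still_learning


detector = NewValueDetector()
t0 = datetime(2024, 1, 1)
print(detector.observe("alice", "US", t0))                      # learning period
print(detector.observe("alice", "FR", t0 + timedelta(days=3)))  # learning period
print(detector.observe("alice", "DE", t0 + timedelta(days=8)))  # new value after learning
print(detector.observe("alice", "DE", t0 + timedelta(days=9)))  # already learned
```

Only the third call returns `True`: the value is new and arrives after the 7-day learning duration. The fourth call returns `False` because the value was learned when the signal was generated.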

(Optional) Define a signal grouping in the group by dropdown menu.

- The defined `group by` generates a signal for each `group by` value.

- Typically, the `group by` is an entity (like user or IP address).

In the dropdown menu to the right of group by, select the learning duration.

(Optional) To create calculated fields that transform your logs during query time:

- Click Add and select Calculated fields.

- In Name your field, enter a descriptive name that indicates the purpose of the calculated field.

- For example, if you want to combine users’ first and last name into one field, you might name the calculated field `fullName`.

- In the Define your formula field, enter a formula or expression, which determines the result to be computed and stored as the value of the calculated field for each log event.

- See Calculated Fields Expressions Language for information on syntax and language constructs.

(Optional) To filter logs using reference tables:

- Click the Add button next to the query editor and select Join with Reference Table.

- In the Inner join with reference table dropdown menu, select your reference table.

- In the where field dropdown menu, select the log field to join on.

- Select the IN or NOT IN operator to filter in or filter out matching logs.

- In the column dropdown menu, select the column of the reference table to join on.

(Optional) To test your rules against sample logs, click Unit Test.

- To construct a sample log, you can:

- Navigate to Log Explorer in a new window.

- In the search bar, enter the query you are using for the detection rule.

- Select one of the logs.

- Click the export button at the top right side of the log side panel, and then select Copy.

- Navigate back to the Unit Test modal, and then paste the log into the text box. Edit the sample as needed for your use case.

- Toggle the switch for Query is expected to match based on the example event to fit your use case.

- Click Run Query Test.

To search Audit Trail events or events from Events Management, click the down arrow next to Logs and select Audit Trail or Events.

If you are an add-on and see the Index dropdown menu, select the index of logs you want to analyze.

Construct a search query for your logs or events using the Log Explorer search syntax.

(Optional) In the Count dropdown menu, select attributes whose unique values you want to count during the specified time frame.

(Optional) In the group by dropdown menu, select attributes you want to group by.

- The defined `group by` generates a signal for each `group by` value.

- Typically, the `group by` is an entity (like user or IP). The `group by` can also join the queries together.

- Joining logs that span a time frame can increase the confidence or severity of the security signal. For example, if you want to detect a successful brute force attack, both successful and unsuccessful authentication logs must be correlated for a user.

- Anomaly detection inspects how the `group by` attribute has behaved in the past. If a `group by` attribute is seen for the first time (for example, the first time an IP is communicating with your system) and is anomalous, it does not generate a security signal because the anomaly detection algorithm has no historical data to compare with.

(Optional) To create calculated fields that transform your logs during query time:

- Click Add and select Calculated fields.

- In Name your field, enter a descriptive name that indicates the purpose of the calculated field.

- For example, if you want to combine users’ first and last name into one field, you might name the calculated field `fullName`.

- In the Define your formula field, enter a formula or expression, which determines the result to be computed and stored as the value of the calculated field for each log event.

- See Calculated Fields Expressions Language for information on syntax and language constructs.

(Optional) To filter logs using reference tables:

- Click the Add button next to the query editor and select Join with Reference Table.

- In the Inner join with reference table dropdown menu, select your reference table.

- In the where field dropdown menu, select the log field to join on.

- Select the IN or NOT IN operator to filter in or filter out matching logs.

- In the column dropdown menu, select the column of the reference table to join on.

(Optional) To test your rules against sample logs, click Unit Test.

- To construct a sample log, you can:

- Navigate to Log Explorer in a new window.

- In the search bar, enter the query you are using for the detection rule.

- Select one of the logs.

- Click the export button at the top right side of the log side panel, and then select Copy.

- Navigate back to the Unit Test modal, and then paste the log into the text box. Edit the sample as needed for your use case.

- Toggle the switch for Query is expected to match based on the example event to fit your use case.

- Click Run Query Test.

To search Audit Trail events or events from Events Management, click the down arrow next to Logs and select Audit Trail or Events.

If you are an add-on and see the Index dropdown menu, select the index of logs you want to analyze.

Construct a search query for your logs or events using the Log Explorer search syntax.

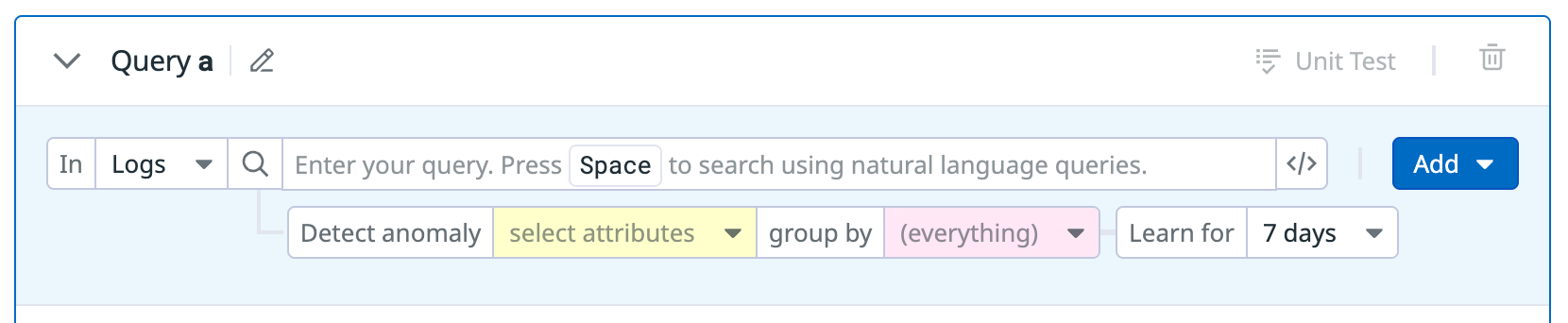

In the Detect anomaly field, specify the fields whose values you want to analyze.

In the group by field, specify the fields you want to group by.

- The defined `group by` generates a signal for each `group by` value.

- Typically, the `group by` is an entity (like user or IP). The `group by` can also join the queries together.

- Joining logs that span a time frame can increase the confidence or severity of the security signal. For example, to detect a successful brute force attack, both successful and unsuccessful authentication logs must be correlated for a user.

In the Learn for dropdown menu, select the number of days for the learning period. During the learning period, the rule sets a baseline of normal field values and does not generate any signals.

- Note: If the detection rule is modified, the learning period restarts at day `0`.

(Optional) To create calculated fields that transform your logs during query time:

- Click Add and select Calculated fields.

- In Name your field, enter a descriptive name that indicates the purpose of the calculated field.

- For example, if you want to combine users’ first and last name into one field, you might name the calculated field `fullName`.

- In the Define your formula field, enter a formula or expression, which determines the result to be computed and stored as the value of the calculated field for each log event.

- See Calculated Fields Expressions Language for information on syntax and language constructs.

(Optional) To filter logs using reference tables:

- Click the Add button next to the query editor and select Join with Reference Table.

- In the Inner join with reference table dropdown menu, select your reference table.

- In the where field dropdown menu, select the log field to join on.

- Select the IN or NOT IN operator to filter in or filter out matching logs.

- In the column dropdown menu, select the column of the reference table to join on.

(Optional) To test your rules against sample logs, click Unit Test.

- To construct a sample log, you can:

- Navigate to Log Explorer in a new window.

- In the search bar, enter the query you are using for the detection rule.

- Select one of the logs.

- Click the export button at the top right side of the log side panel, and then select Copy.

- Navigate back to the Unit Test modal, and then paste the log into the text box. Edit the sample as needed for your use case.

- Toggle the switch for Query is expected to match based on the example event to fit your use case.

- Click Run Query Test.

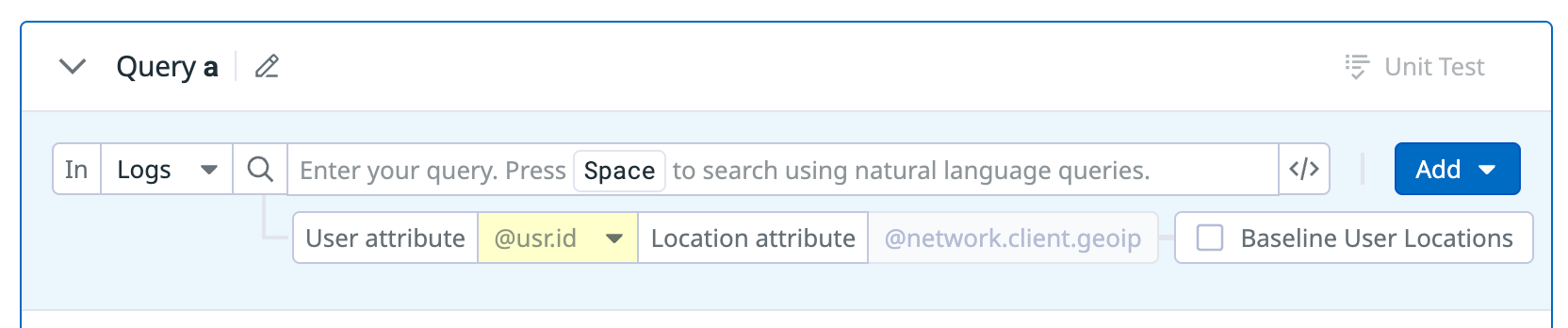

- To search Audit Trail events or events from Events Management, click the down arrow next to Logs and select Audit Trail or Events.

- If you are an add-on and see the Index dropdown menu, select the index of logs you want to analyze.

- Construct a search query for your logs or events using the Log Explorer search syntax.

- In the User attribute dropdown menu, select the log attribute that contains the user ID. This can be an identifier like an email address, user name, or account identifier.

- The Location attribute value is automatically set to `@network.client.geoip`.

- The `location attribute` specifies which field holds the geographic information for a log.

- The only supported value is `@network.client.geoip`, which is enriched by the GeoIP parser to give a log location information based on the client’s IP address.

- Click the Baseline user locations checkbox if you want Datadog to learn regular access locations before triggering a signal.

- When selected, signals are suppressed for the first 24 hours. During that time, Datadog learns the user’s regular access locations. This can be helpful to reduce noise and infer VPN usage or credentialed API access.

- See How the impossible detection method works for more information.
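As a rough intuition for what an impossible travel check does, the sketch below compares the great-circle distance between two consecutive locations for the same user against the time elapsed between them. It is not Datadog's algorithm, which this page does not describe: the function names, the tuple layout, and the 1000 km/h speed limit are assumptions chosen for illustration.

```python
import math


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometers between two latitude/longitude points."""
    earth_radius_km = 6371.0
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    return 2 * earth_radius_km * math.asin(math.sqrt(a))


def is_impossible_travel(previous, current, max_speed_kmh=1000.0):
    """Return True if moving between two (lat, lon, epoch_seconds) points is too fast.

    `max_speed_kmh` is an assumed threshold, roughly the speed of an airliner.
    """
    distance = haversine_km(previous[0], previous[1], current[0], current[1])
    hours = (current[2] - previous[2]) / 3600.0
    if hours <= 0:
        # Two different places at the same instant cannot be the same traveler.
        return distance > 0
    return distance / hours > max_speed_kmh


paris = (48.8566, 2.3522, 0)
new_york_one_hour_later = (40.7128, -74.0060, 3600)
print(is_impossible_travel(paris, new_york_one_hour_later))
```

Baselining a user's regular locations, as described above, addresses cases such a naive check would flag incorrectly, for example VPN usage.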

(Optional) To create calculated fields that transform your logs during query time:

- Click Add and select Calculated fields.

- In Name your field, enter a descriptive name that indicates the purpose of the calculated field.

- For example, if you want to combine users’ first and last name into one field, you might name the calculated field `fullName`.

- In the Define your formula field, enter a formula or expression, which determines the result to be computed and stored as the value of the calculated field for each log event.

- See Calculated Fields Expressions Language for information on syntax and language constructs.

(Optional) To filter logs using reference tables:

- Click the Add button next to the query editor and select Join with Reference Table.

- In the Inner join with reference table dropdown menu, select your reference table.

- In the where field dropdown menu, select the log field to join on.

- Select the IN or NOT IN operator to filter in or filter out matching logs.

- In the column dropdown menu, select the column of the reference table to join on.

(Optional) To test your rules against sample logs, click Unit Test.

- To construct a sample log, you can:

- Navigate to Log Explorer in a new window.

- In the search bar, enter the query you are using for the detection rule.

- Select one of the logs.

- Click the export button at the top right side of the log side panel, and then select Copy.

- Navigate back to the Unit Test modal, and then paste the log into the text box. Edit the sample as needed for your use case.

- Toggle the switch for Query is expected to match based on the example event to fit your use case.

- Click Run Query Test.

Note: All logs and events matching this query are analyzed for a potential impossible travel.

To search Audit Trail events or events from Events Management, click the down arrow next to Logs and select Audit Trail or Events.

If you are an add-on and see the Index dropdown menu, select the index of logs you want to analyze.

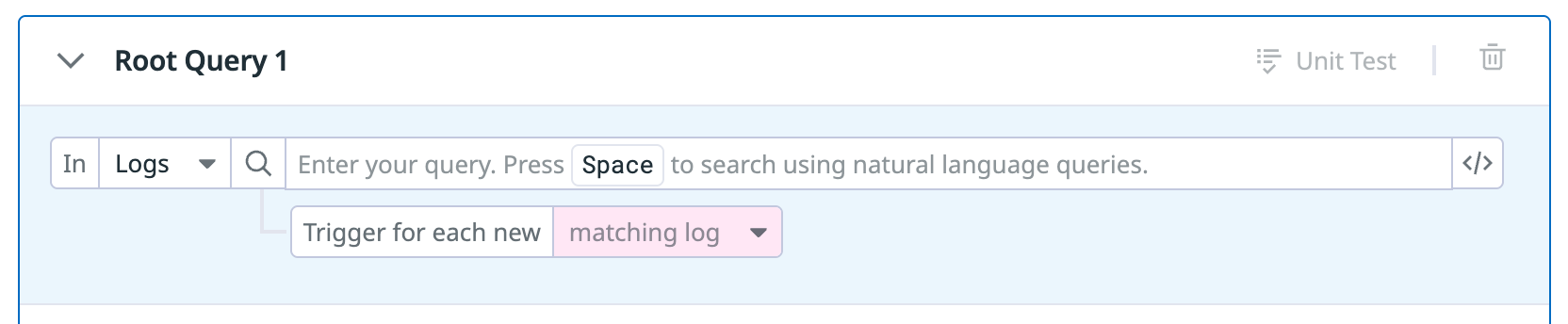

Construct a root query for your logs or events using the Log Explorer search syntax.

In the Trigger for each new dropdown menu, select the attributes for which a signal is generated for each new attribute value over a 24-hour roll-up period.

(Optional) To create calculated fields that transform your logs during query time:

- Click Add and select Calculated fields.

- In Name your field, enter a descriptive name that indicates the purpose of the calculated field.

- For example, if you want to combine users’ first and last name into one field, you might name the calculated field `fullName`.

- In the Define your formula field, enter a formula or expression, which determines the result to be computed and stored as the value of the calculated field for each log event.

- See Calculated Fields Expressions Language for information on syntax and language constructs.

(Optional) To filter logs using reference tables:

- Click the Add button next to the query editor and select Join with Reference Table.

- In the Inner join with reference table dropdown menu, select your reference table.

- In the where field dropdown menu, select the log field to join on.

- Select the IN or NOT IN operator to filter in or filter out matching logs.

- In the column dropdown menu, select the column of the reference table to join on.

(Optional) To test your rules against sample logs, click Unit Test.

- To construct a sample log, you can:

- Navigate to Log Explorer in a new window.

- In the search bar, enter the query you are using for the detection rule.

- Select one of the logs.

- Click the export button at the top right side of the log side panel, and then select Copy.

- Navigate back to the Unit Test modal, and then paste the log into the text box. Edit the sample as needed for your use case.

- Toggle the switch for Query is expected to match based on the example event to fit your use case.

- Click Run Query Test.

Click Add Root Query to add additional queries.

- Select a rule for Rule a.

- Click the pencil icon to rename the rule.

- Use the correlated by dropdown to define the correlating attribute.

- You can select multiple attributes (maximum of 3) to correlate the selected rules.

- Select a rule for Rule b in the second Rule editor’s dropdown.

- The attributes and sliding window time frame are automatically set to what was selected for Rule a.

- Click the pencil icon to rename the rule.

Set conditions

- If you have a single query, skip to step 2. If you have multiple queries, you can create a Simple condition or Then condition.

- If you want to create a simple condition, leave the selection as is.

- If you want to create a `then` condition, click THEN condition.

- Use the Then condition when you want to trigger a signal if query A occurs and then query B occurs.

- Note: The `then` operator can only be used on a single rule condition.

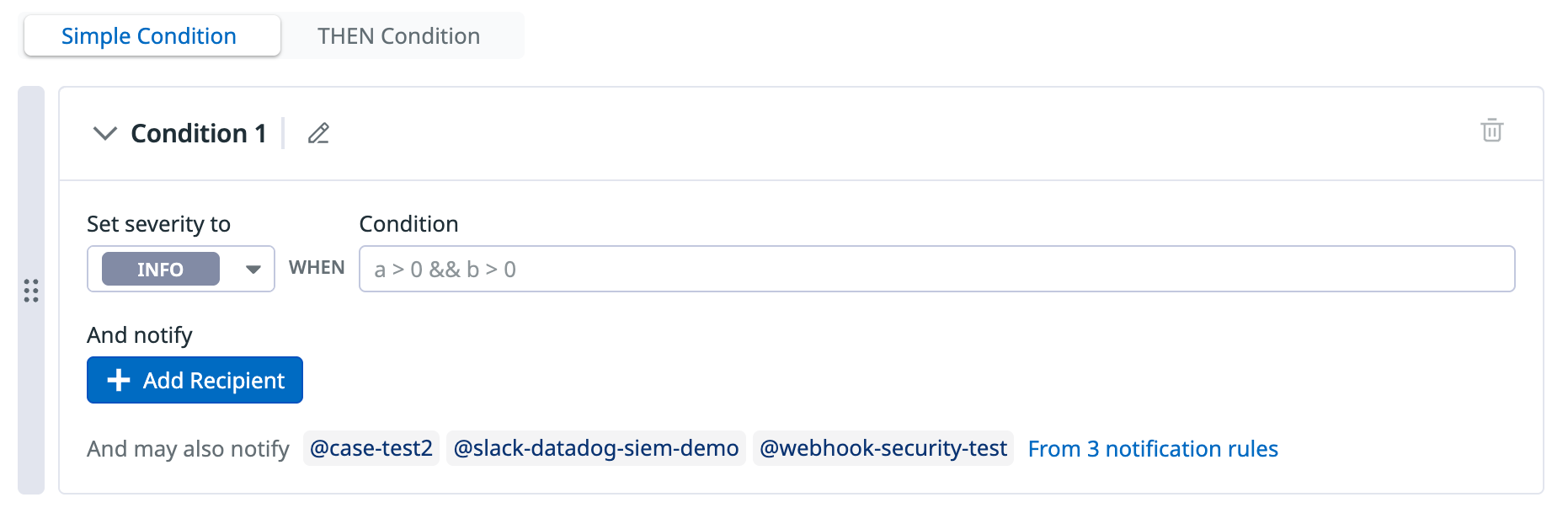

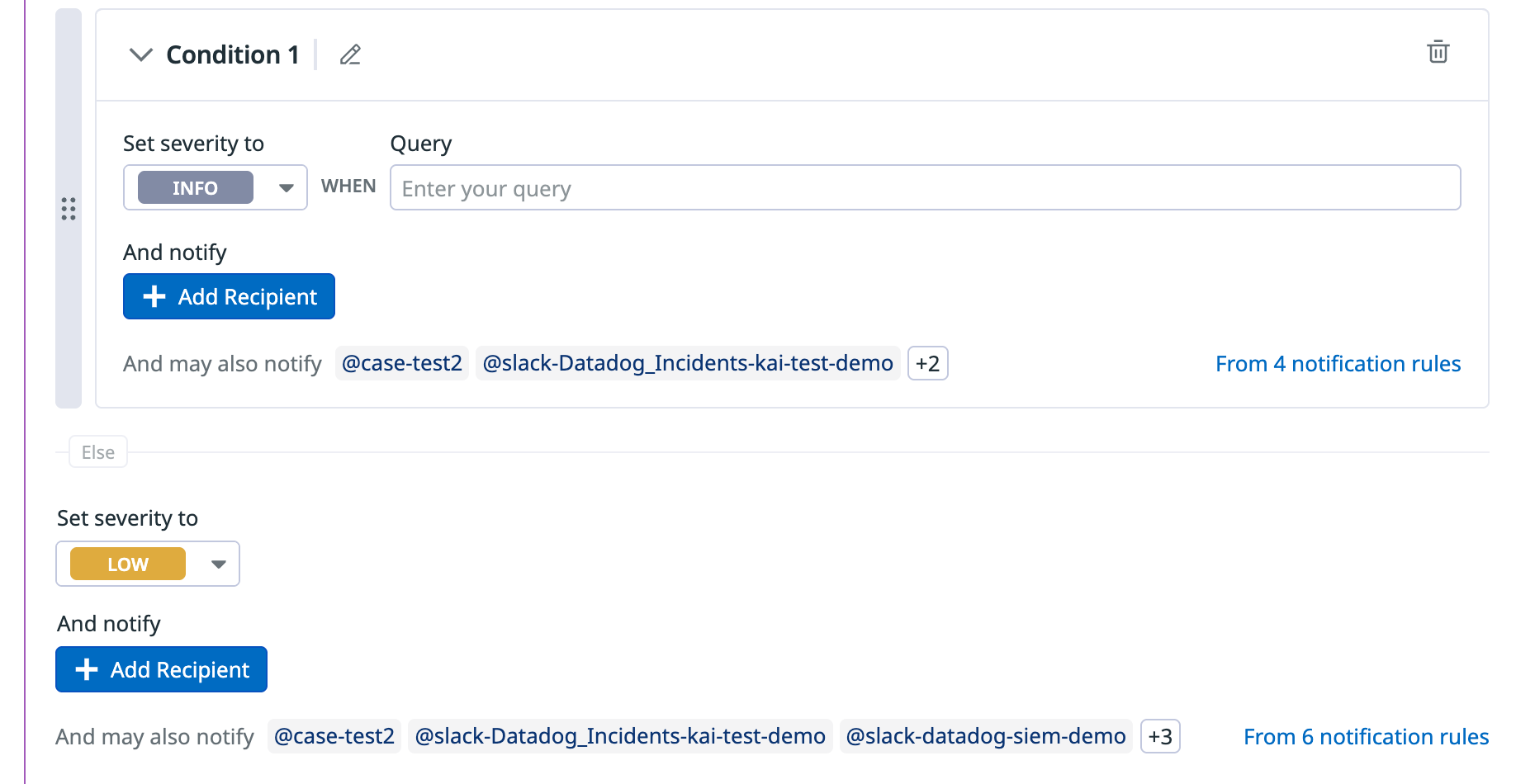

- (Optional) Click the pencil icon next to Condition 1 if you want to rename the condition. This name is appended to the rule name when a signal is generated.

- In the Set severity to dropdown menu, select the appropriate severity level (`INFO`, `LOW`, `MEDIUM`, `HIGH`, `CRITICAL`).

- If you are creating a Simple condition, enter the condition when a signal should be created. If you are creating a Then condition, enter the conditions required for a signal to be generated.

- All rule conditions are evaluated as condition statements. Thus, the order of the conditions affects which notifications are sent because the first condition to match generates the signal. Click and drag your rule conditions to change their order.

- A rule condition contains logical operations (`>`, `>=`, `<`, `&&`, `||`) to determine if a signal should be generated based on the event counts in the previously defined queries.

- The ASCII lowercase query labels are referenced in this section. An example rule condition for query `a` is `a > 3`.

- Note: The query label must precede the operator. For example, `a > 3` is allowed; `3 < a` is not allowed.
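Because the first matching condition generates the signal, the order of conditions matters. The sketch below illustrates that first-match behavior. It is an assumption-laden model rather than Datadog's evaluator: the function name and tuple layout are hypothetical, and for brevity each condition is a list of clauses joined with `&&` only (no `||`).

```python
import operator

# Comparison operators used in this sketch; the query label is always on the left.
OPS = {">": operator.gt, ">=": operator.ge, "<": operator.lt}


def first_matching_condition(conditions, counts):
    """Return (name, severity) of the first condition whose clauses all hold.

    `conditions` is an ordered list of (name, severity, clauses), where each
    clause is (query_label, operator, threshold). `counts` maps query labels
    such as "a" to event counts.
    """
    for name, severity, clauses in conditions:
        if all(OPS[op](counts.get(label, 0), threshold) for label, op, threshold in clauses):
            return name, severity
    return None


conditions = [
    ("many failures", "HIGH", [("a", ">", 10)]),
    ("some failures", "LOW", [("a", ">", 3)]),
]
print(first_matching_condition(conditions, {"a": 12}))
print(first_matching_condition(list(reversed(conditions)), {"a": 12}))
```

With the original order, a count of 12 produces the `HIGH` condition. With the order reversed, the same count matches `a > 3` first and produces `LOW`, which is why stricter conditions are usually placed first.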

- (Optional) In the Add notify section, click Add Recipient to configure notification targets.

- You can also create notification rules to avoid manual edits to notification preferences for individual detection rules.

Other parameters

1. Rule multi-triggering

Configure how often you want to keep updating the same signal if new values are detected within a specified time frame. For example, the same signal updates if any new value is detected within 1 hour, for a maximum duration of 24 hours.

- An `evaluation window` is specified to match when at least one of the cases matches true. This is a sliding window and evaluates cases in real time.

- After a signal is generated, the signal remains “open” if a case is matched at least once within the `keep alive` window. Each time a new event matches any of the cases, the last updated timestamp is updated for the signal.

- A signal closes after the time exceeds the `maximum signal duration`, regardless of the query being matched. This time is calculated from the first seen timestamp.

- Note: The `evaluation window` must be less than or equal to the `keep alive` and `maximum signal duration`.
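The relationship between the three windows can be summarized in a short sketch. The function names are hypothetical and all times are plain seconds; this models the rules stated above rather than reproducing Datadog's internals.

```python
def signal_is_open(first_seen, last_updated, now, keep_alive, max_duration):
    """Return True while a signal should keep receiving updates.

    The signal closes once `max_duration` has elapsed since `first_seen`,
    even if events keep matching. Otherwise it stays open as long as the
    most recent match is within the `keep_alive` window.
    """
    if now - first_seen > max_duration:
        return False
    return now - last_updated <= keep_alive


def windows_are_valid(evaluation_window, keep_alive, max_duration):
    """The evaluation window must not exceed the keep alive or the maximum duration."""
    return evaluation_window <= keep_alive and evaluation_window <= max_duration


HOUR = 3600
# Keep alive of 1 hour and a maximum signal duration of 24 hours.
print(signal_is_open(0, 50 * 60, 55 * 60, keep_alive=HOUR, max_duration=24 * HOUR))
print(windows_are_valid(2 * HOUR, HOUR, 24 * HOUR))
```

The first call returns `True` because the last match was five minutes earlier. The second returns `False` because a 2-hour evaluation window exceeds a 1-hour keep alive.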

2. Decrease severity for non-production environments

Toggle Decrease severity for non-production environments if you want to prioritize production environment signals over non-production signals.

- The severity of signals in non-production environments is decreased by one level from what is defined by the rule case.

- The severity decrement is applied to signals with an environment tag starting with `staging`, `test`, or `dev`.
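A minimal sketch of the severity decrement follows. The function name is hypothetical, and the sketch assumes that `INFO`, already the lowest level, stays at `INFO`; the text above does not state what happens in that case.

```python
SEVERITIES = ["INFO", "LOW", "MEDIUM", "HIGH", "CRITICAL"]
NON_PROD_PREFIXES = ("staging", "test", "dev")


def effective_severity(rule_severity, env_tag, decrease_non_prod=True):
    """Lower the severity by one level when the environment tag looks non-production."""
    if decrease_non_prod and env_tag.startswith(NON_PROD_PREFIXES):
        # Assumption: the lowest level cannot be decreased further.
        index = max(SEVERITIES.index(rule_severity) - 1, 0)
        return SEVERITIES[index]
    return rule_severity


print(effective_severity("HIGH", "staging-eu"))
print(effective_severity("HIGH", "prod"))
```

The first call yields `MEDIUM` because the tag starts with `staging`. The second leaves `HIGH` unchanged.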

3. Enable optional group by

Toggle Enable Optional Group By section if you want to group events even when values are missing. If there is a missing value, a sample value is generated so that the log does not get excluded.

Other parameters

1. Forget value

In the Forget Value dropdown, select the number of days (1-30 days) after which the value is forgotten.

2. Rule multi-triggering behavior

Configure how often you want to keep updating the same signal if new values are detected within a specified time frame. For example, the same signal updates if any new value is detected within 1 hour, for a maximum duration of 24 hours.

- An `evaluation window` is specified to match when at least one of the cases matches true. This is a sliding window and evaluates cases in real time.

- After a signal is generated, the signal remains “open” if a case is matched at least once within the `keep alive` window. Each time a new event matches any of the cases, the last updated timestamp is updated for the signal.

- A signal closes after the time exceeds the `maximum signal duration`, regardless of the query being matched. This time is calculated from the first seen timestamp.

- Note: The `evaluation window` must be less than or equal to the `keep alive` and `maximum signal duration`.

3. Decrease severity for non-production environments

Toggle Decrease severity for non-production environments if you want to prioritize production environment signals over non-production signals.

- The severity of signals in non-production environments is decreased by one level from what is defined by the rule case.

- The severity decrement is applied to signals with an environment tag starting with `staging`, `test`, or `dev`.

4. Enable optional group by

Toggle Enable Optional Group By section if you want to group events even when values are missing. If there is a missing value, a sample value is generated so that the log does not get excluded.

5. Enable instantaneous baseline

Toggle Enable instantaneous baseline if you want to build the baseline based on past events for the first event received.

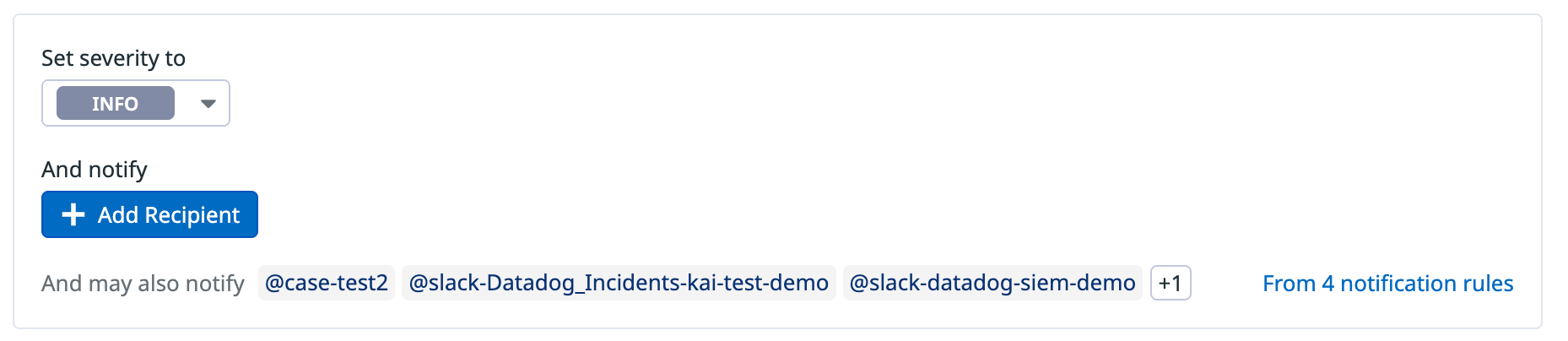

- In the Set severity to dropdown menu, select the appropriate severity level (`INFO`, `LOW`, `MEDIUM`, `HIGH`, `CRITICAL`).

- (Optional) In the Add notify section, click Add Recipient to configure notification targets.

- You can create notification rules to manage notifications automatically, avoiding manual edits for each detection rule.

Other parameters

1. Rule multi-triggering

Configure how often you want to keep updating the same signal if new values are detected within a specified time frame. For example, the same signal updates if any new value is detected within 1 hour, for a maximum duration of 24 hours.

- An `evaluation window` is specified to match when at least one of the cases matches true. This is a sliding window and evaluates cases in real time.

- After a signal is generated, the signal remains “open” if a case is matched at least once within the `keep alive` window. Each time a new event matches any of the cases, the last updated timestamp is updated for the signal.

- A signal closes after the time exceeds the `maximum signal duration`, regardless of the query being matched. This time is calculated from the first seen timestamp.

- Note: The `evaluation window` must be less than or equal to the `keep alive` and `maximum signal duration`.

2. Decrease severity for non-production environments

Toggle Decrease severity for non-production environments if you want to prioritize production environment signals over non-production signals.

- The severity of signals in non-production environments is decreased by one level from what is defined by the rule case.

- The severity decrement is applied to signals with an environment tag starting with `staging`, `test`, or `dev`.

3. Enable optional group by

Toggle Enable Optional Group By section if you want to group events even when values are missing. If there is a missing value, a sample value is generated so that the log does not get excluded.

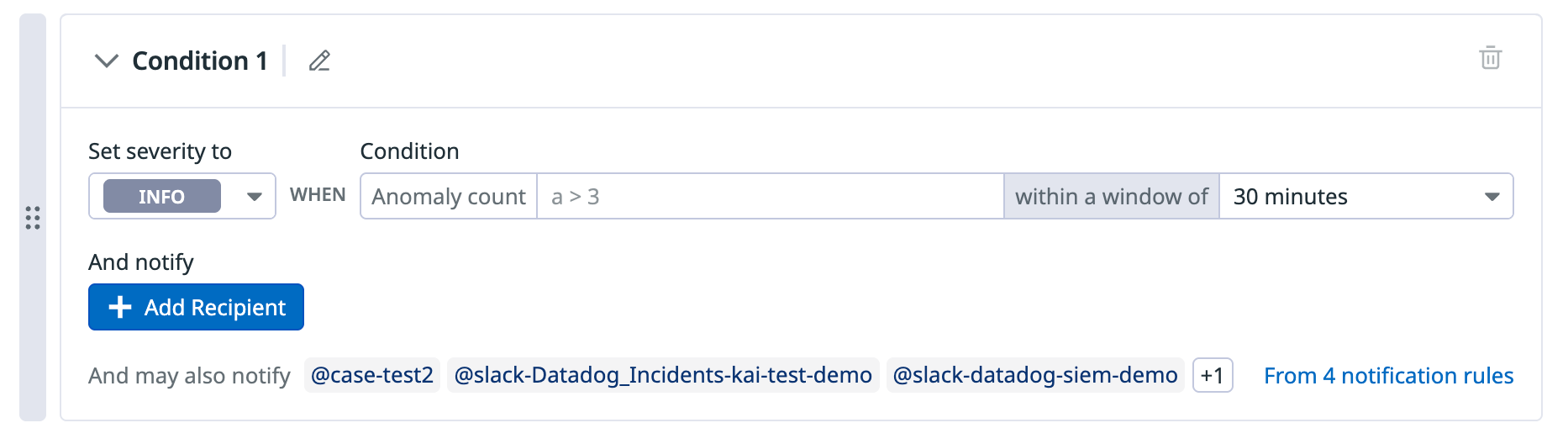

- (Optional) Click the pencil icon next to Condition 1 if you want to rename the condition. This name is appended to the rule name when a signal is generated.

- In the Set severity to dropdown menu, select the appropriate severity level (`INFO`, `LOW`, `MEDIUM`, `HIGH`, `CRITICAL`).

- In the Anomaly count field, enter the condition for how many anomalous logs within the specified window are required to trigger a signal.

- For example, if the condition is `a >= 3` where `a` is the query, a signal is triggered if there are at least three anomalous logs within the evaluation window.

- All rule conditions are evaluated as condition statements. Thus, the order of the conditions affects which notifications are sent because the first condition to match generates the signal. Click and drag your rule conditions to change their ordering.

- A rule condition contains logical operations (`>`, `>=`, `&&`, `||`) to determine if a signal should be generated based on the event counts in the previously defined queries.

- The ASCII lowercase query labels are referenced in this section. An example rule condition for query `a` is `a > 3`.

- Note: The query label must precede the operator. For example, `a > 3` is allowed; `3 < a` is not allowed.

- In the within a window of dropdown menu, select the time period during which a signal is triggered if the condition is met.

- An

evaluation windowis specified to match when at least one of the cases matches true. This is a sliding window and evaluates cases in real time.

- An

- In the Add notify section, click Add Recipient to optionally configure notification targets.

- You can also create notification rules to avoid manual edits to notification preferences for individual detection rules.
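The first-match behavior of ordered rule conditions can be sketched as follows. This is a minimal illustration, not the product's evaluator: the function names are hypothetical, and the sketch only handles single comparisons of the form `label operator number`, leaving out `&&` and `||`.

```python
import operator
import re
from typing import Optional

OPS = {">": operator.gt, ">=": operator.ge}
# The query label must come first, so "3 < a" does not match this pattern.
CONDITION_RE = re.compile(r"^\s*([a-z])\s*(>=|>)\s*(\d+)\s*$")


def matches(condition: str, counts: dict[str, int]) -> bool:
    """Evaluate one condition such as 'a >= 3' against per-query counts."""
    m = CONDITION_RE.match(condition)
    if m is None:
        raise ValueError(f"unsupported condition: {condition!r}")
    label, op, threshold = m.groups()
    return OPS[op](counts.get(label, 0), int(threshold))


def first_matching_severity(conditions: list[tuple[str, str]],
                            counts: dict[str, int]) -> Optional[str]:
    """Return the severity of the first condition that matches, if any."""
    for condition, severity in conditions:
        if matches(condition, counts):
            return severity
    return None
```

With conditions ordered as `a >= 10` (HIGH) then `a >= 3` (LOW), a count of 12 yields HIGH. Reversing the order yields LOW for the same count, which is why the ordering matters.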

Other parameters

1. Content anomaly detection

In the Content anomaly detection options section, specify the parameters to assess whether a log is anomalous or not.

- Content anomaly detection balances precision and sensitivity using several rule parameters that you can set:

  - Similarity threshold: Defines how dissimilar a field value must be to be considered anomalous (default: `70%`).

  - Minimum similar items: Sets how many similar historical logs must exist for a value to be considered normal (default: `1`).

  - Evaluation window: The time frame during which anomalies are counted toward a signal (for example, a 10-minute time frame).

- These parameters help to identify field content that is both unusual and rare, filtering out minor or common variations.

- See Anomaly detection parameters for more information.
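To make the roles of the two thresholds concrete, here is a rough sketch of how they could combine. It is an assumption-laden illustration: the similarity measure used here (Jaccard similarity over whitespace-separated tokens) is a stand-in chosen for simplicity, the function names are hypothetical, and the sketch assumes a historical value counts as similar when its similarity is at or above the threshold.

```python
def jaccard_similarity(left: str, right: str) -> float:
    """Token-set overlap between two strings, from 0.0 to 1.0."""
    a, b = set(left.lower().split()), set(right.lower().split())
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def is_anomalous(value: str, history: list[str],
                 similarity_threshold: float = 0.70,
                 min_similar_items: int = 1) -> bool:
    """Flag a value when too few historical values resemble it."""
    similar = sum(
        1 for past in history
        if jaccard_similarity(value, past) >= similarity_threshold
    )
    return similar < min_similar_items
```

Under these assumptions, raising the minimum similar items makes the rule more sensitive, because more matching history is needed before a value is treated as normal.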

2. Rule multi-triggering behavior

Configure how often you want to keep updating the same signal if new values are detected within a specified time frame. For example, the same signal updates if any new value is detected within 1 hour, for a maximum duration of 24 hours.

- After a signal is generated, the signal remains “open” if a case is matched at least once within the `keep alive` window. Each time a new event matches any of the cases, the last updated timestamp is updated for the signal.

- A signal closes after the time exceeds the `maximum signal duration`, regardless of the query being matched. This time is calculated from the first seen timestamp.

- Note: The `evaluation window` must be less than or equal to the `keep alive` and `maximum signal duration`.
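The constraint in the note can be checked before saving a rule. The helper below is a hypothetical sketch that expresses the three settings as `timedelta` values; the function name and error messages are illustrative only.

```python
from datetime import timedelta


def validate_windows(evaluation_window: timedelta, keep_alive: timedelta,
                     max_signal_duration: timedelta) -> list[str]:
    """Return a list of problems; an empty list means the settings are consistent."""
    errors = []
    if evaluation_window > keep_alive:
        errors.append("evaluation window exceeds keep alive")
    if evaluation_window > max_signal_duration:
        errors.append("evaluation window exceeds maximum signal duration")
    return errors
```

For instance, a 10-minute evaluation window with a 1-hour keep alive and a 24-hour maximum signal duration produces no errors.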

3. Decrease severity for non-production environments

Toggle Decrease severity for non-production environments if you want to prioritize production environment signals over non-production signals.

- The severity of signals in non-production environments is decreased by one level from what is defined by the rule case.

- The severity decrement is applied to signals with an environment tag starting with `staging`, `test`, or `dev`.

4. Enable optional group by

Toggle Enable Optional Group By section if you want to group events even when values are missing. If there is a missing value, a sample value is generated so that the log does not get excluded.

- In the Set severity to dropdown menu, select the appropriate severity level (`INFO`, `LOW`, `MEDIUM`, `HIGH`, `CRITICAL`).

- (Optional) In the Add notify section, click Add Recipient to configure notification targets.

- You can create notification rules to manage notifications automatically, avoiding manual edits for each detection rule.

Other parameters

1. Rule multi-triggering

Configure how often you want to keep updating the same signal if new values are detected within a specified time frame. For example, the same signal updates if any new value is detected within 1 hour, for a maximum duration of 24 hours.

- An `evaluation window` is specified to match when at least one of the cases matches true. This is a sliding window and evaluates cases in real time.

- After a signal is generated, the signal remains “open” if a case is matched at least once within the `keep alive` window. Each time a new event matches any of the cases, the last updated timestamp is updated for the signal.

- A signal closes after the time exceeds the `maximum signal duration`, regardless of the query being matched. This time is calculated from the first seen timestamp.

- Note: The `evaluation window` must be less than or equal to the `keep alive` and `maximum signal duration`.

2. Decrease severity for non-production environments

Toggle Decrease severity for non-production environments if you want to prioritize production environment signals over non-production signals.

- The severity of signals in non-production environments is decreased by one level from what is defined by the rule case.

- The severity decrement is applied to signals with an environment tag starting with `staging`, `test`, or `dev`.

3. Enable optional group by

Toggle Enable Optional Group By section if you want to group events even when values are missing. If there is a missing value, a sample value is generated so that the log does not get excluded.

- (Optional) Click the pencil icon next to Condition 1 if you want to rename the condition. This name is appended to the rule name when a signal is generated.

- In the Set severity to dropdown menu, select the appropriate severity level (`INFO`, `LOW`, `MEDIUM`, `HIGH`, `CRITICAL`).

- In the Query field, enter the tags of a log that you want to trigger a signal.

  - For example, if you want logs with the tag `dev:demo` to trigger signals with a severity of `INFO`, enter `dev:demo` in the query field. Similarly, if you want logs with the tag `dev:prod` to trigger signals with a severity of `MEDIUM`, enter `dev:prod` in the query field.

- (Optional) In the Add notify section, click Add Recipient to configure notification targets.

  - You can also create notification rules to avoid manual edits to notification preferences for individual detection rules.

- For the `else` condition, follow steps 3 and 4.

  - The `else` condition is the default condition. If you don’t add any other conditions, then all logs trigger a signal with the severity set in the default condition.
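The tag-to-severity mapping with an `else` fallback can be sketched as a simple lookup. This is an illustration under stated assumptions: the function name is hypothetical, queries are treated as exact tag matches (real queries can be richer), and the first matching condition is assumed to win.

```python
def severity_for_log(log_tags: set[str],
                     conditions: list[tuple[str, str]],
                     else_severity: str) -> str:
    """Return the severity of the first condition whose tag is on the log.

    Falls back to the `else` (default) severity when nothing matches.
    """
    for tag, severity in conditions:
        if tag in log_tags:
            return severity
    return else_severity
```

Using the example above, a log tagged `dev:prod` maps to `MEDIUM`, a log tagged `dev:demo` maps to `INFO`, and any other log receives the severity set in the `else` condition.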

Other parameters

1. Decrease severity for non-production environments

Toggle Decrease severity for non-production environments if you want to prioritize production environment signals over non-production signals.

- The severity of signals in non-production environments is decreased by one level from what is defined by the rule case.

- The severity decrement is applied to signals with an environment tag starting with `staging`, `test`, or `dev`.

2. Enable optional group by

Toggle Enable Optional Group By section if you want to group events even when values are missing. If there is a missing value, a sample value is generated so that the log does not get excluded.

- If you want to create a simple condition, leave the selection as is. If you want to create a `then` condition, click THEN condition.

  - Use the Then condition when you want to trigger a signal if query A occurs and then query B occurs.

  - Note: The `then` operator can only be used on a single rule condition.

- (Optional) Click the pencil icon next to Condition 1 if you want to rename the condition. This name is appended to the rule name when a signal is generated.

- In the Set severity to dropdown menu, select the appropriate severity level (`INFO`, `LOW`, `MEDIUM`, `HIGH`, `CRITICAL`).

- If you are creating a Simple condition, enter the condition when a signal should be created. If you are creating a Then condition, enter the conditions required for a signal to be generated.

  - All rule conditions are evaluated as conditional statements. Thus, the order of the conditions affects which notifications are sent because the first condition to match generates the signal. Click and drag your rule conditions to change their order.

  - A rule condition contains logical operations (`>`, `>=`, `<`, `&&`, `||`) to determine if a signal should be generated based on the event counts in the previously defined queries.

  - The ASCII lowercase query labels are referenced in this section. An example rule condition for query `a` is `a > 3`.

  - Note: The query label must precede the operator. For example, `a > 3` is allowed; `3 < a` is not allowed.

- In the Add notify section, click Add Recipient to optionally configure notification targets.

- You can create notification rules to manage notifications automatically, avoiding manual edits for each detection rule.
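The sequencing idea behind a Then condition (query A occurs, and then query B occurs) can be sketched as an ordered scan over matched events. This is a simplified model and not the product's evaluator: the function name, the `(timestamp, label)` event shape, and the minimum-count parameters are all hypothetical.

```python
def then_condition_met(events: list[tuple[float, str]],
                       min_a: int = 1, min_b: int = 1) -> bool:
    """Check that at least `min_a` 'a' events are followed by `min_b` 'b' events.

    `events` holds (timestamp, query_label) pairs from one evaluation window.
    'b' events only count once the 'a' threshold has been reached.
    """
    seen_a = seen_b = 0
    for _, label in sorted(events):
        if seen_a < min_a:
            if label == "a":
                seen_a += 1
        elif label == "b":
            seen_b += 1
            if seen_b >= min_b:
                return True
    return False
```

In this model, an `a` event followed by a `b` event satisfies the condition, while a `b` event followed by an `a` event does not.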

Other parameters

1. Rule multi-triggering

Configure how often you want to keep updating the same signal if new values are detected within a specified time frame. For example, the same signal updates if any new value is detected within 1 hour, for a maximum duration of 24 hours.

- An `evaluation window` is specified to match when at least one of the cases matches true. This is a sliding window and evaluates cases in real time.

- After a signal is generated, the signal remains “open” if a case is matched at least once within the `keep alive` window. Each time a new event matches any of the cases, the last updated timestamp is updated for the signal.

- A signal closes after the time exceeds the `maximum signal duration`, regardless of the query being matched. This time is calculated from the first seen timestamp.

- Note: The `evaluation window` must be less than or equal to the `keep alive` and `maximum signal duration`.

2. Decrease severity for non-production environments

Toggle Decrease severity for non-production environments if you want to prioritize production environment signals over non-production signals.

- The severity of signals in non-production environments is decreased by one level from what is defined by the rule case.

- The severity decrement is applied to signals with an environment tag starting with `staging`, `test`, or `dev`.

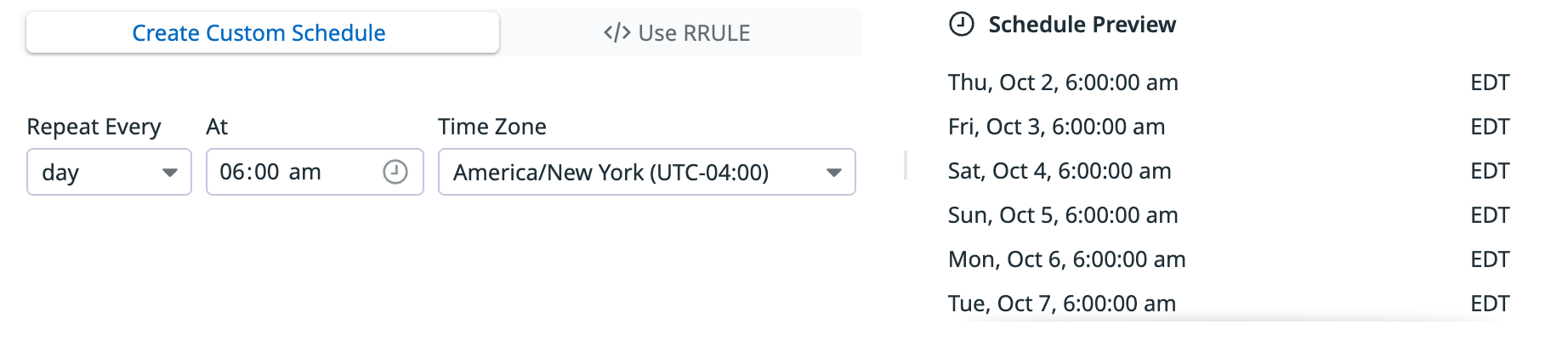

Add custom schedule

You can set a specific evaluation time and frequency for the rule by creating a custom schedule or by using a recurrence rule (RRULE).

Create custom schedule

- Select Create Custom Schedules.

- Set how often and at what time you want the rule to run.

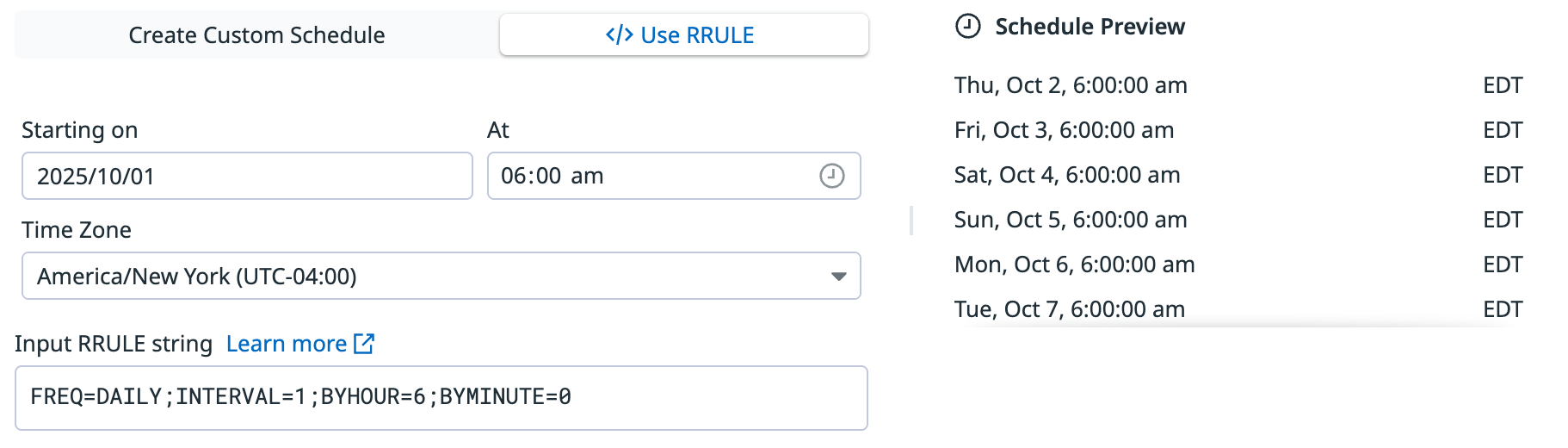

Use RRULE

Recurrence rule (RRULE) is a property name from the iCalendar RFC, which is the standard for defining recurring events. Use the official RRULE generator to generate recurring rules. Leverage RRULEs to cover more advanced scheduling use cases.

For example, if the RRULE is:

`FREQ=DAILY;INTERVAL=1;BYHOUR=6;BYMINUTE=0`

The example RRULE runs the scheduled rule once a day at 6:00 AM.

Notes:

- Attributes specifying the duration in RRULE are not supported (for example, `DTSTART`, `DTEND`, `DURATION`).

- Evaluation frequencies must be a day or longer. For shorter evaluation frequencies, use the default monitor schedules.

To write a custom RRULE for your detection rule:

- Select </> Use RRULE.

- Set the date and time for when you want the rule to start.

- Enter an RRULE string to set how often you want the rule to run.
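Before pasting an RRULE string into the rule, it can help to check it against the two notes above. The following sketch uses only the Python standard library. The function names are hypothetical, and it assumes the string is a bare semicolon-separated rule part such as the example above, without an `RRULE:` prefix or separate property lines.

```python
UNSUPPORTED = {"DTSTART", "DTEND", "DURATION"}
# iCalendar frequencies of one day or longer.
ALLOWED_FREQS = {"DAILY", "WEEKLY", "MONTHLY", "YEARLY"}


def parse_rrule(rrule: str) -> dict[str, str]:
    """Split 'KEY=VALUE;KEY=VALUE' into a dictionary with uppercase keys."""
    parts = {}
    for item in rrule.strip().split(";"):
        if not item:
            continue
        key, _, value = item.partition("=")
        parts[key.upper()] = value
    return parts


def check_rrule(rrule: str) -> list[str]:
    """Return a list of problems; an empty list means no issue was found."""
    parts = parse_rrule(rrule)
    problems = [f"{name} is not supported"
                for name in sorted(UNSUPPORTED.intersection(parts))]
    if parts.get("FREQ", "").upper() not in ALLOWED_FREQS:
        problems.append("FREQ must be DAILY or longer")
    return problems
```

The example rule `FREQ=DAILY;INTERVAL=1;BYHOUR=6;BYMINUTE=0` passes both checks, while a rule with `FREQ=HOURLY` is reported as too frequent.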

Describe your playbook

- Enter a Rule name. The name appears in the detection rules list view and the title of the security signal.

- In the Rule message section, use notification variables and Markdown to customize the notifications sent when a signal is generated.

- You can use template variables in the notification to inject dynamic context from triggered logs directly into a security signal and its associated notifications.

- See the Notification Variables documentation for more information and examples.

- Use the Tag resulting signals dropdown menu to add tags to your signals. For example, `security:attack` or `technique:T1110-brute-force`.

  - Note: the tag `security` is special. This tag is used to classify the security signal. The recommended options are: `attack`, `threat-intel`, `compliance`, `anomaly`, and `data-leak`.

Create a suppression

(Optional) Create a suppression or add the rule to an existing suppression to prevent a signal from being generated in specific cases. For example, if a user `john.doe` is triggering a signal, but their actions are benign and you do not want signals triggered from this user, add the following query into the Add a suppression query field: `@user.username:john.doe`.
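To illustrate what a suppression query such as the one above expresses, the sketch below matches a single `@attribute.path:value` pair against a nested dictionary. It is a toy matcher and not the product's query engine: the function name and the dictionary shape of the signal are hypothetical, and wildcards, boolean operators, and other query syntax are not handled.

```python
def matches_suppression(query: str, signal: dict) -> bool:
    """Return True when the signal attribute at the query path equals the value.

    Only the `@path.to.attribute:value` form is supported in this sketch.
    """
    path, _, expected = query.partition(":")
    if not path.startswith("@") or not expected:
        raise ValueError(f"unsupported query: {query!r}")
    current = signal
    for key in path[1:].split("."):
        if not isinstance(current, dict) or key not in current:
            return False
        current = current[key]
    return str(current) == expected
```

A signal whose `user.username` attribute is `john.doe` matches the example query and would be suppressed, while signals from other users are unaffected.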

Create new suppression

- Enter a name for the suppression rule.

- (Optional) Enter a description.

- Enter a suppression query.

- (Optional) Add a log exclusion query to exclude logs from being analyzed. These queries are based on log attributes.

- Note: The legacy suppression was based on log exclusion queries, but it is now included in the suppression rule’s Add a suppression query step.

Add to existing suppression

- Click Add to Existing Suppression.

- Select an existing suppression in the dropdown menu.