Install the DDOT Collector as a Gateway on Kubernetes

Support for installing the DDOT Collector as a gateway on Kubernetes is in Preview.

This guide assumes you are familiar with deploying the DDOT Collector as a DaemonSet. For more information, see Install the DDOT Collector as a DaemonSet on Kubernetes.

Overview

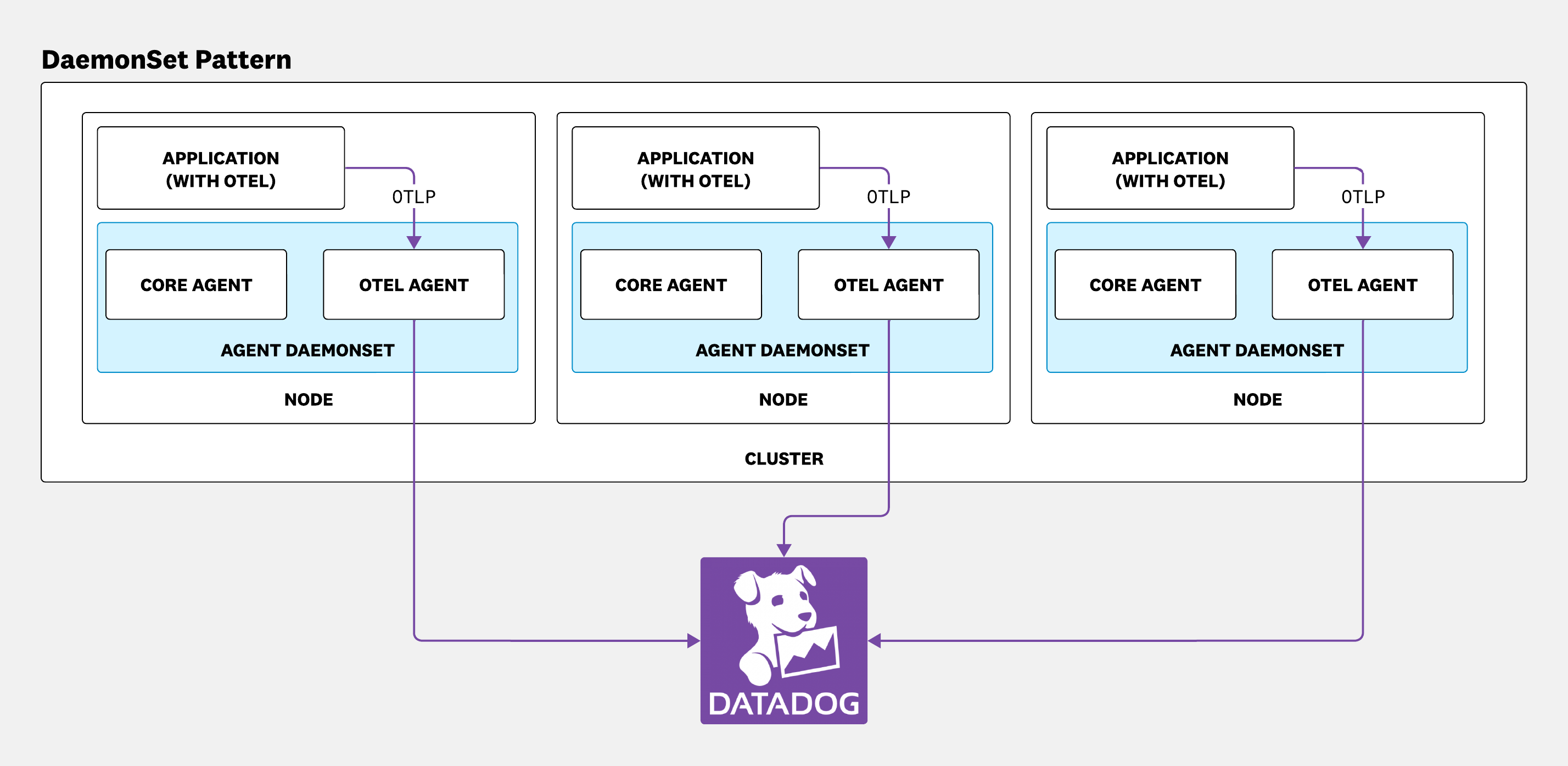

The OpenTelemetry Collector can be deployed in multiple ways. The DaemonSet pattern is a common deployment where a Collector instance runs on every Kubernetes node alongside the core Datadog Agent.

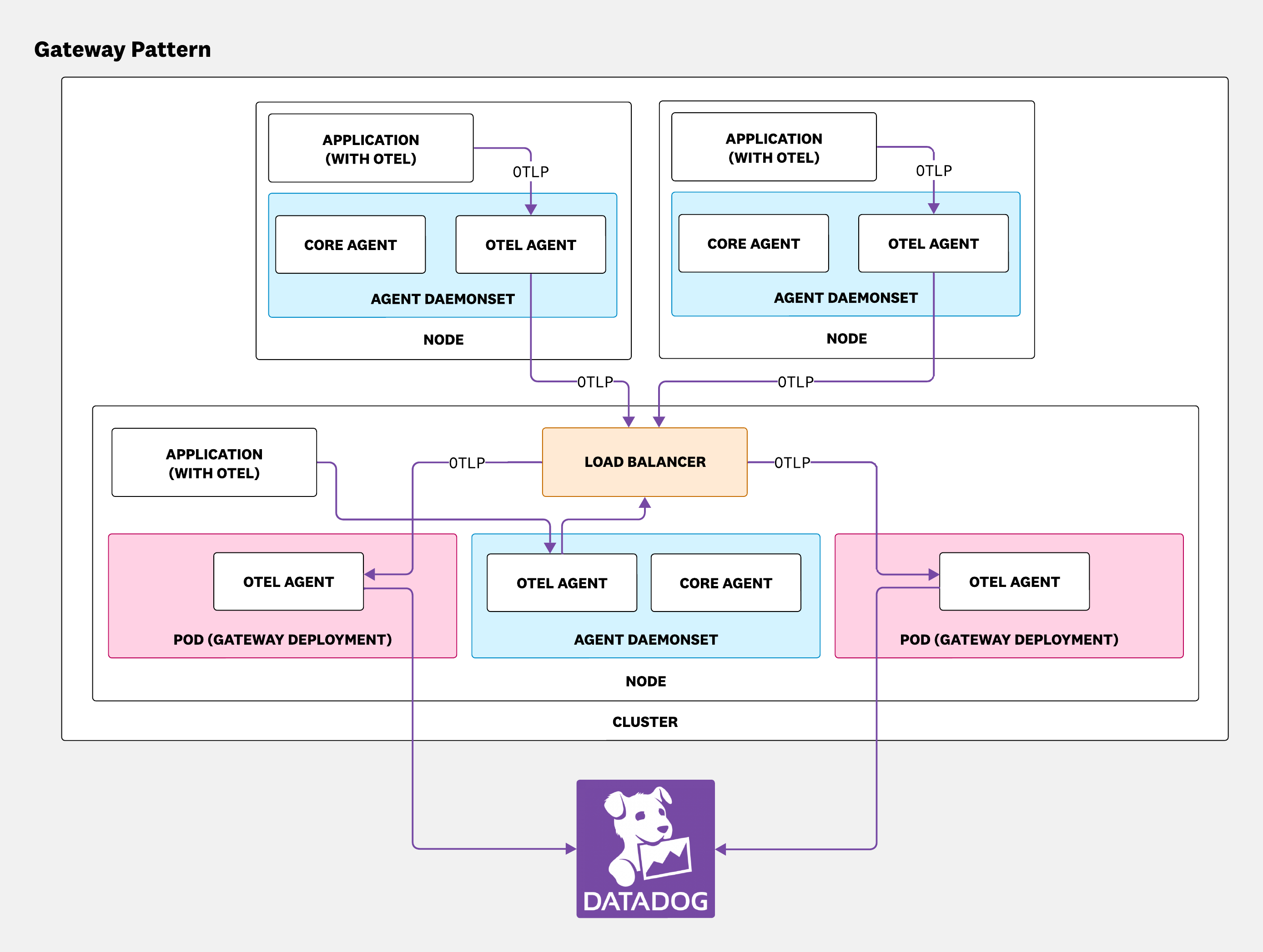

The gateway pattern provides an additional deployment option that uses a centralized, standalone Collector service. This gateway layer can perform actions such as tail-based sampling, aggregation, filtering, and routing before exporting the data to one or more backends such as Datadog. It acts as a central point for managing and enforcing observability policies.

When you enable the gateway:

- A Kubernetes Deployment (<RELEASE_NAME>-datadog-otel-agent-gateway-deployment) manages the standalone gateway Collector pods.
- A Kubernetes Service (<RELEASE_NAME>-datadog-otel-agent-gateway) exposes the gateway pods and provides load balancing.
- The existing DaemonSet Collector pods are configured by default to send their telemetry data to the gateway service instead of directly to Datadog.

Requirements

Before you begin, ensure you have the following:

- Datadog Account:
  - A Datadog account.
  - Your Datadog API key.

- Software:

- Network:

When using the Datadog SDK with OpenTelemetry API support, telemetry is routed to different components depending on the signal source. Ensure the following ports are accessible on your Datadog Agent or Collector:

| Signal source | Protocol | Port | Destination component |
| --- | --- | --- | --- |
| OTel Metrics and Logs API | OTLP (gRPC/HTTP) | 4317 / 4318 | Datadog Agent OTLP Receiver or DDOT Collector |
| Datadog Tracing | Datadog trace intake | 8126 (TCP) | Datadog Trace Agent |
| Runtime Metrics | DogStatsD | 8125 (UDP) | DogStatsD Server |
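To confirm from inside the cluster that the TCP ports in the table above accept connections, a small probe can help. This is a minimal sketch using only the Python standard library; the host you pass in (for example, a node IP) is your choice, and the DogStatsD port is deliberately left out because UDP is connectionless and a TCP connect() cannot verify it.

```python
import socket

# TCP ports from the table above. DogStatsD (8125/UDP) is omitted because
# UDP is connectionless and cannot be verified with a TCP connect().
TCP_PORTS = {
    "OTLP gRPC": 4317,
    "OTLP HTTP": 4318,
    "Datadog trace intake": 8126,
}


def tcp_port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and DNS resolution failures.
        return False


def check_agent_ports(host: str, ports: dict = TCP_PORTS) -> dict:
    """Probe each named TCP port on host and map its label to reachability."""
    return {label: tcp_port_open(host, port) for label, port in ports.items()}
```

For example, running check_agent_ports("10.0.0.12") from a debug pod, where 10.0.0.12 is a hypothetical node IP, reports which of the three ports accept connections. A False result only means the connection failed from where the script ran; network policies can make results differ between pods.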

Installation and configuration

This guide shows how to configure the DDOT Collector gateway using either the Datadog Operator or Helm chart.

This installation is required for both Datadog SDK + DDOT and OpenTelemetry SDK + DDOT configurations. While the Datadog SDK implements the OpenTelemetry API, it still requires the DDOT Collector to process and forward OTLP metrics and logs.

Choose one of the following installation methods:

- Datadog Operator: A Kubernetes-native approach that automatically reconciles and maintains your Datadog setup. It reports deployment status, health, and errors in its Custom Resource status, and it limits the risk of misconfiguration thanks to higher-level configuration options.

- Helm chart: A straightforward way to deploy Datadog Agent. It provides versioning, rollback, and templating capabilities, making deployments consistent and easier to replicate.

Install the Datadog Operator or Helm

If you haven’t already installed the Datadog Operator, you can install it in your cluster using the Datadog Operator Helm chart:

```shell
helm repo add datadog https://helm.datadoghq.com
helm repo update
helm install datadog-operator datadog/datadog-operator
```

For more information, see the Datadog Operator documentation.

If you haven’t already added the Datadog Helm repository, add it now:

```shell
helm repo add datadog https://helm.datadoghq.com
helm repo update
```

For more information about Helm configuration options, see the Datadog Helm chart README.

Deploying the gateway with a DaemonSet

To get started, enable both the gateway and the DaemonSet Collector in your DatadogAgent resource. This is the most common setup.

Create a file named datadog-agent.yaml:

```yaml
apiVersion: datadoghq.com/v2alpha1
kind: DatadogAgent
metadata:
  name: datadog
spec:
  global:
    credentials:
      apiSecret:
        secretName: datadog-secret
        keyName: api-key
  features:
    # Enable the Collector in the Agent DaemonSet
    otelCollector:
      enabled: true
    # Enable the standalone Gateway Deployment
    otelAgentGateway:
      enabled: true
  override:
    otelAgentGateway:
      # Number of replicas
      replicas: 3
      # Control placement of gateway pods
      nodeSelector:
        gateway: "true"
```

Apply the configuration:

```shell
kubectl apply -f datadog-agent.yaml
```

To get started, enable both the gateway and the DaemonSet Collector in your values.yaml file. This is the most common setup.

```yaml
# values.yaml
targetSystem: "linux"
datadog:
  apiKey: <DATADOG_API_KEY>
  appKey: <DATADOG_APP_KEY>
  # Enable the Collector in the Agent DaemonSet
  otelCollector:
    enabled: true
# Enable the standalone Gateway Deployment
otelAgentGateway:
  enabled: true
  replicas: 3
  nodeSelector:
    # Example selector to place gateway pods on specific nodes
    gateway: "true"
```

In this case, the DaemonSet Collector uses a default config that sends OTLP data to the gateway’s Kubernetes service:

```yaml
receivers:
  otlp:
    protocols:
      grpc:
        endpoint: 0.0.0.0:4317
      http:
        endpoint: 0.0.0.0:4318
exporters:
  debug:
    verbosity: detailed
  otlphttp:
    endpoint: http://<release>-datadog-otel-agent-gateway:4318
    tls:
      insecure: true
    sending_queue:
      batch:
        flush_timeout: 10s
processors:
  infraattributes:
    cardinality: 2
connectors:
  datadog/connector:
    traces:
      compute_top_level_by_span_kind: true
      peer_tags_aggregation: true
      compute_stats_by_span_kind: true
service:
  pipelines:
    traces:
      receivers: [otlp]
      processors: [infraattributes]
      exporters: [otlphttp, datadog/connector]
    metrics:
      receivers: [otlp, datadog/connector]
      processors: [infraattributes]
      exporters: [otlphttp]
    logs:
      receivers: [otlp]
      processors: [infraattributes]
      exporters: [otlphttp]
```
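A custom configuration like the one above fails at startup if a pipeline references a component that is not declared, or if a connector such as datadog/connector is not used as an exporter in one pipeline and as a receiver in another. The sketch below checks both rules on a configuration that has already been parsed into a Python dict (parsing the YAML, for example with a YAML library, is assumed and not shown). It is an illustrative pre-flight check, not a replacement for the Collector's own validation.

```python
from typing import Any


def validate_pipelines(cfg: dict[str, Any]) -> list[str]:
    """Return human-readable problems found in cfg["service"]["pipelines"].

    Rules checked (both enforced by the OpenTelemetry Collector):
    1. Every component a pipeline references must be declared.
    2. Each connector must be an exporter in one pipeline and a receiver in another.
    """
    connectors = set(cfg.get("connectors") or {})
    # Connectors may appear in both the receivers and exporters lists of pipelines.
    declared = {
        "receivers": set(cfg.get("receivers") or {}) | connectors,
        "processors": set(cfg.get("processors") or {}),
        "exporters": set(cfg.get("exporters") or {}) | connectors,
    }
    problems: list[str] = []
    as_exporter: set[str] = set()
    as_receiver: set[str] = set()

    for name, pipeline in cfg["service"]["pipelines"].items():
        for kind in ("receivers", "processors", "exporters"):
            for component in pipeline.get(kind) or []:
                if component not in declared[kind]:
                    # kind[:-1] turns "receivers" into "receiver", and so on.
                    problems.append(f"{name}: {kind[:-1]} '{component}' is not declared")
        as_exporter.update(c for c in pipeline.get("exporters") or [] if c in connectors)
        as_receiver.update(c for c in pipeline.get("receivers") or [] if c in connectors)

    for connector in sorted(connectors):
        if connector not in as_exporter or connector not in as_receiver:
            problems.append(f"connector '{connector}' must be both an exporter and a receiver")
    return problems


# The DaemonSet default configuration above, reduced to the keys this check reads.
DAEMONSET_DEFAULT = {
    "receivers": {"otlp": {}},
    "processors": {"infraattributes": {}},
    "exporters": {"debug": {}, "otlphttp": {}},
    "connectors": {"datadog/connector": {}},
    "service": {
        "pipelines": {
            "traces": {"receivers": ["otlp"], "processors": ["infraattributes"],
                       "exporters": ["otlphttp", "datadog/connector"]},
            "metrics": {"receivers": ["otlp", "datadog/connector"],
                        "processors": ["infraattributes"], "exporters": ["otlphttp"]},
            "logs": {"receivers": ["otlp"], "processors": ["infraattributes"],
                     "exporters": ["otlphttp"]},
        }
    },
}
```

Running validate_pipelines(DAEMONSET_DEFAULT) returns an empty list; removing datadog/connector from the metrics pipeline's receivers would produce a connector error.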

The gateway Collector uses a default config that listens on the service ports and sends data to Datadog:

```yaml
receivers:
  otlp:
    protocols:
      grpc:
        endpoint: 0.0.0.0:4317
      http:
        endpoint: 0.0.0.0:4318
exporters:
  debug:
    verbosity: detailed
  datadog:
    api:
      key: ${env:DD_API_KEY}
    sending_queue:
      batch:
        flush_timeout: 10s
processors:
extensions:
  datadog:
    api:
      key: ${env:DD_API_KEY}
    deployment_type: gateway
service:
  # Extensions only run when they are listed here
  extensions: [datadog]
  pipelines:
    traces:
      receivers: [otlp]
      exporters: [datadog]
    metrics:
      receivers: [otlp]
      exporters: [datadog]
    logs:
      receivers: [otlp]
      exporters: [datadog]
```

For Helm users: Use otelAgentGateway.affinity or otelAgentGateway.nodeSelector to control pod placement, and adjust otelAgentGateway.replicas to scale the gateway.

For Operator users: Use override.otelAgentGateway.affinity, override.otelAgentGateway.nodeSelector, and override.otelAgentGateway.replicas for these settings.

Deploying a standalone gateway

If you have an existing DaemonSet deployment, you can deploy the gateway independently by disabling other components:

```yaml
apiVersion: datadoghq.com/v2alpha1
kind: DatadogAgent
metadata:
  name: datadog-gateway
spec:
  global:
    credentials:
      apiSecret:
        secretName: datadog-secret
        keyName: api-key
  features:
    otelAgentGateway:
      enabled: true
  override:
    otelAgentGateway:
      # Number of replicas
      replicas: 3
      # Control placement of gateway pods
      nodeSelector:
        gateway: "true"
    # Disable the Agent DaemonSet
    nodeAgent:
      disabled: true
    # Disable the Cluster Agent
    clusterAgent:
      disabled: true
```

After deploying the gateway, you must update the configuration of your existing DaemonSet Collectors to send data to the new gateway service endpoint (for example, http://datadog-gateway-otel-agent-gateway:4318).

If you have an existing DaemonSet deployment, you can deploy the gateway independently.

```yaml
# values.yaml
targetSystem: "linux"
fullnameOverride: "gw-only"
agents:
  enabled: false
clusterAgent:
  enabled: false
datadog:
  apiKey: <DATADOG_API_KEY>
  appKey: <DATADOG_APP_KEY>
otelAgentGateway:
  enabled: true
  replicas: 3
  nodeSelector:
    gateway: "true"
```

After deploying the gateway, you must update the configuration of your existing DaemonSet Collectors to send data to the new gateway service endpoint (for example, http://gw-only-otel-agent-gateway:4318).

Customizing Collector configurations

You can customize the gateway Collector configuration using ConfigMaps. Create a ConfigMap with your custom configuration:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: otel-gateway-config
data:
  otel-gateway-config.yaml: |
    receivers:
      otlp:
        protocols:
          grpc:
            endpoint: "0.0.0.0:4317"
          http:
            endpoint: "0.0.0.0:4318"
    exporters:
      datadog:
        api:
          key: ${env:DD_API_KEY}
        sending_queue:
          batch:
            flush_timeout: 10s
    service:
      pipelines:
        traces:
          receivers: [otlp]
          exporters: [datadog]
        metrics:
          receivers: [otlp]
          exporters: [datadog]
        logs:
          receivers: [otlp]
          exporters: [datadog]
```

Then reference it in your DatadogAgent resource:

```yaml
apiVersion: datadoghq.com/v2alpha1
kind: DatadogAgent
metadata:
  name: datadog
spec:
  global:
    credentials:
      apiSecret:
        secretName: datadog-secret
        keyName: api-key
  features:
    otelAgentGateway:
      enabled: true
      # Reference the custom ConfigMap
      config:
        configMap:
          name: otel-gateway-config
  override:
    otelAgentGateway:
      replicas: 3
```

For multi-item ConfigMaps or inline configuration, see the DatadogAgent examples.

You can override the default configurations for both the DaemonSet and gateway Collectors using the datadog.otelCollector.config and otelAgentGateway.config values, respectively.

```yaml
# values.yaml
targetSystem: "linux"
fullnameOverride: "my-gw"
datadog:
  apiKey: <DATADOG_API_KEY>
  appKey: <DATADOG_APP_KEY>
  # Enable and configure the DaemonSet Collector
  otelCollector:
    enabled: true
    config: |
      receivers:
        otlp:
          protocols:
            grpc:
              endpoint: "localhost:4317"
      exporters:
        otlp:
          endpoint: http://my-gw-otel-agent-gateway:4317
          tls:
            insecure: true
      service:
        pipelines:
          traces:
            receivers: [otlp]
            exporters: [otlp]
          metrics:
            receivers: [otlp]
            exporters: [otlp]
          logs:
            receivers: [otlp]
            exporters: [otlp]
# Enable and configure the gateway Collector
otelAgentGateway:
  enabled: true
  replicas: 3
  nodeSelector:
    gateway: "true"
  ports:
    - containerPort: 4317
      name: "otel-grpc"
  config: |
    receivers:
      otlp:
        protocols:
          grpc:
            endpoint: "0.0.0.0:4317"
    exporters:
      datadog:
        api:
          key: ${env:DD_API_KEY}
        sending_queue:
          batch:
            flush_timeout: 10s
    service:
      pipelines:
        traces:
          receivers: [otlp]
          exporters: [datadog]
        metrics:
          receivers: [otlp]
          exporters: [datadog]
        logs:
          receivers: [otlp]
          exporters: [datadog]
```

For the infraattributes processor to add Kubernetes tags, your telemetry must include the container.id resource attribute. This is often, but not always, added by OTel SDK auto-instrumentation.

If your tags are missing, see the troubleshooting guide for details on how to add this attribute.

If you set fullnameOverride, the gateway's Kubernetes service name becomes <fullnameOverride>-otel-agent-gateway. The ports listed in otelAgentGateway.ports are exposed on this service. Ensure these ports match the OTLP receiver configuration in the gateway and the OTLP exporter configuration in the DaemonSet.

The example configurations use insecure TLS for simplicity. Follow the OTel configtls instructions if you want to enable TLS.
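Because the DaemonSet exporter endpoint must match the gateway Service name exactly, it can help to derive that name the same way every time. This sketch encodes the naming patterns that appear in this guide (Helm release name, Helm fullnameOverride, and DatadogAgent metadata.name); it is an assumption that your chart or Operator version follows these patterns, so compare the result with kubectl get svc before relying on it. The namespace-qualified form uses standard Kubernetes Service DNS and is only needed when the DaemonSet and gateway run in different namespaces.

```python
from typing import Optional

SUFFIX = "otel-agent-gateway"


def gateway_service_name(name: str, installer: str, fullname_override: Optional[str] = None) -> str:
    """Derive the gateway Service name from the patterns used in this guide.

    installer="helm":     <RELEASE_NAME>-datadog-otel-agent-gateway, or
                          <fullnameOverride>-otel-agent-gateway when the override is set.
    installer="operator": <DatadogAgent metadata.name>-otel-agent-gateway.
    """
    if installer == "helm":
        prefix = fullname_override or f"{name}-datadog"
    elif installer == "operator":
        prefix = name
    else:
        raise ValueError(f"unknown installer: {installer!r}")
    return f"{prefix}-{SUFFIX}"


def gateway_endpoint(service: str, port: int = 4317, namespace: Optional[str] = None) -> str:
    """Build the URL for the DaemonSet OTLP exporter (4317 for gRPC, 4318 for HTTP)."""
    # Cross-namespace traffic needs the fully qualified Service DNS name.
    host = f"{service}.{namespace}.svc.cluster.local" if namespace else service
    return f"http://{host}:{port}"
```

For the standalone Helm example, gateway_endpoint(gateway_service_name("any-release", "helm", "gw-only"), 4318) yields the http://gw-only-otel-agent-gateway:4318 endpoint mentioned earlier.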

Advanced configuration options

The Datadog Operator provides additional configuration options for the OTel Agent Gateway. Set them under override.otelAgentGateway, not features.otelAgentGateway. The only exception is featureGates, which goes under features.otelAgentGateway:

```yaml
apiVersion: datadoghq.com/v2alpha1
kind: DatadogAgent
metadata:
  name: datadog
spec:
  global:
    credentials:
      apiSecret:
        secretName: datadog-secret
        keyName: api-key
  features:
    otelAgentGateway:
      enabled: true
      # Feature gates for OTel collector (feature-specific configuration)
      featureGates: "telemetry.UseLocalHostAsDefaultMetricsAddress"
  override:
    otelAgentGateway:
      # Number of replicas
      replicas: 3
      # Node selector for pod placement
      nodeSelector:
        kubernetes.io/os: linux
        gateway: "true"
      # Affinity configuration
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
            - weight: 100
              podAffinityTerm:
                labelSelector:
                  matchExpressions:
                    - key: app
                      operator: In
                      values:
                        - datadog-otel-agent-gateway
                topologyKey: kubernetes.io/hostname
      # Tolerations for tainted nodes
      tolerations:
        - key: "dedicated"
          operator: "Equal"
          value: "otel-gateway"
          effect: "NoSchedule"
      # Priority class for scheduling
      priorityClassName: high-priority
      # Environment variables
      env:
        - name: OTEL_LOG_LEVEL
          value: "info"
      # Environment variables from ConfigMaps or Secrets
      envFrom:
        - configMapRef:
            name: otel-gateway-config
      # Custom image (optional)
      image:
        name: ddot-collector
        tag: "7.74.0"
        pullPolicy: IfNotPresent
      # Pod-level security context
      securityContext:
        runAsUser: 1000
        runAsGroup: 1000
        fsGroup: 1000
      # Configure resources
      containers:
        otel-agent:
          resources:
            requests:
              cpu: 200m
              memory: 512Mi
            limits:
              cpu: 500m
              memory: 1Gi
      # Additional labels and annotations
      labels:
        team: observability
      annotations:
        prometheus.io/scrape: "true"
```

For a complete reference of all available options, see the DatadogAgent v2alpha1 configuration documentation.

For Helm-based deployments, many of these advanced configuration options can be set directly in the values.yaml file under the otelAgentGateway section. For a complete reference, see the Datadog Helm chart README.

Advanced use cases

Tail sampling with the load balancing exporter

A primary use case for the gateway is tail-based sampling. To ensure that all spans for a given trace are processed by the same gateway pod, use the load balancing exporter in your DaemonSet Collectors. This exporter consistently routes spans based on a key, such as traceID.

The DaemonSet Collector is configured with the loadbalancing exporter, which uses the Kubernetes service resolver to discover and route data to the gateway pods. The gateway Collector uses the tail_sampling processor to sample traces based on defined policies before exporting them to Datadog.

Note: RBAC permissions are required for the k8s resolver in the loadbalancing exporter.
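The loadbalancing exporter keeps all spans of a trace together by hashing the routing key onto a ring of resolved gateway endpoints. The class below is a simplified, hypothetical consistent-hash ring (SHA-256 and 100 virtual nodes per endpoint are arbitrary choices, not the exporter's internals) that shows the two properties that matter here: the same traceID always maps to the same pod, and removing a pod only remaps the trace IDs that pod owned.

```python
import bisect
import hashlib


class HashRing:
    """Simplified consistent-hash ring mapping trace IDs to gateway endpoints."""

    def __init__(self, endpoints: list[str], vnodes: int = 100) -> None:
        if not endpoints:
            raise ValueError("at least one endpoint is required")
        # Each endpoint owns `vnodes` points so load spreads evenly around the ring.
        points = sorted(
            (self._hash(f"{endpoint}#{i}"), endpoint)
            for endpoint in endpoints
            for i in range(vnodes)
        )
        self._hashes = [h for h, _ in points]
        self._owners = [owner for _, owner in points]

    @staticmethod
    def _hash(value: str) -> int:
        # First 8 bytes of SHA-256, read as an unsigned position on the ring.
        return int.from_bytes(hashlib.sha256(value.encode()).digest()[:8], "big")

    def route(self, trace_id: str) -> str:
        """Return the endpoint owning the first ring point at or after the key's hash."""
        idx = bisect.bisect_left(self._hashes, self._hash(trace_id)) % len(self._hashes)
        return self._owners[idx]
```

The second property also explains why a scale event can split traces that are in flight at that moment: trace IDs whose owner changes send their later spans to a different pod than their earlier ones.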

Create a ConfigMap for the DaemonSet Collector configuration with the load balancing exporter:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: otel-daemonset-config
data:
  otel-config.yaml: |
    receivers:
      otlp:
        protocols:
          grpc:
            endpoint: "localhost:4317"
    exporters:
      loadbalancing:
        routing_key: "traceID"
        protocol:
          otlp:
            tls:
              insecure: true
        resolver:
          k8s:
            service: datadog-otel-agent-gateway
            ports:
              - 4317
    service:
      pipelines:
        traces:
          receivers: [otlp]
          exporters: [loadbalancing]
```

Create a ConfigMap for the gateway Collector configuration with tail sampling:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: otel-gateway-tailsampling-config
data:
  otel-gateway-config.yaml: |
    receivers:
      otlp:
        protocols:
          grpc:
            endpoint: "0.0.0.0:4317"
    processors:
      tail_sampling:
        decision_wait: 10s
        policies:
          # Add your sampling policies here
          - name: sample-errors
            type: status_code
            status_code:
              status_codes: [ERROR]
          - name: sample-slow-traces
            type: latency
            latency:
              threshold_ms: 1000
    connectors:
      datadog/connector:
    exporters:
      datadog:
        api:
          key: ${env:DD_API_KEY}
    service:
      pipelines:
        traces/sample:
          receivers: [otlp]
          processors: [tail_sampling]
          exporters: [datadog]
        traces:
          receivers: [otlp]
          exporters: [datadog/connector]
        metrics:
          receivers: [datadog/connector]
          exporters: [datadog]
```
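Conceptually, the gateway buffers the spans of each trace for decision_wait and then asks every policy whether to keep the trace; if any policy votes to sample it, the trace is kept. The sketch below mirrors the two example policies (sample-errors and sample-slow-traces) in plain Python. The Span type and its fields are hypothetical, and treating a duration equal to threshold_ms as slow is an assumption about the boundary.

```python
from dataclasses import dataclass


@dataclass
class Span:
    start_ms: int
    end_ms: int
    status: str = "UNSET"  # hypothetical values: "UNSET", "OK", or "ERROR"


def trace_duration_ms(spans: list[Span]) -> int:
    """Duration from the earliest span start to the latest span end."""
    return max(s.end_ms for s in spans) - min(s.start_ms for s in spans)


def sample_errors(spans: list[Span]) -> bool:
    # Mirrors "sample-errors": keep the trace if any span has status ERROR.
    return any(s.status == "ERROR" for s in spans)


def sample_slow(spans: list[Span], threshold_ms: int = 1000) -> bool:
    # Mirrors "sample-slow-traces": keep the trace if it lasts threshold_ms or longer.
    return trace_duration_ms(spans) >= threshold_ms


def keep_trace(spans: list[Span]) -> bool:
    """Keep the trace if any policy votes to sample it."""
    return sample_errors(spans) or sample_slow(spans)
```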

Apply the DatadogAgent configuration:

```yaml
apiVersion: datadoghq.com/v2alpha1
kind: DatadogAgent
metadata:
  name: datadog
spec:
  global:
    credentials:
      apiSecret:
        secretName: datadog-secret
        keyName: api-key
  features:
    otelCollector:
      enabled: true
      # Reference the custom DaemonSet config
      config:
        configMap:
          name: otel-daemonset-config
      # RBAC permissions for the k8s resolver
      rbac:
        create: true
    otelAgentGateway:
      enabled: true
      # Reference the custom gateway config
      config:
        configMap:
          name: otel-gateway-tailsampling-config
  override:
    otelAgentGateway:
      replicas: 3
```

Create a ClusterRole for the DaemonSet to access endpoints:

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: otel-collector-k8s-resolver
rules:
  - apiGroups: [""]
    resources: ["endpoints"] # for v0.139.0 and before
    verbs: ["get", "watch", "list"]
  - apiGroups: ["discovery.k8s.io"]
    resources: ["endpointslices"] # for v0.140.0 and after
    verbs: ["get", "watch", "list"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: otel-collector-k8s-resolver
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: otel-collector-k8s-resolver
subjects:
  - kind: ServiceAccount
    name: datadog-agent
    namespace: default
```
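The datadog/connector gets its own unsampled pipeline because request counts and error rates computed from sampled traces are skewed by the sampling policy itself. This toy simulation, with made-up traffic numbers, applies the example policies (keep errors and traces of 1000 ms or more) and compares the statistics before and after sampling. TraceSummary and both functions are hypothetical helpers for illustration only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TraceSummary:
    duration_ms: int
    error: bool


def apm_stats(traces: list[TraceSummary]) -> tuple[int, float]:
    """Return (hits, error_rate), the kind of figures APM stats are built from."""
    hits = len(traces)
    errors = sum(1 for t in traces if t.error)
    return hits, (errors / hits if hits else 0.0)


def tail_sample(traces: list[TraceSummary], threshold_ms: int = 1000) -> list[TraceSummary]:
    """Keep error traces and slow traces, like the example sampling policies."""
    return [t for t in traces if t.error or t.duration_ms >= threshold_ms]


# Made-up traffic: 5 fast errors, 10 slow successes, 85 fast successes.
traffic = (
    [TraceSummary(50, True)] * 5
    + [TraceSummary(2000, False)] * 10
    + [TraceSummary(50, False)] * 85
)

before = apm_stats(traffic)              # what datadog/connector sees: every trace
after = apm_stats(tail_sample(traffic))  # what sampled traces alone would suggest
```

With this traffic, stats computed before sampling report 100 hits and a 5% error rate, while the sampled subset reports 15 hits and an error rate of about 33%.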

To ensure APM Stats are calculated on 100% of your traces before sampling, the datadog/connector runs in a separate pipeline without the tail_sampling processor. The Connector can run in either the DaemonSet or the gateway layer.

In the configuration below:

- The DaemonSet Collector (datadog.otelCollector) is configured with the loadbalancing exporter, which uses the Kubernetes service resolver to discover and route data to the gateway pods.
- The gateway Collector (otelAgentGateway) uses the tail_sampling processor to sample traces based on defined policies before exporting them to Datadog.

```yaml
# values.yaml
targetSystem: "linux"
fullnameOverride: "my-gw"
datadog:
  apiKey: <DATADOG_API_KEY>
  appKey: <DATADOG_APP_KEY>
  otelCollector:
    enabled: true
    # RBAC permissions are required for the k8s resolver in the loadbalancing exporter
    rbac:
      create: true
      rules:
        - apiGroups: [""]
          resources: ["endpoints"] # for v0.139.0 and before
          verbs: ["get", "watch", "list"]
        - apiGroups: ["discovery.k8s.io"]
          resources: ["endpointslices"] # for v0.140.0 and after
          verbs: ["get", "watch", "list"]
    config: |
      receivers:
        otlp:
          protocols:
            grpc:
              endpoint: "localhost:4317"
      exporters:
        loadbalancing:
          routing_key: "traceID"
          protocol:
            otlp:
              tls:
                insecure: true
          resolver:
            k8s:
              service: my-gw-otel-agent-gateway
              ports:
                - 4317
      service:
        pipelines:
          traces:
            receivers: [otlp]
            exporters: [loadbalancing]
otelAgentGateway:
  enabled: true
  replicas: 3
  ports:
    - containerPort: 4317
      name: "otel-grpc"
  config: |
    receivers:
      otlp:
        protocols:
          grpc:
            endpoint: "0.0.0.0:4317"
    processors:
      tail_sampling:
        decision_wait: 10s
        policies: <Add your sampling policies here>
    connectors:
      datadog/connector:
    exporters:
      datadog:
        api:
          key: ${env:DD_API_KEY}
    service:
      pipelines:
        traces/sample:
          receivers: [otlp]
          processors: [tail_sampling]
          exporters: [datadog]
        traces:
          receivers: [otlp]
          exporters: [datadog/connector]
        metrics:
          receivers: [datadog/connector]
          exporters: [datadog]
```

To ensure APM Stats are calculated on 100% of your traces before sampling, the datadog/connector runs in a separate pipeline without the tail_sampling processor. The Connector can run in either the DaemonSet or the gateway layer.

Using a custom Collector image

To use a custom-built Collector image for your gateway, specify the image repository and tag. If you need instructions on how to build the custom images, see Use Custom OpenTelemetry Components.

Note: The Datadog Operator supports the following image name formats:

- name: The image name (for example, ddot-collector)
- name:tag: Image name with tag (for example, ddot-collector:7.74.0)
- registry/name:tag: Full image reference (for example, gcr.io/datadoghq/ddot-collector:7.74.0)

The registry/name format (without a tag in the name field) is not supported when using a separate tag field. Either include the full image reference with tag in the name field, or use the image name with a separate tag field.

```yaml
apiVersion: datadoghq.com/v2alpha1
kind: DatadogAgent
metadata:
  name: datadog
spec:
  global:
    credentials:
      apiSecret:
        secretName: datadog-secret
        keyName: api-key
  features:
    otelAgentGateway:
      enabled: true
  override:
    otelAgentGateway:
      image:
        name: <YOUR REPO>:<IMAGE TAG>
```
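The image name rules in the note above can be expressed as a small pre-flight check. This sketch encodes only the documented cases: it returns True for name, name:tag, and registry/name:tag, and for a bare name combined with a separate tag field. Anything else, including the unsupported registry/name plus separate tag combination, returns False, which here means "not a documented format" rather than a guaranteed Operator error. Digest references (@sha256:...) are not handled.

```python
from typing import Optional, Tuple


def split_image(name: str) -> Tuple[Optional[str], str, Optional[str]]:
    """Split an image reference into (registry_path, image, tag)."""
    registry, _, rest = name.rpartition("/")
    image, sep, tag = rest.partition(":")
    return (registry or None, image, tag if sep else None)


def matches_documented_format(name: str, tag: Optional[str] = None) -> bool:
    """Check name (and an optional separate tag field) against the documented formats."""
    registry, _, inline_tag = split_image(name)
    if tag is None:
        # Documented: name, name:tag, registry/name:tag.
        return registry is None or inline_tag is not None
    # With a separate tag field, only a bare image name is documented as working;
    # registry/name plus a separate tag is explicitly unsupported.
    return registry is None and inline_tag is None
```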

```yaml
# values.yaml
targetSystem: "linux"
agents:
  enabled: false
clusterAgent:
  enabled: false
otelAgentGateway:
  enabled: true
  image:
    repository: <YOUR REPO>
    tag: <IMAGE TAG>
    doNotCheckTag: true
  ports:
    - containerPort: "4317"
      name: "otel-grpc"
  config: |
    <YOUR CONFIG>
```

Enable Autoscaling with Horizontal Pod Autoscaler (HPA)

The DDOT Collector gateway supports autoscaling with the Kubernetes Horizontal Pod Autoscaler (HPA) feature.

Note: The Datadog Operator does not directly manage HPA resources. You need to create the HPA resource separately and configure it to target the OTel Agent Gateway deployment.

Create an HPA resource:

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: datadog-otel-agent-gateway-hpa
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: datadog-otel-agent-gateway
  minReplicas: 2
  maxReplicas: 10
  metrics:
    # Aim for high CPU utilization for higher throughput
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 80
  behavior:
    scaleUp:
      stabilizationWindowSeconds: 30
    scaleDown:
      stabilizationWindowSeconds: 60
```

Apply the DatadogAgent configuration with resource requests/limits (required for HPA):

```yaml
apiVersion: datadoghq.com/v2alpha1
kind: DatadogAgent
metadata:
  name: datadog
spec:
  global:
    credentials:
      apiSecret:
        secretName: datadog-secret
        keyName: api-key
  features:
    otelAgentGateway:
      enabled: true
  override:
    otelAgentGateway:
      replicas: 4 # Initial replicas, HPA will override based on metrics
      containers:
        otel-agent:
          resources:
            requests:
              cpu: 200m
              memory: 512Mi
            limits:
              cpu: 500m
              memory: 1Gi
```

With Helm, enable HPA by configuring otelAgentGateway.autoscaling in your values.yaml file:

```yaml
# values.yaml
targetSystem: "linux"
agents:
  enabled: false
clusterAgent:
  enabled: false
otelAgentGateway:
  enabled: true
  ports:
    - containerPort: "4317"
      name: "otel-grpc"
  config: |
    <YOUR CONFIG>
  replicas: 4 # 4 replicas to begin with and HPA may override it based on the metrics
  autoscaling:
    enabled: true
    minReplicas: 2
    maxReplicas: 10
    metrics:
      # Aim for high CPU utilization for higher throughput
      - type: Resource
        resource:
          name: cpu
          target:
            type: Utilization
            averageUtilization: 80
    behavior:
      scaleUp:
        stabilizationWindowSeconds: 30
      scaleDown:
        stabilizationWindowSeconds: 60
```

You can use resource metrics (CPU or memory), custom metrics (Kubernetes Pod or Object), or external metrics as autoscaling inputs. For resource metrics, ensure that the Kubernetes metrics server is running in your cluster. For custom or external metrics, consider configuring the Datadog Cluster Agent metrics provider.
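For a CPU Utilization target, the HPA compares average CPU usage with the pods' CPU requests, which is why the gateway container needs resource requests. The function below sketches the core Kubernetes scaling rule, desired = ceil(current * currentUtilization / targetUtilization), with the default 10% tolerance and clamping to minReplicas and maxReplicas. It ignores the behavior stabilization windows, pod readiness, and missing metrics, all of which the real controller accounts for; the default arguments match the manifests above.

```python
import math


def desired_replicas(
    current_replicas: int,
    pod_cpu_usage_m: list[int],
    cpu_request_m: int,
    target_utilization: int = 80,
    min_replicas: int = 2,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Sketch of the HPA rule for a CPU Utilization target (no stabilization windows)."""
    if not pod_cpu_usage_m or cpu_request_m <= 0:
        raise ValueError("need per-pod CPU usage and a positive CPU request")
    # Utilization is average usage as a percentage of the CPU request, not the limit.
    avg_usage_m = sum(pod_cpu_usage_m) / len(pod_cpu_usage_m)
    utilization = 100 * avg_usage_m / cpu_request_m
    ratio = utilization / target_utilization
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: no change
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the minReplicas/maxReplicas bounds from the HPA spec.
    return max(min_replicas, min(max_replicas, desired))
```

For example, 4 replicas each using 180m of CPU against a 200m request run at 90% utilization, so desired_replicas(4, [180] * 4, 200) returns ceil(4 * 90 / 80) = 5.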

Deploying a multi-layer gateway

For advanced scenarios, you can deploy multiple gateway layers to create a processing chain.

Deploy each layer as a separate DatadogAgent resource, starting from the final layer and working backward.

- Deploy Layer 1 (Final Layer): This layer receives from Layer 2 and exports to Datadog.

```yaml
apiVersion: datadoghq.com/v2alpha1
kind: DatadogAgent
metadata:
  name: datadog-gw-layer-1
spec:
  global:
    credentials:
      apiSecret:
        secretName: datadog-secret
        keyName: api-key
  features:
    otelAgentGateway:
      enabled: true
      config:
        configMap:
          name: gw-layer-1-config
  override:
    otelAgentGateway:
      replicas: 3
      nodeSelector:
        gateway: "gw-node-1"
    nodeAgent:
      disabled: true
    clusterAgent:
      disabled: true
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: gw-layer-1-config
data:
  otel-gateway-config.yaml: |
    receivers:
      otlp:
        protocols:
          grpc:
            endpoint: "0.0.0.0:4317"
    exporters:
      datadog:
        api:
          key: ${env:DD_API_KEY}
    service:
      pipelines:
        traces:
          receivers: [otlp]
          exporters: [datadog]
        metrics:
          receivers: [otlp]
          exporters: [datadog]
        logs:
          receivers: [otlp]
          exporters: [datadog]
```

- Deploy Layer 2 (Intermediate Layer): This layer receives from the DaemonSet and exports to Layer 1.

```yaml
apiVersion: datadoghq.com/v2alpha1
kind: DatadogAgent
metadata:
  name: datadog-gw-layer-2
spec:
  global:
    credentials:
      apiSecret:
        secretName: datadog-secret
        keyName: api-key
  features:
    otelAgentGateway:
      enabled: true
      config:
        configMap:
          name: gw-layer-2-config
  override:
    otelAgentGateway:
      replicas: 3
      nodeSelector:
        gateway: "gw-node-2"
    nodeAgent:
      disabled: true
    clusterAgent:
      disabled: true
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: gw-layer-2-config
data:
  otel-gateway-config.yaml: |
    receivers:
      otlp:
        protocols:
          grpc:
            endpoint: "0.0.0.0:4317"
    exporters:
      otlp:
        endpoint: http://datadog-gw-layer-1-otel-agent-gateway:4317
        tls:
          insecure: true
    service:
      pipelines:
        traces:
          receivers: [otlp]
          exporters: [otlp]
        metrics:
          receivers: [otlp]
          exporters: [otlp]
        logs:
          receivers: [otlp]
          exporters: [otlp]
```

- Deploy DaemonSet: Configure the DaemonSet to export to Layer 2.

```yaml
apiVersion: datadoghq.com/v2alpha1
kind: DatadogAgent
metadata:
  name: datadog
spec:
  global:
    credentials:
      apiSecret:
        secretName: datadog-secret
        keyName: api-key
  features:
    otelCollector:
      enabled: true
      config:
        configMap:
          name: daemonset-layer2-config
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: daemonset-layer2-config
data:
  otel-config.yaml: |
    receivers:
      otlp:
        protocols:
          grpc:
            endpoint: "localhost:4317"
    exporters:
      otlp:
        endpoint: http://datadog-gw-layer-2-otel-agent-gateway:4317
        tls:
          insecure: true
    service:
      pipelines:
        traces:
          receivers: [otlp]
          exporters: [otlp]
        metrics:
          receivers: [otlp]
          exporters: [otlp]
        logs:
          receivers: [otlp]
          exporters: [otlp]
```

Deploy each layer as a separate Helm release, starting from the final layer and working backward.

- Deploy Layer 1 (Final Layer): This layer receives from Layer 2 and exports to Datadog.

```yaml
# layer-1-values.yaml
targetSystem: "linux"
fullnameOverride: "gw-layer-1"
agents:
  enabled: false
clusterAgent:
  enabled: false
otelAgentGateway:
  enabled: true
  replicas: 3
  nodeSelector:
    gateway: "gw-node-1"
  ports:
    - containerPort: "4317"
      hostPort: "4317"
      name: "otel-grpc"
  config: |
    receivers:
      otlp:
        protocols:
          grpc:
            endpoint: "0.0.0.0:4317"
    exporters:
      datadog:
        api:
          key: <API Key>
    service:
      pipelines:
        traces:
          receivers: [otlp]
          exporters: [datadog]
        metrics:
          receivers: [otlp]
          exporters: [datadog]
        logs:
          receivers: [otlp]
          exporters: [datadog]
```

- Deploy Layer 2 (Intermediate Layer): This layer receives from the DaemonSet and exports to Layer 1.

```yaml
# layer-2-values.yaml
targetSystem: "linux"
fullnameOverride: "gw-layer-2"
agents:
  enabled: false
clusterAgent:
  enabled: false
otelAgentGateway:
  enabled: true
  replicas: 3
  nodeSelector:
    gateway: "gw-node-2"
  ports:
    - containerPort: "4317"
      hostPort: "4317"
      name: "otel-grpc"
  config: |
    receivers:
      otlp:
        protocols:
          grpc:
            endpoint: "0.0.0.0:4317"
    exporters:
      otlp:
        endpoint: http://gw-layer-1-otel-agent-gateway:4317
        tls:
          insecure: true
    service:
      pipelines:
        traces:
          receivers: [otlp]
          exporters: [otlp]
        metrics:
          receivers: [otlp]
          exporters: [otlp]
        logs:
          receivers: [otlp]
          exporters: [otlp]
```

- Deploy DaemonSet: Configure the DaemonSet to export to Layer 2.

```yaml
# daemonset-values.yaml
targetSystem: "linux"
datadog:
  apiKey: <DATADOG_API_KEY>
  appKey: <DATADOG_APP_KEY>
  otelCollector:
    enabled: true
    config: |
      receivers:
        otlp:
          protocols:
            grpc:
              endpoint: "localhost:4317"
      exporters:
        otlp:
          endpoint: http://gw-layer-2-otel-agent-gateway:4317
          tls:
            insecure: true
      service:
        pipelines:
          traces:
            receivers: [otlp]
            exporters: [otlp]
          metrics:
            receivers: [otlp]
            exporters: [otlp]
          logs:
            receivers: [otlp]
            exporters: [otlp]
```
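Deploying from the final layer backward is a dependency ordering: each component should start only after the component it exports to exists. The sketch below derives that order from a mapping of each component to its downstream target, using the release names from this example as hypothetical keys, and rejects accidental loops such as a layer that ends up exporting back to itself.

```python
def deployment_order(downstream: dict[str, str], sink: str = "datadog") -> list[str]:
    """Return components ordered so each is deployed after the one it exports to.

    `downstream` maps each component to its export target; `sink` is the
    external destination, which is not deployed in the cluster.
    """
    order: list[str] = []
    in_progress: set[str] = set()

    def visit(node: str) -> None:
        if node == sink or node in order:
            return
        if node in in_progress:
            raise ValueError(f"export loop detected at {node!r}")
        in_progress.add(node)
        visit(downstream[node])  # deploy the target first
        in_progress.discard(node)
        order.append(node)

    for component in downstream:
        visit(component)
    return order


# Hypothetical keys matching the Helm releases above.
CHAIN = {
    "daemonset": "gw-layer-2",
    "gw-layer-2": "gw-layer-1",
    "gw-layer-1": "datadog",
}
```

For the three releases above, deployment_order(CHAIN) returns gw-layer-1, then gw-layer-2, then the DaemonSet.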

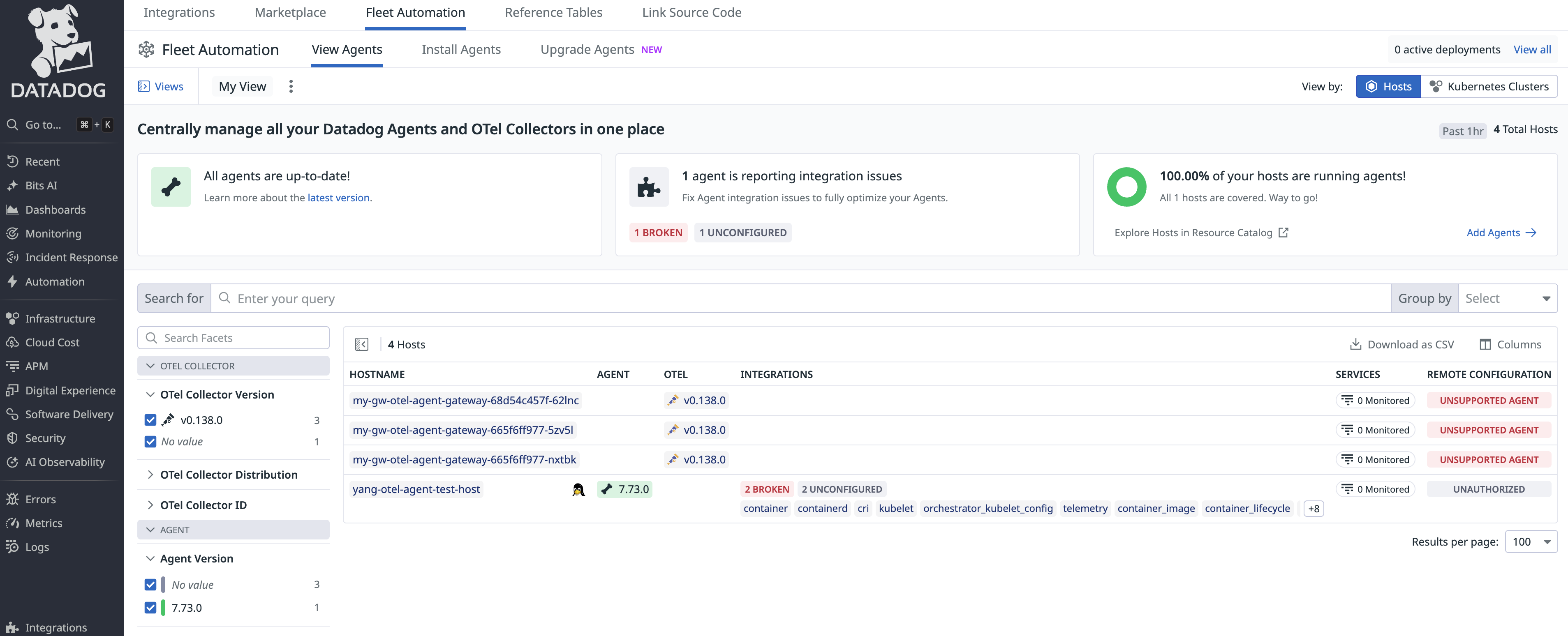

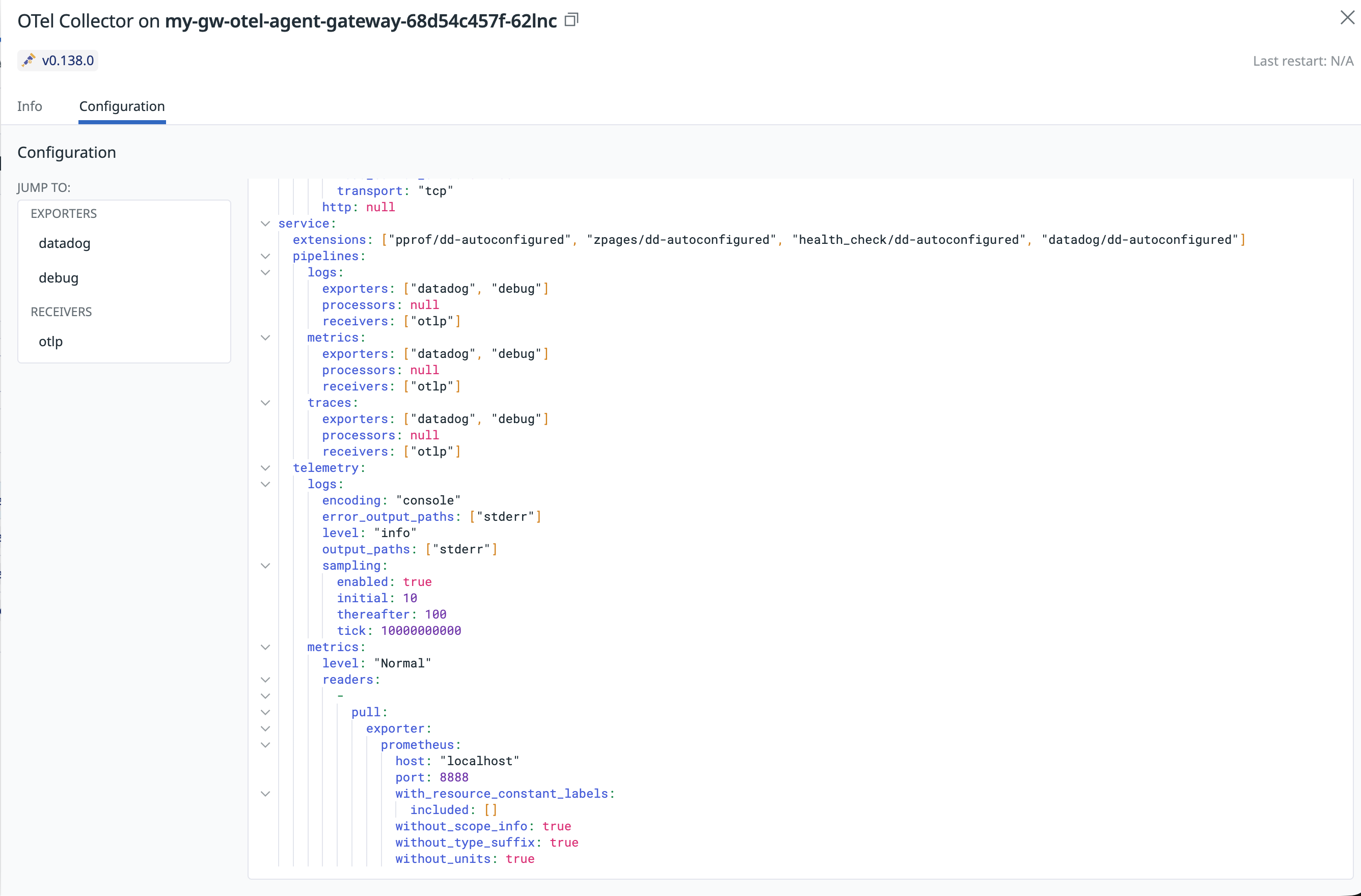

View gateway pods on Fleet Automation

The DDOT Collector gateway includes the Datadog extension by default. This extension exports Collector build information and configurations to Datadog, allowing you to monitor your telemetry pipeline from Infrastructure Monitoring and Fleet Automation.

To view your gateway pods:

- Navigate to Integrations > Fleet Automation.

- Select a gateway pod to view detailed build information and the running Collector configuration.

Known limitations

- Startup race condition: When deploying the DaemonSet and gateway in the same release, DaemonSet pods might start before the gateway service is ready, causing initial connection error logs. The OTLP exporter automatically retries, so these logs can be safely ignored. Alternatively, deploy the gateway first and wait for it to become ready before deploying the DaemonSet.
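How long DaemonSet pods keep retrying a batch depends on the exporter's retry_on_failure settings. The sketch below lists the nominal wait between attempts for an exponential backoff. The defaults used here (5 s initial interval, 1.5 multiplier, 30 s maximum interval, 300 s maximum elapsed time) are assumptions about the Collector's exporter defaults, so check the settings for your Collector version; the random jitter the real exporter applies is omitted.

```python
def backoff_schedule(
    initial_s: float = 5.0,
    multiplier: float = 1.5,
    max_interval_s: float = 30.0,
    max_elapsed_s: float = 300.0,
) -> list[float]:
    """Nominal waits between retries of an exponential backoff, without jitter.

    Stops before the cumulative wait would exceed max_elapsed_s.
    """
    waits: list[float] = []
    wait, elapsed = initial_s, 0.0
    while elapsed + wait <= max_elapsed_s:
        waits.append(wait)
        elapsed += wait
        wait = min(wait * multiplier, max_interval_s)  # grow, then cap
    return waits
```

With these values the schedule contains 12 waits that together stay under the 300-second limit.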
