Set Up the Worker in ECS Fargate

Overview

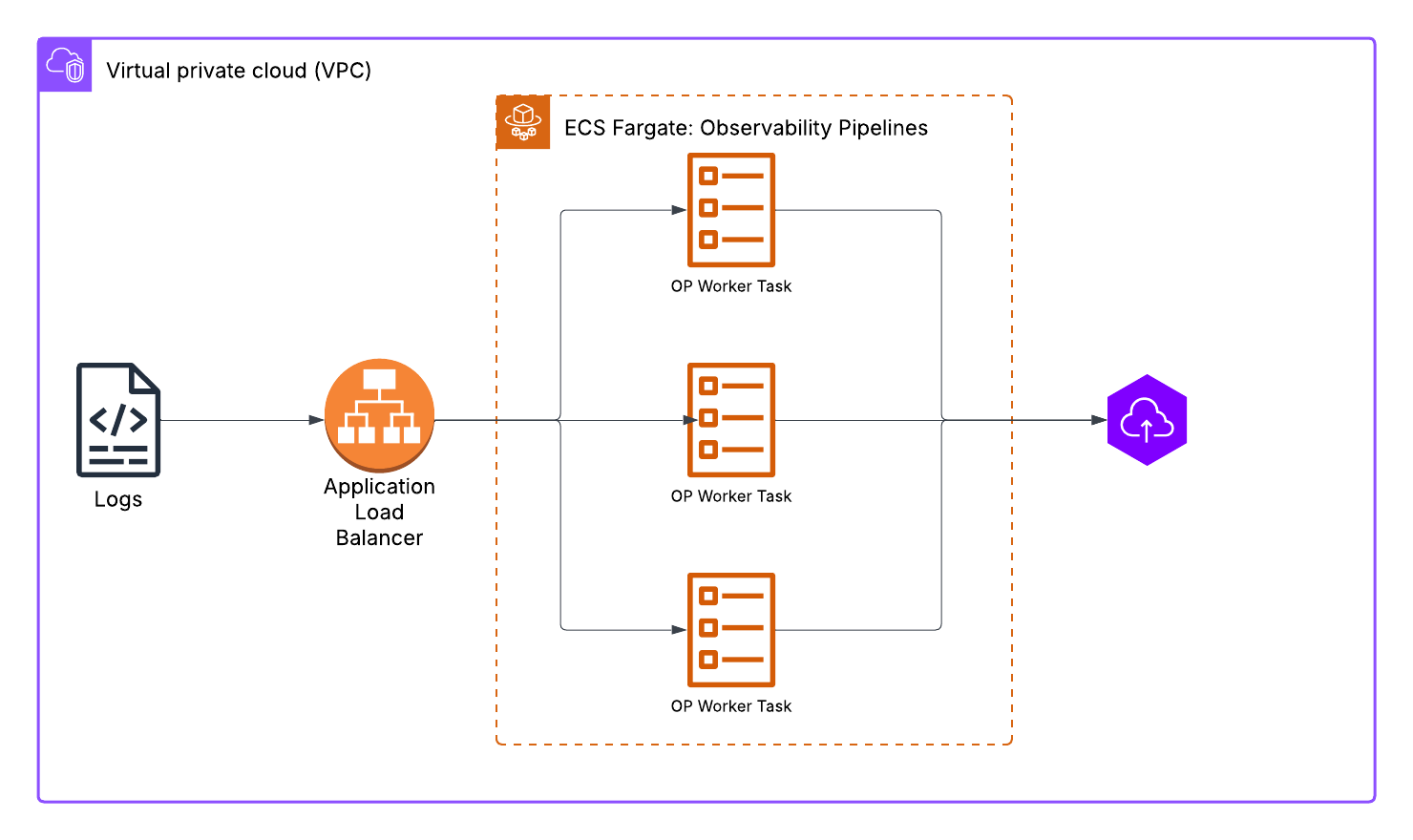

This document describes one way to set up the Observability Pipelines Worker in ECS Fargate.

Setup

The setup in this example consists of a Fargate task definition, an ECS service, and a load balancer.

Configure the task definition

Create a task definition. The task definition describes which containers to run, the configuration (such as the environment variables and ports), and the CPU and memory resources allocated for the task.

Deploy the tasks as replicas with auto scaling enabled. Base the minimum number of tasks on your typical log volume, and set the maximum high enough to absorb spikes or growth in log volume. See Best Practices for Scaling Observability Pipelines to help determine how much CPU and memory to allocate.

Notes:

- The CPU and memory guidance applies to the total across all tasks, not to a single task. For example, if you want to send 3 TB of logs to the Worker, you could either deploy three replicas with one vCPU each or deploy one replica with three vCPUs.

- Datadog recommends enabling load balancers for the pool of replica tasks.
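
The sizing arithmetic in the notes can be sketched in Python. This is a minimal sketch, assuming roughly 1 vCPU of total capacity per 1 TB of log volume, which is the ratio implied by the 3 TB example (use the figure and time window from the scaling guidance for real sizing). The function names are hypothetical. Because Fargate task sizes come in fixed steps (0.25, 0.5, 1, 2, 4, 8, or 16 vCPUs), the sketch only considers per-task sizes of 1, 2, and 4 vCPUs and rounds the replica count up.

```python
import math

# Assumption: ~1 vCPU of total capacity per 1 TB of log volume,
# inferred from the 3 TB -> 3 vCPU example. Not an official figure.
TB_PER_VCPU = 1.0


def total_vcpus(log_volume_tb: float, tb_per_vcpu: float = TB_PER_VCPU) -> int:
    """Total vCPUs needed across all Worker tasks, rounded up."""
    if log_volume_tb <= 0:
        raise ValueError("log volume must be positive")
    return math.ceil(log_volume_tb / tb_per_vcpu)


def layouts(log_volume_tb: float, vcpu_options=(1, 2, 4)) -> list[tuple[int, int]]:
    """(replicas, vCPUs per replica) pairs that cover the total vCPU requirement.

    vcpu_options is restricted to valid Fargate task sizes.
    """
    need = total_vcpus(log_volume_tb)
    return [(math.ceil(need / per_task), per_task) for per_task in vcpu_options]
```

For 3 TB, `layouts(3)` yields three 1-vCPU replicas, two 2-vCPU replicas, or one 4-vCPU replica. More, smaller replicas give the load balancer more targets and let auto scaling add capacity in finer steps.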

Set the DD_OP_SOURCE_* environment variables according to the pipeline's configuration and the task's port mappings. DD_OP_API_ENABLED and DD_OP_API_ADDRESS enable the Worker's API on the given address, which lets the load balancer run health checks against the Observability Pipelines Worker.
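
The relationship between these variables and the ports can be checked with a small Python helper that reads an ECS `environment` list. This is a sketch under the assumption that every source listen address uses a variable named DD_OP_SOURCE_*_ADDRESS in `host:port` form, as DD_OP_SOURCE_HTTP_SERVER_ADDRESS does in the example task definition; the helper names are hypothetical.

```python
def listen_port(address: str) -> int:
    """Return the port from a 'host:port' listen address such as '0.0.0.0:8181'."""
    _host, sep, port = address.rpartition(":")
    if not sep or not port.isdigit():
        raise ValueError(f"expected host:port, got {address!r}")
    return int(port)


def ports_from_env(environment: list[dict]) -> dict[str, int]:
    """Map the Worker's listen addresses in an ECS 'environment' list to ports.

    Assumption: source listen addresses are named DD_OP_SOURCE_*_ADDRESS.
    """
    env = {item["name"]: item["value"] for item in environment}
    # Health checks need the API enabled and listening on a reachable address.
    if env.get("DD_OP_API_ENABLED") != "true":
        raise ValueError("DD_OP_API_ENABLED must be 'true' for load balancer health checks")
    ports = {"api": listen_port(env["DD_OP_API_ADDRESS"])}
    for name, value in env.items():
        if name.startswith("DD_OP_SOURCE_") and name.endswith("_ADDRESS"):
            ports[name] = listen_port(value)
    return ports
```

With the example's values, the source port (80) is the one that should appear as a `containerPort` in `portMappings`, and the API port (8181) is the one the load balancer health check targets.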

An example task definition:

```json
{
  "family": "my-opw",
  "containerDefinitions": [
    {
      "name": "my-opw",
      "image": "datadog/observability-pipelines-worker",
      "cpu": 0,
      "portMappings": [
        {
          "name": "my-opw-80-tcp",
          "containerPort": 80,
          "hostPort": 80,
          "protocol": "tcp"
        }
      ],
      "essential": true,
      "command": [
        "run"
      ],
      "environment": [
        { "name": "DD_OP_API_ENABLED", "value": "true" },
        { "name": "DD_API_KEY", "value": "xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx" },
        { "name": "DD_SITE", "value": "datadoghq.com" },
        { "name": "DD_OP_API_ADDRESS", "value": "0.0.0.0:8181" },
        { "name": "DD_OP_SOURCE_HTTP_SERVER_ADDRESS", "value": "0.0.0.0:80" },
        { "name": "DD_OP_PIPELINE_ID", "value": "xxxxxxx-xxxx-xxxx-xxxx-xxxx" }
      ],
      "mountPoints": [],
      "volumesFrom": [],
      "systemControls": []
    }
  ],
  "tags": [
    { "key": "PrincipalId", "value": "AROAYYB64AB3JW3TEST" },
    { "key": "User", "value": "username@test.com" }
  ],
  "executionRoleArn": "arn:aws:iam::60142xxxxxx:role/ecsTaskExecutionRole",
  "networkMode": "awsvpc",
  "volumes": [],
  "placementConstraints": [],
  "requiresCompatibilities": [
    "FARGATE"
  ],
  "cpu": "xxx",
  "memory": "xxx"
}
```
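
Before registering a task definition like this one, a few Fargate requirements can be checked programmatically. The sketch below is a hypothetical helper, not part of any AWS or Datadog tooling. It checks that the network mode is `awsvpc` (required on Fargate), that `FARGATE` is listed in `requiresCompatibilities`, that the task-level `cpu` and `memory` are set rather than left as the `xxx` placeholders, and that each `hostPort` equals its `containerPort`, which `awsvpc` mode requires.

```python
def fargate_problems(task_def: dict) -> list[str]:
    """Return problems found in an ECS task definition dict (empty list if none)."""
    problems = []
    if task_def.get("networkMode") != "awsvpc":
        problems.append("networkMode must be 'awsvpc' on Fargate")
    if "FARGATE" not in task_def.get("requiresCompatibilities", []):
        problems.append("requiresCompatibilities should include 'FARGATE'")
    for key in ("cpu", "memory"):
        value = str(task_def.get(key, "")).strip()
        # Fargate requires task-level cpu and memory; 'xxx' is the example's placeholder.
        if not value or set(value.lower()) == {"x"}:
            problems.append(f"task-level {key} is not set (got {value!r})")
    for container in task_def.get("containerDefinitions", []):
        for mapping in container.get("portMappings", []):
            host_port = mapping.get("hostPort")
            # In awsvpc mode, hostPort must be omitted or equal to containerPort.
            if host_port is not None and host_port != mapping.get("containerPort"):
                problems.append(
                    f"{container.get('name', '?')}: hostPort {host_port} "
                    f"!= containerPort {mapping.get('containerPort')}"
                )
    return problems
```

To use it, pass the parsed JSON, for example `fargate_problems(json.load(f))` for an open file `f` containing the task definition. As written, the example task definition reports two problems until `cpu` and `memory` are filled in.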

Configure the ECS service

Create an ECS service. The service configuration sets the number of Worker replicas to run and the scaling policy. In this example, the scaling policy is set to target an average CPU utilization of 70% with a minimum of two replicas and a maximum of five replicas.
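
The scaling policy in this example can be expressed as request parameters for AWS Application Auto Scaling, which manages ECS service auto scaling. This is a minimal sketch: the cluster, service, and policy names are hypothetical, and the two dicts are intended for the boto3 `application-autoscaling` client's `register_scalable_target` and `put_scaling_policy` calls. The `estimate_desired_count` helper is a simplified proportional model for intuition only; it is not AWS's exact target tracking algorithm.

```python
import math

CLUSTER = "my-cluster"      # hypothetical cluster name
SERVICE = "my-opw-service"  # hypothetical service name
RESOURCE_ID = f"service/{CLUSTER}/{SERVICE}"

# Minimum of two replicas, maximum of five.
SCALABLE_TARGET = {
    "ServiceNamespace": "ecs",
    "ResourceId": RESOURCE_ID,
    "ScalableDimension": "ecs:service:DesiredCount",
    "MinCapacity": 2,
    "MaxCapacity": 5,
}

# Target tracking on average service CPU utilization of 70%.
SCALING_POLICY = {
    "PolicyName": "opw-cpu-70",  # hypothetical policy name
    "ServiceNamespace": "ecs",
    "ResourceId": RESOURCE_ID,
    "ScalableDimension": "ecs:service:DesiredCount",
    "PolicyType": "TargetTrackingScaling",
    "TargetTrackingScalingPolicyConfiguration": {
        "TargetValue": 70.0,
        "PredefinedMetricSpecification": {
            "PredefinedMetricType": "ECSServiceAverageCPUUtilization"
        },
    },
}

# To apply (requires boto3 and AWS credentials):
#   client = boto3.client("application-autoscaling")
#   client.register_scalable_target(**SCALABLE_TARGET)
#   client.put_scaling_policy(**SCALING_POLICY)


def estimate_desired_count(current: int, avg_cpu: float, target: float = 70.0,
                           lo: int = 2, hi: int = 5) -> int:
    """Simplified model: scale replicas proportionally to CPU/target, clamped to [lo, hi]."""
    wanted = math.ceil(current * avg_cpu / target)
    return max(lo, min(hi, wanted))
```

Under this simplified model, two replicas averaging 105% of one task's CPU budget would grow to three, while the clamp keeps the count between two and five no matter how far CPU moves from 70%.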

Set up load balancing

Depending on your use case, configure either an Application Load Balancer or a Network Load Balancer to target the group of Fargate tasks you defined earlier. Because the tasks use the `awsvpc` network mode, register them with an IP target type. Configure the health check against the Worker's API port set by DD_OP_API_ADDRESS in the task definition (8181 in the example).
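
A target group for the Worker tasks can be described as parameters for the Elastic Load Balancing v2 `create_target_group` call. This is a sketch, not a verified configuration: the target group name and VPC ID are hypothetical, and the `/health` health check path is an assumption about the Worker's API, so confirm it against the Observability Pipelines documentation. The security group on the tasks also needs to allow inbound traffic from the load balancer on both the traffic port and the API port.

```python
def target_group_request(vpc_id: str, load_balancer: str,
                         traffic_port: int = 80, api_port: int = 8181) -> dict:
    """Build create_target_group parameters for the Worker tasks.

    load_balancer is "application" (ALB) or "network" (NLB).
    """
    if load_balancer == "application":
        protocol = "HTTP"
    elif load_balancer == "network":
        protocol = "TCP"
    else:
        raise ValueError("load_balancer must be 'application' or 'network'")
    return {
        "Name": "my-opw-targets",       # hypothetical target group name
        "Protocol": protocol,           # listener traffic to the source port
        "Port": traffic_port,           # matches DD_OP_SOURCE_HTTP_SERVER_ADDRESS
        "VpcId": vpc_id,
        "TargetType": "ip",             # awsvpc (Fargate) tasks register by IP
        "HealthCheckProtocol": "HTTP",  # both ALB and NLB support HTTP health checks
        "HealthCheckPort": str(api_port),  # the API expects the port as a string
        "HealthCheckPath": "/health",   # assumption: Worker API health endpoint
    }

# To apply (requires boto3 and AWS credentials):
#   boto3.client("elbv2").create_target_group(**target_group_request("vpc-...", "network"))
```

Health checking the API port rather than the traffic port means a task is marked unhealthy when the Worker itself stops responding, even if the source port is still accepting TCP connections.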