Install the Worker


Overview

The Observability Pipelines Worker is software that runs in your environment to centrally aggregate and process your logs and metrics (in Preview), and then route them to different destinations.

Note: If you are using a proxy, see the proxy option in Bootstrap options.

Install the Worker

If you set up your pipeline using the API or Terraform, see API or Terraform pipeline setup on how to install the Worker.

If you set up your pipeline in the UI, see Pipeline UI setup on how to install the Worker.

API or Terraform pipeline setup

After setting up your pipeline using the API or Terraform, follow the instructions below to install the Worker for your platform.

If you are using:

- Secrets Management: Run this command to install the Worker:

      docker run -i -e DD_API_KEY=<DATADOG_API_KEY> \
        -e DD_OP_PIPELINE_ID=<PIPELINE_ID> \
        -e DD_SITE=<DATADOG_SITE> \
        -v /path/to/local/bootstrap.yaml:/etc/observability-pipelines-worker/bootstrap.yaml \
        datadog/observability-pipelines-worker run

- Environment variables: Run this command to install the Worker:

      docker run -i -e DD_API_KEY=<DATADOG_API_KEY> \
        -e DD_OP_PIPELINE_ID=<PIPELINE_ID> \
        -e DD_SITE=<DATADOG_SITE> \
        -e <SOURCE_ENV_VARIABLE> \
        -e <DESTINATION_ENV_VARIABLE> \
        -p 8088:8088 \
        datadog/observability-pipelines-worker run

You must replace the placeholders with the following values, if applicable:

- <DATADOG_API_KEY>: Your Datadog API key.

  - Note: The API key must be enabled for Remote Configuration.

- <PIPELINE_ID>: The ID of your pipeline.

- <DATADOG_SITE>: The Datadog site.

- <SOURCE_ENV_VARIABLE>: The environment variables required by the source you are using for your pipeline.

  - For example: DD_OP_SOURCE_DATADOG_AGENT_ADDRESS=0.0.0.0:8282

  - See Environment Variables for a list of source environment variables.

- <DESTINATION_ENV_VARIABLE>: The environment variables required by the destinations you are using for your pipeline.

  - For example: DD_OP_DESTINATION_SPLUNK_HEC_ENDPOINT_URL=https://hec.splunkcloud.com:8088

  - See Environment Variables for a list of destination environment variables.
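Because the commands above are written with angle-bracket placeholders, it can help to confirm that none are left before running one. The following is a minimal sketch, not part of the Worker or Docker tooling: `find_placeholders` is a hypothetical helper name, and the pipeline ID `abc123` is an invented value used only for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not provided by Datadog): list any <UPPER_CASE>
# placeholders that are still present in a command string.
find_placeholders() {
  # grep -o prints each match on its own line; sort -u removes repeats.
  printf '%s\n' "$1" | grep -oE '<[A-Z_]+>' | sort -u
}

# Example command with two placeholders left in; "abc123" is an invented ID.
cmd='docker run -i -e DD_API_KEY=<DATADOG_API_KEY> -e DD_OP_PIPELINE_ID=abc123 -e DD_SITE=<DATADOG_SITE> datadog/observability-pipelines-worker run'

leftover="$(find_placeholders "$cmd")"
if [ -n "$leftover" ]; then
  echo "Unreplaced placeholders:"
  echo "$leftover"
fi
```

The check only prints what it finds; it does not run the command.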

Note: By default, the docker run command exposes the same port the Worker is listening on. If you want to map the Worker’s container port to a different port on the Docker host, use the -p | --publish option in the command:

    -p 8282:8088 datadog/observability-pipelines-worker run

If you are using Secrets Management:

- Modify the Worker bootstrap file to connect the Worker to your secrets manager. See Secrets Management for more information.

- Restart the Worker to use the updated bootstrap file:

      sudo systemctl restart observability-pipelines-worker
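For the Docker port mapping described in the note above, the `-p` option takes the host port first and the container port second. As a rough sketch, the flag can be derived from the host port you want and the address the Worker listens on. `opw_publish_flag` is a hypothetical helper name, not a Docker or Datadog command.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the -p flag from the desired host port and
# the address the Worker listens on inside the container.
opw_publish_flag() {
  local host_port="$1" listen_addr="$2"
  # ${listen_addr##*:} removes everything up to the last colon, leaving the port.
  local container_port="${listen_addr##*:}"
  printf -- '-p %s:%s\n' "$host_port" "$container_port"
}

opw_publish_flag 8282 0.0.0.0:8088   # prints: -p 8282:8088
```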

See Update Existing Pipelines if you want to make changes to your pipeline’s configuration.

Note: See Add domains to firewall allowlist if you are using a firewall.

The Observability Pipelines Worker supports all major Kubernetes distributions, such as:

- Amazon Elastic Kubernetes Service (EKS)

- Azure Kubernetes Service (AKS)

- Google Kubernetes Engine (GKE)

- Red Hat OpenShift

- Rancher

Download the Helm chart values file. See the full list of configuration options available.

- If you are not using a managed service, see Self-hosted and self-managed Kubernetes clusters before continuing to the next step.

Add the Datadog chart repository to Helm:

    helm repo add datadog https://helm.datadoghq.com

If you already have the Datadog chart repository, run the following command to ensure it is up to date:

    helm repo update

If you are using:

- Secrets Management:

  - See Secrets Management on how to configure your values.yaml file for your secrets manager.

  - Run this command to install the Worker:

        helm upgrade --install opw \
          -f values.yaml \
          --set datadog.apiKey=<DATADOG_API_KEY> \
          --set datadog.pipelineId=<PIPELINE_ID> \
          datadog/observability-pipelines-worker

- Environment variables: Run this command to install the Worker:

      helm upgrade --install opw \
        -f values.yaml \
        --set datadog.apiKey=<DATADOG_API_KEY> \
        --set datadog.pipelineId=<PIPELINE_ID> \
        --set <SOURCE_ENV_VARIABLES> \
        --set <DESTINATION_ENV_VARIABLES> \
        --set service.ports[0].protocol=TCP,service.ports[0].port=<SERVICE_PORT>,service.ports[0].targetPort=<TARGET_PORT> \
        datadog/observability-pipelines-worker

You must replace the placeholders with the following values:

- <DATADOG_API_KEY>: Your Datadog API key.

  - Note: The API key must be enabled for Remote Configuration.

- <PIPELINE_ID>: The ID of your pipeline.

- <SOURCE_ENV_VARIABLES>: The environment variables required by the source you are using for your pipeline.

  - For example:

        --set env[0].name=DD_OP_SOURCE_DATADOG_AGENT_ADDRESS,env[0].value='0.0.0.0' \

  - See Environment Variables for a list of source environment variables.

- <DESTINATION_ENV_VARIABLES>: The environment variables required by the destinations you are using for your pipeline.

  - For example:

        --set env[1].name=DD_OP_DESTINATION_SPLUNK_HEC_ENDPOINT_URL,env[1].value='https://hec.splunkcloud.com:8088' \

  - See Environment Variables for a list of destination environment variables.

Note: By default, the Kubernetes Service maps incoming port <SERVICE_PORT> to the port the Worker is listening on (<TARGET_PORT>). If you want to map the Worker’s pod port to a different incoming port of the Kubernetes Service, use the following service.ports[0].port and service.ports[0].targetPort values in the command:

    --set service.ports[0].protocol=TCP,service.ports[0].port=8088,service.ports[0].targetPort=8282
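The source and destination examples above use indexed `env[N].name` and `env[N].value` pairs, which are easy to misnumber by hand. The sketch below generates those flags from a list of NAME=VALUE pairs. `opw_helm_env_flags` is a hypothetical helper name, and the sketch assumes the values contain no commas, which Helm's `--set` syntax would require escaping.

```shell
#!/usr/bin/env bash
# Hypothetical helper: turn NAME=VALUE pairs into indexed
# --set env[N].name=...,env[N].value='...' flags, one per line.
opw_helm_env_flags() {
  local i=0 pair
  for pair in "$@"; do
    # ${pair%%=*} is the text before the first "="; ${pair#*=} is the rest.
    printf -- "--set env[%d].name=%s,env[%d].value='%s'\n" \
      "$i" "${pair%%=*}" "$i" "${pair#*=}"
    i=$((i + 1))
  done
}

opw_helm_env_flags \
  DD_OP_SOURCE_DATADOG_AGENT_ADDRESS=0.0.0.0:8282 \
  DD_OP_DESTINATION_SPLUNK_HEC_ENDPOINT_URL=https://hec.splunkcloud.com:8088
```

Each printed line can be pasted into the helm upgrade command in place of the corresponding placeholder.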

See Update Existing Pipelines if you want to make changes to your pipeline’s configuration.

Notes:

- If you enable disk buffering for destinations, you must enable Kubernetes persistent volumes in the Observability Pipelines Helm chart.

- See Add domains to firewall allowlist if you are using a firewall.

Self-hosted and self-managed Kubernetes clusters

If you are running a self-hosted and self-managed Kubernetes cluster, and defined zones with node labels using topology.kubernetes.io/zone, then you can use the Helm chart values file as is. However, if you are not using the label topology.kubernetes.io/zone, you need to update the topologyKey in the values.yaml file to match the key you are using. Or if you run your Kubernetes install without zones, remove the entire topology.kubernetes.io/zone section.

Kubernetes services created

When you install the Observability Pipelines Worker on Kubernetes, the Helm chart creates:

- A headless Service (clusterIP: None) that exposes the individual Worker Pods using DNS. This allows direct Pod-to-Pod communication and stable network identities for peer discovery or direct Pod addressing.

- A ClusterIP service that provides a single virtual IP and DNS name for the Worker. This enables load balancing across Worker Pods for internal cluster traffic.

LoadBalancer service

If you set service.type: LoadBalancer in the Helm chart, Kubernetes provisions a load balancer in supported environments (for example, Amazon EKS with the AWS Load Balancer Controller installed) and exposes the Worker Service with an external IP/DNS name. Use this LoadBalancer service when traffic originates outside the cluster.

For RHEL and CentOS, the Observability Pipelines Worker supports versions 8.0 or later.

Follow the steps below if you want to use the one-line installation script to install the Worker. Otherwise, see Manually install the Worker on Linux.

- If you are using:

  - Secrets Management: Run this one-step command to install the Worker:

        DD_API_KEY=<DATADOG_API_KEY> DD_OP_PIPELINE_ID=<PIPELINE_ID> DD_SITE=<DATADOG_SITE> bash -c "$(curl -L https://install.datadoghq.com/scripts/install_script_op_worker2.sh)"

  - Environment variables: Run this one-step command to install the Worker:

        DD_API_KEY=<DATADOG_API_KEY> DD_OP_PIPELINE_ID=<PIPELINE_ID> DD_SITE=<DATADOG_SITE> <SOURCE_ENV_VARIABLE> <DESTINATION_ENV_VARIABLE> bash -c "$(curl -L https://install.datadoghq.com/scripts/install_script_op_worker2.sh)"

    You must replace the placeholders with the following values, if applicable:

    - <DATADOG_API_KEY>: Your Datadog API key.

      - Note: The API key must be enabled for Remote Configuration.

    - <PIPELINE_ID>: The ID of your pipeline.

    - <DATADOG_SITE>: The Datadog site.

    - <SOURCE_ENV_VARIABLE>: The environment variables required by the source you are using for your pipeline.

      - For example: DD_OP_SOURCE_DATADOG_AGENT_ADDRESS=0.0.0.0:8282

      - See Environment Variables for a list of source environment variables.

    - <DESTINATION_ENV_VARIABLE>: The environment variables required by the destinations you are using for your pipeline.

      - For example: DD_OP_DESTINATION_SPLUNK_HEC_ENDPOINT_URL=https://hec.splunkcloud.com:8088

      - See Environment Variables for a list of destination environment variables.

    Note: The environment variables used by the Worker in /etc/default/observability-pipelines-worker are not updated on subsequent runs of the install script. If changes are needed, update the file manually and restart the Worker.

- If you are using Secrets Management:

- Modify the Worker bootstrap file to connect the Worker to your secrets manager. See Secrets Management for more information.

- Restart the Worker to use the updated bootstrap file:

sudo systemctl restart observability-pipelines-worker

See Update Existing Pipelines if you want to make changes to your pipeline’s configuration.

Note: See Add domains to firewall allowlist if you are using a firewall.

Select one of the options in the dropdown to provide the expected log or metrics (in Preview) volume for the pipeline:

| Option | Description |
| --- | --- |
| Unsure | Use this option if you are not able to project the data volume or you want to test the Worker. This option provisions the EC2 Auto Scaling group with a maximum of 2 general purpose t4g.large instances. |
| 1-5 TB/day | This option provisions the EC2 Auto Scaling group with a maximum of 2 compute optimized c6g.large instances. |
| 5-10 TB/day | This option provisions the EC2 Auto Scaling group with a minimum of 2 and a maximum of 5 compute optimized c6g.large instances. |
| >10 TB/day | Datadog recommends this option for large-scale production deployments. It provisions the EC2 Auto Scaling group with a minimum of 2 and a maximum of 10 compute optimized c6g.xlarge instances. |

Note: All other parameters are set to reasonable defaults for a Worker deployment, but you can adjust them for your use case as needed in the AWS Console before creating the stack.

Select the AWS region you want to use to install the Worker.

Click Select API key to choose the Datadog API key you want to use.

- Note: The API key must be enabled for Remote Configuration.

Click Launch CloudFormation Template to navigate to the AWS Console to review the stack configuration and then launch it. Make sure the CloudFormation parameters are as expected.

Select the VPC and subnet you want to use to install the Worker.

Review and check the necessary permissions checkboxes for IAM. Click Submit to create the stack. CloudFormation handles the installation at this point; the Worker instances are launched, the necessary software is downloaded, and the Worker starts automatically.

See Update Existing Pipelines if you want to make changes to your pipeline’s configuration.

Note: See Add domains to firewall allowlist if you are using a firewall.
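As a small illustration of the volume options listed earlier in these steps, the sketch below maps an expected daily volume in whole terabytes to an option name. `opw_volume_option` is a hypothetical helper, and two choices in it are assumptions: exactly 5 or 10 TB/day falls into the lower tier (the documented ranges share those endpoints), and anything under 1 TB/day falls into the 1-5 TB/day tier.

```shell
#!/usr/bin/env bash
# Hypothetical helper: pick a volume option name from whole TB/day.
# An empty argument means the volume is unknown.
opw_volume_option() {
  local tb="$1"
  if [ -z "$tb" ]; then
    echo "Unsure"
  elif [ "$tb" -le 5 ]; then
    echo "1-5 TB/day"      # assumption: 5 TB/day belongs to this tier
  elif [ "$tb" -le 10 ]; then
    echo "5-10 TB/day"     # assumption: 10 TB/day belongs to this tier
  else
    echo ">10 TB/day"
  fi
}

opw_volume_option 7    # prints: 5-10 TB/day
```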

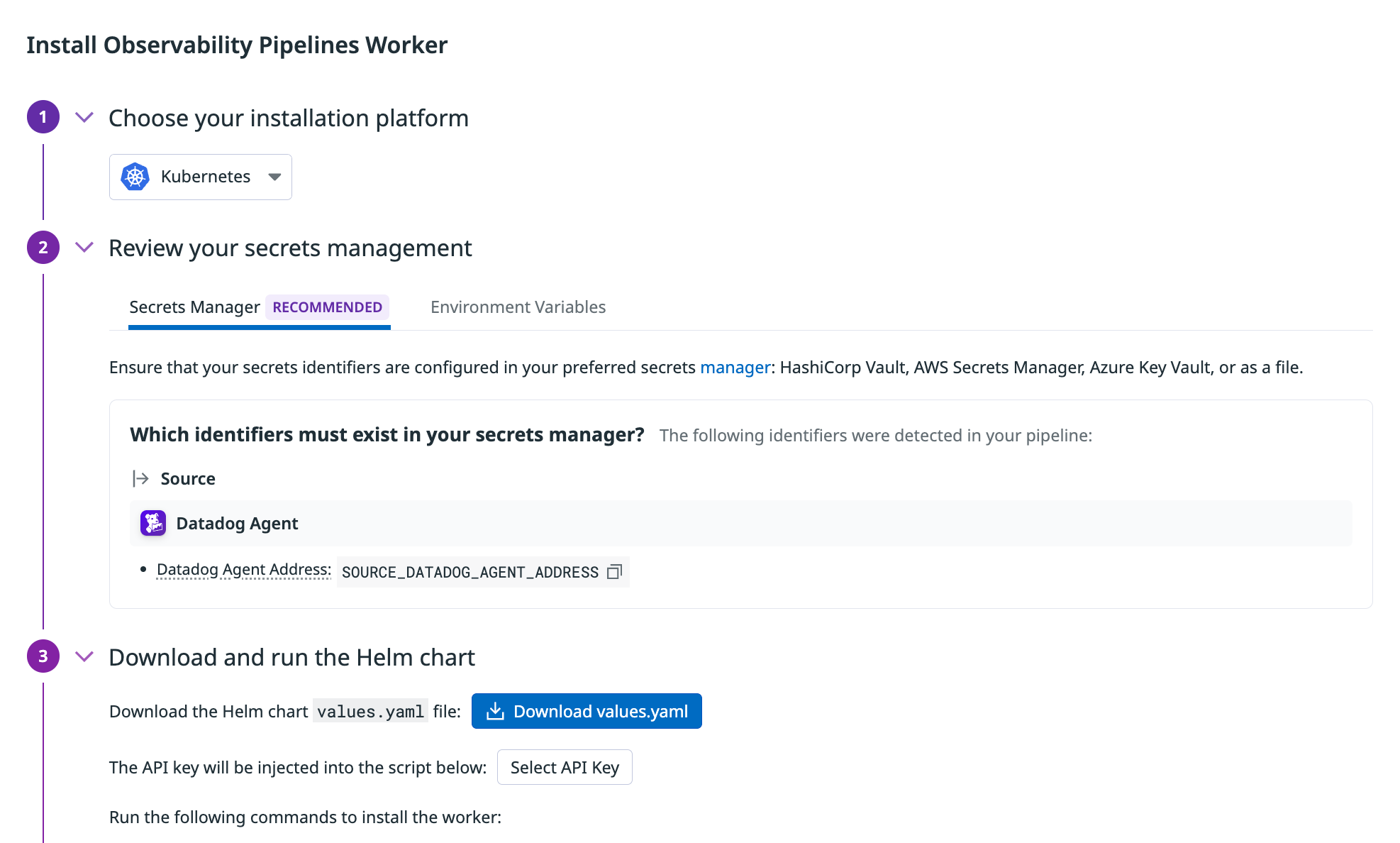

Pipeline UI setup

After you set up your source, destinations, and processors on the Build page of the pipeline UI, follow the steps on the Install page to install the Worker.

- Select the platform on which you want to install the Worker.

- In Review your secrets management, if you select:

- Secrets Management (Recommended): Ensure that your secrets are configured in your secrets manager.

- Environment Variables: Enter the environment variables for your sources and destinations, if applicable.

- Follow the instructions on installing the Worker for your platform.

- Click Select API key to choose the Datadog API key you want to use.

- Note: The API key must be enabled for Remote Configuration.

- Run the command provided in the UI to install the Worker. If you are using:

- Secrets Management: The command points to the Worker bootstrap file that you configure to resolve secrets using your secrets manager.

- Environment variables: The command is automatically populated with the environment variables you entered earlier.

- Note: By default, the docker run command exposes the same port the Worker is listening on. If you want to map the Worker’s container port to a different port on the Docker host, use the -p | --publish option in the command:

      -p 8282:8088 datadog/observability-pipelines-worker run

- If you are using Secrets Management:

- Modify the Worker bootstrap file to connect the Worker to your secrets manager. See Secrets Management for more information.

- Restart the Worker to use the updated bootstrap file:

sudo systemctl restart observability-pipelines-worker

- Navigate back to the Observability Pipelines installation page and click Deploy.

See Update Existing Pipelines if you want to make changes to your pipeline’s configuration.

Note: See Add domains to firewall allowlist if you are using a firewall.

The Observability Pipelines Worker supports all major Kubernetes distributions, such as:

- Amazon Elastic Kubernetes Service (EKS)

- Azure Kubernetes Service (AKS)

- Google Kubernetes Engine (GKE)

- Red Hat OpenShift

- Rancher

- Download the Helm chart values file. See the full list of configuration options available.

- If you are not using a managed service, see Self-hosted and self-managed Kubernetes clusters before continuing to the next step.

- Click Select API key to choose the Datadog API key you want to use.

- Note: The API key must be enabled for Remote Configuration.

- Add the Datadog chart repository to Helm:

      helm repo add datadog https://helm.datadoghq.com

  If you already have the Datadog chart repository, run the following command to make sure it is up to date:

      helm repo update

- If you are using:

  - Secrets Management:

    - See Secrets Management on how to configure your values.yaml file for your secrets manager.

    - Run this command to install the Worker:

          helm upgrade --install opw \
            -f values.yaml \
            --set datadog.apiKey=<DATADOG_API_KEY> \
            --set datadog.pipelineId=<PIPELINE_ID> \
            datadog/observability-pipelines-worker

  - Environment variables: Run the command provided in the UI to install the Worker. The command is automatically populated with the environment variables you entered earlier.

        helm upgrade --install opw \
          -f values.yaml \
          --set datadog.apiKey=<DATADOG_API_KEY> \
          --set datadog.pipelineId=<PIPELINE_ID> \
          --set <SOURCE_ENV_VARIABLES> \
          --set <DESTINATION_ENV_VARIABLES> \
          --set service.ports[0].protocol=TCP,service.ports[0].port=<SERVICE_PORT>,service.ports[0].targetPort=<TARGET_PORT> \
          datadog/observability-pipelines-worker

    Note: By default, the Kubernetes Service maps incoming port <SERVICE_PORT> to the port the Worker is listening on (<TARGET_PORT>). If you want to map the Worker’s pod port to a different incoming port of the Kubernetes Service, use the following service.ports[0].port and service.ports[0].targetPort values in the command:

        --set service.ports[0].protocol=TCP,service.ports[0].port=8088,service.ports[0].targetPort=8282

- Navigate back to the Observability Pipelines installation page and click Deploy.

See Update Existing Pipelines if you want to make changes to your pipeline’s configuration.

Notes:

- If you enable disk buffering for destinations, you must enable Kubernetes persistent volumes in the Observability Pipelines Helm chart.

- See Add domains to firewall allowlist if you are using a firewall.

Self-hosted and self-managed Kubernetes clusters

If you are running a self-hosted and self-managed Kubernetes cluster, and defined zones with node labels using topology.kubernetes.io/zone, then you can use the Helm chart values file as is. However, if you are not using the label topology.kubernetes.io/zone, you need to update the topologyKey in the values.yaml file to match the key you are using. Or if you run your Kubernetes install without zones, remove the entire topology.kubernetes.io/zone section.

Kubernetes services created

When you install the Observability Pipelines Worker on Kubernetes, the Helm chart creates:

- A headless Service (clusterIP: None) that exposes the individual Worker Pods using DNS. This allows direct Pod-to-Pod communication and stable network identities for peer discovery or direct Pod addressing.

- A ClusterIP service that provides a single virtual IP and DNS name for the Worker. This enables load balancing across Worker Pods for internal cluster traffic.

LoadBalancer service

If you set service.type: LoadBalancer in the Helm chart, Kubernetes provisions a load balancer in supported environments (for example, Amazon EKS with the AWS Load Balancer Controller installed) and exposes the Worker Service with an external IP/DNS name. Use this LoadBalancer service when traffic originates outside the cluster.

For RHEL and CentOS, the Observability Pipelines Worker supports versions 8.0 or later.

Follow the steps below if you want to use the one-line installation script to install the Worker. Otherwise, see Manually install the Worker on Linux.

- Click Select API key to choose the Datadog API key you want to use.

- Note: The API key must be enabled for Remote Configuration.

- Run the one-step command provided in the UI to install the Worker.

- Note: If you are using environment variables, the environment variables used by the Worker in /etc/default/observability-pipelines-worker are not updated on subsequent runs of the install script. If changes are needed, update the file manually and restart the Worker.

- If you are using Secrets Management:

- Modify the Worker bootstrap file to connect the Worker to your secrets manager. See Secrets Management for more information.

- Restart the Worker to use the updated bootstrap file:

sudo systemctl restart observability-pipelines-worker

- Navigate back to the Observability Pipelines installation page and click Deploy.

See Update Existing Pipelines if you want to make changes to your pipeline’s configuration.

Note: See Add domains to firewall allowlist if you are using a firewall.

See Set Up the Worker in ECS Fargate for instructions.

Select one of the options in the dropdown to provide the expected log or metrics (in Preview) volume for the pipeline:

| Option | Description |
| --- | --- |
| Unsure | Use this option if you are not able to project the data volume or you want to test the Worker. This option provisions the EC2 Auto Scaling group with a maximum of 2 general purpose t4g.large instances. |
| 1-5 TB/day | This option provisions the EC2 Auto Scaling group with a maximum of 2 compute optimized c6g.large instances. |
| 5-10 TB/day | This option provisions the EC2 Auto Scaling group with a minimum of 2 and a maximum of 5 compute optimized c6g.large instances. |
| >10 TB/day | Datadog recommends this option for large-scale production deployments. It provisions the EC2 Auto Scaling group with a minimum of 2 and a maximum of 10 compute optimized c6g.xlarge instances. |

Note: All other parameters are set to reasonable defaults for a Worker deployment, but you can adjust them for your use case as needed in the AWS Console before creating the stack.

Select the AWS region you want to use to install the Worker.

Click Select API key to choose the Datadog API key you want to use.

- Note: The API key must be enabled for Remote Configuration.

Click Launch CloudFormation Template to navigate to the AWS Console to review the stack configuration and then launch it. Make sure the CloudFormation parameters are as expected.

Select the VPC and subnet you want to use to install the Worker.

Review and check the necessary permissions checkboxes for IAM. Click Submit to create the stack. CloudFormation handles the installation at this point; the Worker instances are launched, the necessary software is downloaded, and the Worker starts automatically.

Navigate back to the Observability Pipelines installation page and click Deploy.

See Update Existing Pipelines if you want to make changes to your pipeline’s configuration.

Note: See Add domains to firewall allowlist if you are using a firewall.

Manually install the Worker on Linux

If you prefer not to use the one-line installation script for Linux, follow these step-by-step instructions:

- Set up APT transport for downloading using HTTPS:

      sudo apt-get update
      sudo apt-get install apt-transport-https curl gnupg

- Run the following commands to set up the Datadog deb repo on your system and create a Datadog archive keyring:

      sudo sh -c "echo 'deb [signed-by=/usr/share/keyrings/datadog-archive-keyring.gpg] https://apt.datadoghq.com/ stable observability-pipelines-worker-2' > /etc/apt/sources.list.d/datadog-observability-pipelines-worker.list"
      sudo touch /usr/share/keyrings/datadog-archive-keyring.gpg
      sudo chmod a+r /usr/share/keyrings/datadog-archive-keyring.gpg
      curl https://keys.datadoghq.com/DATADOG_APT_KEY_CURRENT.public | sudo gpg --no-default-keyring --keyring /usr/share/keyrings/datadog-archive-keyring.gpg --import --batch
      curl https://keys.datadoghq.com/DATADOG_APT_KEY_06462314.public | sudo gpg --no-default-keyring --keyring /usr/share/keyrings/datadog-archive-keyring.gpg --import --batch
      curl https://keys.datadoghq.com/DATADOG_APT_KEY_F14F620E.public | sudo gpg --no-default-keyring --keyring /usr/share/keyrings/datadog-archive-keyring.gpg --import --batch
      curl https://keys.datadoghq.com/DATADOG_APT_KEY_C0962C7D.public | sudo gpg --no-default-keyring --keyring /usr/share/keyrings/datadog-archive-keyring.gpg --import --batch

- Run the following commands to update your local apt repo and install the Worker:

      sudo apt-get update
      sudo apt-get install observability-pipelines-worker datadog-signing-keys

- If you are using:

  - Secrets Management: Add your API key, site (for example, datadoghq.com for US1), and pipeline ID to the Worker’s environment file:

        sudo tee /etc/default/observability-pipelines-worker > /dev/null <<EOF
        DD_API_KEY=<DATADOG_API_KEY>
        DD_OP_PIPELINE_ID=<PIPELINE_ID>
        DD_SITE=<DATADOG_SITE>
        EOF

  - Environment variables: Add your API key, site (for example, datadoghq.com for US1), pipeline ID, source, and destination environment variables to the Worker’s environment file:

        sudo tee /etc/default/observability-pipelines-worker > /dev/null <<EOF
        DD_API_KEY=<DATADOG_API_KEY>
        DD_OP_PIPELINE_ID=<PIPELINE_ID>
        DD_SITE=<DATADOG_SITE>
        <SOURCE_ENV_VARIABLES>
        <DESTINATION_ENV_VARIABLES>
        EOF

- Start the Worker:

      sudo systemctl restart observability-pipelines-worker

Note: The environment variables used by the Worker in /etc/default/observability-pipelines-worker are not updated on subsequent runs of the install script. If changes are needed, update the file manually and restart the Worker.
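Since the environment file has to be edited by hand after the first install, a small helper can make the change repeatable. The sketch below sets one variable in a file, replacing an existing line or appending a new one. `set_env_var` is a hypothetical helper name, and the demonstration runs against a scratch file rather than /etc/default/observability-pipelines-worker.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set NAME=VALUE in an environment file, replacing an
# existing NAME= line or appending one if the variable is missing.
set_env_var() {
  local file="$1" name="$2" value="$3" tmp
  tmp="$(mktemp)"
  # Copy every line except an existing assignment of this variable.
  # grep exits non-zero when nothing is left to copy, so ignore its status.
  grep -v "^${name}=" "$file" > "$tmp" || true
  printf '%s=%s\n' "$name" "$value" >> "$tmp"
  cat "$tmp" > "$file"   # overwrite in place so the file keeps its owner and mode
  rm -f "$tmp"
}

# Demonstration on a scratch file, not the real environment file.
demo="$(mktemp)"
printf 'DD_SITE=datadoghq.com\nDD_OP_SOURCE_DATADOG_AGENT_ADDRESS=0.0.0.0:8282\n' > "$demo"
set_env_var "$demo" DD_OP_SOURCE_DATADOG_AGENT_ADDRESS 0.0.0.0:8383
cat "$demo"
rm -f "$demo"
```

On a real host, the function would need root privileges to write the Worker's environment file, and the Worker must be restarted afterwards for the change to take effect.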

See Update Existing Pipelines if you want to make changes to your pipeline’s configuration.

Note: See Add domains to firewall allowlist if you are using a firewall.

For RHEL and CentOS, the Observability Pipelines Worker supports versions 8.0 or later.

- Set up the Datadog rpm repo on your system with the following command.

  Note: If you are running RHEL 8.1 or CentOS 8.1, use repo_gpgcheck=0 instead of repo_gpgcheck=1 in the configuration below.

      cat <<EOF > /etc/yum.repos.d/datadog-observability-pipelines-worker.repo
      [observability-pipelines-worker]
      name = Observability Pipelines Worker
      baseurl = https://yum.datadoghq.com/stable/observability-pipelines-worker-2/\$basearch/
      enabled=1
      gpgcheck=1
      repo_gpgcheck=1
      gpgkey=https://keys.datadoghq.com/DATADOG_RPM_KEY_CURRENT.public
             https://keys.datadoghq.com/DATADOG_RPM_KEY_B01082D3.public
      EOF

- Update your packages and install the Worker:

      sudo yum makecache
      sudo yum install observability-pipelines-worker

- If you are using:

  - Secrets Management: Add your API key, site (for example, datadoghq.com for US1), and pipeline ID to the Worker’s environment file:

        sudo tee /etc/default/observability-pipelines-worker > /dev/null <<-EOF
        DD_API_KEY=<DATADOG_API_KEY>
        DD_OP_PIPELINE_ID=<PIPELINE_ID>
        DD_SITE=<DATADOG_SITE>
        EOF

  - Environment variables: Add your API key, site (for example, datadoghq.com for US1), pipeline ID, source, and destination environment variables to the Worker’s environment file:

        sudo tee /etc/default/observability-pipelines-worker > /dev/null <<-EOF
        DD_API_KEY=<DATADOG_API_KEY>
        DD_OP_PIPELINE_ID=<PIPELINE_ID>
        DD_SITE=<DATADOG_SITE>
        <SOURCE_ENV_VARIABLES>
        <DESTINATION_ENV_VARIABLES>
        EOF

- Start the Worker:

      sudo systemctl restart observability-pipelines-worker

- Navigate back to the Observability Pipelines installation page and click Deploy.

Note: The environment variables used by the Worker in /etc/default/observability-pipelines-worker are not updated on subsequent runs of the install script. If changes are needed, update the file manually and restart the Worker.

See Update Existing Pipelines if you want to make changes to your pipeline’s configuration.

Note: See Add domains to firewall allowlist if you are using a firewall.

Upgrade the Worker

To upgrade the Worker to the latest version, run the command for your package manager:

- APT:

      sudo apt-get install --only-upgrade observability-pipelines-worker

- RPM:

      sudo yum upgrade observability-pipelines-worker

Uninstall the Worker

If you want to uninstall the Worker, run the commands for your package manager:

- APT:

      sudo apt-get remove --purge observability-pipelines-worker

- RPM:

      yum remove observability-pipelines-worker
      rpm -q --configfiles observability-pipelines-worker

Index your Worker logs

Make sure your Worker logs are indexed in Log Management for optimal functionality. The logs provide deployment information, such as Worker status, version, and any errors, that is shown in the UI. The logs are also helpful for troubleshooting Worker or pipeline issues. All Worker logs have the tag source:op_worker.

Add domains to firewall allowlist

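If you are using a firewall, these domains must be added to the allowlist:

- api.<DD_SITE>

- config.<DD_SITE>

- http-intake.<DD_SITE>

- keys.datadoghq.com

If you install the Worker with the install script or from the RPM repository, these domains must be added to the allowlist:

- api.<DD_SITE>

- config.<DD_SITE>

- http-intake.<DD_SITE>

- install.<DD_SITE>

- yum.datadoghq.com

- keys.datadoghq.com

Replace <DD_SITE> with your Datadog site (for example, datadoghq.com for US1).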
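To turn the first allowlist above into concrete hostnames, the site value can be substituted with a short loop. This is a minimal sketch: `opw_allowlist` is a hypothetical helper name, and it covers only the four domains in the first list.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the first allowlist for a given Datadog site.
opw_allowlist() {
  local site="$1" prefix
  for prefix in api config http-intake; do
    printf '%s.%s\n' "$prefix" "$site"
  done
  # This entry does not vary by site in the list above.
  echo "keys.datadoghq.com"
}

opw_allowlist datadoghq.com
```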
