Logs Troubleshooting

If you experience unexpected behavior with Datadog Logs, this guide covers a few common issues you can investigate and may help you resolve them quickly. If you continue to have trouble, contact Datadog support for further assistance.

Missing logs - data access restrictions

You cannot see any logs in the Log Explorer or Live Tail. This may happen because your role is part of a restriction query.

If you are unable to access your Restriction Queries in Datadog, contact your Datadog Administrator to verify whether your role is affected.

See Check Restrictions Queries for more information on configuring Logs RBAC data access controls.

Legacy Permissions can also restrict access to Logs, particularly in the Log Explorer. Depending on configuration, access may be limited to specific indexes or to a single index at a time. For more information on how Legacy Permissions are applied at the role and organization level, see Legacy Permissions.

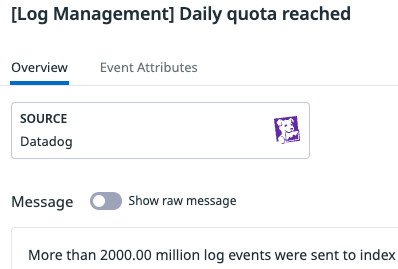

Missing logs - logs daily quota reached

You have not made any changes to your log configuration, but the Log Explorer shows that logs are missing for today. This may be happening because you have reached your daily quota.

See Set daily quota for more information on setting up, updating or removing the quota.

To check whether a daily quota was reached in the past, search the Event Explorer for the tag datadog_index:{index_name}.
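The same search can be run programmatically. The sketch below only builds the request and does not send it. It assumes the v2 Events search endpoint (POST /api/v2/events/search) on the US1 site (api.datadoghq.com) and API and application keys that you supply; the helper name build_quota_event_search and the default 30-day range are illustrative choices. Confirm the request shape against the Events API reference before relying on it.

```python
import json
import urllib.request

# Assumption: US1 site. Other Datadog sites use a different API host.
API_HOST = "https://api.datadoghq.com"


def build_quota_event_search(index_name: str, api_key: str, app_key: str,
                             time_from: str = "now-30d",
                             time_to: str = "now") -> urllib.request.Request:
    """Build (but do not send) an Events search filtered on one index tag."""
    body = {
        "filter": {
            # Same tag you would type in the Event Explorer search bar.
            "query": f"datadog_index:{index_name}",
            "from": time_from,
            "to": time_to,
        }
    }
    return urllib.request.Request(
        url=f"{API_HOST}/api/v2/events/search",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "DD-API-KEY": api_key,
            "DD-APPLICATION-KEY": app_key,
        },
        method="POST",
    )


# Usage (sends the request; requires valid keys):
# with urllib.request.urlopen(build_quota_event_search("main", API_KEY, APP_KEY)) as resp:
#     print(json.load(resp))
```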

Missing logs - timestamp outside of the ingestion window

Logs with a timestamp further than 18 hours in the past are dropped at intake.

Fix the issue at the source by checking which service and source are impacted with the datadog.estimated_usage.logs.drop_count metric.
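To catch stale logs before they reach intake, a shipper can compare each log's timestamp against the 18-hour window. This is a minimal client-side sketch with a hypothetical helper name; it assumes timezone-aware timestamps and does not replace checking the drop_count metric.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

# Logs older than this are dropped at intake (per the section above).
MAX_LOG_AGE = timedelta(hours=18)


def is_within_ingestion_window(log_time: datetime,
                               now: Optional[datetime] = None) -> bool:
    """Return True if log_time is at most 18 hours older than now."""
    if log_time.tzinfo is None:
        # Naive datetimes are ambiguous; require an explicit timezone.
        raise ValueError("log_time must be timezone-aware")
    if now is None:
        now = datetime.now(timezone.utc)
    return now - log_time <= MAX_LOG_AGE
```

A shipper could route logs that fail this check to a local file for investigation instead of sending them.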

Missing logs - timestamp not aligned with timezone

By default, Datadog parses all epoch timestamps in Logs using UTC. If incoming logs use a different timezone, timestamps may appear shifted by the corresponding offset from UTC. For example, logs sent from New York (EST, UTC-5) may appear 5 hours in the past, and logs sent from Australia (AEST, UTC+10) may appear 10 hours ahead of the expected time frame.
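The offset arithmetic can be checked with a short sketch. It assumes a log that carries a local wall-clock time without any offset, and it uses fixed UTC offsets for the two example locations (daylight saving time is ignored); the helper name is hypothetical.

```python
from datetime import datetime, timedelta, timezone

NEW_YORK_EST = timezone(timedelta(hours=-5))  # fixed offset, no DST
SYDNEY_AEST = timezone(timedelta(hours=10))   # fixed offset, no DST


def utc_misread_offset_hours(local_wall_clock: str, actual_tz: timezone) -> float:
    """Hours a local wall-clock time shifts when it is read as UTC.

    Negative: the log appears in the past. Positive: it appears ahead.
    """
    naive = datetime.strptime(local_wall_clock, "%Y-%m-%d %H:%M:%S")
    read_as_utc = naive.replace(tzinfo=timezone.utc)  # what a UTC parser assumes
    actual = naive.replace(tzinfo=actual_tz)          # when the event really happened
    return (read_as_utc - actual).total_seconds() / 3600
```

For "2024-01-15 10:00:00", the New York case yields -5.0 and the Sydney case yields 10.0, matching the shifts described above.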

To adjust the timezone of the logs during processing, see the footnotes in Datadog’s Parsing guide on using the timezone parameter with the date matcher.


Epoch timestamps can be adjusted using the timezone parameter in a Grok Parser processor. Follow these steps to convert a localized timestamp to UTC using the example in Datadog’s Grok Parser guide.

- Navigate to the Pipelines page.

- In Pipelines, select the pipeline that matches your logs.

- Open the Grok Parser processor that is parsing your logs.

- If a local host logs in UTC+1, adjust the date matcher to account for this offset. The resulting rule adds a comma after the date pattern, followed by a new string that sets the timezone to UTC+1.

- Verify that the Log Date Remapper is using the parsed attribute as the official timestamp for the matching logs.

Go to the Log Explorer and verify that the logs appear in line with their original timestamp.
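To know which UTC value the Log Explorer should show after the fix, you can reproduce the conversion locally. This sketch is not Datadog's parser: it assumes a hypothetical host that writes "YYYY-MM-DD HH:MM:SS" in UTC+1 and converts that value to UTC epoch milliseconds, the result that the timezone parameter on the date matcher is meant to produce.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical host that writes local wall-clock time in UTC+1, without an offset.
HOST_TZ = timezone(timedelta(hours=1))
EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def local_to_utc_epoch_ms(local_wall_clock: str,
                          fmt: str = "%Y-%m-%d %H:%M:%S") -> int:
    """Interpret local_wall_clock as HOST_TZ time; return UTC epoch milliseconds."""
    local = datetime.strptime(local_wall_clock, fmt).replace(tzinfo=HOST_TZ)
    # Subtracting aware datetimes applies the offset; integer division keeps exact ms.
    return (local - EPOCH) // timedelta(milliseconds=1)
```

For example, 2024-01-15 11:00:00 logged in UTC+1 corresponds to 10:00:00 UTC, or 1705312800000 milliseconds.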

Unable to parse timestamp key from JSON logs

Datadog requires timestamp attributes to use one of the supported date formats:

- ISO8601

- UNIX (epoch time in milliseconds)

- RFC3164

Timestamps that do not exactly match these formats may be dropped, even if they are similar (for example, epoch timestamps in nanoseconds).
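When you control the log source, converting timestamps to milliseconds before ingestion avoids the pipeline changes below. The following sketch infers the unit of an integer epoch value from its magnitude; that inference is an assumption that holds for present-day dates, so prefer an explicit unit if your logger documents one. The helper name is hypothetical.

```python
def epoch_to_millis(value: int) -> int:
    """Normalize an integer epoch timestamp to milliseconds.

    Assumption: the unit is inferred from magnitude. Present-day values have
    about 10 digits (s), 13 (ms), 16 (microseconds), or 19 (nanoseconds).
    """
    if value >= 10**17:   # nanoseconds
        return value // 1_000_000
    if value >= 10**14:   # microseconds
        return value // 1_000
    if value >= 10**11:   # already milliseconds
        return value
    return value * 1_000  # seconds
```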

If you are unable to convert the timestamp of JSON logs to a recognized date format before they are ingested into Datadog, follow these steps to convert and map the timestamps using Datadog’s arithmetic processor and log date remapper:

- Navigate to the Pipelines page.

- In Pipelines, hover over Preprocessing for JSON logs, and click the pencil icon.

- Remove the timestamp attribute from the reserved attribute mapping list so that it is no longer parsed as the official timestamp of the log during preprocessing.

- Set up the arithmetic processor so that the formula multiplies your timestamp by 1000 to convert it to milliseconds. The formula's result is stored in a new attribute.

- Set up the log date remapper to use the new attribute as the official timestamp.

Go to the Log Explorer to see new JSON logs with their mapped timestamp.
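If you manage pipelines through the API rather than the UI, the two processors from the steps above might be expressed as in this sketch. The field names reflect our reading of the Logs Pipelines API processor objects (arithmetic-processor and date-remapper) and should be verified against the API reference; timestamp_ms is a hypothetical name for the new attribute, and the formula assumes the source value is in seconds.

```python
from typing import Any, Dict, List

# Hypothetical name for the attribute that holds the converted value.
TARGET_ATTRIBUTE = "timestamp_ms"


def timestamp_processors(source_attribute: str = "timestamp") -> List[Dict[str, Any]]:
    """Return an arithmetic processor followed by a log date remapper."""
    return [
        {
            "type": "arithmetic-processor",
            "name": "Convert epoch seconds to milliseconds",
            "is_enabled": True,
            "expression": f"{source_attribute} * 1000",  # seconds -> milliseconds
            "target": TARGET_ATTRIBUTE,
            "is_replace_missing": False,
        },
        {
            "type": "date-remapper",
            "name": "Use converted value as official timestamp",
            "is_enabled": True,
            "sources": [TARGET_ATTRIBUTE],  # reads what the processor above wrote
        },
    ]
```

These objects would go in the processors list of a pipeline definition, in this order, so that the remapper reads the attribute the arithmetic processor creates.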

Truncated logs

Logs larger than 1 MB are truncated. To fix the issue at the source, use the datadog.estimated_usage.logs.truncated_count and datadog.estimated_usage.logs.truncated_bytes metrics to identify which service and source are affected.
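To find oversized logs before they are sent, you can measure the serialized size at the source. This sketch assumes logs are JSON objects and treats 1 MB as 1,000,000 bytes; the exact byte threshold is not stated here, so treat the constant as an assumption. The helper name is hypothetical.

```python
import json

# Assumption: "1 MB" interpreted as 1,000,000 bytes of UTF-8 JSON.
MAX_LOG_BYTES = 1_000_000


def exceeds_log_size_limit(log: dict) -> bool:
    """Return True if the compact JSON encoding of log is larger than MAX_LOG_BYTES."""
    payload = json.dumps(log, separators=(",", ":"), ensure_ascii=False)
    return len(payload.encode("utf-8")) > MAX_LOG_BYTES
```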

Truncated log messages

An additional field-level truncation applies only to indexed logs: values are truncated to 75 KiB for the message field and 25 KiB for all other fields. Datadog stores the full text, which remains visible in regular list queries in the Log Explorer. However, the truncated version is displayed when you run a grouped query, such as grouping logs by the truncated field, or any similar operation that displays that field.
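A similar check can flag fields that will display truncated in grouped queries. The sketch assumes the limits apply to the UTF-8 size of top-level string fields and uses a hypothetical helper name; nested attributes are not inspected.

```python
from typing import Any, Dict, List

MESSAGE_LIMIT = 75 * 1024      # 75 KiB for the message field
OTHER_FIELD_LIMIT = 25 * 1024  # 25 KiB for non-message fields


def fields_truncated_when_indexed(log: Dict[str, Any]) -> List[str]:
    """Return names of top-level string fields that exceed the indexed-field limits."""
    over = []
    for name, value in log.items():
        if not isinstance(value, str):
            continue  # only string values are measured in this sketch
        limit = MESSAGE_LIMIT if name == "message" else OTHER_FIELD_LIMIT
        if len(value.encode("utf-8")) > limit:
            over.append(name)
    return over
```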

Logs present in Live Tail but missing from the Log Explorer

Logging Without Limits™ decouples log ingestion from indexing, allowing you to retain the logs that matter most. When exclusion filters applied to indexes are too broad, they may exclude more logs than intended.

Review both exclusion filters and index filters carefully. Parsed and unparsed JSON logs can match index filters in unexpected ways, particularly when free-text search is used to exclude short strings. This can result in entire logs being dropped from indexing, even when they contain other valuable data. For details on the differences between full-text and free-text search, see Search Syntax.

Estimated Usage Metrics

If logs do not appear to be indexed, or are indexed at a lower or higher rate than expected, check the Estimated Usage Metric volumes in your Log Management dashboard.

Depending on the metric, tags such as datadog_index, datadog_is_excluded, service, and status are available for filtering. Use these tags to filter metrics such as datadog.estimated_usage.logs.ingested_events by exclusion status and by the index that is indexing or excluding the logs.

If the datadog_index tag is set to N/A for a metric datapoint, the corresponding logs do not match any index in your organization. Review index order and filter queries to identify potential exclusions.
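The same breakdown can be pulled from the metrics query API. This sketch assumes the v1 timeseries query endpoint (GET /api/v1/query) on the US1 site and a response whose series entries carry a tag_set list; the request still needs your keys in the DD-API-KEY and DD-APPLICATION-KEY headers. How the N/A value is spelled in tag_set is also an assumption, so the comparison is case-insensitive. Both helper names are hypothetical.

```python
import urllib.parse
from typing import Any, Dict, List

API_HOST = "https://api.datadoghq.com"  # assumption: US1 site

QUERY = ("sum:datadog.estimated_usage.logs.ingested_events{*}"
         " by {datadog_index,datadog_is_excluded}.as_count()")


def usage_query_url(from_ts: int, to_ts: int, query: str = QUERY) -> str:
    """Build the timeseries query URL for a time range in POSIX seconds."""
    params = urllib.parse.urlencode({"from": from_ts, "to": to_ts, "query": query})
    return f"{API_HOST}/api/v1/query?{params}"


def series_matching_no_index(response: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Return series whose datadog_index tag is N/A (logs that matched no index)."""
    return [
        series for series in response.get("series", [])
        if any(tag.lower() == "datadog_index:n/a" for tag in series.get("tag_set", []))
    ]
```

Any series returned by series_matching_no_index points to logs that no index filter captured, which is the case to investigate through index order and filter queries.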

Note: Estimated Usage Metrics do not respect Daily Quotas.

Create a support ticket

If the above troubleshooting steps do not resolve your issues with missing logs in Datadog, create a support ticket. If possible, include the following information:

| Information | Description |
|---|---|
| Raw log sample | Collect the log directly from the source generating it, based on your architecture or logger configuration. Attach the log to the support ticket as a text file or raw JSON. |
| Indexes configuration | If the log appears in Live Tail but not in the Log Explorer, include the response from the Get All Indexes API call. If your organization has many indexes, review Estimated Usage Metrics to identify the relevant index, then include the response from the Get an Index API call for that index. |
| Agent flare | If logs are sent using the Agent and do not appear anywhere in the Datadog UI, submit an Agent Flare with the support ticket. |
| Other sources | If logs are sent using a source other than the Agent, include details on the originating source of the logs (for example, Lambda Forwarder or Kinesis Firehose). |
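To collect the index configuration for the ticket, the Get All Indexes and Get an Index calls can be built as in this sketch. It assumes the /api/v1/logs/config/indexes path on the US1 site and only constructs the request; the helper name is hypothetical. Send it with urllib.request.urlopen and attach the JSON response.

```python
import urllib.parse
import urllib.request
from typing import Optional

API_HOST = "https://api.datadoghq.com"  # assumption: US1 site


def indexes_request(api_key: str, app_key: str,
                    index_name: Optional[str] = None) -> urllib.request.Request:
    """Build a GET request for all indexes, or for one index when index_name is set."""
    path = "/api/v1/logs/config/indexes"
    if index_name:
        # Quote the name so unusual characters cannot alter the path.
        path += "/" + urllib.parse.quote(index_name, safe="")
    return urllib.request.Request(
        url=API_HOST + path,
        headers={
            "Accept": "application/json",
            "DD-API-KEY": api_key,
            "DD-APPLICATION-KEY": app_key,
        },
        method="GET",
    )
```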