Create a Log Pipeline

Overview

Log pipelines parse, filter, and enrich incoming logs to make them searchable and actionable within Datadog. For Technology Partners, pipelines ensure that their logs are delivered in a structured, meaningful format right out of the box. For end users, prebuilt pipelines reduce the need to create custom parsing rules, allowing them to focus on troubleshooting and monitoring. Log pipelines are required for integrations that submit logs to Datadog.

This guide explains how to create a log pipeline, including best practices and requirements. For a hands-on learning experience, check out the related courses in the Datadog Learning Center.

Core concepts

Below are important concepts to understand before building your pipeline. See Log Pipelines to learn more.

Processors: The building blocks that transform or enrich logs. See Processors to learn more.

Facets: Provide a straightforward filtering interface for logs and improve readability by exposing clear, human-friendly labels for important attributes. See Facets to learn more.

Nested pipelines: Pipelines within a pipeline that allow you to split processing into separate paths for different log types or conditions.

Order matters: Pipelines and the processors within them run from top to bottom, so the sequence of processors determines the resulting log transformation.
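To illustrate why order matters, here is a minimal local simulation in Python. It is not Datadog's implementation: each processor is a hypothetical plain function that takes a log dict and returns a new one, and the pipeline applies them strictly top to bottom. A remapper placed before the parser that creates its input attribute has nothing to move.

```python
import re
from typing import Callable, Dict, List

Log = Dict[str, str]
Processor = Callable[[Log], Log]


def parse_client_ip(log: Log) -> Log:
    """Hypothetical parser: extract 'client=<ip>' from the raw message."""
    out = dict(log)
    match = re.search(r"client=(\S+)", out.get("message", ""))
    if match:
        out["client_ip"] = match.group(1)
    return out


def remap_client_ip(log: Log) -> Log:
    """Hypothetical remapper: move client_ip to the standard attribute name.

    A flat dotted key stands in for Datadog's nested network.client.ip path.
    """
    out = dict(log)
    if "client_ip" in out:
        out["network.client.ip"] = out.pop("client_ip")
    return out


def run_pipeline(log: Log, processors: List[Processor]) -> Log:
    # Apply processors strictly in list order, like a pipeline read top to bottom.
    for processor in processors:
        log = processor(log)
    return log


raw = {"message": "GET /health client=203.0.113.7"}
print(run_pipeline(raw, [parse_client_ip, remap_client_ip]))  # IP ends up at network.client.ip
print(run_pipeline(raw, [remap_client_ip, parse_client_ip]))  # remapper ran too early; IP stays at client_ip
```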

Building an integration log pipeline

To get started, make sure you’re a registered Technology Partner with access to a Datadog developer instance. If you haven’t joined yet, see Join the Datadog Partner Network.

- Create a pipeline that filters by your integration’s log source.

- Add processors to normalize and enrich logs.

- Create custom facets to improve user filtering and exploration.

- Validate and test the pipeline.

- Review log pipeline requirements.

- Export the pipeline and include it in your integration submission.

Create a pipeline

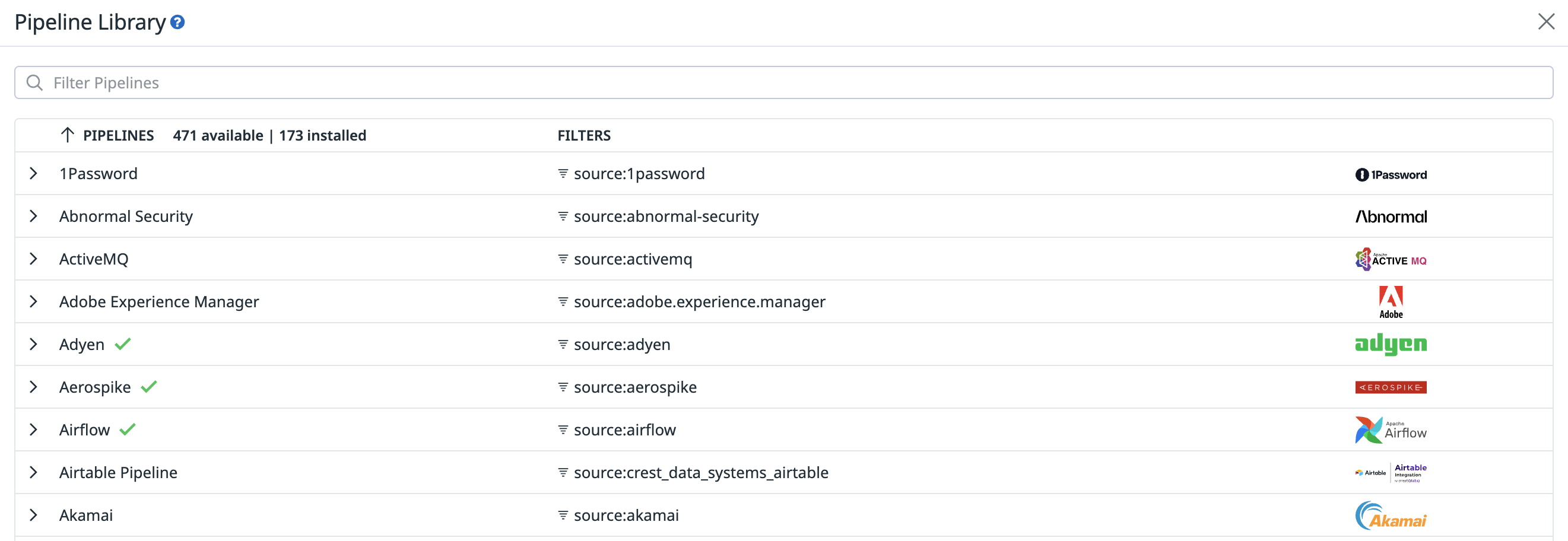

- Navigate to the Pipelines page and select New Pipeline.

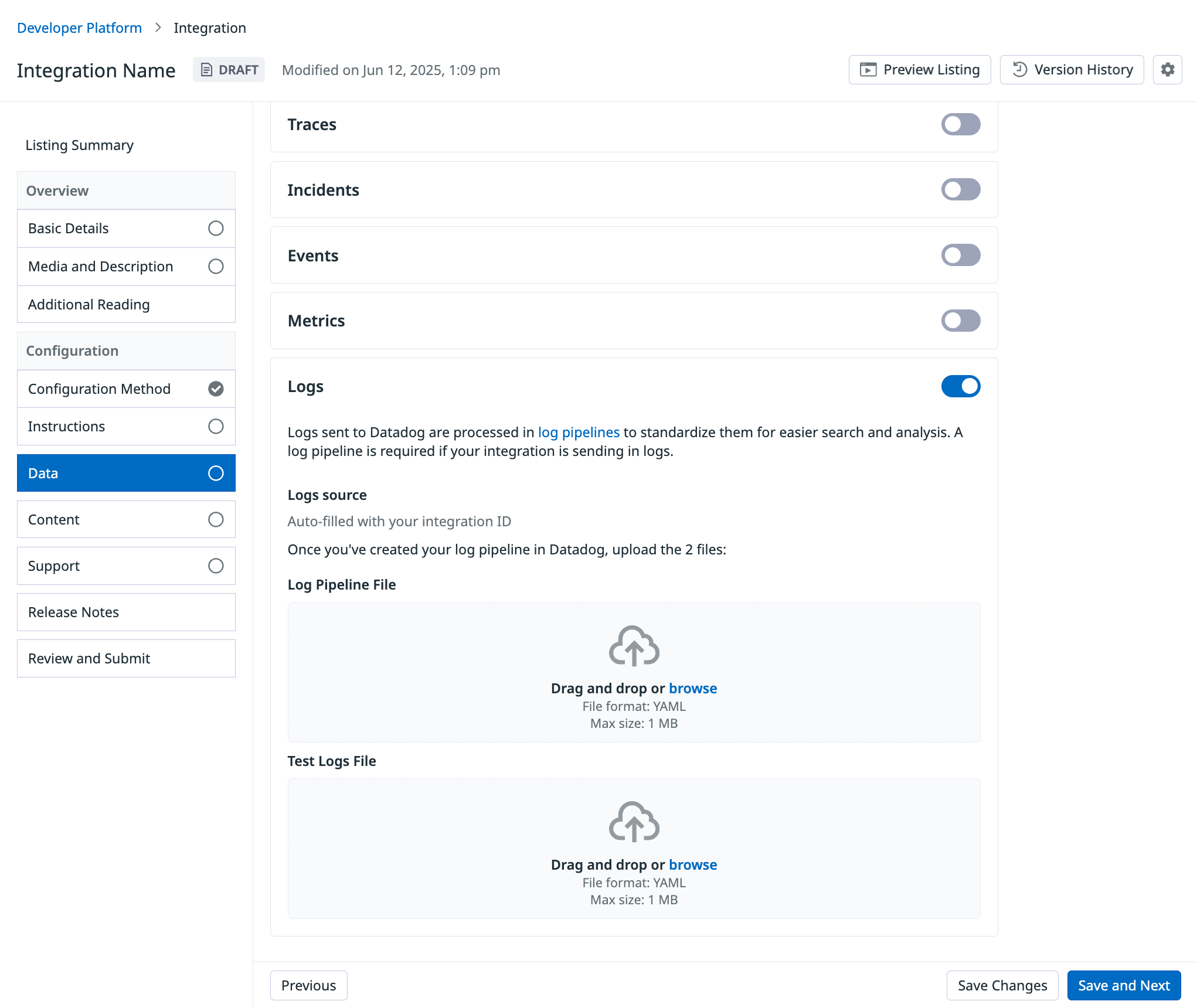

- In the Filter field, filter by your integration’s logs source (source:<logs_source>). Your logs source can be found in the Data tab when viewing your integration in the Developer Platform.

- (Optional) Add tags and a description.

- Click Create.
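The pipeline created in the UI can be pictured as a small definition: a name, an enabled flag, a filter query, and an ordered list of processors. The sketch below models that shape as a Python dict. The field names (name, is_enabled, filter.query, processors) follow our reading of the public Logs Pipelines API and should be checked against the API reference; acme_widgets is a hypothetical logs source, and the matches_filter helper understands only the single source:<value> form, not Datadog's full search syntax.

```python
def build_pipeline(logs_source: str) -> dict:
    """Return a hypothetical pipeline definition filtered on one logs source."""
    return {
        "name": f"{logs_source} pipeline",
        "is_enabled": True,  # a disabled pipeline never processes logs
        "filter": {"query": f"source:{logs_source}"},
        "processors": [],  # filled in during the Add processors step
    }


def matches_filter(log: dict, query: str) -> bool:
    """Evaluate only the simple 'source:<value>' query form (illustration only)."""
    field, sep, value = query.partition(":")
    if field != "source" or not sep:
        raise ValueError(f"unsupported query in this sketch: {query!r}")
    return log.get("source") == value


pipeline = build_pipeline("acme_widgets")
print(pipeline["filter"]["query"])  # source:acme_widgets
print(matches_filter({"source": "acme_widgets"}, pipeline["filter"]["query"]))  # True
print(matches_filter({"source": "nginx"}, pipeline["filter"]["query"]))  # False
```

An empty processors list is only a placeholder here; the requirements checklist below forbids publishing an empty pipeline.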

Add processors

To add a processor, open your newly created pipeline and select Add Processor. Use the following guidance to determine which processors to configure:

- Parse raw logs (if needed).

  - If your logs are not in JSON format, add a Grok Parser to extract attributes before remapping or enrichment.

    - To maintain optimal Grok parsing performance, avoid wildcard matchers.

  - Skip this step if logs are already sent to Datadog in JSON format.

- Normalize logs with Datadog Standard Attributes in mind.

  - Map incoming attributes to Datadog Standard Attributes so logs are consistent across the platform. For example, an attribute for a client IP value should be remapped to network.client.ip.

  - For reserved attributes (date, message, status, service), use the dedicated processors: Date Remapper, Message Remapper, Status Remapper, and Service Remapper.

    - Important: Remappers must be explicitly added for these attributes, even if the incoming value matches Datadog defaults. Organization-level overrides can change default behavior.

    - At a minimum, add a Date Remapper to map the log timestamp to the reserved date attribute.

  - For non-reserved standard attributes, use the general Remapper.

    - Unless updating an existing integration log pipeline, disable Preserve source attribute to prevent duplicate values.

- Enrich logs as needed.

  - For a list of all log processors, see the Processors documentation.

  - Consider adding processors that provide context or derived attributes, such as:

    - Geo IP Parser to add geolocation data based on an IP address field.

    - Category Processor to group logs by predefined rules.

    - Arithmetic Processor to compute numeric values from existing attributes.

    - String Builder Processor to concatenate multiple attributes into a single field.
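To make the parse-then-enrich sequence concrete, the following is a local Python approximation, not Datadog's processor implementation. A regular expression with named groups stands in for a Grok Parser rule (Datadog's Grok syntax is different; there, the data matcher plays the role of the .* wildcard), and plain functions stand in for the Category, Arithmetic, and String Builder Processors. The log format, attribute names, and category rules are hypothetical.

```python
import re
from typing import Callable, List, Tuple

# Hypothetical non-JSON log line emitted by an integration.
RAW = "2024-05-01T12:00:00Z status=503 user=alice action=checkout duration_ms=1250"

# Specific tokens (\S+, \d+) instead of wildcards such as .*: each group stops
# at whitespace, which keeps matching predictable and limits backtracking.
LINE_RE = re.compile(
    r"(?P<timestamp>\S+) status=(?P<status_code>\d+) user=(?P<user>\S+) "
    r"action=(?P<action>\S+) duration_ms=(?P<duration_ms>\d+)"
)


def parse(raw: str) -> dict:
    """Grok-like parsing step: extract attributes, keep the original message."""
    match = LINE_RE.fullmatch(raw)
    if match is None:
        return {"message": raw}  # unparsed logs pass through unchanged
    log = match.groupdict()
    log["status_code"] = int(log["status_code"])
    log["duration_ms"] = int(log["duration_ms"])
    log["message"] = raw
    return log


CategoryRule = Tuple[str, Callable[[dict], bool]]

# Ordered rules: this sketch stops at the first match, so the most specific rule goes first.
STATUS_RULES: List[CategoryRule] = [
    ("server_error", lambda log: log.get("status_code", 0) >= 500),
    ("client_error", lambda log: log.get("status_code", 0) >= 400),
    ("ok", lambda log: "status_code" in log),
]


def categorize(log: dict, target: str, rules: List[CategoryRule]) -> dict:
    """Category-style step: write the name of the first matching rule to target."""
    out = dict(log)
    for name, predicate in rules:
        if predicate(out):
            out[target] = name
            break
    return out


def add_duration_seconds(log: dict) -> dict:
    """Arithmetic-style step: derive a numeric attribute from an existing one."""
    out = dict(log)
    if "duration_ms" in out:
        out["duration_s"] = out["duration_ms"] / 1000
    return out


def build_summary(log: dict, template: str = "{user} ran {action}") -> dict:
    """String-builder-style step: join attributes; skip if any are missing."""
    out = dict(log)
    try:
        out["summary"] = template.format_map(out)
    except KeyError:
        pass
    return out


log = build_summary(add_duration_seconds(categorize(parse(RAW), "status_category", STATUS_RULES)))
print(log["status_category"], log["duration_s"], log["summary"])  # server_error 1.25 alice ran checkout
```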

Define custom facets

After logs are normalized and enriched, the next step is to create custom facets, which map individual attributes to user-friendly fields in Datadog.

Custom facets provide users with a consistent interface for filtering logs and power autocomplete in the Logs Explorer, making it easier to discover and aggregate important information. They also allow attributes with low readability to be renamed with clear, user-friendly labels. For example, @deviceCPUper can be given the label Device CPU Utilization Percentage.

Facets can be qualitative (for basic filtering and grouping) or quantitative, known as measures (for aggregation operations, such as averaging or range filtering). Create facets for attributes that users are most likely to filter, search, or group by in the Logs Explorer. See Facets to learn more.

Note: You do not need to create facets for Datadog Standard Attributes. These attributes are mapped to predefined facets, which Datadog automatically generates when a pipeline is published.
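Choosing between a facet and a measure, and picking its Type, depends on the values an attribute actually carries. As a rough heuristic only (a hypothetical helper, not how Datadog infers types), the sketch below inspects sample values and suggests one of the four types named in the requirements checklist: String, Boolean, Double, or Integer. Numeric types are flagged as measure candidates; strings and booleans as qualitative facets.

```python
from typing import Iterable


def suggest_facet_type(values: Iterable[object]) -> str:
    """Suggest String, Boolean, Double, or Integer from sample attribute values."""
    seen = set()
    for value in values:
        # bool is a subclass of int in Python, so check it before int.
        if isinstance(value, bool):
            seen.add("Boolean")
        elif isinstance(value, int):
            seen.add("Integer")
        elif isinstance(value, float):
            seen.add("Double")
        else:
            seen.add("String")
    if seen == {"Integer"}:
        return "Integer"
    if seen and seen <= {"Integer", "Double"}:
        return "Double"  # a mix of integers and decimals needs Double
    if seen == {"Boolean"}:
        return "Boolean"
    return "String"  # text, mixed types, or no samples at all


def suggest_kind(facet_type: str) -> str:
    """Numeric types are measure candidates; everything else is a facet."""
    return "Measure" if facet_type in {"Integer", "Double"} else "Facet"


samples = [0.42, 0.87, 1]
facet_type = suggest_facet_type(samples)
print(facet_type, suggest_kind(facet_type))  # Double Measure
```

The numeric-means-measure rule is only a starting point: a numeric identifier, for example, is usually more useful as a facet than as a measure.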

After you’ve identified the attributes that can benefit from facets, follow these steps for each:

- Navigate to the Logs Explorer.

- Click Add in the facets panel.

- Choose whether to create a Facet or Measure, then expand Advanced options.

- Define the Path.

  - Custom facets must include a prefix matching your logs source.

  - For example, a facet on project_name should use the path @<logs_source>.project_name.

- Edit the Display Name to be user-friendly.

  - For example, the facet path @<logs_source>.project_name should have the display name Project Name.

- Select the correct Type (and Unit if defining a Measure).

- Add a Group, which should match your integration name.

  - Use this same group for all additional custom facets in the integration.

- Add a Description explaining the facet.

- Click Add to save.

- Navigate back to the pipeline definition and add a Remapper to align the raw attribute with the prefixed path used by the facet.
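The last step pairs each facet with a Remapper that moves the raw attribute under the logs-source prefix. The sketch below mimics that locally, assuming a top-level raw attribute and a hypothetical acme_widgets logs source: it writes the value at the nested <logs_source>.<attribute> path, drops the original unless preserve_source is set (mirroring the Preserve source attribute option), and derives the matching facet path and a first-draft display name. It is an illustration, not Datadog's Remapper.

```python
import re
from copy import deepcopy


def set_path(log: dict, path: str, value: object) -> None:
    """Write value at a dotted path such as 'acme_widgets.project_name'."""
    *parents, leaf = path.split(".")
    node = log
    for key in parents:
        node = node.setdefault(key, {})  # create nested objects as needed
    node[leaf] = value


def prefix_remap(log: dict, logs_source: str, attribute: str, preserve_source: bool = False) -> dict:
    """Move a top-level attribute under the logs-source prefix."""
    out = deepcopy(log)
    if attribute not in out:
        return out
    value = out[attribute] if preserve_source else out.pop(attribute)
    set_path(out, f"{logs_source}.{attribute}", value)
    return out


def facet_path(logs_source: str, attribute: str) -> str:
    """Facet path as entered in the Logs Explorer: @<logs_source>.<attribute>."""
    return f"@{logs_source}.{attribute}"


def draft_display_name(attribute: str) -> str:
    """First draft only: split snake_case and camelCase, then capitalize words."""
    spaced = re.sub(r"([a-z0-9])([A-Z])", r"\1 \2", attribute).replace("_", " ")
    return " ".join(word.capitalize() for word in spaced.split())


raw = {"project_name": "checkout", "message": "build finished"}
print(prefix_remap(raw, "acme_widgets", "project_name"))
print(facet_path("acme_widgets", "project_name"))  # @acme_widgets.project_name
print(draft_display_name("project_name"))  # Project Name
```

A generated name is only a draft: for an abbreviation such as deviceCPUper it still reads poorly, so edit the Display Name by hand.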

Review requirements checklist

Prior to testing your pipeline, review the following requirements to avoid common mistakes.

- Log pipelines must not be empty: Every pipeline must contain at least one processor. At a minimum, include a Date Remapper.

- Add dedicated remappers for date, message, status, and service: Remappers must be explicitly added for these attributes, even if the incoming value matches Datadog defaults. Organization-level overrides can change default behavior.

- Disable Preserve source attribute when using a general Remapper: Enable this option only if the attribute is required for downstream processing, or if you are updating an existing pipeline and need to maintain backward compatibility.

- Do not duplicate existing Datadog facets: To avoid confusion with existing out-of-the-box Datadog facets, do not create custom facets that overlap with Datadog Standard Attributes.

- Use a custom prefix for custom facets: Attributes that do not map to a Datadog Standard Attribute must include a unique prefix when being mapped to a custom facet. Use the general Remapper to add a prefix.

- Group custom facets: Assign all custom facets to a group that matches your integration’s name.

- Match facet data types: Ensure the facet’s data type (String, Boolean, Double, or Integer) matches the type of the attribute it maps to. Mismatched types can prevent the facet from working correctly.

- Protect Datadog API and application keys: Never log API keys. Keys should only be passed in request headers, not in log messages.
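Several of these checklist items can be checked mechanically before testing. The sketch below assumes a pipeline described as a dict whose processors carry a type field; the type names (date-remapper, message-remapper, status-remapper, service-remapper, attribute-remapper) and the target and preserve_source fields are modeled on the public Logs Pipelines API, and the small STANDARD_ATTRIBUTES set is a hypothetical, incomplete sample. Treat it as a starting point to adapt, not an official validator; it does not cover facet duplication or facet types.

```python
from typing import List, Set

DEDICATED_REMAPPERS = {"date-remapper", "message-remapper", "status-remapper", "service-remapper"}

# Hypothetical, deliberately incomplete set of standard attribute paths.
STANDARD_ATTRIBUTES = {"network.client.ip", "http.status_code", "http.url", "duration"}


def check_pipeline(
    pipeline: dict,
    logs_source: str,
    standard: Set[str] = STANDARD_ATTRIBUTES,
    updating_existing: bool = False,
) -> List[str]:
    """Return readable problems; an empty list means these checks passed."""
    problems: List[str] = []
    processors = pipeline.get("processors", [])
    if not processors:
        problems.append("pipeline has no processors")
    present = {p.get("type") for p in processors}
    for missing in sorted(DEDICATED_REMAPPERS - present):
        problems.append(f"missing {missing}")
    for p in processors:
        if p.get("type") != "attribute-remapper":
            continue  # only general remappers carry target/preserve_source here
        target = p.get("target", "")
        if p.get("preserve_source", False) and not updating_existing:
            problems.append(f"preserve_source is enabled for {target}")
        if target not in standard and not target.startswith(f"{logs_source}."):
            problems.append(f"custom target {target} lacks the {logs_source}. prefix")
    return problems


good = {
    "processors": [
        {"type": "date-remapper", "sources": ["timestamp"]},
        {"type": "message-remapper", "sources": ["msg"]},
        {"type": "status-remapper", "sources": ["level"]},
        {"type": "service-remapper", "sources": ["app"]},
        {"type": "attribute-remapper", "sources": ["clientIp"],
         "target": "network.client.ip", "preserve_source": False},
        {"type": "attribute-remapper", "sources": ["project_name"],
         "target": "acme_widgets.project_name", "preserve_source": False},
    ]
}
bad = {"processors": [{"type": "attribute-remapper", "sources": ["project_name"],
                       "target": "project_name", "preserve_source": True}]}

print(check_pipeline(good, "acme_widgets"))  # []
for problem in check_pipeline(bad, "acme_widgets"):
    print(problem)
```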

Validate and test your log pipeline

Test your pipeline to confirm that logs are being parsed, normalized, and enriched correctly. For more complex pipelines, the Pipeline Scanner may be helpful.

- Generate new logs that flow through the pipeline.

  - Pipelines are automatically triggered if Datadog ingests a log matching the filter query.

  - If the pipeline is not triggered, ensure it is enabled with the toggle.

- Verify custom facets in the Logs Explorer by checking that they appear and filter results as expected.

- Inspect individual logs in the Log Details panel to ensure:

  - service, source, and message attributes are set correctly.

  - Tags are applied as expected.
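If you copy a processed log's attributes out of the Log Details panel, the same inspection can be repeated as a scripted check. The sketch assumes a simplified, hypothetical shape (top-level service, source, and message plus a tags list of key:value strings); the JSON Datadog displays may nest or name fields differently, so adapt the lookups.

```python
from typing import Dict, List


def check_processed_log(log: dict, expected: Dict[str, str], required_tags: List[str]) -> List[str]:
    """Compare reserved attributes and tags against what the pipeline should produce."""
    problems = []
    for key in ("service", "source", "message"):
        if not log.get(key):
            problems.append(f"{key} is missing or empty")
        elif key in expected and log[key] != expected[key]:
            problems.append(f"{key} is {log[key]!r}, expected {expected[key]!r}")
    tags = set(log.get("tags", []))
    for tag in required_tags:
        if tag not in tags:
            problems.append(f"tag {tag} not applied")
    return problems


processed = {
    "service": "acme-widgets",
    "source": "acme_widgets",
    "message": "build finished",
    "tags": ["env:dev", "source:acme_widgets"],
}
print(check_processed_log(processed, {"service": "acme-widgets", "source": "acme_widgets"}, ["env:dev"]))  # []
```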

Export and add your pipeline to the integration submission

The final step is to export the pipeline and upload the files to the Developer Platform.

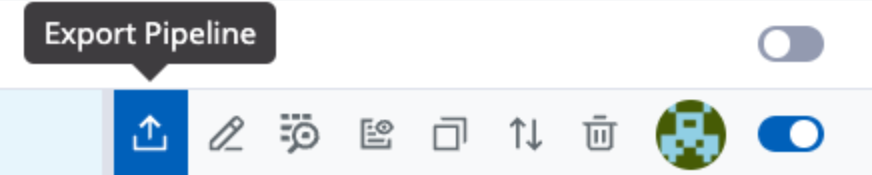

- Hover over your pipeline and select the Export Pipeline icon.

- Review the pipeline and click Select Facets.

- Select only the custom facets created for this integration and click Add Sample Logs.

  - Facets for Datadog Standard Attributes are automatically created by Datadog.

- Add sample logs.

  - These samples must be in the format in which logs are sent to the Datadog Logs API or ingested with the Datadog Agent.

  - Ensure the samples cover every variation handled by your remappers and other processors. For example, if you’ve defined a Category Processor, add samples that trigger different category values.

- Click Export.

  - Two YAML files are generated: one for the pipeline definition and one that Datadog uses internally for testing.

- Upload the two YAML files to the Developer Platform, under Data > Submitted Data > Logs.
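Sample logs are easier to keep complete when they are generated from the cases your processors handle. The sketch below builds one sample per branch of a hypothetical category rule on HTTP status (ok, client_error, server_error), using the ddsource, ddtags, hostname, message, and service fields accepted by the Datadog HTTP logs intake (v2). It also shows the API key travelling only in the DD-API-KEY request header, never in a log body. The intake URL is for the US1 site (other Datadog sites use different hosts), acme_widgets and the log lines are hypothetical, and the request is only built, not sent.

```python
import json
import urllib.request
from typing import List

# US1 intake endpoint; other Datadog sites use a different host.
INTAKE_URL = "https://http-intake.logs.datadoghq.com/api/v2/logs"

# One hypothetical raw line per category branch, so every rule is exercised.
CASES = {
    "ok": "2024-05-01T12:00:00Z status=200 user=alice action=view duration_ms=40",
    "client_error": "2024-05-01T12:00:01Z status=404 user=bob action=view duration_ms=12",
    "server_error": "2024-05-01T12:00:02Z status=503 user=alice action=checkout duration_ms=1250",
}


def sample_logs(logs_source: str, service: str) -> List[dict]:
    """Wrap each raw line in the fields used by the HTTP logs intake."""
    return [
        {
            "ddsource": logs_source,
            "ddtags": "env:dev",
            "hostname": "sample-host",
            "service": service,
            "message": line,
        }
        for line in CASES.values()
    ]


def build_request(logs: List[dict], api_key: str) -> urllib.request.Request:
    """Build (but do not send) an intake request; the key goes only in a header."""
    body = json.dumps(logs).encode("utf-8")
    return urllib.request.Request(
        INTAKE_URL,
        data=body,
        method="POST",
        headers={"DD-API-KEY": api_key, "Content-Type": "application/json"},
    )


request = build_request(sample_logs("acme_widgets", "acme-widgets"), api_key="<DD_API_KEY>")
print(request.get_method(), request.full_url)
```

Sending these samples to your developer instance also gives the pipeline fresh logs to validate against.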
