
Rehydrating from Archives

Overview

Log Rehydration* enables you to capture log events from customer-owned storage-optimized archives back into Datadog’s search-optimized Log Explorer, so that you can use Datadog to analyze or investigate log events that are either old or were excluded from indexing.

Historical views

With historical views, teams rehydrate archived log events precisely by timeframe and query filter to meet specific, unexpected use cases efficiently.

To create a historical view:

- Navigate to the Rehydrate From Archives page.

- Click New Historical View.

Index exclusion filters do not apply to historical views, so there is no need to modify exclusion filters when you rehydrate from archives.

If you download historical views as a CSV, the data is limited to the last 90 days.

Add new historical views

- Choose the time period for which you wish to rehydrate log events.

- Select the archive from which you wish to rehydrate log events. Only archives that are configured to use role delegation are available for rehydrating.

- (Optional) Estimate the scan size to get the total amount of compressed data contained in your archive for the selected timeframe.

- Name your historical view. Names must begin with a lowercase letter and can only contain lowercase letters, numbers, and the `-` character.

- Input the query. The query syntax is the same as that of the Log Explorer search. If you use tags (such as `env:prod` or `version:x.y.z`) in the rehydration query, make sure your logs are archived with their tags.

- Define the maximum number of logs that should be rehydrated in this historical view. If the limit is reached, the rehydration stops, but you still have access to the logs already rehydrated.

- Define the retention period of the rehydrated logs (available retentions are based on your contract; the default is 15 days).

- (Optional) Notify: trigger notifications on rehydration completion through integrations with the `@handle` syntax.
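The naming rule in the steps above can be checked locally before you create a view. This is a minimal Python sketch that encodes only the rule as stated; `is_valid_view_name` is a hypothetical helper, and Datadog's own validation may enforce further limits that this sketch does not know about.

```python
import re

# Assumption: this pattern encodes only the documented rule (first character is
# a lowercase letter; the rest are lowercase letters, digits, or "-").
# It is not Datadog's own validator.
VIEW_NAME_PATTERN = re.compile(r"[a-z][a-z0-9-]*")


def is_valid_view_name(name: str) -> bool:
    """Return True if `name` follows the historical view naming rule."""
    # fullmatch requires the whole string to match, so trailing characters
    # such as "_" or a newline make the name invalid.
    return VIEW_NAME_PATTERN.fullmatch(name) is not None


if __name__ == "__main__":
    for candidate in ["checkout-errors-2024", "Checkout", "9-lives", "env_prod"]:
        print(candidate, is_valid_view_name(candidate))
```

Only the first candidate passes: the others start with an uppercase letter or a digit, or contain an underscore.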

Note: The query is applied after the files matching the time period are downloaded from your archive. To reduce your cloud data transfer cost, reduce the selected date range.
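Because the query is applied only after the files are downloaded, the amount of data scanned depends on the time range, not on the query. The following Python sketch estimates that scan size from a bucket listing, in the spirit of the optional scan-size estimate step. It assumes archive objects are stored under hourly partitions named like `dt=20240101/hour=13/`; verify this against your own bucket. `estimate_scan_bytes` is a hypothetical helper, not part of any Datadog tool.

```python
import re
from datetime import datetime, timedelta

# Assumption: archive objects sit under hourly partitions such as
# "dt=20240101/hour=13/archive_....json.gz". Adjust if your layout differs.
PARTITION = re.compile(r"dt=(\d{8})/hour=(\d{2})/")


def estimate_scan_bytes(objects, start, end):
    """Sum compressed sizes of objects whose hourly partition overlaps [start, end).

    `objects` is an iterable of (key, size_in_bytes) pairs, for example from a
    bucket listing. The rehydration query is deliberately ignored: every file
    in the time range is downloaded before the query is applied.
    """
    total = 0
    for key, size in objects:
        match = PARTITION.search(key)
        if match is None:
            continue  # not inside a dt=/hour= partition; skip it
        hour_start = datetime.strptime(match.group(1) + match.group(2), "%Y%m%d%H")
        hour_end = hour_start + timedelta(hours=1)
        # Count the file if its hour overlaps the requested window at all.
        if hour_start < end and hour_end > start:
            total += size
    return total


if __name__ == "__main__":
    listing = [
        ("logs/dt=20240101/hour=12/archive_a.json.gz", 40_000_000),
        ("logs/dt=20240101/hour=13/archive_b.json.gz", 55_000_000),
        ("logs/dt=20240102/hour=00/archive_c.json.gz", 30_000_000),
    ]
    print(estimate_scan_bytes(listing, datetime(2024, 1, 1, 13), datetime(2024, 1, 1, 14)))
    print(estimate_scan_bytes(listing, datetime(2024, 1, 1), datetime(2024, 1, 3)))
```

Widening the window from one hour to two days pulls in every file in the listing, which is why narrowing the date range is the main lever on transfer cost.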

Rehydrate by query

By creating historical views with specific queries (for example, over one or more services, URL endpoints, or customer IDs), you can reduce the time and cost involved in rehydrating your logs. This is especially helpful when rehydrating over wider time ranges. You can rehydrate up to 1 billion log events per historical view you create.
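As an illustration of a narrowly scoped view, the sketch below assembles a query over several services plus extra attribute filters and keeps a requested limit within the 1 billion event cap. It assumes Log Explorer conventions (uppercase `OR`, parentheses for grouping, a space acting as AND); `@customer_id` is a hypothetical attribute, and both helper functions are illustrative only.

```python
# Per-view cap stated in this section.
MAX_LOGS_PER_VIEW = 1_000_000_000


def build_rehydration_query(services, filters=None):
    """Build a Log Explorer-style query scoped to services plus extra filters.

    Values are inserted as-is; quote any value that contains spaces.
    """
    parts = []
    if services:
        # Group the services so the OR does not bind to the other filters.
        parts.append("(" + " OR ".join(f"service:{s}" for s in services) + ")")
    for key, value in (filters or {}).items():
        parts.append(f"{key}:{value}")
    # A space between terms is treated as AND in Log Explorer search.
    return " ".join(parts)


def clamp_max_logs(requested):
    """Keep a requested per-view limit within the 1 billion event cap."""
    if requested <= 0:
        raise ValueError("limit must be positive")
    return min(requested, MAX_LOGS_PER_VIEW)


if __name__ == "__main__":
    # "@customer_id" is a hypothetical attribute used for illustration.
    print(build_rehydration_query(["web-store", "checkout"], {"@customer_id": "1234"}))
    print(clamp_max_logs(5_000_000_000))
```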

Notify

Events are triggered automatically when a rehydration starts and finishes. These events are available in your Events Explorer.

During the creation of a historical view, you can use the built-in template variables to customize the notification triggered at the end of the rehydration:

| Variable | Description |
|---|---|
| `{{archive}}` | Name of the archives used for the rehydration. |
| `{{from}}` | Start of the time range selected for the rehydration. |
| `{{to}}` | End of the time range selected for the rehydration. |
| `{{scan_size}}` | Total size of the files processed during the rehydration. |
| `{{number_of_indexed_logs}}` | Total number of rehydrated logs. |
| `{{explorer_url}}` | Direct link to the rehydrated logs. |
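Datadog fills in these variables itself when the rehydration finishes. To preview how a notification message might read, the following Python sketch performs the same kind of `{{name}}` substitution with hypothetical sample values; `render_notification` and the `@ops-team` handle are illustrative, not Datadog APIs.

```python
import re

# Matches {{name}} placeholders such as {{archive}} or {{scan_size}}.
PLACEHOLDER = re.compile(r"\{\{(\w+)\}\}")


def render_notification(template, values):
    """Fill {{name}} placeholders from `values`; unknown names are left as-is."""
    def substitute(match):
        name = match.group(1)
        return str(values[name]) if name in values else match.group(0)

    return PLACEHOLDER.sub(substitute, template)


if __name__ == "__main__":
    # "@ops-team" is a hypothetical notification handle.
    message = (
        "Rehydration from {{archive}} ({{from}} to {{to}}) indexed "
        "{{number_of_indexed_logs}} logs after scanning {{scan_size}}: {{explorer_url}} @ops-team"
    )
    # Hypothetical sample values standing in for what Datadog would supply.
    sample = {
        "archive": "prod-archive",
        "from": "2024-01-01T00:00:00Z",
        "to": "2024-01-02T00:00:00Z",
        "number_of_indexed_logs": 1250000,
        "scan_size": "4.2 GB",
        "explorer_url": "<explorer link>",
    }
    print(render_notification(message, sample))
```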

View historical view content

From the historical view page

After selecting “Rehydrate from Archive,” the historical view is marked as “pending” until its content is ready to be queried.

Once the content is rehydrated, the historical view is marked as active, and the link in the query column leads to the historical view in the Log Explorer.

From the Log Explorer

Alternatively, find the historical view in the Log Explorer directly from the index selector.

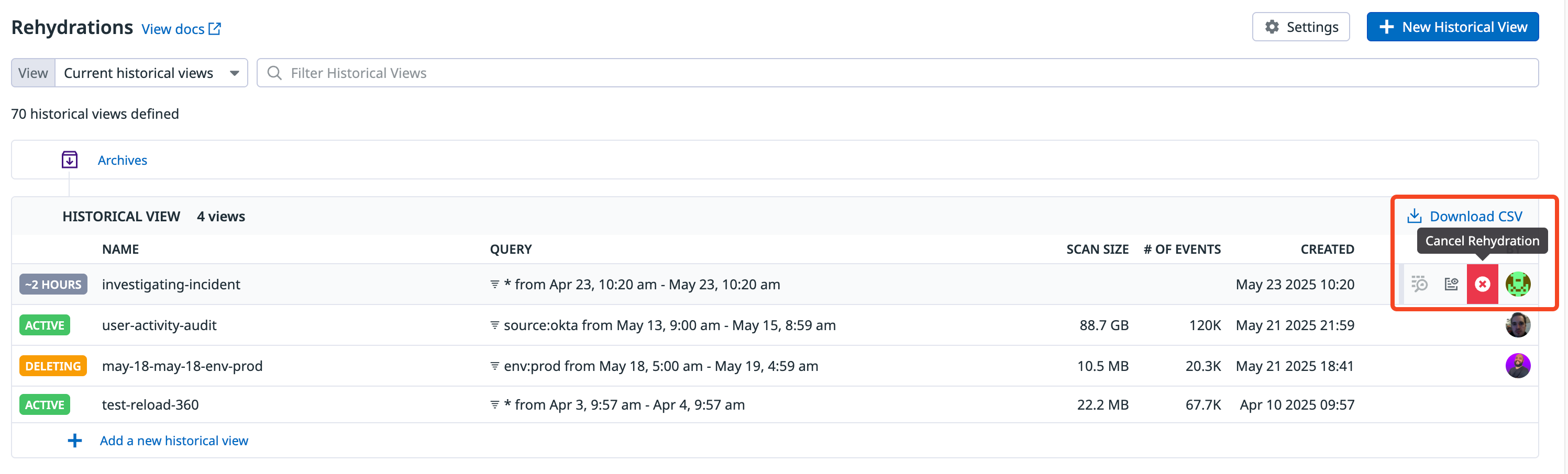

Canceling ongoing historical views

Cancel ongoing rehydrations directly from the Rehydrate from Archives page, for example if you started a rehydration with an incorrect time range or made a typo in your indexing query.

The logs already indexed will remain queryable until the end of the retention period selected for that historical view, and all the logs already scanned and indexed will still be billed.

Deleting historical views

Historical views stay in Datadog until they have exceeded the selected retention period, or you can opt to delete them sooner if you no longer need the view. You can mark a historical view to be deleted by selecting and confirming the delete icon at the far right of the historical view.

The historical view is permanently deleted one hour later; until then, your team can cancel the deletion.

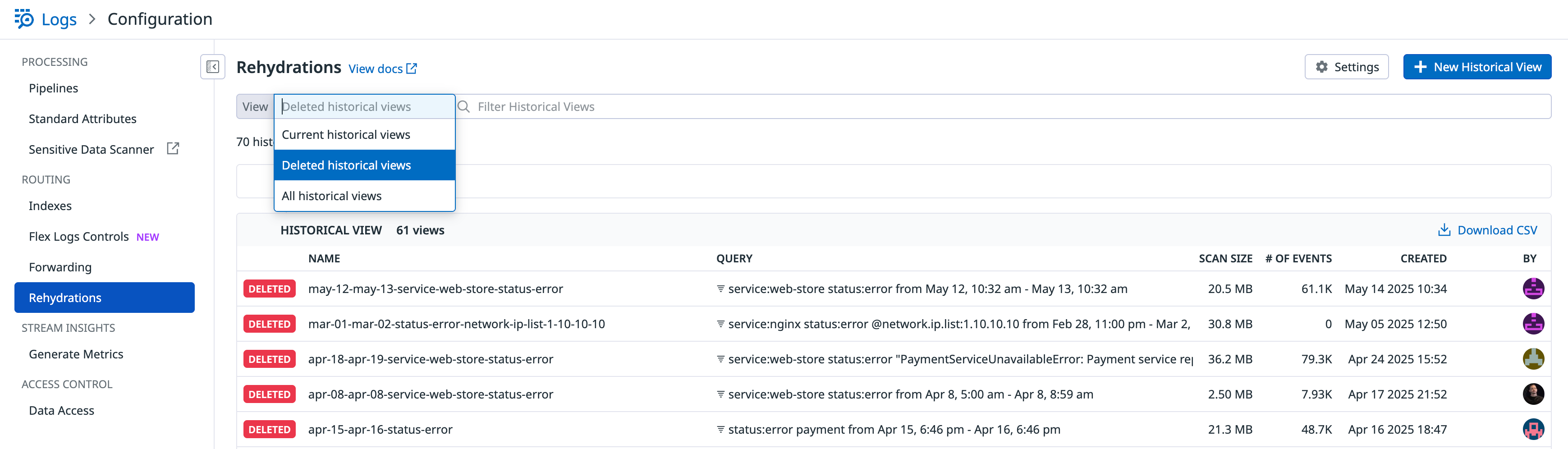
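The one-hour grace period can be modeled as a simple time comparison. This Python sketch assumes the window starts when the deletion is confirmed and that the view counts as deleted from exactly one hour onward; `deletion_state` is a hypothetical helper, not a Datadog API.

```python
from datetime import datetime, timedelta, timezone

# Assumption: the window starts at confirmation and deletion is final from
# exactly one hour onward.
GRACE_PERIOD = timedelta(hours=1)


def deletion_state(confirmed_at, now):
    """Return 'cancelable' while the deletion can still be undone, else 'deleted'."""
    if now < confirmed_at:
        raise ValueError("now must not be earlier than confirmed_at")
    return "cancelable" if now - confirmed_at < GRACE_PERIOD else "deleted"


if __name__ == "__main__":
    confirmed = datetime(2024, 5, 1, 9, 0, tzinfo=timezone.utc)
    print(deletion_state(confirmed, confirmed + timedelta(minutes=45)))
    print(deletion_state(confirmed, confirmed + timedelta(minutes=61)))
```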

Viewing deleted historical views

View deleted historical views for up to 1 year in the past using the View dropdown menu:

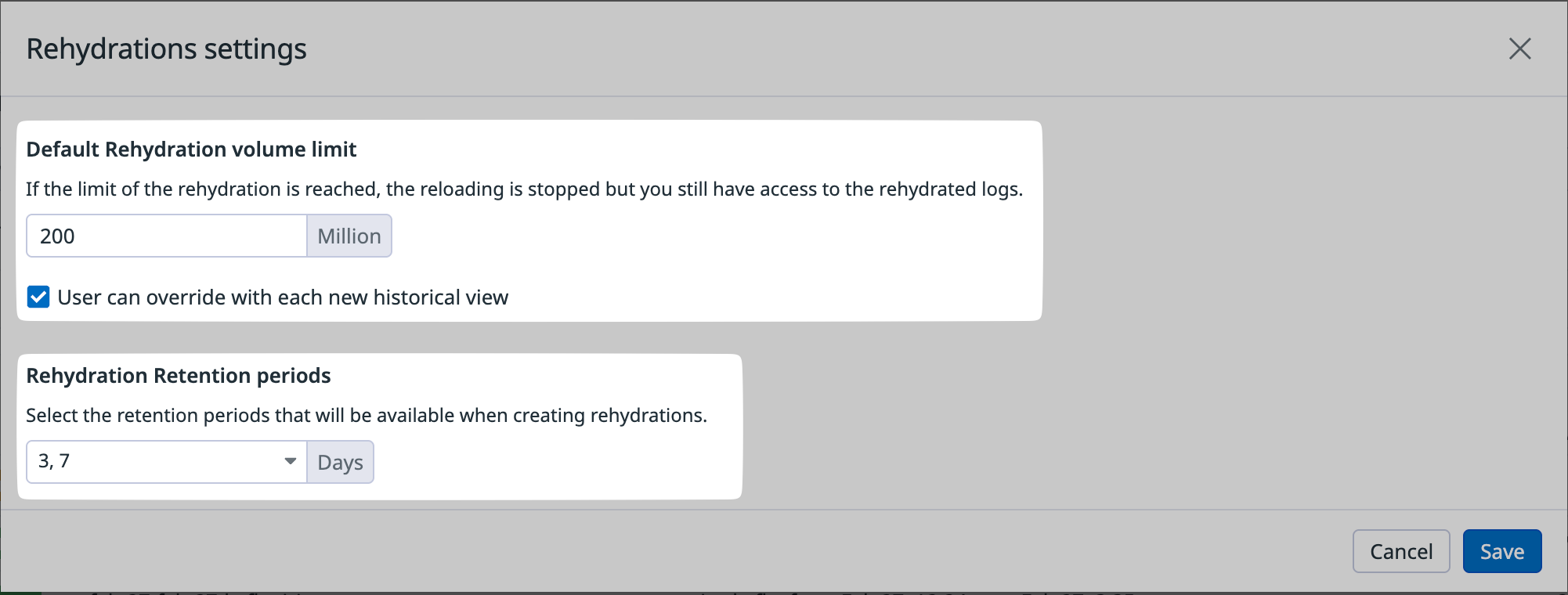

Set default limits for historical views

Admins with the Logs Write Archives permission can configure default controls to ensure efficient use of log rehydration across teams.

These settings can be configured from the Rehydration settings panel, available from the Historical Views page.

- Default rehydration volume limit: Define the default number of logs (in millions) that can be rehydrated per historical view. If the limit is reached, the rehydration automatically stops, but already rehydrated logs remain accessible. Admins can also allow this limit to be overridden during view creation.

- Retention period selector: Choose which retention periods are available when creating rehydrations. Only the selected durations (for example, 3, 7, 15, 30, 45, 60, 90, or 180 days) appear in the dropdown menu when selecting how long logs should remain searchable in Datadog.
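To make the interaction of these two settings concrete, here is a hypothetical Python model of them. It assumes the example durations listed above stand in for the periods your contract offers, and its field and method names are illustrative; they are not the names used by the settings panel or any Datadog API.

```python
from dataclasses import dataclass

# Assumption: the example durations above stand in for the periods your
# contract actually offers.
OFFERED_RETENTION_DAYS = (3, 7, 15, 30, 45, 60, 90, 180)


@dataclass(frozen=True)
class RehydrationDefaults:
    default_limit_millions: int  # default per-view volume limit, in millions of logs
    allow_override: bool  # whether view creators may change the limit
    enabled_retention_days: tuple = (15,)  # durations shown in the dropdown

    def __post_init__(self):
        if self.default_limit_millions <= 0:
            raise ValueError("default limit must be positive")
        unknown = set(self.enabled_retention_days) - set(OFFERED_RETENTION_DAYS)
        if unknown:
            raise ValueError(f"retention periods not offered: {sorted(unknown)}")

    def limit_for_view(self, requested_millions=None):
        """Number of logs a new view may rehydrate before it stops automatically."""
        millions = self.default_limit_millions
        # A requested value only takes effect when admins allow overrides.
        if requested_millions is not None and self.allow_override:
            millions = requested_millions
        return millions * 1_000_000

    def retention_dropdown(self):
        """Retention choices offered when creating a view, shortest first."""
        return sorted(self.enabled_retention_days)


if __name__ == "__main__":
    defaults = RehydrationDefaults(50, allow_override=False, enabled_retention_days=(30, 7, 15))
    print(defaults.limit_for_view(), defaults.limit_for_view(500), defaults.retention_dropdown())
```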

Setting up archive rehydrating

Define a Datadog archive

An external archive must be configured in order to rehydrate data from it. Follow the guide to archive your logs in the available destinations.

Permissions

Datadog requires the permission to read from your archives in order to rehydrate content from them. This permission can be changed at any time.

Amazon S3

To rehydrate log events from your archives, Datadog uses the IAM role in your AWS account that you configured for your AWS integration. If you have not yet created that role, follow these steps to do so. To allow that role to rehydrate log events from your archives, add the following permission statement to its IAM policies. Be sure to edit the bucket names and, if desired, specify the paths that contain your log archives.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "DatadogUploadAndRehydrateLogArchives",
      "Effect": "Allow",
      "Action": ["s3:PutObject", "s3:GetObject"],
      "Resource": [
        "arn:aws:s3:::<MY_BUCKET_NAME_1_/_MY_OPTIONAL_BUCKET_PATH_1>/*",
        "arn:aws:s3:::<MY_BUCKET_NAME_2_/_MY_OPTIONAL_BUCKET_PATH_2>/*"
      ]
    },
    {
      "Sid": "DatadogRehydrateLogArchivesListBucket",
      "Effect": "Allow",
      "Action": "s3:ListBucket",
      "Resource": [
        "arn:aws:s3:::<MY_BUCKET_NAME_1>",
        "arn:aws:s3:::<MY_BUCKET_NAME_2>"
      ]
    }
  ]
}
```
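If you manage several archive buckets, the policy above can be generated rather than edited by hand. This Python sketch produces the same two statements from a list of `(bucket, optional_path)` pairs; `build_rehydration_policy` and the bucket names are hypothetical, and you should still review the output before attaching it to your role.

```python
import json


def build_rehydration_policy(archives):
    """Build the rehydration IAM policy for (bucket, optional_path) pairs."""
    object_arns = []
    bucket_arns = []
    for bucket, path in archives:
        cleaned = (path or "").strip("/")  # tolerate None, "", or stray slashes
        prefix = f"{bucket}/{cleaned}" if cleaned else bucket
        object_arns.append(f"arn:aws:s3:::{prefix}/*")
        bucket_arn = f"arn:aws:s3:::{bucket}"
        if bucket_arn not in bucket_arns:  # ListBucket applies to the bucket itself
            bucket_arns.append(bucket_arn)
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DatadogUploadAndRehydrateLogArchives",
                "Effect": "Allow",
                "Action": ["s3:PutObject", "s3:GetObject"],
                "Resource": object_arns,
            },
            {
                "Sid": "DatadogRehydrateLogArchivesListBucket",
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": bucket_arns,
            },
        ],
    }


if __name__ == "__main__":
    # Hypothetical bucket names and path.
    policy = build_rehydration_policy([("my-log-archive", "datadog/prod"), ("my-other-archive", None)])
    print(json.dumps(policy, indent=2))
```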

Adding role delegation to S3 archives

Datadog only supports rehydrating from archives that have been configured to use role delegation to grant access. Once you have modified your Datadog IAM role to include the IAM policy above, ensure that each archive in your archive configuration page has the correct AWS Account + Role combination.

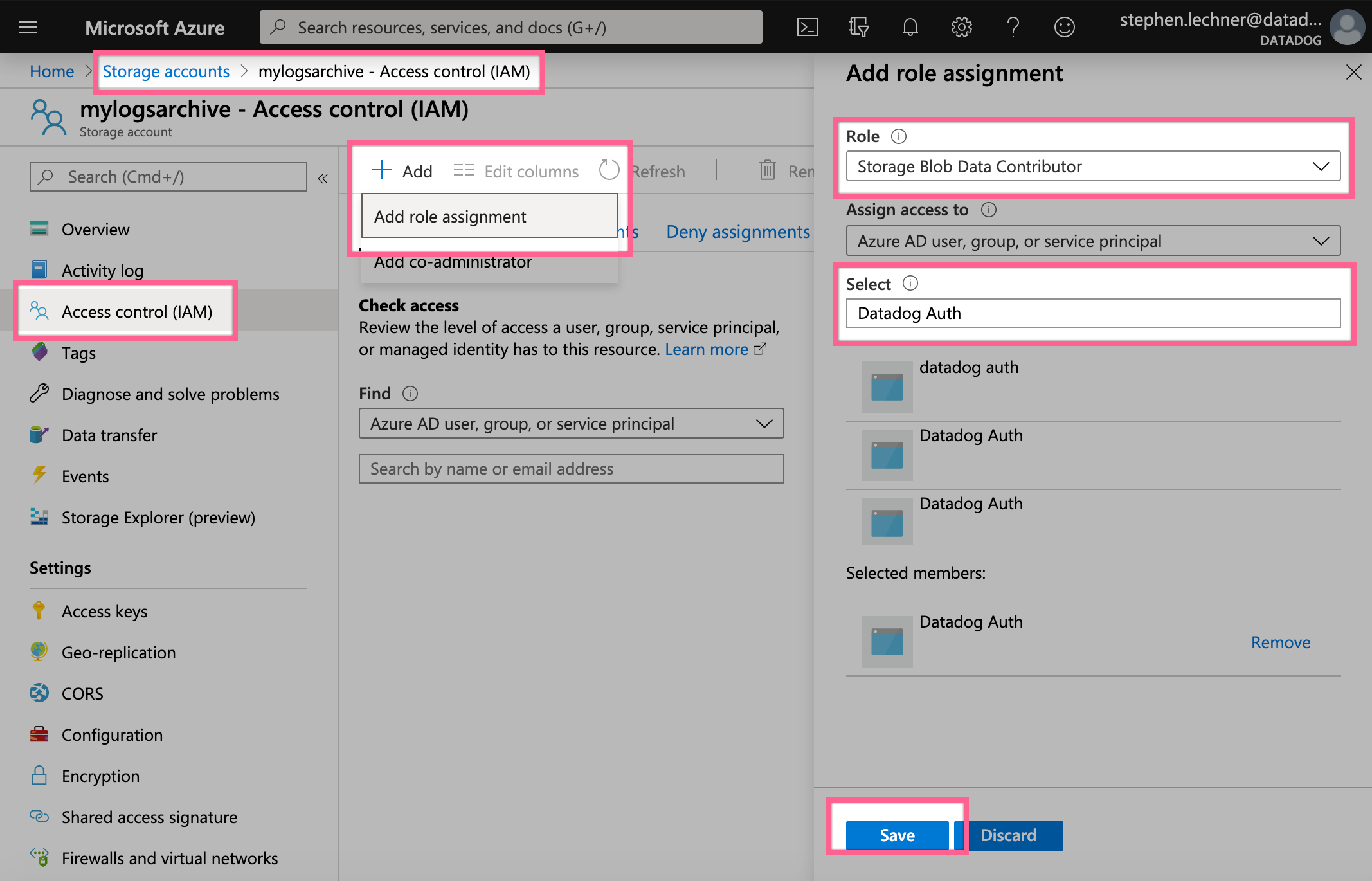
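With many archives, a quick consistency check can flag any archive whose account and role pair does not match the role you just updated. The Python sketch below works on a hypothetical snapshot of archive settings; its field names are illustrative and do not mirror Datadog's archive configuration or API, and the role name `DatadogIntegrationRole` is an example only.

```python
import re


def archives_needing_attention(archives, account_id, role_name):
    """Return names of archives whose AWS account + role pair is missing or differs."""
    if not re.fullmatch(r"\d{12}", account_id):
        raise ValueError("AWS account IDs are 12-digit numbers")
    expected = (account_id, role_name)
    return [
        archive["name"]
        for archive in archives
        # A missing field yields None, so unconfigured archives are flagged too.
        if (archive.get("account_id"), archive.get("role_name")) != expected
    ]


if __name__ == "__main__":
    # Hypothetical archive settings.
    configured = [
        {"name": "prod-archive", "account_id": "123456789012", "role_name": "DatadogIntegrationRole"},
        {"name": "legacy-archive"},  # no role delegation configured
        {"name": "staging-archive", "account_id": "123456789012", "role_name": "OldRole"},
    ]
    print(archives_needing_attention(configured, "123456789012", "DatadogIntegrationRole"))
```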

Azure Storage

Datadog uses an Azure AD group with the Storage Blob Data Contributor role scoped to your archives’ storage account to rehydrate log events. You can grant this role to your Datadog service account from your storage account’s Access Control (IAM) page by assigning the Storage Blob Data Contributor role to your Datadog integration app.
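If you prefer the command line to the Access Control (IAM) page, the same assignment can be made with the Azure CLI's `az role assignment create`. This Python sketch only builds and prints that command; every identifier is a placeholder you must substitute, and `azure_role_assignment_cmd` is a hypothetical helper.

```python
import shlex


def azure_role_assignment_cmd(app_id, subscription_id, resource_group, storage_account):
    """Return an `az` command granting Storage Blob Data Contributor on one storage account."""
    scope = (
        f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
        f"/providers/Microsoft.Storage/storageAccounts/{storage_account}"
    )
    return [
        "az", "role", "assignment", "create",
        "--assignee", app_id,  # client ID of the Datadog integration app
        "--role", "Storage Blob Data Contributor",
        "--scope", scope,  # limit the grant to the archive's storage account
    ]


if __name__ == "__main__":
    # Placeholders only; the command is printed, not executed.
    print(shlex.join(azure_role_assignment_cmd(
        "<DATADOG_APP_CLIENT_ID>", "<SUBSCRIPTION_ID>", "<RESOURCE_GROUP>", "<STORAGE_ACCOUNT>")))
```

`shlex.join` quotes the multi-word role name so the printed command can be pasted into a shell.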

Google Cloud Storage

To rehydrate log events from your archives, Datadog uses a service account with the Storage Object Viewer role. You can grant this role to your Datadog service account from the Google Cloud IAM Admin page by editing the service account’s permissions, adding another role, and then selecting Storage > Storage Object Viewer.
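The same project-level grant can be made with `gcloud projects add-iam-policy-binding`, where `roles/storage.objectViewer` is the role ID of Storage Object Viewer. This Python sketch only builds and prints the command; the project ID and service account email are placeholders, and `gcp_object_viewer_cmd` is a hypothetical helper.

```python
import shlex


def gcp_object_viewer_cmd(project_id, service_account_email):
    """Return a `gcloud` command granting Storage Object Viewer at the project level."""
    return [
        "gcloud", "projects", "add-iam-policy-binding", project_id,
        f"--member=serviceAccount:{service_account_email}",
        "--role=roles/storage.objectViewer",  # role ID of "Storage Object Viewer"
    ]


if __name__ == "__main__":
    # Placeholders only; the command is printed, not executed.
    print(shlex.join(gcp_object_viewer_cmd("<PROJECT_ID>", "<DATADOG_SERVICE_ACCOUNT_EMAIL>")))
```

A project-level binding, like the IAM Admin page flow, covers every bucket in the project. To scope read access to the archive bucket alone, `gcloud storage buckets add-iam-policy-binding gs://<BUCKET>` accepts the same `--member` and `--role` flags.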

*Log Rehydration is a trademark of Datadog, Inc.