Ragas Evaluations

Overview

Ragas is an open source library for evaluating and improving LLM applications. Ragas also provides LLM and non-LLM-based metrics to help assess the performance of your LLM application offline and in production. Datadog’s Ragas integration enables you to evaluate your production application with scores for faithfulness, answer relevancy, and context precision. You can use these scores to find traces that have a high likelihood of inaccurate answers and review them to improve your RAG pipeline.

For a simplified setup guide, see Ragas Quickstart.

Datadog recommends that you use sampling for Ragas evaluations. These LLM-as-a-judge evaluations are powered by your LLM provider's account. Evaluations are automatically traced and sent to Datadog. These traces contain LLM spans, which may affect your LLM Observability billing. See Sampling.

Evaluations

Faithfulness

The Faithfulness score evaluates how consistent an LLM’s generation is against the provided ground truth context data.

This score is generated through three steps:

- Creating statements: Asking another LLM to break down an answer into individual statements

- Creating verdicts: For each statement, determining whether it can be inferred from (is faithful to) the provided context

- Computing a score: Dividing the number of faithful statements by the total number of statements
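
The arithmetic of the final step can be sketched in a few lines of Python. This is an illustrative sketch only, not Datadog's or Ragas's implementation: the function name `faithfulness_score` is hypothetical, the LLM calls that produce statements and verdicts are omitted, and each verdict is assumed to be `True` when the statement is supported by the context, so that a higher score means a more faithful answer.

```python
from typing import Sequence


def faithfulness_score(verdicts: Sequence[bool]) -> float:
    """Return the fraction of statements judged faithful to the context.

    Each verdict is True when the statement is supported by the context
    (an assumed encoding for this sketch).
    """
    if not verdicts:
        raise ValueError("at least one statement is required")
    return sum(verdicts) / len(verdicts)


# Three of four statements are supported by the retrieved context.
score = faithfulness_score([True, True, False, True])
print(score)
```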

For more information, see Ragas’s Faithfulness documentation.

Answer Relevancy

The Answer Relevancy (or Response Relevancy) score assesses how pertinent the generated answer is to the given prompt. A lower score is assigned to answers that are incomplete or contain redundant information, and higher scores indicate better relevancy. This metric is computed using the question, the retrieved contexts, and the answer.

The Answer Relevancy score is defined as the mean cosine similarity of the original question to a number of artificial questions, which are generated (reverse engineered) based on the response.
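
As a rough illustration of this definition, the sketch below averages the cosine similarity between one question embedding and the embeddings of several generated questions. The function names and the toy two-dimensional vectors are assumptions made for the example. In practice the embeddings come from an embedding model, and the artificial questions come from an LLM.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("embeddings must be non-zero vectors")
    return dot / (norm_a * norm_b)


def answer_relevancy(
    question_embedding: Sequence[float],
    generated_question_embeddings: Sequence[Sequence[float]],
) -> float:
    """Mean cosine similarity of the original question to each generated one."""
    if not generated_question_embeddings:
        raise ValueError("at least one generated question is required")
    similarities = [
        cosine_similarity(question_embedding, generated)
        for generated in generated_question_embeddings
    ]
    return sum(similarities) / len(similarities)


# Toy embeddings: one generated question matches the original, one is orthogonal.
relevancy = answer_relevancy([1.0, 0.0], [[1.0, 0.0], [0.0, 1.0]])
print(relevancy)
```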

For more information, see Ragas’s Answer Relevancy documentation.

Context Precision

The Context Precision score assesses if the context was useful in arriving at the given answer.

This score is modified from Ragas’s original Context Precision metric, which computes the mean of the Precision@k for each chunk in the context. Precision@k is the ratio of the number of relevant chunks at rank k to the total number of chunks at rank k.

Datadog’s Context Precision score is computed by dividing the number of relevant contexts by the total number of contexts.
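
To make the difference concrete, here is a minimal sketch that computes the simplified ratio alongside Precision@k as described above. The function names and the list-of-booleans relevance format are assumptions made for illustration. This is not the code that either Datadog or Ragas runs, and the Ragas library may weight ranks differently when it aggregates Precision@k.

```python
from typing import Sequence


def context_precision_ratio(relevance: Sequence[bool]) -> float:
    """Simplified score: relevant contexts divided by all contexts."""
    if not relevance:
        raise ValueError("at least one context is required")
    return sum(relevance) / len(relevance)


def precision_at_k(relevance: Sequence[bool], k: int) -> float:
    """Relevant chunks among the top k, divided by k (ranks are 1-indexed)."""
    if not 1 <= k <= len(relevance):
        raise ValueError("k must be between 1 and the number of chunks")
    return sum(relevance[:k]) / k


# Relevance verdicts for four retrieved chunks, in rank order.
relevance = [True, False, True, True]
print(context_precision_ratio(relevance))  # does not depend on chunk order
print(precision_at_k(relevance, 2))        # depends on how chunks are ranked
```

The simplified ratio ignores ranking, so reordering the retrieved chunks leaves it unchanged, whereas Precision@k rewards placing relevant chunks first.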

For more information, see Ragas’s Context Precision documentation.

Setup

Datadog’s Ragas evaluations require ragas v0.1+ and ddtrace v3.0.0+.

- Install dependencies. Run the following command:

  ```shell
  pip install ragas==0.1.21 openai "ddtrace>=3.0.0"
  ```

  The Ragas integration automatically runs evaluations in the background of your application. By default, Ragas uses OpenAI’s GPT-4 model for evaluations, which requires you to set an `OPENAI_API_KEY` in your environment. You can also customize Ragas to use a different LLM.

- Instrument your LLM calls with RAG context information. Datadog’s Ragas integration attempts to extract context information from the prompt variables attached to a span.

  Examples:

  ```python
  from ddtrace.llmobs import LLMObs
  from ddtrace.llmobs.utils import Prompt

  # Attach the RAG context to every span created inside this block.
  with LLMObs.annotation_context(
      prompt=Prompt(
          variables={"context": "rag context here"},
          rag_context_variable_keys=["context"],  # defaults to ['context']
          rag_query_variable_keys=["question"],  # defaults to ['question']
      ),
      name="generate_answer",
  ):
      # oai_client is your existing OpenAI client.
      oai_client.chat.completions.create(...)
  ```

  ```python
  from ddtrace.llmobs import LLMObs
  from ddtrace.llmobs.decorators import llm
  from ddtrace.llmobs.utils import Prompt

  @llm(model_name="llama")
  def generate_answer():
      ...
      # Annotate the active LLM span with the RAG context.
      LLMObs.annotate(
          prompt=Prompt(variables={"context": "rag context..."})
      )
  ```

- (Optional, but recommended) Enable sampling. Datadog traces Ragas score generation. These traces contain LLM spans, which may affect your LLM Observability billing. See Sampling.

- Run your script and specify enabled Ragas evaluators. Use the environment variable `DD_LLMOBS_EVALUATORS` to provide a comma-separated list of Ragas evaluators you wish to enable. These evaluators are `ragas_faithfulness`, `ragas_context_precision`, and `ragas_answer_relevancy`.

  For example, to run your script with all Ragas evaluators enabled:

  ```shell
  DD_LLMOBS_EVALUATORS="ragas_faithfulness,ragas_context_precision,ragas_answer_relevancy" \
  DD_ENV=dev \
  DD_API_KEY=<YOUR-DATADOG-API-KEY> \
  DD_SITE=<YOUR-DATADOG-SITE> \
  python driver.py
  ```

Configuration

Sampling

To enable Ragas scoring for a sampled subset of LLM calls, use the DD_LLMOBS_EVALUATOR_SAMPLING_RULES environment variable. Pass in a list of objects, each containing the following fields:

| Field | Description | Required | Type |
|---|---|---|---|
| sample_rate | Sampling rate from 0 to 1 | Yes | Float |
| evaluator_label | Ragas evaluator to apply rule to | No | String |
| span_name | Name of spans to apply rule to | No | String |

In the following example, Ragas Faithfulness scoring is enabled for 50% of all answer_question spans. Ragas evaluations are disabled for all other spans ("sample_rate": 0).

```shell
export DD_LLMOBS_EVALUATOR_SAMPLING_RULES='[
  {
    "sample_rate": 0.5,
    "evaluator_label": "ragas_faithfulness",
    "span_name": "answer_question"
  },
  {
    "sample_rate": 0
  }
]'
```
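
If you prefer to generate the rule list programmatically rather than hand-write the JSON, a small script along these lines may help. It is a sketch under stated assumptions: the `validate_rules` helper is hypothetical and only checks the fields listed in the table above. It is not part of ddtrace, and the library may apply its own validation.

```python
import json

# The same two rules as the example above, expressed as Python dictionaries.
rules = [
    {
        "sample_rate": 0.5,
        "evaluator_label": "ragas_faithfulness",
        "span_name": "answer_question",
    },
    {"sample_rate": 0},
]


def validate_rules(rules):
    """Check each rule against the field table (hypothetical helper)."""
    for rule in rules:
        rate = rule.get("sample_rate")
        is_number = isinstance(rate, (int, float)) and not isinstance(rate, bool)
        if not is_number or not 0 <= rate <= 1:
            raise ValueError(f"sample_rate must be a number from 0 to 1: {rule}")
        for key in ("evaluator_label", "span_name"):
            if key in rule and not isinstance(rule[key], str):
                raise ValueError(f"{key} must be a string: {rule}")
    return rules


# Serialize the validated rules into the value expected by the variable.
env_value = json.dumps(validate_rules(rules))
print(f"export DD_LLMOBS_EVALUATOR_SAMPLING_RULES='{env_value}'")
```

Generating the value with `json.dumps` avoids quoting mistakes that are easy to make when editing JSON inside a shell string.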

Customization

Ragas supports customizations. For example, the following snippet configures the Faithfulness evaluator to use gpt-4 and adds custom instructions for the prompt to the evaluating LLM:

```python
from langchain_openai import ChatOpenAI
from ragas.metrics import faithfulness
from ragas.llms.base import LangchainLLMWrapper

# Use gpt-4 as the evaluating LLM for the Faithfulness metric.
faithfulness.llm = LangchainLLMWrapper(ChatOpenAI(model="gpt-4"))

# Append custom instructions to the prompt that generates statements.
faithfulness.statement_prompt.instruction += "\nMake sure text containing code instructions are grouped with contextual information on how to run that code."
```

Any customizations you make to the global Ragas instance are automatically applied to Datadog’s Ragas evaluators. No action is required.

View Ragas evaluations in Datadog

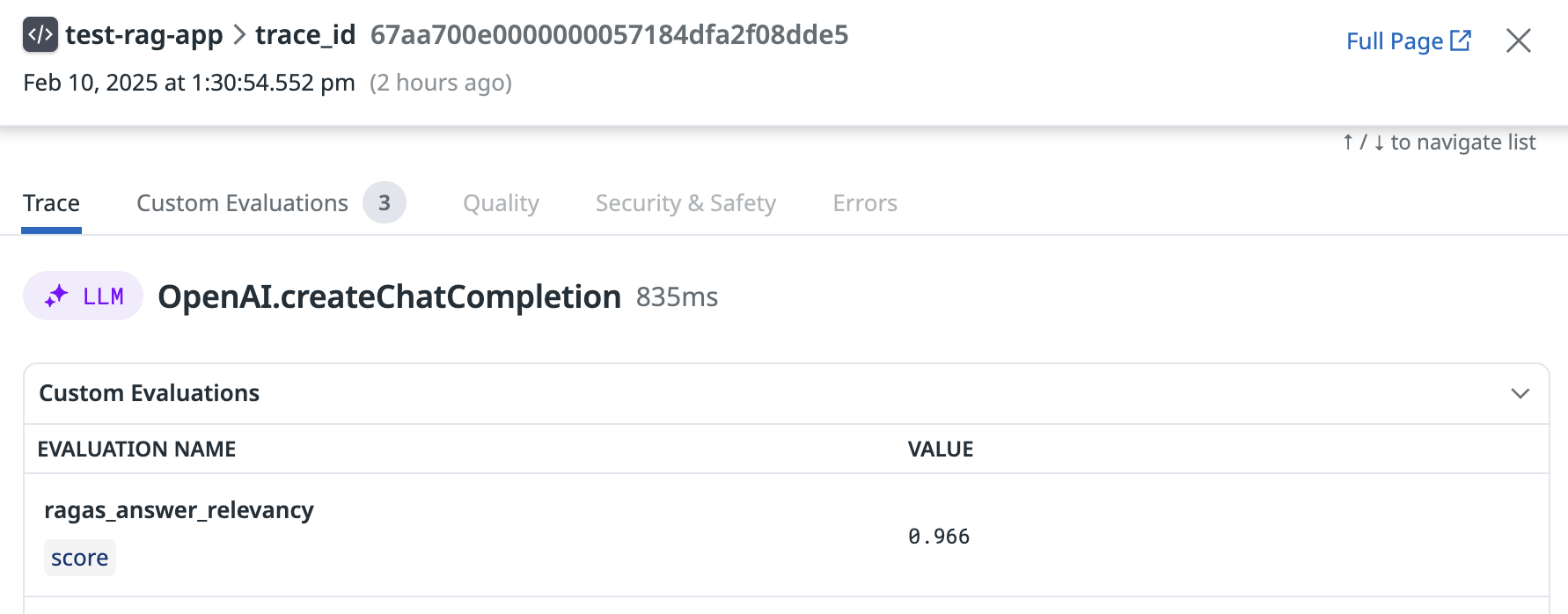

Ragas scores are sent to Datadog as evaluation metrics. When you view a scored trace in LLM Observability, Ragas scores appear under Custom Evaluations.

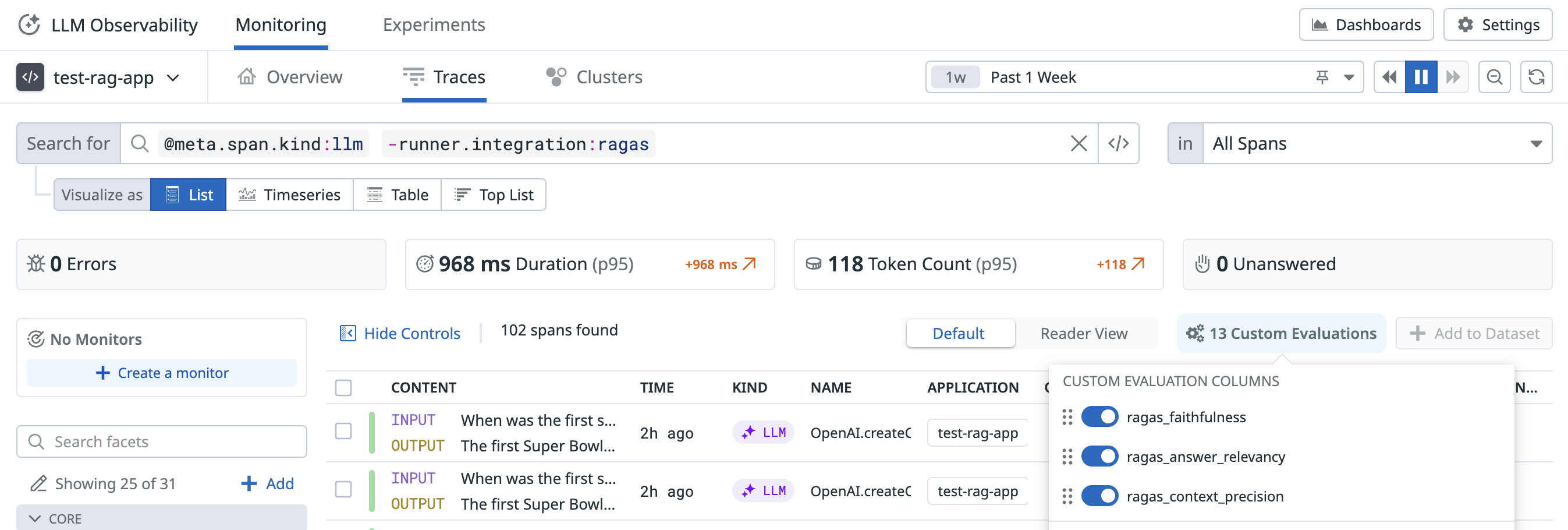

You can also configure your LLM Traces page to display Ragas scores.

- Go to the LLM Observability Traces page in Datadog.

- Search for `@meta.span.kind:llm` in All Spans to view only LLM spans.

- Add `-runner.integration:ragas` to the search field. Datadog automatically traces the generation of Ragas scores. Use this exclusion term to filter out these traces.

- Select Custom Evaluations and enable your desired Ragas evaluations. These scores are then displayed in additional columns on the LLM Observability Traces page.

Troubleshooting

Missing evaluations

Your LLM inference could be missing an evaluation for the following reasons:

- The LLM span was not sampled for an evaluation because you have implemented sampling.

- An error occurred during the Ragas evaluation. Search for `runner.integration:ragas` to see traces for the Ragas evaluation itself.

Flushing

Use the LLMObs.flush() command to guarantee all traces and evaluations are flushed to Datadog.

Note: This is a blocking function.
