Prompt Tracking

In Datadog’s LLM Observability, the Prompt Tracking feature links prompt templates and versions to LLM calls. Prompt Tracking works alongside LLM Observability’s traces, spans, and Playground.

Prompt Tracking enables you to:

- See all prompts used by your LLM application or agent, with call volume and latency over time

- Compare prompts or versions by calls, latency, tokens used, and cost

- See detailed information about a prompt: review its version history, view a text diff, and jump to traces using a specific version

- Filter Trace Explorer by prompt name, ID, or version to isolate impacted requests

- Reproduce a run by populating LLM Observability Playground with the exact template and variables from any span

Set up Prompt Tracking

With structured prompt metadata

To use Prompt Tracking, submit structured prompt metadata: a prompt ID, an optional version, the prompt template, and the variables used to fill it.
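The structured metadata described above can be modeled as a small Python record. This is a minimal sketch only. The class name `PromptMetadata`, its `render` and `to_dict` methods, and the `{variable}` placeholder syntax are hypothetical illustrations, not part of any Datadog SDK. Check the SDK documentation for the exact field names it accepts.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass(frozen=True)
class PromptMetadata:
    """Hypothetical container for the fields Prompt Tracking uses:
    an ID, an optional version, a template, and its variables."""

    id: str
    template: str
    variables: Dict[str, str] = field(default_factory=dict)
    version: Optional[str] = None  # optional; may be left unset

    def render(self) -> str:
        # Assumes str.format-style "{name}" placeholders in the template.
        return self.template.format(**self.variables)

    def to_dict(self) -> Dict[str, object]:
        # Omit the version key entirely when no version was provided.
        data: Dict[str, object] = {
            "id": self.id,
            "template": self.template,
            "variables": dict(self.variables),
        }
        if self.version is not None:
            data["version"] = self.version
        return data


prompt = PromptMetadata(
    id="support-answer",
    template="Answer the question about {product}: {question}",
    variables={"product": "LLM Observability", "question": "What is a span?"},
    version="1.2.0",
)
print(prompt.render())
print(prompt.to_dict())
```

Keeping the template and variables separate, instead of storing only the rendered string, is what makes it possible to reproduce a run later: the same template plus the same variables yields the same prompt text.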

LLM Observability Python SDK

If you are using the LLM Observability Python SDK (dd-trace v3.16.0+), attach prompt metadata to the LLM span using the prompt argument or helper. See the LLM Observability Python SDK documentation.

LLM Observability Node.js SDK

If you are using the LLM Observability Node.js SDK (dd-trace v5.83.0+), attach prompt metadata to the LLM span using the prompt option. See the LLM Observability Node.js SDK documentation.

LLM Observability API

If you are using the LLM Observability API intake, submit prompt metadata to the Spans API endpoint. See the LLM Observability HTTP API reference documentation.
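A sketch of assembling a span payload that carries prompt metadata, using only the Python standard library, follows. The envelope layout (`data`, `attributes`, `spans`) and the placement of `prompt` under `meta.input` are assumptions made for illustration. The helper `build_span_payload` is hypothetical. Confirm the exact schema in the LLM Observability HTTP API reference before sending anything.

```python
import json
import time
import uuid
from typing import Any, Dict


def build_span_payload(ml_app: str, prompt: Dict[str, Any], output_text: str,
                       duration_ns: int) -> Dict[str, Any]:
    """Hypothetical helper that wraps one LLM span and its prompt metadata.

    ASSUMPTION: the envelope and key names below are illustrative only;
    check the LLM Observability HTTP API reference for the real schema.
    """
    span = {
        "name": "generate-answer",
        "span_id": uuid.uuid4().hex[:16],
        "trace_id": uuid.uuid4().hex,
        "start_ns": time.time_ns() - duration_ns,
        "duration": duration_ns,
        "meta": {
            "kind": "llm",
            # Assumed location for the prompt metadata (id, version, template, variables).
            "input": {"prompt": prompt},
            "output": {"messages": [{"role": "assistant", "content": output_text}]},
        },
    }
    return {"data": {"type": "span", "attributes": {"ml_app": ml_app, "spans": [span]}}}


prompt = {
    "id": "support-answer",
    "version": "1.2.0",
    "template": "Answer the question: {question}",
    "variables": {"question": "What is a span?"},
}
payload = build_span_payload("my-llm-app", prompt, "A span is a unit of work.",
                             duration_ns=250_000_000)
# Serialize only: the HTTP request itself (endpoint, API key header) is intentionally omitted.
print(json.dumps(payload, indent=2))
```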

If you are using prompt templates, LLM Observability can automatically attach version information based on prompt content.
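The following sketch shows why content-based versioning works in principle. If a version label is derived from a hash of the template text, identical templates always map to the same version, and any edit produces a new one. This illustrates the general idea only. It is not how Datadog computes versions, and the function name `content_version` is hypothetical.

```python
import hashlib


def content_version(template: str, length: int = 12) -> str:
    # Hypothetical: hash the exact template text, so any wording or whitespace
    # change yields a different digest and therefore a different version label.
    digest = hashlib.sha256(template.encode("utf-8")).hexdigest()
    return digest[:length]


v1 = content_version("Summarize: {text}")
v2 = content_version("Summarize: {text}")
v3 = content_version("Summarize briefly: {text}")
print(v1 == v2)  # True: same template, same version
print(v1 == v3)  # False: edited template, new version
```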

With LangChain templates

If you are using LangChain prompt templates, Datadog automatically captures prompt metadata without code changes. IDs are derived from module or template names. To override these IDs, see LLM Observability Auto-instrumentation: LangChain.

Use Prompt Tracking in LLM Observability

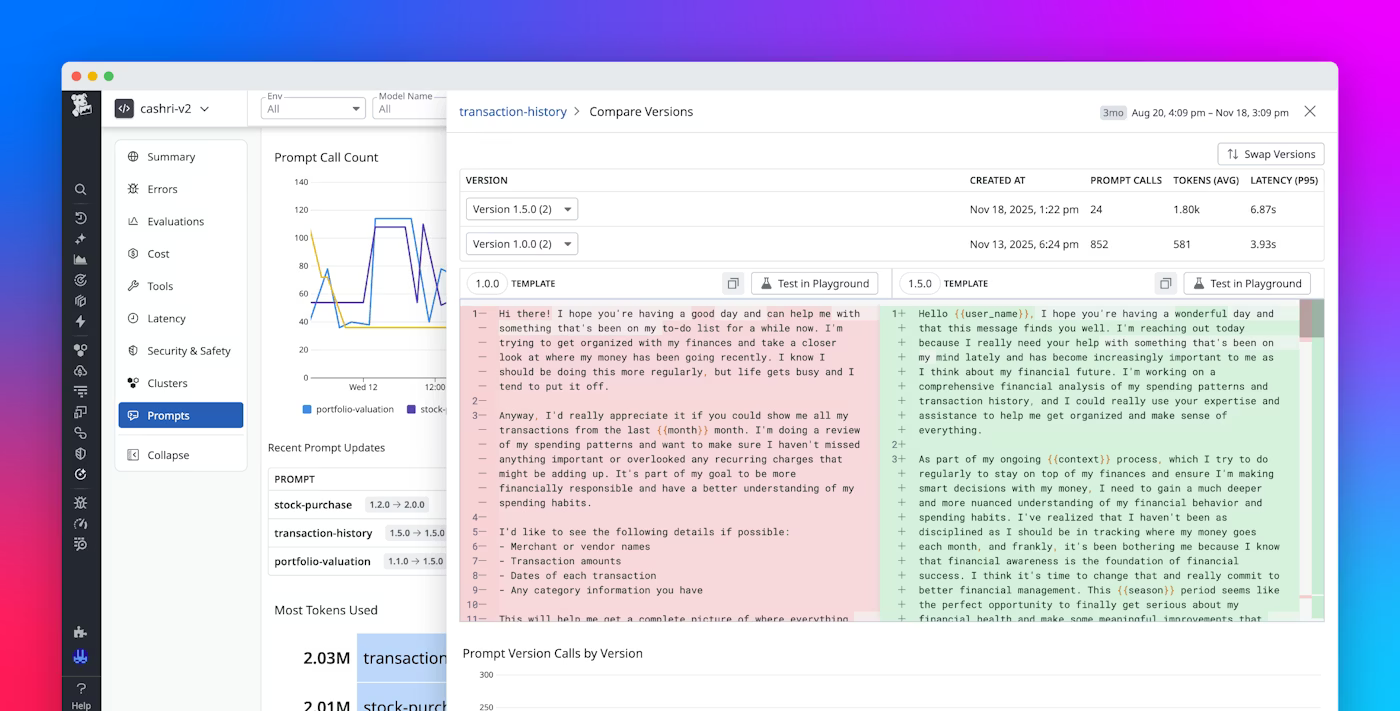

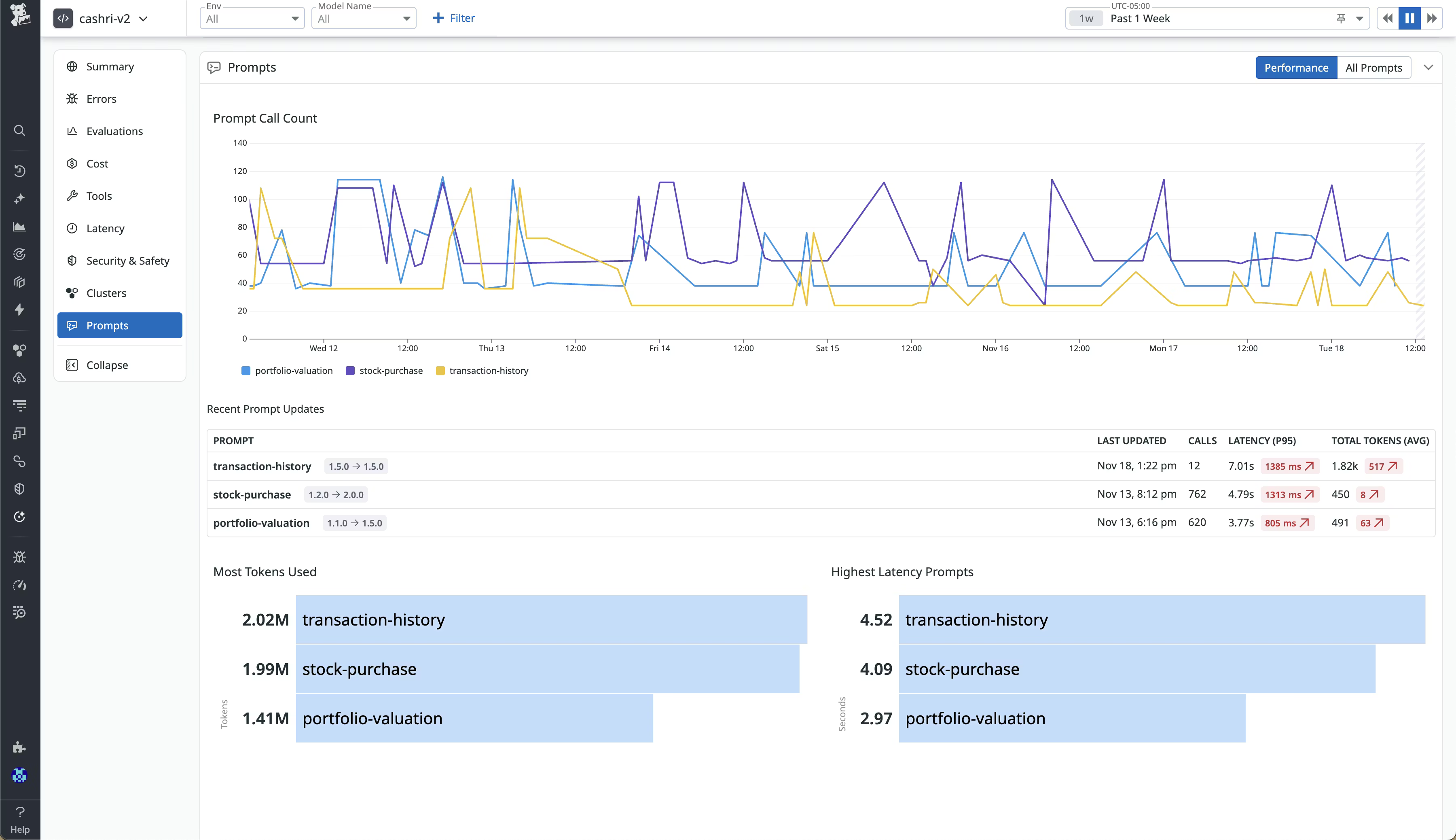

View your app in LLM Observability and select Prompts on the left. The Prompts view features the following information:

- Prompt Call Count: A timeseries chart displaying calls per prompt (or per version) over time

- Recent Prompt Updates: Information about recent prompt updates, including time of last update, call count, average latency, and average tokens per call

- Most Tokens Used: Prompts ranked by total (input or output) tokens

- Highest Latency Prompts: Prompts ranked by average duration
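To make these metrics concrete, the sketch below computes the same kinds of per-version aggregates (call count, average latency, and average tokens per call) from a list of span records. The `SpanRecord` fields and the `summarize` function are hypothetical. Datadog computes these views for you, so this is only a way to reason about what the numbers mean.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class SpanRecord:
    # Hypothetical fields; not Datadog attribute names.
    prompt_id: str
    version: str
    duration_ms: float
    input_tokens: int
    output_tokens: int


def summarize(spans: List[SpanRecord]) -> Dict[Tuple[str, str], Dict[str, float]]:
    # Group spans by (prompt ID, version), then average latency and tokens per call.
    groups: Dict[Tuple[str, str], List[SpanRecord]] = defaultdict(list)
    for s in spans:
        groups[(s.prompt_id, s.version)].append(s)
    summary: Dict[Tuple[str, str], Dict[str, float]] = {}
    for key, items in groups.items():
        calls = len(items)
        summary[key] = {
            "calls": calls,
            "avg_latency_ms": sum(s.duration_ms for s in items) / calls,
            "avg_tokens": sum(s.input_tokens + s.output_tokens for s in items) / calls,
        }
    return summary


spans = [
    SpanRecord("support-answer", "1", 800.0, 120, 80),
    SpanRecord("support-answer", "1", 1200.0, 100, 100),
    SpanRecord("support-answer", "2", 600.0, 90, 60),
]
for key, stats in sorted(summarize(spans).items()):
    print(key, stats)
```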

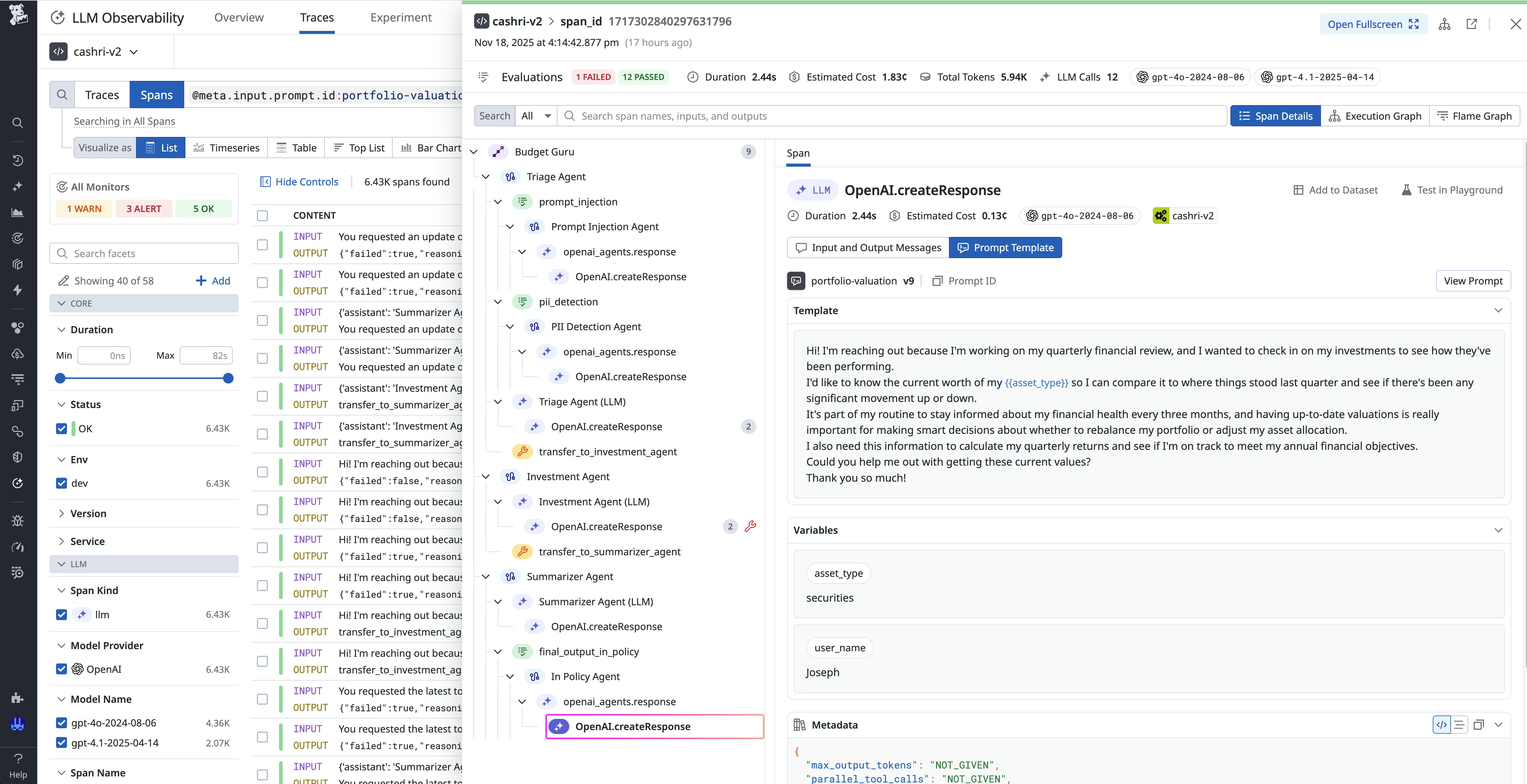

Click a prompt to open a side panel showing its version activity and metrics. From the side panel, you can compare two versions in a diff view, open Trace Explorer pre-filtered to spans that use a selected version, or start a Playground session pre-populated with the selected version’s template and variables.
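Conceptually, the version diff is a text diff. The sketch below uses Python's standard `difflib.unified_diff` to compare two hypothetical template versions. It shows what such a comparison contains, not how the Datadog UI renders it.

```python
import difflib

# Two hypothetical versions of the same prompt template.
v1 = "You are a helpful assistant.\nAnswer the question: {question}\n"
v2 = "You are a concise, helpful assistant.\nAnswer the question: {question}\nCite sources.\n"

diff = list(difflib.unified_diff(
    v1.splitlines(keepends=True),
    v2.splitlines(keepends=True),
    fromfile="support-answer@1",
    tofile="support-answer@2",
))
# Lines starting with "-" were removed in v2; lines starting with "+" were added.
print("".join(diff))
```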

Use the LLM Observability Trace Explorer to locate requests by prompt usage. A prompt’s name, ID, and version are available as facets in both trace-level and span-level search. Click any LLM span to see the prompt that generated it.
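Trace Explorer performs this filtering for you. As a local illustration of the same idea, the sketch below narrows a list of span dictionaries by prompt name, ID, or version. The function `filter_by_prompt` and the dictionary keys are hypothetical and do not correspond to Datadog facet paths.

```python
from typing import Any, Dict, Iterable, List, Optional


def filter_by_prompt(spans: Iterable[Dict[str, Any]], *, name: Optional[str] = None,
                     prompt_id: Optional[str] = None,
                     version: Optional[str] = None) -> List[Dict[str, Any]]:
    # Keep spans whose prompt matches every criterion that was provided;
    # criteria left as None are ignored.
    criteria = {"name": name, "id": prompt_id, "version": version}
    result = []
    for span in spans:
        prompt = span.get("prompt") or {}
        if all(value is None or prompt.get(key) == value for key, value in criteria.items()):
            result.append(span)
    return result


spans = [
    {"span_id": "a", "prompt": {"name": "support", "id": "support-answer", "version": "1"}},
    {"span_id": "b", "prompt": {"name": "support", "id": "support-answer", "version": "2"}},
    {"span_id": "c"},  # an LLM span without prompt metadata
]
print([s["span_id"] for s in filter_by_prompt(spans, prompt_id="support-answer", version="2")])
```

Combining criteria with AND mirrors how adding facets narrows a search: each additional facet can only shrink the result set.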
