
Datadog-CrewAI integration for LLM Observability


This guide shows how to integrate LLM Observability with CrewAI through auto-instrumentation, submit LLM Observability traces to Datadog, and view your CrewAI agent runs in Datadog's Agentic Execution View.

Getting started

Install dependencies

Run this command to install the required dependencies:

```shell
pip install ddtrace crewai crewai-tools
```

Set environment variables

If you do not have a Datadog API key, create an account and get your API key.

You also need to set an ML application name, along with the other environment variables below. An ML application is a grouping of LLM Observability traces associated with a specific LLM-based application. See Application naming guidelines for the restrictions that apply to ML application names.

```shell
export DD_API_KEY=<YOUR_DD_API_KEY>
export DD_SITE=<YOUR_DD_SITE>
export DD_LLMOBS_ENABLED=true
export DD_LLMOBS_ML_APP=<YOUR_ML_APP_NAME>
export DD_LLMOBS_AGENTLESS_ENABLED=true
export DD_APM_TRACING_ENABLED=false
```
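Before running the application, you may want to confirm that these variables are actually set in your shell. The following minimal sketch uses a hypothetical helper, `missing_llmobs_settings`, that only inspects the environment; it does not call any Datadog API, and its list of variables is taken from the export block above rather than from an official Datadog validation list.

```python
import os
from collections.abc import Mapping

# Variables from the export block in this guide. This list is an assumption
# based on the guide, not an official Datadog requirements list.
EXPECTED_LLMOBS_VARS = (
    "DD_API_KEY",
    "DD_SITE",
    "DD_LLMOBS_ENABLED",
    "DD_LLMOBS_ML_APP",
    "DD_LLMOBS_AGENTLESS_ENABLED",
    "DD_APM_TRACING_ENABLED",
)


def missing_llmobs_settings(env: Mapping[str, str] = os.environ) -> list[str]:
    """Hypothetical helper: return the expected variables that are unset or empty."""
    return [name for name in EXPECTED_LLMOBS_VARS if not env.get(name)]
```

For example, you could call `missing_llmobs_settings()` at the top of your script and stop early if it returns a non-empty list. Passing a plain dictionary instead of `os.environ` makes the check easy to exercise in isolation.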

Additionally, set the API key for each LLM provider your application uses:

```shell
export OPENAI_API_KEY=<YOUR_OPENAI_API_KEY>
export ANTHROPIC_API_KEY=<YOUR_ANTHROPIC_API_KEY>
export GEMINI_API_KEY=<YOUR_GEMINI_API_KEY>
...
```

Create a CrewAI agent application

The following example creates a single-agent crew that answers a simple multiplication question in the form of a haiku:

```python
# crewai_agent.py

from crewai import Agent, Crew, Task
from crewai_tools import WebsiteSearchTool

# Tool for semantic search over website content
web_rag_tool = WebsiteSearchTool()

writer = Agent(
    role="Writer",
    goal="You make math engaging and understandable for young children through poetry",
    backstory="You're an expert in writing haikus but you know nothing of math.",
    tools=[web_rag_tool],
)

# {multiplication} is filled in from the inputs passed to crew.kickoff()
task = Task(
    description="What is {multiplication}?",
    expected_output="Compose a haiku that includes the answer.",
    agent=writer,
)

crew = Crew(
    agents=[writer],
    tasks=[task],
    share_crew=False,
)

output = crew.kickoff(inputs={"multiplication": "2 * 2"})
print(output)
```
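The `{multiplication}` placeholder in the task is filled from the dictionary passed to `crew.kickoff`. The snippet below approximates that substitution with Python's `str.format` to show its effect on the task text; it is an illustration only, not CrewAI's implementation, which performs the interpolation itself when the crew starts.

```python
# Illustrative approximation only: CrewAI substitutes {placeholders} itself
# when you call crew.kickoff(inputs=...). str.format is used here to show the effect.
task_fields = {
    "description": "What is {multiplication}?",
    "expected_output": "Compose a haiku that includes the answer.",
}
inputs = {"multiplication": "2 * 2"}

# Replace each {name} placeholder with the matching value from inputs;
# fields without placeholders come through unchanged.
interpolated = {field: text.format(**inputs) for field, text in task_fields.items()}
print(interpolated["description"])  # What is 2 * 2?
```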

Run the application with Datadog auto-instrumentation

With the environment variables set, you can now run the application with Datadog auto-instrumentation.

```shell
ddtrace-run python crewai_agent.py
```

View the traces in Datadog

After running the application, open the Traces view in Datadog LLM Observability and select the ML application name you chose from the top-left dropdown.

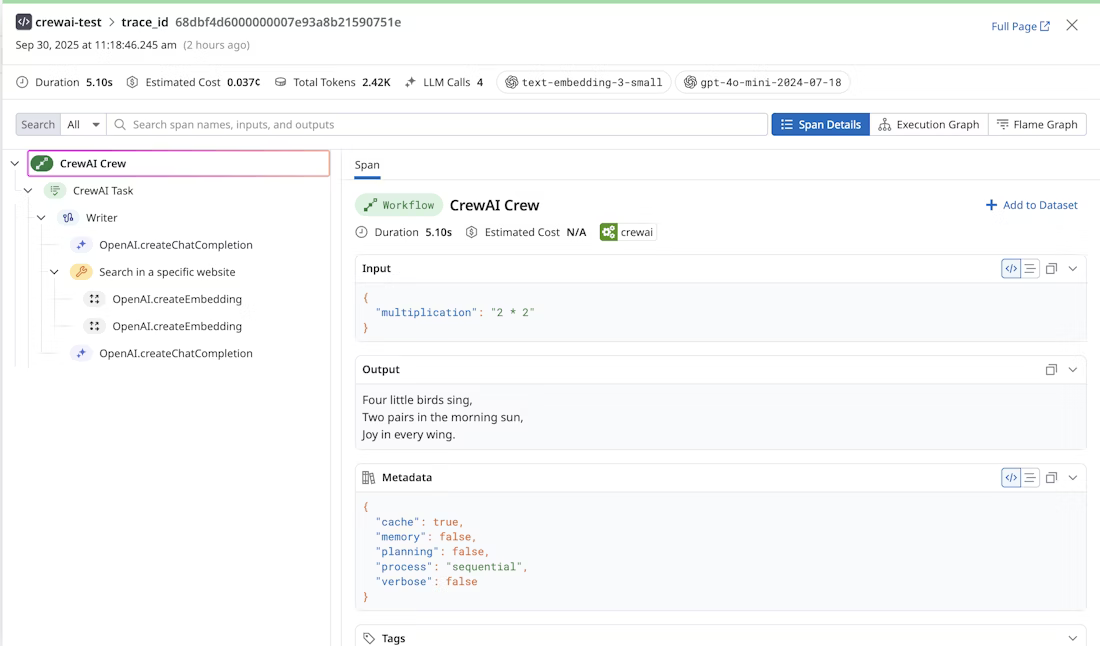

Click a trace to see its details, including total tokens used, number of LLM calls, models used, and estimated cost. Click a specific span to narrow these details to that span and see its input, output, and metadata.
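One way to think about the trace-level totals is as an aggregation over the trace's LLM spans. The following sketch illustrates that roll-up over a hypothetical list of span records; the `SpanRecord` type, its fields, the span kinds, and the sample model names and token counts are all assumptions for illustration and do not reflect Datadog's data model or how Datadog computes these figures. Estimated cost is left out because it depends on provider pricing.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SpanRecord:
    """Hypothetical span record; not Datadog's actual span schema."""
    name: str
    kind: str  # assumed kinds for this sketch: "workflow", "agent", "tool", "llm"
    model: Optional[str] = None
    input_tokens: int = 0
    output_tokens: int = 0


def summarize_trace(spans: list) -> dict:
    """Aggregate token counts, LLM call count, and distinct models across LLM spans."""
    llm_spans = [s for s in spans if s.kind == "llm"]
    return {
        "total_tokens": sum(s.input_tokens + s.output_tokens for s in llm_spans),
        "llm_calls": len(llm_spans),
        "models": sorted({s.model for s in llm_spans if s.model}),
    }


# Hypothetical sample data shaped loosely after the CrewAI example above.
spans = [
    SpanRecord("crew kickoff", "workflow"),
    SpanRecord("Writer", "agent"),
    SpanRecord("WebsiteSearchTool", "tool"),
    SpanRecord("chat", "llm", model="model-a", input_tokens=120, output_tokens=30),
    SpanRecord("chat", "llm", model="model-a", input_tokens=200, output_tokens=25),
]
print(summarize_trace(spans))
```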

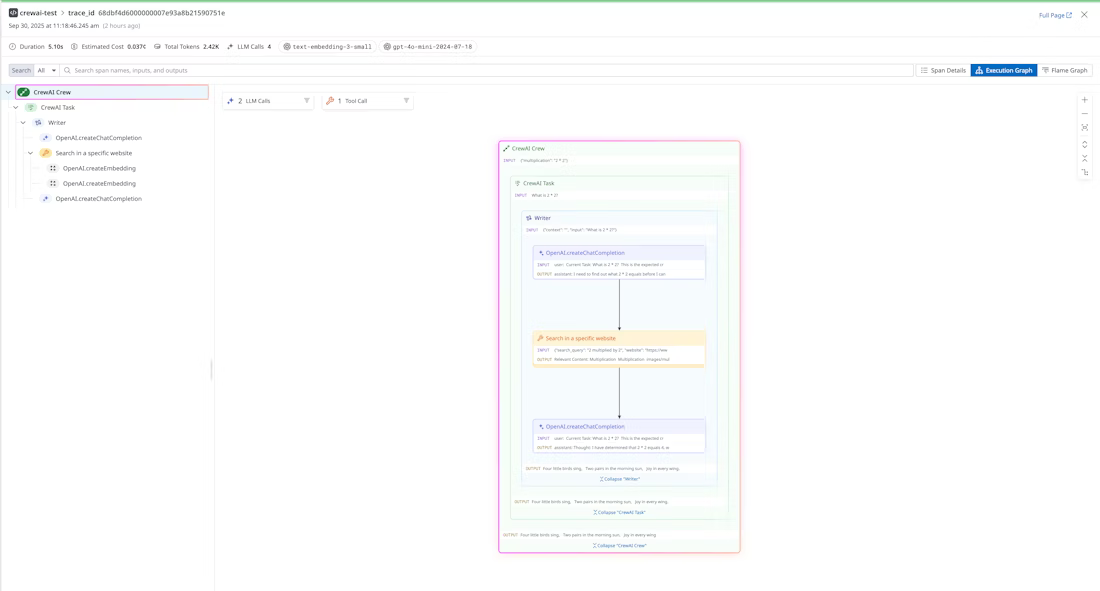

You can also open the trace's execution graph, which shows the trace's control and data flow. For larger agents, the graph shows handoffs and relationships between LLM calls, tool calls, and agent interactions.
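To make the control-flow part of this concrete, the sketch below rebuilds a nested call tree from hypothetical span records that each carry a span ID and a parent ID, then renders it with indentation. The record shape and labels are assumptions made for illustration; Datadog builds the execution graph itself, and its view also covers data flow, which simple parent-child nesting does not capture.

```python
from collections import defaultdict

# Hypothetical span records: (span_id, parent_id, label). Not Datadog's schema.
SPANS = [
    ("1", None, "crew: kickoff"),
    ("2", "1", "agent: Writer"),
    ("3", "2", "llm: plan"),
    ("4", "2", "tool: WebsiteSearchTool"),
    ("5", "2", "llm: compose haiku"),
]


def build_children(spans):
    """Return root span IDs and a map of parent ID -> child IDs, in input order."""
    children = defaultdict(list)
    roots = []
    for span_id, parent_id, _ in spans:
        if parent_id is None:
            roots.append(span_id)
        else:
            children[parent_id].append(span_id)
    return roots, children


def render_tree(spans) -> list:
    """Return one indented line per span, walking the tree depth-first."""
    labels = {span_id: label for span_id, _, label in spans}
    roots, children = build_children(spans)
    lines = []

    def visit(span_id: str, depth: int) -> None:
        lines.append("  " * depth + labels[span_id])
        for child in children[span_id]:
            visit(child, depth + 1)

    for root in roots:
        visit(root, 0)
    return lines


print("\n".join(render_tree(SPANS)))
```

A depth-first walk keeps sibling spans in their recorded order, which mirrors the sequence in which the agent issued its LLM and tool calls in this hypothetical data.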