APM Troubleshooting

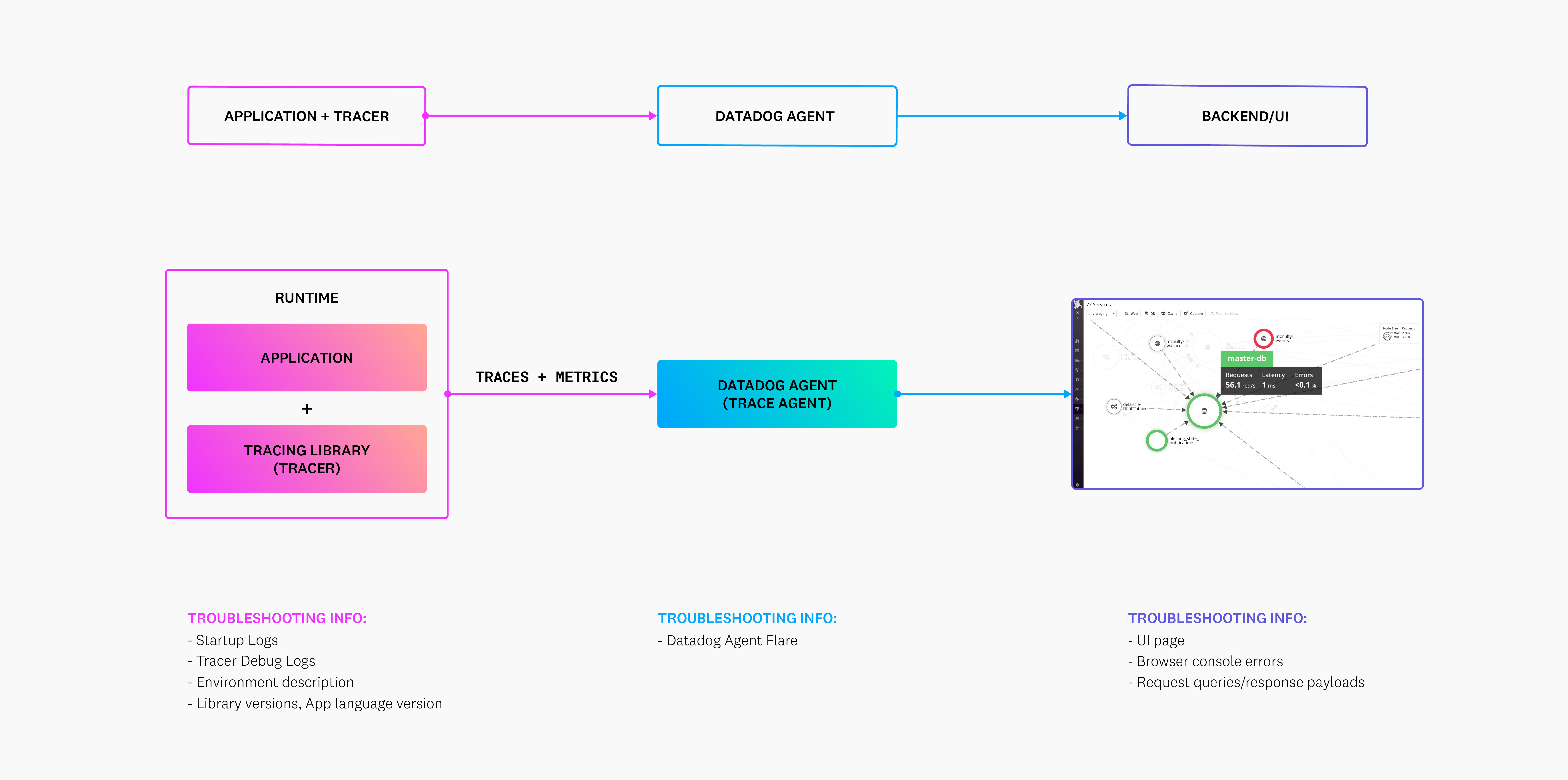

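If you experience unexpected behavior while using Datadog APM, read the information on this page to help resolve the issue. Each release contains improvements and fixes, so Datadog recommends regularly updating to the latest version of the Datadog tracing libraries you use. If you continue to have trouble, reach out to Datadog support.

The following components are involved in sending APM data to Datadog.

For more information, see Additional support.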

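Trace retention

This section addresses issues related to trace data retention and filtering across Datadog.

There are more spans in the Trace Explorer than on the Monitors page

If you haven't set up custom retention filters, this is expected behavior. Here's why:

On the Trace Explorer page, you can search ingested or indexed spans using any tag. You can query any of your traces here.

By default, after spans have been ingested, they are retained by the Datadog intelligent filter. Datadog also has other retention filters that are enabled by default to give you visibility into your services, endpoints, errors, and high-latency traces.

However, to use these traces in your monitors, you must set custom retention filters.

Custom retention filters let you decide which spans are indexed and retained by creating, modifying, and disabling additional filters based on tags. You can also set the percentage of spans matching each filter to be retained. These indexed traces can then be used in your monitors.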

| Product | Span source |
|---|---|
| Monitors | Spans from custom retention filters |
| Other products (dashboards, notebooks, and so on) | Spans from custom retention filters + the Datadog intelligent filter |

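Trace metrics

This section covers troubleshooting discrepancies and inconsistencies in trace metrics.

Trace metrics and custom span-based metrics have different values

Trace metrics and custom span-based metrics can have different values because they are calculated from different datasets:

- Trace metrics are calculated from 100% of the application's traffic, regardless of your trace ingestion sampling configuration. The trace metrics namespace follows this format: trace.<SPAN_NAME>.<METRIC_SUFFIX>.

- Custom span-based metrics are generated from your ingested spans, which depend on your trace ingestion sampling. For example, if you ingest 50% of your traces, your custom span-based metrics are based on the 50% of spans that were ingested.

To make trace metrics and custom span-based metrics match, you must configure a 100% ingestion rate for your application or service.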
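
To make the gap concrete, the following minimal sketch compares a request count derived from all traffic with one derived only from ingested spans. The function names and the uniform sampling model are assumptions for illustration; real ingestion sampling is not necessarily uniform, and this is not how Datadog computes either metric internally.

```python
def trace_metric_hits(total_requests: int) -> int:
    """Trace metrics are computed from 100% of traffic."""
    return total_requests


def span_based_metric_hits(total_requests: int, ingestion_rate: float) -> int:
    """Custom span-based metrics only see ingested spans.

    Assumes uniform sampling at `ingestion_rate` (an illustrative simplification).
    """
    if not 0.0 <= ingestion_rate <= 1.0:
        raise ValueError("ingestion_rate must be between 0 and 1")
    return round(total_requests * ingestion_rate)


total = 10_000
print(trace_metric_hits(total))            # 10000
print(span_based_metric_hits(total, 0.5))  # 5000
print(span_based_metric_hits(total, 1.0))  # 10000
```

With a 50% ingestion rate the two counts differ by a factor of two; they only agree when the ingestion rate is 100%.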

Metric names must follow the metric naming convention. Metric names that start with trace.* are not permitted and are not saved.

Services

This section covers how to troubleshoot issues related to services.

One service is showing up as multiple services in Datadog

This can happen when the service name is not consistent across all spans.

For example, a single service such as service:test might show up as the following multiple services in Datadog:

- service:test

- service:test-mongodb

- service:test-postgresdb

You can use Inferred Service dependencies (Preview). Inferred external APIs use the default naming scheme net.peer.name, for example api.stripe.com, api.twilio.com, and us6.api.mailchimp.com. Inferred databases use the default naming scheme db.instance.

Alternatively, you can merge the service names using an environment variable such as DD_SERVICE_MAPPING or DD_TRACE_SERVICE_MAPPING, depending on the language.

For more information, see Configure the Datadog Tracing Library, or see the language-specific options that follow.

dd.service.mapping

Environment variable: DD_SERVICE_MAPPING

Default: null

Example: mysql:my-mysql-service-name-db, postgresql:my-postgres-service-name-db

Dynamically renames services through configuration. Useful for giving databases distinct names across different services.

DD_SERVICE_MAPPING

Defines service name mappings to allow renaming services in traces, for example postgres:postgresql,defaultdb:postgresql. Available in version 0.47 and later.

DD_SERVICE_MAPPING

Default: null

Dynamically renames services through configuration. Services can be separated by commas or spaces, for example mysql:mysql-service-name,postgres:postgres-service-name or mysql:mysql-service-name postgres:postgres-service-name.

DD_SERVICE_MAPPING

Configuration: serviceMapping

Default: N/A

Example: mysql:my-mysql-service-name-db,pg:my-pg-service-name-db

Provides service names for each plugin. Accepts comma-separated plugin:service-name pairs, with or without spaces.

DD_TRACE_SERVICE_MAPPING

Renames services using configuration. Accepts a comma-separated list of pairs in the format [from-key]:[to-name], where the key is the service name to rename and the value is the name to use instead.

Example: mysql:main-mysql-db, mongodb:offsite-mongodb-service

The from-key value is specific to the integration type and must exclude the application name prefix. For example, to rename my-application-sql-server to main-db, use sql-server:main-db. Added in version 1.23.0.

DD_SERVICE_MAPPING

INI: datadog.service_mapping

Default: null

Changes the default name of an APM integration. Rename one or more integrations at a time, for example DD_SERVICE_MAPPING=pdo:payments-db,mysqli:orders-db (see Integration names).

Ruby does not support DD_SERVICE_MAPPING or DD_TRACE_SERVICE_MAPPING. See Additional Ruby configuration for code options to change the service name.
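
The mapping strings above all share a from:to pair format. As a rough illustration of how such a value can be read, the sketch below parses pairs separated by commas, spaces, or both, and applies them to a service name. The helpers parse_service_mapping and rename_service are hypothetical names introduced here; this is not the parser used by any Datadog tracing library, and each library may accept a narrower set of separators.

```python
import re


def parse_service_mapping(raw: str) -> dict[str, str]:
    """Parse 'from:to' pairs separated by commas and/or whitespace."""
    mapping: dict[str, str] = {}
    for pair in re.split(r"[,\s]+", raw.strip()):
        if not pair:
            continue  # empty input or stray separator
        source, sep, target = pair.partition(":")
        if not sep or not source or not target:
            raise ValueError(f"invalid mapping entry: {pair!r}")
        mapping[source] = target
    return mapping


def rename_service(name: str, mapping: dict[str, str]) -> str:
    """Return the mapped name, or the original name when no entry matches."""
    return mapping.get(name, name)


mapping = parse_service_mapping(
    "mysql:my-mysql-service-name-db, postgresql:my-postgres-service-name-db"
)
print(rename_service("mysql", mapping))  # my-mysql-service-name-db
print(rename_service("redis", mapping))  # redis
```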

Unexpected increase in ingested or indexed spans on the Plan and Usage page

Spikes in data ingestion and indexing can be caused by various factors. To investigate the cause of an increase, use the APM Traces estimated usage metrics:

| Usage type | Metric | Description |
|---|---|---|
| APM Indexed Spans | datadog.estimated_usage.apm.indexed_spans | Total number of spans indexed by tag-based retention filters. |
| APM Ingested Spans | datadog.estimated_usage.apm.ingested_spans | Total number of ingested spans. |

The APM Traces Usage dashboard contains several widget groups that display high-level KPIs and additional usage information.

Missing error message and stack trace

For some traces with an error status, the Errors tab shows Missing error message and stack trace instead of exception details.

A span can show this message for two possible reasons:

- The span contains an unhandled exception.

- An HTTP response within the span returned an HTTP status code between 400 and 599.

When an exception is handled in a try/catch block, the error.message, error.type, and error.stack span tags are not populated. To populate the detailed error span tags, use custom instrumentation code.
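
As a rough sketch of what such custom instrumentation has to supply, the code below derives the three error tags from a handled exception using only the Python standard library. The error_tags helper and the span_tags dictionary are stand-ins invented for this example; with a real tracing library you would set these values on the active span through that library's own API. Using the bare class name for error.type is also a simplification.

```python
import traceback


def error_tags(exc: BaseException) -> dict[str, str]:
    """Build the three error span tags from a caught exception."""
    return {
        "error.message": str(exc),
        "error.type": type(exc).__name__,
        "error.stack": "".join(
            traceback.format_exception(type(exc), exc, exc.__traceback__)
        ),
    }


span_tags: dict[str, str] = {}  # stand-in for a real span's tags (assumption)

try:
    int("not-a-number")
except ValueError as exc:
    # The exception is handled here, so nothing sets the tags automatically;
    # they have to be attached explicitly.
    span_tags.update(error_tags(exc))

print(span_tags["error.type"])  # ValueError
```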

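Data volume guidelines

If you encounter any of the following issues, you may be exceeding Datadog's volume guidelines:

- Your trace metrics are not reporting as you would expect in the Datadog platform.

- Some of the resources you expected to see in the Datadog platform are missing.

- You see traces from your service but cannot find the service on the Service Catalog page.

Data volume guidelines

Your instrumented application can submit spans with timestamps up to 18 hours in the past and 2 hours in the future from the current time.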
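
The accepted timestamp window can be expressed as a simple range check. The sketch below is illustrative only: the function name is hypothetical, and treating both boundaries as inclusive is an assumption, since the guideline does not say how exact boundary values are handled.

```python
from datetime import datetime, timedelta, timezone

MAX_PAST = timedelta(hours=18)   # oldest accepted span timestamp
MAX_FUTURE = timedelta(hours=2)  # furthest accepted future timestamp


def within_accepted_window(span_time: datetime, now: datetime) -> bool:
    """Return True if span_time falls inside [now - 18h, now + 2h]."""
    return now - MAX_PAST <= span_time <= now + MAX_FUTURE


now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
print(within_accepted_window(now - timedelta(hours=17), now))  # True
print(within_accepted_window(now - timedelta(hours=19), now))  # False
print(within_accepted_window(now + timedelta(hours=3), now))   # False
```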

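Datadog accepts the following combinations for a given 40-minute interval:

- 5000 unique environment and service combinations

- 30 unique second primary tag values per environment

- 100 unique operation names per environment and service

- 1000 unique resources per environment, service, and operation name

- 30 unique versions per environment and service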
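
One way to reason about these limits is to count unique combinations over the spans seen in one interval. The sketch below does that for spans represented as plain dictionaries. The dictionary keys, the limit names, and the exceeded_limits helper are all assumptions made for this example; it does not reflect how Datadog enforces the guidelines.

```python
from collections import defaultdict

# Limits per 40-minute interval, taken from the list above.
LIMITS = {
    "env_service": 5000,
    "second_primary_tag_per_env": 30,
    "operation_per_env_service": 100,
    "resource_per_env_service_operation": 1000,
    "version_per_env_service": 30,
}


def exceeded_limits(spans: list[dict]) -> list[str]:
    """Return the names of the limits exceeded by spans from one interval."""
    env_service = set()
    tags = defaultdict(set)
    operations = defaultdict(set)
    resources = defaultdict(set)
    versions = defaultdict(set)

    for span in spans:
        env, svc, op = span["env"], span["service"], span["operation"]
        env_service.add((env, svc))
        operations[(env, svc)].add(op)
        resources[(env, svc, op)].add(span["resource"])
        if span.get("second_primary_tag") is not None:
            tags[env].add(span["second_primary_tag"])
        if span.get("version") is not None:
            versions[(env, svc)].add(span["version"])

    def worst(groups) -> int:
        # Largest number of unique values found in any single group.
        return max((len(values) for values in groups.values()), default=0)

    observed = {
        "env_service": len(env_service),
        "second_primary_tag_per_env": worst(tags),
        "operation_per_env_service": worst(operations),
        "resource_per_env_service_operation": worst(resources),
        "version_per_env_service": worst(versions),
    }
    return sorted(name for name, count in observed.items() if count > LIMITS[name])


spans = [
    {"env": "prod", "service": "web-store", "operation": f"op{i}", "resource": "GET /"}
    for i in range(101)
]
print(exceeded_limits(spans))  # ['operation_per_env_service']
```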

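If you need to accommodate larger volumes, contact Datadog support with your use case.

Datadog truncates the following strings if they exceed the indicated number of characters.

Also, keep the number of span tags present on any span below 1024.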

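The number of services exceeds what is specified in the data volume guidelines

If the number of services exceeds what is specified in the data volume guidelines, try the following best practices for service naming conventions.

Exclude environment tag values from service names

By default, the environment (env) is the primary tag for Datadog APM.

A service is typically deployed in multiple environments, such as prod, staging, and dev. Performance metrics such as request count, latency, and error rate differ across environments. The environment dropdown in the Service Catalog lets you scope the data in the Performance tab to a specific environment.

One pattern that often leads to too many services is including the environment value in service names. For example, you might have two unique services instead of one, prod-web-store and dev-web-store, because they run in two separate environments.

Datadog recommends tuning your instrumentation by renaming your services.

Trace metrics are unsampled, which means your instrumented application shows all data instead of partial data. The volume guidelines also apply.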

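Use the second primary tag instead of putting metric partitions or grouping variables into service names

Second primary tags are additional tags that you can use to group and aggregate your trace metrics. You can use the dropdown to scope the performance data to a given cluster name or data center value.

Including metric partitions or grouping variables in service names instead of applying the second primary tag unnecessarily inflates the number of unique services in an account and can result in delays or data loss.

For example, instead of the service web-store, you might decide to name different instances of the service web-store-us-1, web-store-eu-1, and web-store-eu-2 to see performance metrics for these partitions side by side. Datadog recommends implementing the region value (us-1, eu-1, eu-2) as a second primary tag instead.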
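
Both naming practices amount to moving a prefix or suffix out of the service name and into a tag. The sketch below shows that split for the example names used in this section. The known environment and region lists, the region tag key, and the split_service_name helper are assumptions for illustration; in practice you would set the service name and tags directly in your instrumentation rather than parse them out afterward.

```python
KNOWN_ENVS = ("prod", "staging", "dev")   # assumed environment names
KNOWN_REGIONS = ("us-1", "eu-1", "eu-2")  # assumed partition values


def split_service_name(name: str) -> dict[str, str]:
    """Separate an env prefix and a region suffix from a service name."""
    tags: dict[str, str] = {}
    for env in KNOWN_ENVS:
        if name.startswith(env + "-"):
            tags["env"] = env
            name = name[len(env) + 1:]
            break
    for region in KNOWN_REGIONS:
        if name.endswith("-" + region):
            tags["region"] = region  # candidate second primary tag
            name = name[: -(len(region) + 1)]
            break
    tags["service"] = name
    return tags


print(split_service_name("prod-web-store"))  # {'env': 'prod', 'service': 'web-store'}
print(split_service_name("web-store-eu-2"))  # {'region': 'eu-2', 'service': 'web-store'}
```

All of the example names collapse to the single service web-store, with the environment and region carried as tags.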

Connection errors

This section explains how to diagnose and resolve connection and communication issues between your applications and the Datadog Agent.

Your instrumented application isn't communicating with the Datadog Agent

To find and fix these problems, see Connection Errors.

Resource usage

This section contains information about troubleshooting performance issues related to resource usage.

Out of memory errors

To detect trace collection CPU usage and calculate adequate resource limits for the Agent, see Agent Resource Usage.

Rate limit or max event error messages

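Security

This section covers approaches for addressing security concerns in APM, including protecting sensitive data and managing traffic.

Modifying, discarding, or obfuscating spans

Several configuration options are available to scrub sensitive data or to discard traces that correspond to health checks or other unwanted traffic. You can configure them in the Datadog Agent or, in some languages, in the trace client. For details on the available options, see Security and Agent Customization. That documentation offers representative examples; if you need help applying these options to your environment, reach out to Datadog support.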

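Debugging and logging

This section explains how to use debug and startup logs to identify and resolve issues with your Datadog tracer.

Debug logs

To capture full details on the Datadog tracer, enable debug mode on your tracer with the DD_TRACE_DEBUG environment variable. You might enable it for your own investigation or because Datadog support has recommended it for triage purposes. Be sure to disable debug logging when you finish testing to avoid the logging overhead it introduces.

These logs can surface instrumentation errors or integration-specific errors. For details on enabling and capturing debug logs, see the debug mode troubleshooting page.

Startup logs

During startup, Datadog tracing libraries emit logs that reflect the applied configuration as a JSON object, along with any errors encountered. In languages where this is possible, the logs also show whether the Agent can be reached. Some languages require startup logs to be enabled with the environment variable DD_TRACE_STARTUP_LOGS=true. For more information, see Startup logs.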
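
Because debug mode should only stay on for the duration of a test, it can help to build the environment for a test run explicitly rather than exporting the variables globally. The sketch below prepares such an environment for a child process. The tracer_diagnostics_env helper is a hypothetical name, and removing DD_TRACE_DEBUG entirely when debug mode is off is a design choice made here, not a documented requirement.

```python
import os
from collections.abc import Mapping


def tracer_diagnostics_env(
    base_env: Mapping[str, str], debug: bool, startup_logs: bool = True
) -> dict[str, str]:
    """Return a copy of base_env with tracer diagnostics toggled."""
    env = dict(base_env)
    if debug:
        env["DD_TRACE_DEBUG"] = "true"
    else:
        # Drop the variable so a leftover value cannot keep debug mode on.
        env.pop("DD_TRACE_DEBUG", None)
    if startup_logs:
        env["DD_TRACE_STARTUP_LOGS"] = "true"
    return env


env = tracer_diagnostics_env(os.environ, debug=True)
print(env["DD_TRACE_DEBUG"])         # true
print(env["DD_TRACE_STARTUP_LOGS"])  # true
```

The resulting dictionary can be passed as the env argument of subprocess.run when launching the instrumented application for a test, which leaves the parent shell's environment unchanged.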

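Additional support

If you still need additional support, open a ticket with Datadog support.

Datadog support ticket

When you open a support ticket, the Datadog support team may ask for the following information:

Links to a trace or screenshots of the issue: These help reproduce the issue for troubleshooting purposes.

Tracer startup logs: Startup logs help identify tracer misconfiguration or communication issues between the tracer and the Datadog Agent. By comparing the tracer's configuration with the application or container settings, the support team can identify improperly applied settings.

Tracer debug logs: Tracer debug logs provide more detail than startup logs and reveal:

- Proper integration instrumentation during application traffic flow

- Contents of spans created by the tracer

- Connection errors when sending spans to the Agent

Datadog Agent flare: A Datadog Agent flare lets you see what is happening within the Datadog Agent, for example, whether traces are being rejected or are malformed. A flare does not help if traces are not reaching the Datadog Agent, but it can help identify the source of an issue or any metric discrepancies.

A description of your environment: Understanding your application's deployment configuration helps the support team identify potential tracer-Agent communication issues and misconfigurations. For complex problems, support may ask for Kubernetes manifests, ECS task definitions, or similar deployment configuration files.

Custom tracing code: Custom instrumentation, configuration, and added span tags can significantly affect how traces are visualized in Datadog.

Version information: Knowing which language, framework, Datadog Agent, and Datadog tracer versions you are using allows support to verify compatibility requirements, check for known issues, or recommend a version upgrade. For example: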