Dual Ship Logs for HTTP Client

Overview

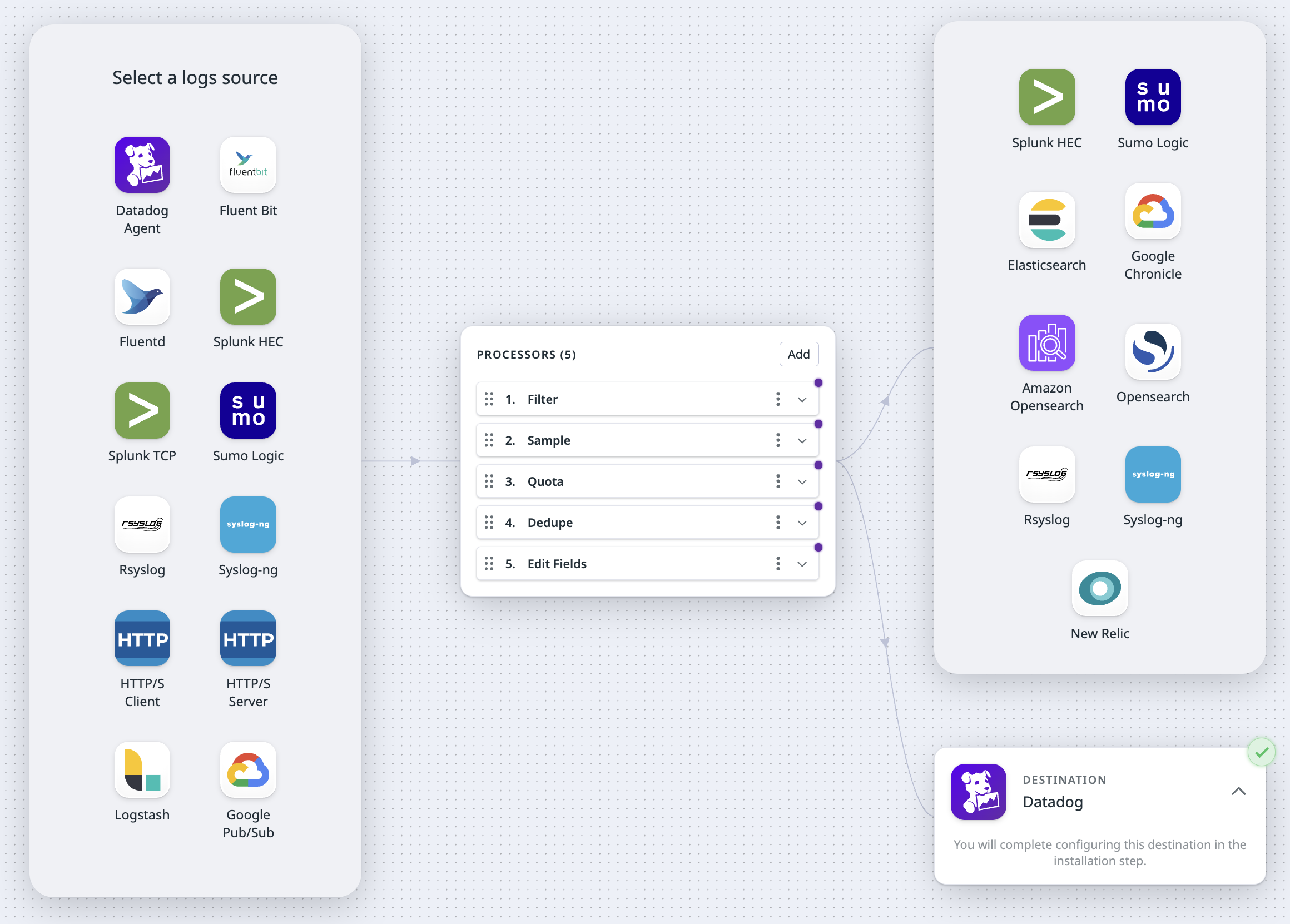

Use the Observability Pipelines Worker to aggregate and process your HTTP server logs before routing them to various applications.

This document walks you through the following:

- The prerequisites needed to set up Observability Pipelines

- Setting up Observability Pipelines

Prerequisites

To use Observability Pipelines’ HTTP/S Client source, you need the following information available:

- The full path of the HTTP Server endpoint that the Observability Pipelines Worker collects log events from. For example, `https://127.0.0.8/logs`.

- The HTTP authentication token or password.

The HTTP/S Client source pulls data from your upstream HTTP server. Your HTTP server must support GET requests for the HTTP Client endpoint URL that you set as an environment variable when you install the Worker.
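To make the GET requirement concrete, here is a minimal sketch of an upstream HTTP server that answers GET requests with JSON-encoded log events. The `/logs` path, the sample events, and the names `SAMPLE_LOGS`, `build_response`, `LogsHandler`, and `make_server` are assumptions for illustration only; a real endpoint would serve your own logs and enforce whatever authentication you configure.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical sample events; a real server would return its own logs.
SAMPLE_LOGS = [
    {"message": "user logged in", "status": "info"},
    {"message": "disk usage high", "status": "warn"},
]


def build_response(path):
    """Returns (status_code, body_bytes) for a GET request on the given path."""
    if path != "/logs":
        return 404, b""
    return 200, json.dumps(SAMPLE_LOGS).encode("utf-8")


class LogsHandler(BaseHTTPRequestHandler):
    """Serves the sample log events to any client that issues a GET."""

    def do_GET(self):
        status, body = build_response(self.path)
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        # Silence per-request logging to keep the example quiet.
        pass


def make_server(host="127.0.0.1", port=0):
    """Builds the server; port 0 lets the operating system pick a free port."""
    return HTTPServer((host, port), LogsHandler)
```

Calling `make_server(port=8080).serve_forever()` starts the sketch on port 8080, after which a GET on `/logs` returns the sample events as a JSON array.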

Set up Observability Pipelines

- Navigate to Observability Pipelines.

- Select the Dual Ship Logs template to create a new pipeline.

- Select HTTP Client as the source.

Set up the source

To configure your HTTP/S Client source:

- Select your authorization strategy.

- Select the decoder you want to use on the HTTP messages. Logs pulled from the HTTP source must be in this format.

- Optionally, toggle the switch to enable TLS. If you enable TLS, the following certificate and key files are required.

Note: All file paths are made relative to the configuration data directory, which is `/var/lib/observability-pipelines-worker/config/` by default. See Advanced Configurations for more information. The file must be owned by the `observability-pipelines-worker` group and `observability-pipelines-worker` user, or at least readable by the group or user.

- Server Certificate Path: The path to the certificate file that has been signed by your Certificate Authority (CA) Root File in DER or PEM (X.509) format.

- CA Certificate Path: The path to the certificate file that is your Certificate Authority (CA) Root File in DER or PEM (X.509) format.

- Private Key Path: The path to the `.key` private key file that belongs to your Server Certificate Path in DER or PEM (PKCS#8) format.

- Enter the interval between scrapes.

- Your HTTP Server must be able to handle GET requests at this interval.

- Since requests run concurrently, if a scrape takes longer than the interval given, a new scrape is started, which can consume extra resources. Set the timeout to a value lower than the scrape interval to prevent this from happening.

- Enter the timeout for each scrape request.
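The relationship between the scrape interval and the timeout can be checked before you enter the values. The following is a small sketch, not part of the Worker; the function name `check_scrape_settings` and its warning messages are hypothetical.

```python
def check_scrape_settings(interval_secs, timeout_secs):
    """Returns a list of warnings for a scrape interval/timeout pair."""
    warnings = []
    if interval_secs <= 0 or timeout_secs <= 0:
        warnings.append("interval and timeout must be positive")
    elif timeout_secs >= interval_secs:
        # A scrape that outlives the interval overlaps with the next one.
        warnings.append(
            "timeout is not lower than the scrape interval; "
            "slow scrapes may overlap and consume extra resources"
        )
    return warnings
```

For example, `check_scrape_settings(15, 5)` returns an empty list, while `check_scrape_settings(10, 10)` returns one warning because a slow scrape could still be running when the next one starts.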

Set up the destinations

Enter the following information based on your selected logs destinations.

- Optionally, enter the name of the Amazon OpenSearch index. See template syntax if you want to route logs to different indexes based on specific fields in your logs.

- Select an authentication strategy, Basic or AWS. For AWS, enter the AWS region.

- Optionally, toggle the switch to enable Buffering Options.

Note: Buffering options is in Preview. Contact your account manager to request access.

- If left disabled, the maximum size for buffering is 500 events.

- If enabled:

- Select the buffer type you want to set (Memory or Disk).

- Enter the buffer size and select the unit.

Prerequisites

- Follow the Getting Started with Amazon Security Lake guide to set up Amazon Security Lake, and make sure to:

- Enable Amazon Security Lake for the AWS account.

- Select the AWS regions where S3 buckets will be created for OCSF data.

- Follow Collecting data from custom sources in Security Lake to create a custom source in Amazon Security Lake.

- When you configure a custom log source in Security Lake in the AWS console:

- Enter a source name.

- Select the OCSF event class for the log source and type.

- Enter the account details for the AWS account that will write logs to Amazon Security Lake:

- AWS account ID

- External ID

- Select Create and use a new service for service access.

- Take note of the name of the bucket that is created because you need it when you set up the Amazon Security Lake destination later on.

- To find the bucket name, navigate to Custom Sources. The bucket name is in the location for your custom source. For example, if the location is `s3://aws-security-data-lake-us-east-2-qjh9pr8hy/ext/op-api-activity-test`, the bucket name is `aws-security-data-lake-us-east-2-qjh9pr8hy`.
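As a sketch of how the bucket name relates to the custom source location described above, the bucket is the host portion of the `s3://` URL. The helper name `bucket_from_location` is hypothetical.

```python
from urllib.parse import urlparse


def bucket_from_location(location):
    """Extracts the bucket name from an s3:// custom source location."""
    parsed = urlparse(location)
    if parsed.scheme != "s3" or not parsed.netloc:
        raise ValueError(f"not an S3 location: {location!r}")
    # The bucket is the first component after the scheme.
    return parsed.netloc
```

With the example location from this page, the function returns `aws-security-data-lake-us-east-2-qjh9pr8hy`.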

Set up the destination

- Enter your S3 bucket name.

- Enter the AWS region.

- Enter the custom source name.

- Optionally, select an AWS authentication option.

- Enter the ARN of the IAM role you want to assume.

- Optionally, enter the assumed role session name and external ID.

- Optionally, toggle the switch to enable TLS. If you enable TLS, the following certificate and key files are required.

Note: All file paths are made relative to the configuration data directory, which is `/var/lib/observability-pipelines-worker/config/` by default. See Advanced Configurations for more information. The file must be owned by the `observability-pipelines-worker` group and `observability-pipelines-worker` user, or at least readable by the group or user.

- Server Certificate Path: The path to the certificate file that has been signed by your Certificate Authority (CA) Root File in DER or PEM (X.509) format.

- CA Certificate Path: The path to the certificate file that is your Certificate Authority (CA) Root File in DER or PEM (X.509) format.

- Private Key Path: The path to the `.key` private key file that belongs to your Server Certificate Path in DER or PEM (PKCS#8) format.

- Optionally, toggle the switch to enable Buffering Options.

Note: Buffering options is in Preview. Contact your account manager to request access.

- If left disabled, the maximum size for buffering is 500 events.

- If enabled:

- Select the buffer type you want to set (Memory or Disk).

- Enter the buffer size and select the unit.
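The TLS note above says that certificate and key paths are relative to the configuration data directory and must be readable. A rough pre-flight check might look like the sketch below. The helper names are hypothetical, and the readability test only reflects the user running the script, not necessarily the `observability-pipelines-worker` user.

```python
import os

# Default configuration data directory named in the note above.
DEFAULT_CONFIG_DIR = "/var/lib/observability-pipelines-worker/config/"


def resolve_tls_path(path, config_dir=DEFAULT_CONFIG_DIR):
    """Resolves a configured file path against the configuration data directory.

    An absolute path is returned as-is by os.path.join; how the Worker
    treats absolute paths is not covered by this sketch.
    """
    return os.path.normpath(os.path.join(config_dir, path))


def is_readable(path):
    """True if the path is an existing file readable by the current process."""
    return os.path.isfile(path) and os.access(path, os.R_OK)
```

For instance, `resolve_tls_path("certs/server.crt")` yields `/var/lib/observability-pipelines-worker/config/certs/server.crt` on a POSIX system, where `certs/server.crt` is an illustrative file name.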

Notes:

- When you add the Amazon Security Lake destination, the OCSF processor is automatically added so that you can convert your logs to Parquet before they are sent to Amazon Security Lake. See Remap to OCSF documentation for setup instructions.

- Only logs formatted by the OCSF processor are converted to Parquet.

To set up the Worker’s Google Chronicle destination:

- Enter the customer ID for your Google Chronicle instance.

- If you have a credentials JSON file, enter the path to your credentials JSON file. The credentials file must be placed under `DD_OP_DATA_DIR/config`. Alternatively, you can use the `GOOGLE_APPLICATION_CREDENTIALS` environment variable to provide the credential path.

- If you’re using workload identity on Google Kubernetes Engine (GKE), the `GOOGLE_APPLICATION_CREDENTIALS` is provided for you.

- The Worker uses standard Google authentication methods.

- Select JSON or Raw encoding in the dropdown menu.

- Enter the log type. See template syntax if you want to route logs to different log types based on specific fields in your logs.

- Optionally, toggle the switch to enable Buffering Options.

Note: Buffering options is in Preview. Contact your account manager to request access.

- If left disabled, the maximum size for buffering is 500 events.

- If enabled:

- Select the buffer type you want to set (Memory or Disk).

- Enter the buffer size and select the unit.

Note: Logs sent to the Google Chronicle destination must have ingestion labels. For example, if the logs are from an A10 load balancer, they must have the ingestion label `A10_LOAD_BALANCER`. See Google Cloud’s Support log types with a default parser for a list of available log types and their respective ingestion labels.

To use the CrowdStrike NG-SIEM destination, you need to set up a CrowdStrike data connector using the HEC/HTTP Event Connector. See Step 1: Set up the HEC/HTTP event data connector for instructions. When you set up the data connector, you are given a HEC API key and URL, which you use when you configure the Observability Pipelines Worker later on.

- Select JSON or Raw encoding in the dropdown menu.

- Optionally, enable compression and select an algorithm (gzip or zlib) in the dropdown menu.

- Optionally, toggle the switch to enable TLS. If you enable TLS, the following certificate and key files are required.

Note: All file paths are made relative to the configuration data directory, which is `/var/lib/observability-pipelines-worker/config/` by default. See Advanced Configurations for more information. The file must be owned by the `observability-pipelines-worker` group and `observability-pipelines-worker` user, or at least readable by the group or user.

- Server Certificate Path: The path to the certificate file that has been signed by your Certificate Authority (CA) Root File in DER or PEM (X.509) format.

- CA Certificate Path: The path to the certificate file that is your Certificate Authority (CA) Root File in DER or PEM (X.509) format.

- Private Key Path: The path to the `.key` private key file that belongs to your Server Certificate Path in DER or PEM (PKCS#8) format.

- Optionally, toggle the switch to enable Buffering Options.

Note: Buffering options is in Preview. Contact your account manager to request access.

- If left disabled, the maximum size for buffering is 500 events.

- If enabled:

- Select the buffer type you want to set (Memory or Disk).

- Enter the buffer size and select the unit.

- Optionally, toggle the switch to enable Buffering Options.

Note: Buffering options is in Preview. Contact your account manager to request access.

- If left disabled, the maximum size for buffering is 500 events.

- If enabled:

- Select the buffer type you want to set (Memory or Disk).

- Enter the buffer size and select the unit.

If the Worker is ingesting logs that are not coming from the Datadog Agent and are shipped to an archive using the Observability Pipelines Datadog Archives destination, those logs are not tagged with reserved attributes. In addition, logs rehydrated into Datadog will not have standard attributes mapped. This means that when you rehydrate your logs into Log Management, you may lose Datadog telemetry, the ability to search logs easily, and the benefits of unified service tagging if you do not structure and remap your logs in Observability Pipelines before routing your logs to an archive.

For example, say your syslogs are sent to Datadog Archives and those logs have the status tagged as `severity` instead of the reserved attribute of `status` and the host tagged as `host-name` instead of the reserved attribute `hostname`. When these logs are rehydrated in Datadog, the `status` for each log is set to `info` and none of the logs have a hostname tag.

If you do not have a Datadog Log Archive configured for Observability Pipelines, configure a Log Archive for your cloud provider (Amazon S3, Google Cloud Storage, or Azure Storage).

Note: You need to have the Datadog integration for your cloud provider installed to set up Datadog Log Archives. See the AWS integration, Google Cloud Platform, and Azure integration documentation for more information.

To set up the destination, follow the instructions for the cloud provider you are using to archive your logs.

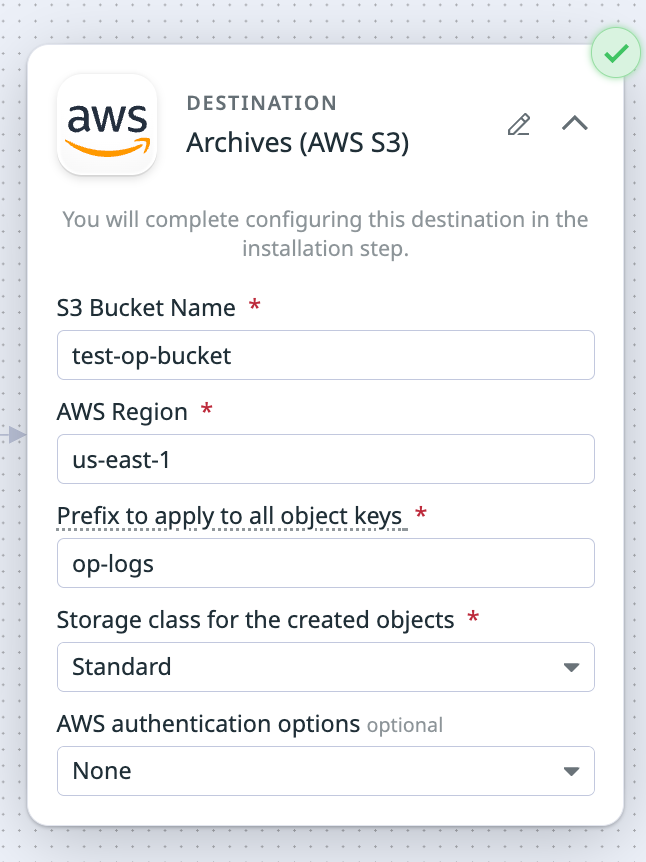

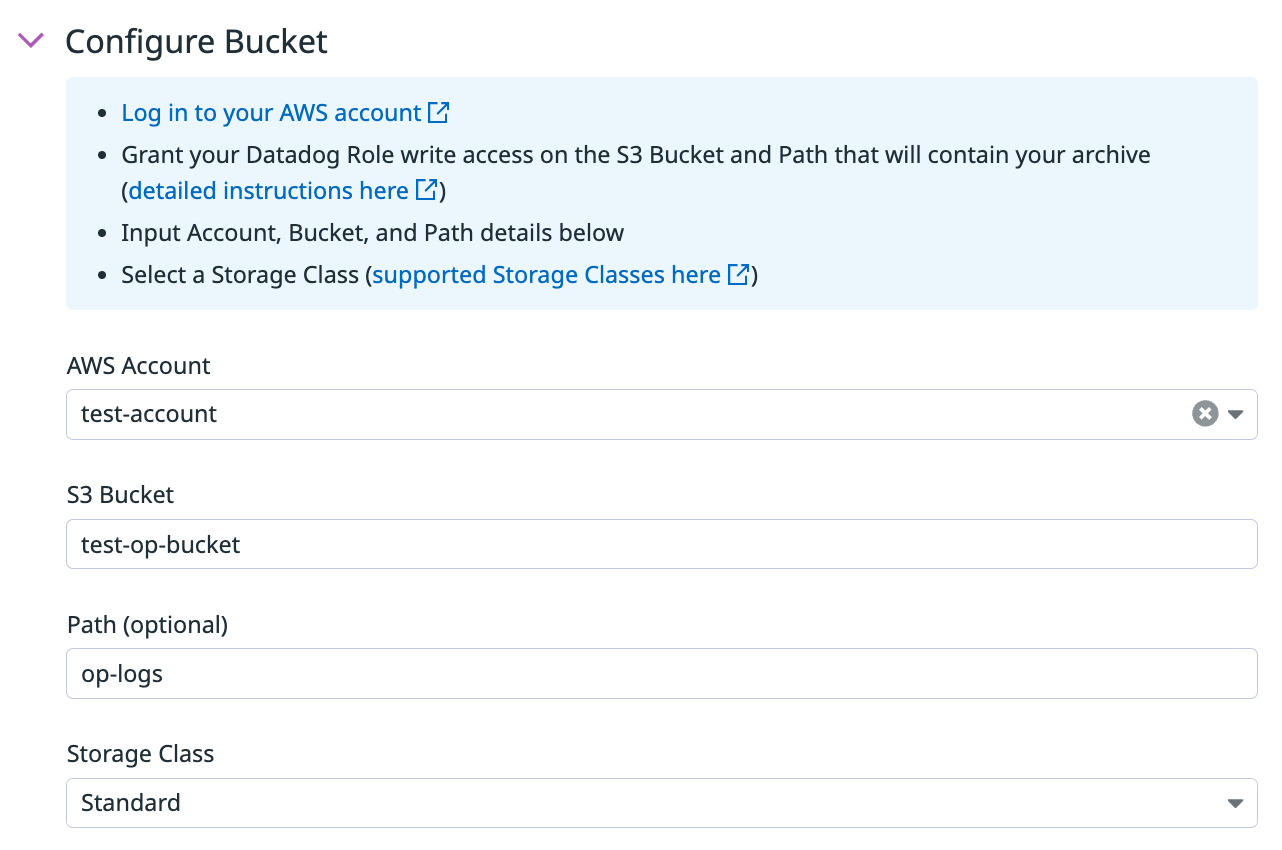

Amazon S3

- Enter your S3 bucket name. If you configured Log Archives, it’s the name of the bucket you created earlier.

- Enter the AWS region the S3 bucket is in.

- Enter the key prefix.

- Prefixes are useful for partitioning objects. For example, you can use a prefix as an object key to store objects under a particular directory. If using a prefix for this purpose, it must end in `/` to act as a directory path; a trailing `/` is not automatically added.

- See template syntax if you want to route logs to different object keys based on specific fields in your logs.

- Note: Datadog recommends that you start your prefixes with the directory name and without a leading slash (`/`). For example, `app-logs/` or `service-logs/`.

- Select the storage class for your S3 bucket in the Storage Class dropdown menu. If you are going to archive and rehydrate your logs:

- Note: Rehydration only supports the following storage classes:

- Standard

- Intelligent-Tiering, only if the optional asynchronous archive access tiers are both disabled.

- Standard-IA

- One Zone-IA

- If you wish to rehydrate from archives in another storage class, you must first move them to one of the supported storage classes above.

- See the Example destination and log archive setup section of this page for how to configure your Log Archive based on your Amazon S3 destination setup.

- Optionally, select an AWS authentication option. If you are only using the user or role you created earlier for authentication, do not select Assume role. The Assume role option should only be used if the user or role you created earlier needs to assume a different role to access the specific AWS resource and that permission has to be explicitly defined.

If you select Assume role:

- Enter the ARN of the IAM role you want to assume.

- Optionally, enter the assumed role session name and external ID.

- Note: The user or role you created earlier must have permission to assume this role so that the Worker can authenticate with AWS.

- Optionally, toggle the switch to enable Buffering Options.

Note: Buffering options is in Preview. Contact your account manager to request access.

- If left disabled, the maximum size for buffering is 500 events.

- If enabled:

- Select the buffer type you want to set (Memory or Disk).

- Enter the buffer size and select the unit.
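The key prefix guidance in the steps above (no leading slash, and a trailing `/` when the prefix is meant to act as a directory path) can be sketched as a small helper. The function name `normalize_prefix` is hypothetical, and the sketch assumes you want directory-style prefixes.

```python
def normalize_prefix(prefix):
    """Strips leading slashes and ensures exactly one trailing slash."""
    cleaned = prefix.strip().lstrip("/")
    if not cleaned:
        # An empty prefix stays empty rather than becoming "/".
        return ""
    return cleaned.rstrip("/") + "/"
```

For example, `normalize_prefix("/app-logs")` returns `app-logs/`, and an already well-formed value such as `service-logs/` is returned unchanged.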

Example destination and log archive setup

If you enter the following values for your Amazon S3 destination:

- S3 Bucket Name: `test-op-bucket`

- Prefix to apply to all object keys: `op-logs`

- Storage class for the created objects: `Standard`

Then these are the values you enter for configuring the S3 bucket for Log Archives:

- S3 bucket: `test-op-bucket`

- Path: `op-logs`

- Storage class: `Standard`

Google Cloud Storage

- Enter the name of your Google Cloud storage bucket. If you configured Log Archives, it’s the bucket you created earlier.

- If you have a credentials JSON file, enter the path to your credentials JSON file. If you configured Log Archives, it’s the credentials you downloaded earlier. The credentials file must be placed under `DD_OP_DATA_DIR/config`. Alternatively, you can use the `GOOGLE_APPLICATION_CREDENTIALS` environment variable to provide the credential path.

- If you’re using workload identity on Google Kubernetes Engine (GKE), the `GOOGLE_APPLICATION_CREDENTIALS` is provided for you.

- The Worker uses standard Google authentication methods.

- Select the storage class for the created objects.

- Select the access level of the created objects.

- Optionally, enter the prefix.

- Prefixes are useful for partitioning objects. For example, you can use a prefix as an object key to store objects under a particular directory. If using a prefix for this purpose, it must end in `/` to act as a directory path; a trailing `/` is not automatically added.

- See template syntax if you want to route logs to different object keys based on specific fields in your logs.

- Note: Datadog recommends that you start your prefixes with the directory name and without a leading slash (`/`). For example, `app-logs/` or `service-logs/`.

- Optionally, click Add Header to add metadata.

- Optionally, toggle the switch to enable Buffering Options.

Note: Buffering options is in Preview. Contact your account manager to request access.

- If left disabled, the maximum size for buffering is 500 events.

- If enabled:

- Select the buffer type you want to set (Memory or Disk).

- Enter the buffer size and select the unit.

Azure Storage

- Enter the name of the Azure container you created earlier.

- Optionally, enter a prefix.

- Prefixes are useful for partitioning objects. For example, you can use a prefix as an object key to store objects under a particular directory. If using a prefix for this purpose, it must end in `/` to act as a directory path; a trailing `/` is not automatically added.

- See template syntax if you want to route logs to different object keys based on specific fields in your logs.

- Note: Datadog recommends that you start your prefixes with the directory name and without a leading slash (`/`). For example, `app-logs/` or `service-logs/`.

- Optionally, toggle the switch to enable Buffering Options.

Note: Buffering options is in Preview. Contact your account manager to request access.

- If left disabled, the maximum size for buffering is 500 events.

- If enabled:

- Select the buffer type you want to set (Memory or Disk).

- Enter the buffer size and select the unit.

The following fields are optional:

- Enter the name for the Elasticsearch index. See template syntax if you want to route logs to different indexes based on specific fields in your logs.

- Enter the Elasticsearch version.

- Optionally, toggle the switch to enable Buffering Options.

Note: Buffering options is in Preview. Contact your account manager to request access.

- If left disabled, the maximum size for buffering is 500 events.

- If enabled:

- Select the buffer type you want to set (Memory or Disk).

- Enter the buffer size and select the unit.

Prerequisites

To set up the Microsoft Sentinel destination, you need to create a Workspace in Azure if you haven’t already. In that workspace:

- Add Microsoft Sentinel to the workspace.

- Create a Data Collection Endpoint (DCE).

- Create a Log Analytics Workspace in the workspace if you haven’t already.

- In the Log Analytics Workspace, navigate to Settings > Tables.

- Click + Create.

- Define a custom table (for example, `MyOPWLogs`).

- Notes:

- After the table is configured, the prefix `Custom-` and suffix `_CL` are automatically appended to the table name. For example, if you defined the table name in Azure to be `MyOPWLogs`, the full table name is stored as `Custom-MyOPWLogs_CL`. You must use the full table name when you set up the Observability Pipelines Microsoft Sentinel destination.

- The full table name can be found in the resource JSON of the DCR under `streamDeclarations`.

- You can also use an Azure Table instead of a custom table.

- Select New Custom Log (DCR-based).

- Click Create a new data collection rule and select the DCE you created earlier.

- Click Next.

- Upload a sample JSON Log. For this example, the following JSON is used for the Schema and Transformation, where `TimeGenerated` is required: `{ "TimeGenerated": "2024-07-22T11:47:51Z", "event": {} }`

- Click Create.

- In Azure, navigate to Microsoft Entra ID.

- Click Add > App Registration.

- Click Create.

- On the overview page, click Client credentials: Add a certificate or secret.

- Click New client secret.

- Enter a name for the secret and click Add. Note: Make sure to take note of the client secret, which gets obfuscated after 10 minutes.

- Also take note of the Tenant ID and Client ID. You need this information, along with the client secret, when you set up the Observability Pipelines Microsoft Sentinel destination.

- In Azure Portal’s Data Collection Rules page, search for and select the DCR you created earlier.

- Click Access Control (IAM) in the left nav.

- Click Add and select Add role assignment.

- Add the Monitoring Metrics Publisher role.

- On the Members page, select User, group, or service principal.

- Click Select Members and search for the application you created in the app registration step.

- Click Review + Assign. Note: It can take up to 10 minutes for the IAM change to take effect.

The table below summarizes the Azure and Microsoft Sentinel information you need when you set up the Observability Pipelines Microsoft Sentinel destination:

| Name | Description |
|---|---|
| Application (client) ID | The Azure Active Directory (AD) application’s client ID. See Register an application in Microsoft Entra ID for more information. Example: `550e8400-e29b-41d4-a716-446655440000` |
| Directory (tenant) ID | The Azure AD tenant ID. See Register an application in Microsoft Entra ID for more information. Example: `72f988bf-86f1-41af-91ab-2d7cd011db47` |
| Table (Stream) Name | The name of the stream which matches the table chosen when configuring the Data Collection Rule (DCR). Note: The full table name can be found in the resource JSON of the DCR under `streamDeclarations`. Example: `Custom-MyOPWLogs_CL` |
| Data Collection Rule (DCR) immutable ID | This is the immutable ID of the DCR where logging routes are defined. It is the Immutable ID shown on the DCR Overview page. Note: Ensure the Monitoring Metrics Publisher role is assigned in the DCR IAM settings. Example: `dcr-000a00a000a00000a000000aa000a0aa`. See Data collection rules (DCRs) in Azure Monitor to learn more about creating or viewing DCRs. |
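The naming rule for custom tables (prefix `Custom-` and suffix `_CL` added to the name you defined) can be sketched as follows. The helper name `full_table_name` is hypothetical, and the sketch applies only to custom tables, not to Azure Tables.

```python
def full_table_name(table_name):
    """Builds the full stream name for a custom table defined in Azure."""
    if table_name.startswith("Custom-") and table_name.endswith("_CL"):
        # Already in the full form; leave it unchanged.
        return table_name
    return f"Custom-{table_name}_CL"
```

For the example table on this page, `full_table_name("MyOPWLogs")` returns `Custom-MyOPWLogs_CL`, which is the value to enter in the destination settings.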

Set up the destination in Observability Pipelines

To set up the Microsoft Sentinel destination in Observability Pipelines:

- Enter the client ID for your application, such as `550e8400-e29b-41d4-a716-446655440000`.

- Enter the directory ID for your tenant, such as `72f988bf-86f1-41af-91ab-2d7cd011db47`. This is the Azure AD tenant ID.

- Enter the full table name to which you are sending logs. An example table name: `Custom-MyOPWLogs_CL`.

- Enter the Data Collection Rule (DCR) immutable ID, such as `dcr-000a00a000a00000a000000aa000a0aa`.

- Optionally, toggle the switch to enable Buffering Options.

Note: Buffering options is in Preview. Contact your account manager to request access.

- If left disabled, the maximum size for buffering is 500 events.

- If enabled:

- Select the buffer type you want to set (Memory or Disk).

- Enter the buffer size and select the unit.

- Select the data center region (US or EU) of your New Relic account.

- Optionally, toggle the switch to enable Buffering Options.

Note: Buffering options is in Preview. Contact your account manager to request access.

- If left disabled, the maximum size for buffering is 500 events.

- If enabled:

- Select the buffer type you want to set (Memory or Disk).

- Enter the buffer size and select the unit.

- Optionally, enter the name of the OpenSearch index. See template syntax if you want to route logs to different indexes based on specific fields in your logs.

- Optionally, toggle the switch to enable Buffering Options.

Note: Buffering options is in Preview. Contact your account manager to request access.

- If left disabled, the maximum size for buffering is 500 events.

- If enabled:

- Select the buffer type you want to set (Memory or Disk).

- Enter the buffer size and select the unit.

- Select your SentinelOne logs environment in the dropdown menu.

- Optionally, toggle the switch to enable Buffering Options.

Note: Buffering options is in Preview. Contact your account manager to request access.

- If left disabled, the maximum size for buffering is 500 events.

- If enabled:

- Select the buffer type you want to set (Memory or Disk).

- Enter the buffer size and select the unit.

- In the Mode dropdown menu, select the socket type to use.

- In the Encoding dropdown menu, select either `JSON` or `Raw message` as the output format.

- Optionally, toggle the switch to enable TLS. If you enable TLS, the following certificate and key files are required:

- Server Certificate Path: The path to the certificate file that has been signed by your Certificate Authority (CA) Root File in DER or PEM (X.509) format.

- CA Certificate Path: The path to the certificate file that is your Certificate Authority (CA) Root File in DER or PEM (X.509) format.

- Private Key Path: The path to the `.key` private key file that belongs to your Server Certificate Path in DER or PEM (PKCS#8) format.

- Optionally, toggle the switch to enable Buffering Options.

Note: Buffering options is in Preview. Contact your account manager to request access.

- If left disabled, the maximum size for buffering is 500 events.

- If enabled:

- Select the buffer type you want to set (Memory or Disk).

- Enter the buffer size and select the unit.

Observability Pipelines compresses logs with the gzip (level 6) algorithm.

The following fields are optional:

- Enter the name of the Splunk index you want your data in. This has to be an allowed index for your HEC. See template syntax if you want to route logs to different indexes based on specific fields in your logs.

- Select whether the timestamp should be auto-extracted. If set to `true`, Splunk extracts the timestamp from the message with the expected format of `yyyy-mm-dd hh:mm:ss`.

- Optionally, set the `sourcetype` to override Splunk’s default value, which is `httpevent` for HEC data. See template syntax if you want to route logs to different source types based on specific fields in your logs.

- Optionally, toggle the switch to enable Buffering Options.

Note: Buffering options is in Preview. Contact your account manager to request access.

- If left disabled, the maximum size for buffering is 500 events.

- If enabled:

- Select the buffer type you want to set (Memory or Disk).

- Enter the buffer size and select the unit.
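If you rely on timestamp auto-extraction, the message needs to carry a timestamp in the `yyyy-mm-dd hh:mm:ss` format mentioned above. The sketch below shows one way to produce such a message. It assumes a 24-hour clock and places the timestamp at the start of the message, which is an illustrative choice rather than a documented requirement; the helper name `with_timestamp` is hypothetical.

```python
from datetime import datetime

# strftime pattern assumed to correspond to yyyy-mm-dd hh:mm:ss (24-hour clock).
SPLUNK_TS_FORMAT = "%Y-%m-%d %H:%M:%S"


def with_timestamp(message, when):
    """Prefixes a log message with a timestamp in the expected format."""
    return f"{when.strftime(SPLUNK_TS_FORMAT)} {message}"
```

For example, `with_timestamp("job finished", datetime(2024, 7, 22, 11, 47, 51))` produces `2024-07-22 11:47:51 job finished`.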

The following fields are optional:

- In the Encoding dropdown menu, select whether you want to encode your pipeline’s output in `JSON`, `Logfmt`, or `Raw` text. If no encoding is selected, the encoding defaults to JSON.

- Enter a source name to override the default `name` value configured for your Sumo Logic collector’s source.

- Enter a host name to override the default `host` value configured for your Sumo Logic collector’s source.

- Enter a category name to override the default `category` value configured for your Sumo Logic collector’s source.

- Click Add Header to add any custom header fields and values.

- Optionally, toggle the switch to enable Buffering Options.

Note: Buffering options is in Preview. Contact your account manager to request access.

- If left disabled, the maximum size for buffering is 500 events.

- If enabled:

- Select the buffer type you want to set (Memory or Disk).

- Enter the buffer size and select the unit.

The rsyslog and syslog-ng destinations support the RFC5424 format.

The rsyslog and syslog-ng destinations match these log fields to the following Syslog fields:

| Log Event | Syslog Field | Default |
|---|---|---|
| `log["message"]` | MESSAGE | NIL |
| `log["procid"]` | PROCID | The running Worker’s process ID. |
| `log["appname"]` | APP-NAME | observability_pipelines |
| `log["facility"]` | FACILITY | 8 (log_user) |
| `log["msgid"]` | MSGID | NIL |
| `log["severity"]` | SEVERITY | info |
| `log["host"]` | HOSTNAME | NIL |
| `log["timestamp"]` | TIMESTAMP | Current UTC time. |
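To show how the field mapping and defaults in the table could combine into an RFC 5424 line, here is a rough sketch. It is not the Worker's implementation. It assumes that the default facility value 8 is the already-shifted `LOG_USER` constant (as in `syslog.h`), so the priority is facility plus severity, and that severities use the conventional names and codes listed in `SEVERITIES`. The function name `to_rfc5424` is hypothetical.

```python
import os
from datetime import datetime, timezone

# Conventional syslog severity codes (assumed naming).
SEVERITIES = {
    "emerg": 0, "alert": 1, "crit": 2, "err": 3,
    "warning": 4, "notice": 5, "info": 6, "debug": 7,
}
NIL = "-"  # RFC 5424 NILVALUE


def to_rfc5424(log, now=None, pid=None):
    """Formats a log event as an RFC 5424 line using the table's defaults."""
    now = now or datetime.now(timezone.utc)
    facility = int(log.get("facility", 8))  # assumed pre-shifted LOG_USER
    severity = SEVERITIES[log.get("severity", "info")]
    pri = facility + severity
    timestamp = log.get("timestamp") or now.strftime("%Y-%m-%dT%H:%M:%SZ")
    host = log.get("host", NIL)
    app = log.get("appname", "observability_pipelines")
    procid = log.get("procid", pid if pid is not None else os.getpid())
    msgid = log.get("msgid", NIL)
    msg = log.get("message", "")
    # Structured data is left as NIL in this sketch.
    line = f"<{pri}>1 {timestamp} {host} {app} {procid} {msgid} {NIL}"
    # The message part is optional in RFC 5424, so it is omitted when absent.
    return f"{line} {msg}" if msg else line
```

Under these assumptions, an event with only `message` and `host` set is emitted with priority 14 (user facility, info severity), the default app name, and NIL values for the remaining fields.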

The following destination settings are optional:

- Toggle the switch to enable TLS. If you enable TLS, the following certificate and key files are required:

- Server Certificate Path: The path to the certificate file that has been signed by your Certificate Authority (CA) Root File in DER or PEM (X.509) format.

- CA Certificate Path: The path to the certificate file that is your Certificate Authority (CA) Root File in DER or PEM (X.509) format.

- Private Key Path: The path to the `.key` private key file that belongs to your Server Certificate Path in DER or PEM (PKCS#8) format.

- Enter the number of seconds to wait before sending TCP keepalive probes on an idle connection.

- Optionally, toggle the switch to enable Buffering Options.

Note: Buffering options is in Preview. Contact your account manager to request access.

- If left disabled, the maximum size for buffering is 500 events.

- If enabled:

- Select the buffer type you want to set (Memory or Disk).

- Enter the buffer size and select the unit.

Add additional destinations

Click the plus sign (+) to the left of the destinations to add additional destinations to the same set of processors.

To delete a destination, click on the pencil icon to the top right of the destination, and select Delete destination. If you delete a destination from a processor group that has multiple destinations, only the deleted destination is removed. If you delete a destination from a processor group that only has one destination, both the destination and the processor group are removed.

Notes:

- A pipeline must have at least one destination. If a processor group only has one destination, that destination cannot be deleted.

- You can add a total of three destinations for a pipeline.

- A specific destination can only be added once. For example, you cannot add multiple Splunk HEC destinations.

Set up processors

There are pre-selected processors added to your processor group out of the box. You can add additional processors or delete any existing ones based on your processing needs.

Processor groups are executed from top to bottom. The order of the processors is important because logs are checked by each processor, but only logs that match the processor’s filters are processed. To modify the order of the processors, use the drag handle on the top left corner of the processor you want to move.

Filter query syntax

Each processor has a corresponding filter query in its fields. Processors only process logs that match their filter query. For all processors except the filter processor, logs that do not match the query are sent to the next step of the pipeline. For the filter processor, logs that do not match the query are dropped.

The following are filter query examples:

- `NOT (status:debug)`: This filters for logs that do not have the status `DEBUG`.

- `status:ok service:flask-web-app`: This filters for all logs with the status `OK` from your `flask-web-app` service.

- This query can also be written as: `status:ok AND service:flask-web-app`.

- `host:COMP-A9JNGYK OR host:COMP-J58KAS`: This filter query only matches logs from the labeled hosts.

- `user.status:inactive`: This filters for logs with the status `inactive` nested under the `user` attribute.

- `http.status:[200 TO 299]` or `http.status:{300 TO 399}`: These two filters represent the syntax to query a range for `http.status`. Ranges can be used across any attribute.

Learn more about writing filter queries in Observability Pipelines Search Syntax.

Add processors

Enter the information for the processors you want to use. Click the Add button to add additional processors. To delete a processor, click the kebab on the right side of the processor and select Delete.

Use this processor to add a field name and value of an environment variable to the log message.

To set up this processor:

- Define a filter query. Only logs that match the specified filter query are processed. All logs, regardless of whether they match the filter query, are sent to the next step in the pipeline.

- Enter the field name for the environment variable.

- Enter the environment variable name.

- Click Add Environment Variable if you want to add another environment variable.

Blocked environment variables

Environment variables that match any of the following patterns are blocked from being added to log messages because the environment variable could contain sensitive data.

- CONNECTIONSTRING / CONNECTION-STRING / CONNECTION_STRING

- AUTH

- CERT

- CLIENTID / CLIENT-ID / CLIENT_ID

- CREDENTIALS

- DATABASEURL / DATABASE-URL / DATABASE_URL

- DBURL / DB-URL / DB_URL

- KEY

- OAUTH

- PASSWORD

- PWD

- ROOT

- SECRET

- TOKEN

- USER

The environment variable is matched to the pattern and not the literal word. For example, PASSWORD blocks environment variables like USER_PASSWORD and PASSWORD_SECRET from getting added to the log messages.
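The pattern matching described above can be illustrated with a minimal Python sketch. It assumes a plain substring check against the uppercased variable name; the Worker's actual matching logic is not shown in this document, and `is_blocked` is a hypothetical helper name.

```python
# Patterns listed in the text above.
BLOCKED_PATTERNS = [
    "CONNECTIONSTRING", "CONNECTION-STRING", "CONNECTION_STRING",
    "AUTH", "CERT",
    "CLIENTID", "CLIENT-ID", "CLIENT_ID",
    "CREDENTIALS",
    "DATABASEURL", "DATABASE-URL", "DATABASE_URL",
    "DBURL", "DB-URL", "DB_URL",
    "KEY", "OAUTH", "PASSWORD", "PWD", "ROOT", "SECRET", "TOKEN", "USER",
]


def is_blocked(env_var_name: str) -> bool:
    """Return True if the name contains any blocked pattern.

    Assumption: matching is a substring check on the uppercased name.
    """
    name = env_var_name.upper()
    return any(pattern in name for pattern in BLOCKED_PATTERNS)
```

Under this assumption, `is_blocked("USER_PASSWORD")` and `is_blocked("PASSWORD_SECRET")` are both true, which mirrors the example in the text, while a name such as `AWS_REGION` is not blocked.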

Allowlist

After you have added processors to your pipeline and clicked Next: Install, in the Add environment variable processor(s) allowlist field, enter a comma-separated list of environment variables you want to pull values from and use with this processor.

The allowlist is stored in the environment variable DD_OP_PROCESSOR_ADD_ENV_VARS_ALLOWLIST.

This processor adds a field with the name of the host that sent the log. For example, hostname: 613e197f3526. Note: If the hostname already exists, the Worker throws an error and does not overwrite the existing hostname.

To set up this processor:

- Define a filter query. Only logs that match the specified filter query are processed. All logs, regardless of whether they do or do not match the filter query, are sent to the next step in the pipeline.

Use this processor with Vector Remap Language (VRL) to modify and enrich your logs. VRL is an expression-oriented, domain-specific language designed for transforming logs. It features a simple syntax and built-in functions for observability use cases. You can use custom functions in the following ways:

- Manipulate arrays, strings, and other data types.

- Encode and decode values using Codec.

- Encrypt and decrypt values.

- Coerce one datatype to another datatype (for example, from an integer to a string).

- Convert syslog values to readable values.

- Enrich values by using enrichment tables.

- Manipulate IP values.

- Parse values with custom rules (for example, grok, regex, and so on) and out-of-the-box functions (for example, syslog, apache, VPC flow logs, and so on).

- Manipulate event metadata and paths.

See Custom functions for the full list of available functions.

See Remap Reserved Attributes on how to use the Custom Processor to manually and dynamically remap attributes.

To set up this processor:

- If you have not created any functions yet, click Add custom processor and follow the instructions in Add a function to create a function.

- If you have already added custom functions, click Manage custom processors. Click on a function in the list to edit or delete it. You can use the search bar to find a function by its name. Click Add Custom Processor to add a function.

Add a function

- Enter a name for your custom processor.

- Add your script to modify your logs using custom functions. You can also click Autofill with Example and select one of the common use cases to get started. Click the copy icon for the example script and paste it into your script. See Get Started with the Custom Processor for more information.

- Optionally, check Drop events on error if you want to drop events that encounter an error during processing.

- Enter a sample log event.

- Click Run to preview how the functions process the log. After the script has run, you can see the output for the log.

- Click Save.

The deduplicate processor removes copies of data to reduce volume and noise. It caches 5,000 messages at a time and compares your incoming logs traffic against the cached messages. For example, this processor can be used to keep only unique warning logs in the case where multiple identical warning logs are sent in succession.

To set up the deduplicate processor:

- Define a filter query. Only logs that match the specified filter query are processed. Deduped logs and logs that do not match the filter query are sent to the next step in the pipeline.

- In the Type of deduplication dropdown menu, select whether you want to Match on or Ignore the fields specified below.

  - If Match is selected, then after a log passes through, future logs that have the same values for all of the fields you specify below are removed.

  - If Ignore is selected, then after a log passes through, future logs that have the same values for all of their fields, except the ones you specify below, are removed.

- Enter the fields you want to match on, or ignore. At least one field is required, and you can specify a maximum of three fields.

  - Use the path notation <OUTER_FIELD>.<INNER_FIELD> to match subfields. See the Path notation example below.

- Click Add field to add additional fields you want to filter on.
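The difference between the Match and Ignore modes can be sketched in Python. This is an illustration only, not the Worker's implementation: it assumes top-level fields, a first-in-first-out cache eviction policy (the text only states the cache size of 5,000), and hypothetical function names.

```python
import json
from collections import OrderedDict

CACHE_SIZE = 5000  # cache size stated in the text


def dedupe_key(log: dict, fields: list, mode: str) -> str:
    """Build the comparison key for a log under the chosen mode."""
    if mode == "match":
        # Compare only the listed fields.
        subset = {f: log.get(f) for f in fields}
    elif mode == "ignore":
        # Compare every field except the listed ones.
        subset = {k: v for k, v in log.items() if k not in fields}
    else:
        raise ValueError(f"unknown mode: {mode}")
    return json.dumps(subset, sort_keys=True, default=str)


def deduplicate(logs, fields, mode="match", cache_size=CACHE_SIZE):
    """Keep the first log for each key and drop later duplicates."""
    seen = OrderedDict()
    kept = []
    for log in logs:
        key = dedupe_key(log, fields, mode)
        if key in seen:
            continue  # duplicate of a cached log, so it is removed
        seen[key] = True
        if len(seen) > cache_size:
            seen.popitem(last=False)  # assumption: evict the oldest entry
        kept.append(log)
    return kept
```

For example, matching on `status` and `msg` drops a second warning with the same status and message even when its timestamp differs. Ignoring only the timestamp field gives the same result for logs that have no other fields.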

Path notation example

For the following message structure:

{

"outer_key": {

"inner_key": "inner_value",

"a": {

"double_inner_key": "double_inner_value",

"b": "b value"

},

"c": "c value"

},

"d": "d value"

}

- Use outer_key.inner_key to refer to the key with the value inner_value.

- Use outer_key.a.double_inner_key to refer to the key with the value double_inner_value.
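As a rough illustration of how dotted path notation resolves against the message structure above, the following Python sketch walks a nested dictionary one key at a time. The helper name `get_by_path` is hypothetical and is not part of the Worker.

```python
from typing import Any


def get_by_path(event: dict, path: str) -> Any:
    """Resolve a dotted path such as outer_key.inner_key; None if absent."""
    current: Any = event
    for key in path.split("."):
        if not isinstance(current, dict) or key not in current:
            return None
        current = current[key]
    return current


# The message structure from the example above.
message = {
    "outer_key": {
        "inner_key": "inner_value",
        "a": {
            "double_inner_key": "double_inner_value",
            "b": "b value",
        },
        "c": "c value",
    },
    "d": "d value",
}
```

Each path segment names a key one level deeper, so `outer_key.a.b` resolves to the value stored under `b` inside `a`, and `d` resolves to the top-level field.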

The remap processor can add, drop, or rename fields within your individual log data. Use this processor to enrich your logs with additional context, remove low-value fields to reduce volume, and standardize naming across important attributes. Select add field, drop field, or rename field in the dropdown menu to get started.

See the Remap Reserved Attributes guide on how to use the Edit Fields processor to remap attributes.

Add field

Use add field to append a new key-value field to your log.

To set up the add field processor:

- Define a filter query. Only logs that match the specified filter query are processed. All logs, regardless of whether they do or do not match the filter query, are sent to the next step in the pipeline.

- Enter the field and value you want to add. To specify a nested field for your key, use the path notation: <OUTER_FIELD>.<INNER_FIELD>. All values are stored as strings. Note: If the field you want to add already exists, the Worker throws an error and the existing field remains unchanged.

Drop field

Use drop field to drop a field from logging data that matches the filter you specify below. It can delete objects, so you can use the processor to drop nested keys.

To set up the drop field processor:

- Define a filter query. Only logs that match the specified filter query are processed. All logs, regardless of whether they do or do not match the filter query, are sent to the next step in the pipeline.

- Enter the key of the field you want to drop. To specify a nested field for your specified key, use the path notation: <OUTER_FIELD>.<INNER_FIELD>. Note: If your specified key does not exist, the log is left unchanged.

Rename field

Use rename field to rename a field within your log.

To set up the rename field processor:

- Define a filter query. Only logs that match the specified filter query are processed. All logs, regardless of whether they do or do not match the filter query, are sent to the next step in the pipeline.

- Enter the name of the field you want to rename in the Source field. To specify a nested field for your key, use the path notation: <OUTER_FIELD>.<INNER_FIELD>. Once renamed, your original field is deleted unless you enable the Preserve source tag checkbox described below. Note: If the source key you specify doesn’t exist, a default null value is applied to your target.

- In the Target field, enter the name you want the source field to be renamed to. To specify a nested field for your specified key, use the path notation: <OUTER_FIELD>.<INNER_FIELD>. Note: If the target field you specify already exists, the Worker throws an error and does not overwrite the existing target field.

- Optionally, check the Preserve source tag box if you want to retain the original source field and duplicate the information from your source key to your specified target key. If this box is not checked, the source key is dropped after it is renamed.
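The rename rules above (null for a missing source, an error for an existing target, and the optional preserved source) can be summarized in a short Python sketch. It handles top-level keys only, and `rename_field` is a hypothetical name used for illustration rather than a Worker API.

```python
def rename_field(log: dict, source: str, target: str,
                 preserve_source: bool = False) -> dict:
    """Return a copy of the log with source renamed to target."""
    if target in log:
        # Mirrors the note above: an existing target is not overwritten.
        raise KeyError(f"target field already exists: {target}")
    result = dict(log)
    # A missing source yields a null (None) value on the target.
    result[target] = log.get(source)
    if not preserve_source:
        result.pop(source, None)  # the source key is dropped after renaming
    return result
```

With `preserve_source=True`, both the source and the target keys hold the same value in the returned log.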

Path notation example

For the following message structure:

{

"outer_key": {

"inner_key": "inner_value",

"a": {

"double_inner_key": "double_inner_value",

"b": "b value"

},

"c": "c value"

},

"d": "d value"

}

- Use outer_key.inner_key to refer to the key with the value inner_value.

- Use outer_key.a.double_inner_key to refer to the key with the value double_inner_value.

Use this processor to enrich your logs with information from a reference table, which could be a local file or database.

To set up the enrichment table processor:

- Define a filter query. Only logs that match the specified filter query are processed. All logs, regardless of whether they do or do not match the filter query, are sent to the next step in the pipeline.

- Enter the source attribute of the log. The source attribute’s value is what you want to find in the reference table.

- Enter the target attribute. The target attribute’s value stores, as a JSON object, the information found in the reference table.

- Select the type of reference table you want to use, File or GeoIP.

  - For the File type:

    - Enter the file path. Note: All file paths are made relative to the configuration data directory, which is /var/lib/observability-pipelines-worker/config/ by default. See Advanced Configurations for more information. The file must be owned by the observability-pipelines-worker group and observability-pipelines-worker user, or at least readable by the group or user.

    - Enter the column name. The column name in the enrichment table is used for matching the source attribute value. See the Enrichment file example.

  - For the GeoIP type, enter the GeoIP path.

Enrichment file example

For this example, merchant_id is used as the source attribute and merchant_info as the target attribute.

This is the example reference table that the enrichment processor uses:

| merch_id | merchant_name | city | state |

|---|---|---|---|

| 803 | Andy’s Ottomans | Boise | Idaho |

| 536 | Cindy’s Couches | Boulder | Colorado |

| 235 | Debra’s Benches | Las Vegas | Nevada |

merch_id is set as the column name the processor uses to find the source attribute’s value. Note: The source attribute’s value does not have to match the column name.

If the enrichment processor receives a log with "merchant_id":"536":

- The processor looks for the value 536 in the reference table’s merch_id column.

- After it finds the value, it adds the entire row of information from the reference table to the merchant_info attribute as a JSON object:

merchant_info {

"merchant_name":"Cindy's Couches",

"city":"Boulder",

"state":"Colorado"

}
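The lookup in this example can be approximated in Python with the standard csv module. This is a sketch under assumptions: the reference table is inlined as CSV text instead of being read from the configured file path, the matched column is left out of the result to mirror the output shown above, logs without a match are passed through unchanged, and the function names are hypothetical.

```python
import csv
import io

# The example reference table, inlined as CSV text for illustration.
REFERENCE_CSV = """merch_id,merchant_name,city,state
803,Andy's Ottomans,Boise,Idaho
536,Cindy's Couches,Boulder,Colorado
235,Debra's Benches,Las Vegas,Nevada
"""


def load_table(csv_text: str, column: str) -> dict:
    """Index the reference table rows by the value of the given column."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return {row[column]: row for row in reader}


def enrich(log: dict, table: dict, source: str, target: str, column: str) -> dict:
    """Attach the matching row to the target attribute, if one is found."""
    if source not in log:
        return dict(log)
    row = table.get(str(log[source]))
    if row is None:
        return dict(log)  # assumption: unmatched logs pass through unchanged
    enriched = dict(log)
    # Assumption: the matched column is omitted, as in the output above.
    enriched[target] = {k: v for k, v in row.items() if k != column}
    return enriched
```

Calling `enrich` with `merchant_id` as the source, `merchant_info` as the target, and `merch_id` as the column reproduces the shape of the JSON object shown above for the value 536.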

This processor filters for logs that match the specified filter query and drops all non-matching logs. If a log is dropped at this processor, then none of the processors below this one receives that log. This processor can filter out unnecessary logs, such as debug or warning logs.

To set up the filter processor:

- Define a filter query. The query you specify filters for and passes on only logs that match it, dropping all other logs.

Many types of logs are meant to be used for telemetry to track trends, such as KPIs, over long periods of time. Generating metrics from your logs is a cost-effective way to summarize log data from high-volume logs, such as CDN logs, VPC flow logs, firewall logs, and network logs. Use the generate metrics processor to generate either a count metric of logs that match a query or a distribution metric of a numeric value contained in the logs, such as a request duration.

Note: The metrics generated are custom metrics and billed accordingly. See Custom Metrics Billing for more information.

To set up the processor:

Click Manage Metrics to create new metrics or edit existing metrics. This opens a side panel.

- If you have not created any metrics yet, enter the metric parameters as described in the Add a metric section to create a metric.

- If you have already created metrics, click on the metric’s row in the overview table to edit or delete it. Use the search bar to find a specific metric by its name, and then select the metric to edit or delete it. Click Add Metric to add another metric.

Add a metric

- Enter a filter query. Only logs that match the specified filter query are processed. All logs, regardless of whether they match the filter query, are sent to the next step in the pipeline. Note: Since a single processor can generate multiple metrics, you can define a different filter query for each metric.

- Enter a name for the metric.

- In the Define parameters section, select the metric type (count, gauge, or distribution). See the Count metric example and Distribution metric example. Also see Metrics Types for more information.

- For gauge and distribution metric types, select a log field which has a numeric (or parseable numeric string) value that is used for the value of the generated metric.

- For the distribution metric type, the log field’s value can be an array of (parseable) numerics, which is used for the generated metric’s sample set.

- The Group by field determines how the metric values are grouped together. For example, if you have hundreds of hosts spread across four regions, grouping by region allows you to graph one line for every region. The fields listed in the Group by setting are set as tags on the configured metric.

- Click Add Metric.

Metrics Types

You can generate these types of metrics for your logs. See the Metrics Types and Distributions documentation for more details.

| Metric type | Description | Example |

|---|---|---|

| COUNT | Represents the total number of event occurrences in one time interval. This value can be reset to zero, but cannot be decreased. | You want to count the number of logs with status:error. |

| GAUGE | Represents a snapshot of events in one time interval. | You want to measure the latest CPU utilization per host for all logs in the production environment. |

| DISTRIBUTION | Represents the global statistical distribution of a set of values calculated across your entire distributed infrastructure in one time interval. | You want to measure the average time it takes for an API call to be made. |

Count metric example

For this status:error log example:

{"status": "error", "env": "prod", "host": "ip-172-25-222-111.ec2.internal"}

To create a count metric that counts the number of logs that contain "status":"error" and groups them by env and host, enter the following information:

| Input parameters | Value |

|---|---|

| Filter query | @status:error |

| Metric name | status_error_total |

| Metric type | Count |

| Group by | env, host |
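To make the grouping concrete, here is a small Python sketch of what a count metric grouped by env and host computes. It is an illustration only: the status check stands in for the filter query, and `count_metric` is a hypothetical name.

```python
from collections import Counter


def count_metric(logs, status, group_by):
    """Count logs with the given status, keyed by the group-by field values."""
    counts = Counter()
    for log in logs:
        if log.get("status") != status:
            continue  # stands in for the filter query
        key = tuple(log.get(field) for field in group_by)
        counts[key] += 1
    return counts
```

Each distinct combination of env and host gets its own count, which corresponds to the tags set on the generated metric.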

Distribution metric example

For this example of an API response log:

{

"timestamp": "2018-10-15T17:01:33Z",

"method": "GET",

"status": 200,

"request_body": "{\"information\"}",

"response_time_seconds": 10

}

To create a distribution metric that measures the average time it takes for an API call to be made, enter the following information:

| Input parameters | Value |

|---|---|

| Filter query | @method |

| Metric name | status_200_response |

| Metric type | Distribution |

| Select a log attribute | response_time_seconds |

| Group by | method |
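The following Python sketch shows how the sample set for such a distribution metric could be gathered. It follows the rules in the Add a metric section (numeric values, parseable numeric strings, or arrays of them), but it is only an approximation of the processor's behavior, and `collect_samples` is a hypothetical name.

```python
from collections import defaultdict
from statistics import mean


def collect_samples(logs, value_field, group_by):
    """Gather numeric samples per group for a distribution metric."""
    samples = defaultdict(list)
    for log in logs:
        if value_field not in log:
            continue
        raw = log[value_field]
        values = raw if isinstance(raw, list) else [raw]
        try:
            numbers = [float(v) for v in values]
        except (TypeError, ValueError):
            continue  # assumption: logs with unparseable values are skipped
        key = tuple(log.get(field) for field in group_by)
        samples[key].extend(numbers)
    return samples
```

Aggregations such as the average can then be computed per group, for example `mean(samples[("GET",)])` for all samples tagged with the GET method.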

This processor parses logs using the grok parsing rules that are available for a set of sources. The rules are automatically applied to logs based on the log source. Therefore, logs must have a source field with the source name. If this field is not added when the log is sent to the Observability Pipelines Worker, you can use the Add field processor to add it.

If the source field of a log matches one of the grok parsing rule sets, the log’s message field is checked against those rules. If a rule matches, the resulting parsed data is added in the message field as a JSON object, overwriting the original message.

If there isn’t a source field on the log, or no rule matches the log message, then no changes are made to the log and it is sent to the next step in the pipeline.

Datadog’s Grok implementation differs from standard Grok. It provides:

- Matchers that include options for how you define parsing rules

- Filters for post-processing of extracted data

- A set of built-in patterns tailored to common log formats

See Parsing for more information on Datadog’s Grok patterns.

To set up the grok parser, define a filter query. Only logs that match the specified filter query are processed. All logs, regardless of whether they match the filter query, are sent to the next step in the pipeline.

To test log samples for out-of-the-box rules:

- Click the Preview Library Rules button.

- Search or select a source in the dropdown menu.

- Enter a log sample to test the parsing rules for that source.

To add a custom parsing rule:

- Click Add Custom Rule.

- If you want to clone a library rule, select Clone library rule and then the library source from the dropdown menu.

- If you want to create a custom rule, select Custom and then enter the source. The parsing rules are applied to logs with that source.

- Enter log samples to test the parsing rules.

- Enter the rules for parsing the logs. See Parsing for more information on writing parsing rules with Datadog Grok patterns. Note: The url, useragent, and csv filters are not available.

- Click Advanced Settings if you want to add helper rules. See Using helper rules to factorize multiple parsing rules for more information.

- Click Add Rule.

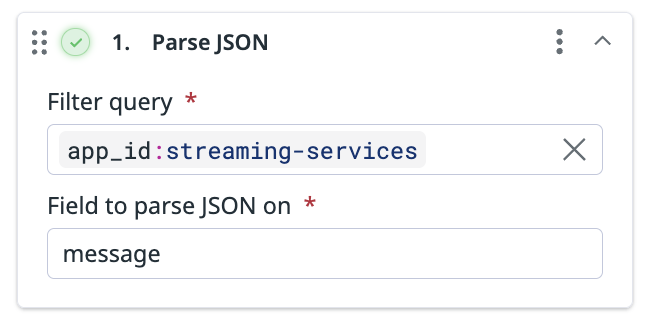

This processor parses the specified JSON field into objects. For example, if you have a message field that contains stringified JSON:

{

"foo": "bar",

"team": "my-team",

"message": "{\"level\":\"info\",\"timestamp\":\"2024-01-15T10:30:00Z\",\"service\":\"user-service\",\"user_id\":\"12345\",\"action\":\"login\",\"success\":true,\"ip_address\":\"192.168.1.100\"}",

"app_id":"streaming-services",

"ddtags": [

"kube_service:my-service",

"k8_deployment :your-host"

]

}

Use the Parse JSON processor to parse the message field so the message field has all the attributes within a nested object.

This output contains the message field with the parsed JSON:

{

"foo": "bar",

"team": "my-team",

"message": {

"action": "login",

"ip_address": "192.168.1.100",

"level": "info",

"service": "user-service",

"success": true,

"timestamp": "2024-01-15T10:30:00Z",

"user_id": "12345"

},

"app_id":"streaming-services",

"ddtags": [

"kube_service:my-service",

"k8_deployment :your-host"

]

}

To set up this processor:

- Define a filter query. Only logs that match the specified filter query are processed. All logs, regardless of whether they do or do not match the filter query, are sent to the next step in the pipeline.

- Enter the name of the field you want to parse JSON on.

Note: The parsed JSON overwrites what was originally contained in the field.
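The effect of this processor on a stringified field can be sketched with Python's json module. The sketch assumes that a field which is missing, not a string, or not valid JSON is left unchanged, which this document does not specify, and `parse_json_field` is a hypothetical name.

```python
import json


def parse_json_field(log: dict, field: str) -> dict:
    """Replace a stringified JSON field with its parsed value."""
    raw = log.get(field)
    if not isinstance(raw, str):
        return dict(log)
    try:
        parsed = json.loads(raw)
    except json.JSONDecodeError:
        return dict(log)  # assumption: invalid JSON leaves the log unchanged
    result = dict(log)
    result[field] = parsed  # the parsed value overwrites the original string
    return result


sample = {
    "foo": "bar",
    "message": '{"level":"info","action":"login","success":true}',
}
parsed_sample = parse_json_field(sample, "message")
```

After the call, `parsed_sample["message"]` is a nested object rather than a string, and the other fields are untouched.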

This processor parses Extensible Markup Language (XML) so the data can be processed and sent to different destinations. XML is a log format used to store and transport structured data. It is organized in a tree-like structure to represent nested information and uses tags and attributes to define the data. For example, this is XML data using only tags (<recipe>, <type>, and <name>) and no attributes:

<recipe>

<type>pasta</type>

<name>Carbonara</name>

</recipe>

This is an XML example where the tag recipe has the attribute type:

<recipe type="pasta">

<name>Carbonara</name>

</recipe>

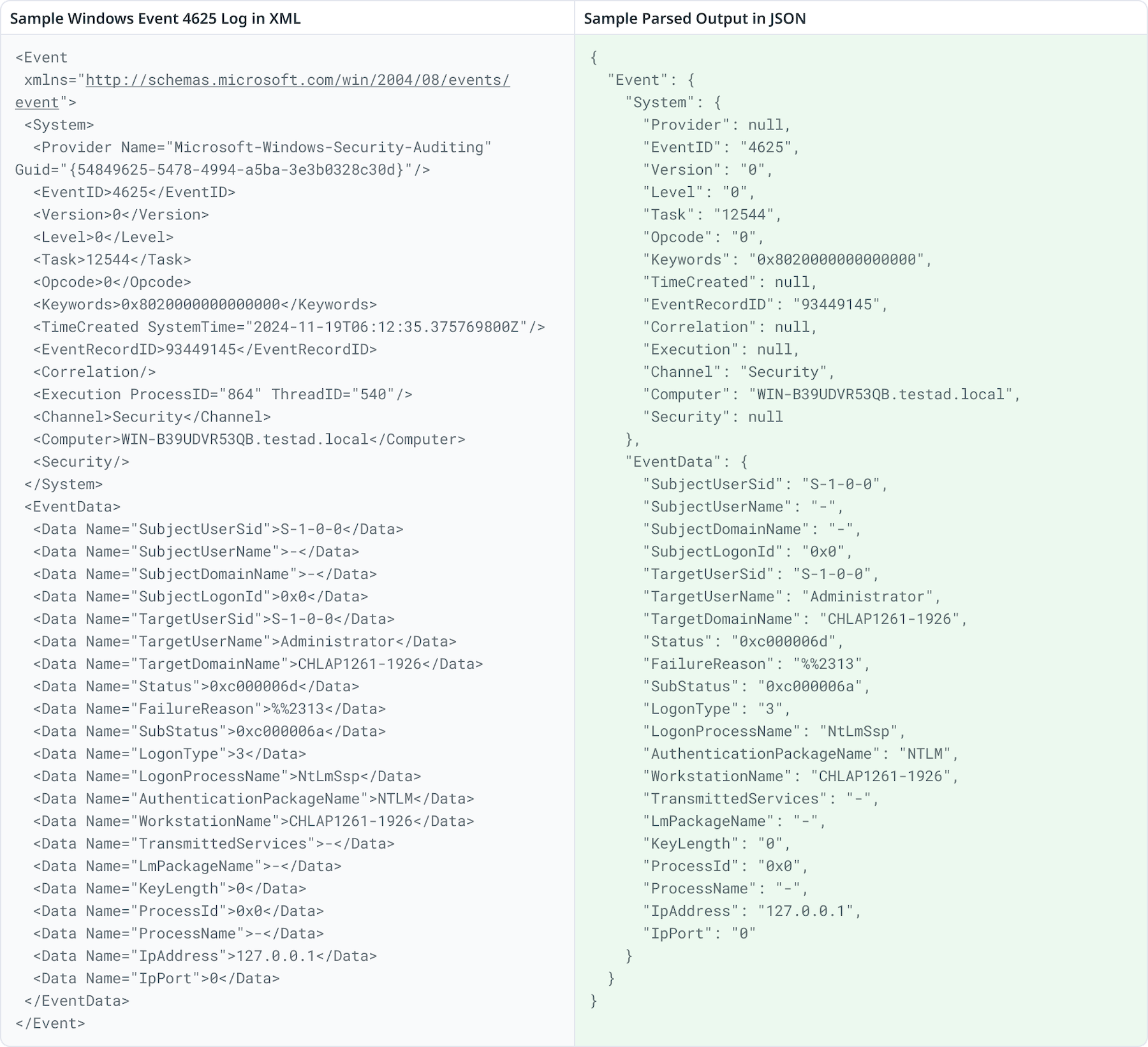

The following image shows a Windows Event 4625 log in XML, next to the same log parsed and output in JSON. By parsing the XML log, the size of the log event was reduced by approximately 30%.

To set up this processor:

- Define a filter query. Only logs that match the specified filter query are processed. All logs, regardless of whether they match the filter query, are sent to the next step in the pipeline.

- Enter the path to the log field on which you want to parse XML. Use the path notation <OUTER_FIELD>.<INNER_FIELD> to match subfields. See the Path notation example below.

- Optionally, in the Enter text key field, input the key name to use for the text node when XML attributes are appended. See the text key example. If the field is left empty, value is used as the key name.

- Optionally, select Always use text key if you want to store text inside an object using the text key even when no attributes exist.

- Optionally, toggle Include XML attributes on if you want to include XML attributes. You can then choose to add the attribute prefix you want to use. See attribute prefix example. If the field is left empty, the original attribute key is used.

- Optionally, select if you want to convert data types into numbers, Booleans, or nulls.

  - If Numbers is selected, numbers are parsed as integers and floats.

  - If Booleans is selected, true and false are parsed as Booleans.

  - If Nulls is selected, the string null is parsed as null.

Path notation example

For the following message structure:

{

"outer_key": {

"inner_key": "inner_value",

"a": {

"double_inner_key": "double_inner_value",

"b": "b value"

},

"c": "c value"

},

"d": "d value"

}

- Use outer_key.inner_key to refer to the key with the value inner_value.

- Use outer_key.a.double_inner_key to refer to the key with the value double_inner_value.

Always use text key example

If Always use text key is selected, the text key is the default (value), and you have the following XML:

<recipe type="pasta">

<name>Carbonara</name>

</recipe>

The XML is converted to:

{

"recipe": {

"type": "pasta",

"value": "Carbonara"

}

}

Text key example

If the key is text and you have the following XML:

<recipe type="pasta">

<name>Carbonara</name>

</recipe>

The XML is converted to:

{

"recipe": {

"type": "pasta",

"text": "Carbonara"

}

}

Attribute prefix example

If you enable Include XML attributes, the attribute prefix is added to the key of each XML attribute. For example, if the attribute prefix is @ and you have the following XML:

<recipe type="pasta">Carbonara</recipe>

Then it is converted to the JSON:

{

"recipe": {

"@type": "pasta",

"<text key>": "Carbonara"

}

}
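The interaction between the text key, the attribute prefix, and the Always use text key option can be sketched with Python's standard xml.etree.ElementTree module. This sketch only handles a single element with text and attributes (no child elements), and its handling of each option is an assumption based on the descriptions above, not the Worker's implementation. `element_to_dict` is a hypothetical name.

```python
import xml.etree.ElementTree as ET


def element_to_dict(xml_text, text_key="value", attr_prefix="",
                    include_attrs=True, always_use_text_key=False):
    """Convert one leaf XML element into a JSON-like dict."""
    elem = ET.fromstring(xml_text)
    attrs = {}
    if include_attrs:
        # Prefix each attribute key, for example type becomes @type.
        attrs = {attr_prefix + k: v for k, v in elem.attrib.items()}
    text = (elem.text or "").strip()
    if attrs or always_use_text_key:
        # Text is stored in an object under the text key.
        body = dict(attrs)
        body[text_key] = text
    else:
        # Assumption: without attributes, the text is kept as a plain string.
        body = text
    return {elem.tag: body}
```

With the prefix set to @ and the default text key, the element from the attribute prefix example yields an object with the keys @type and value under recipe.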

The quota processor measures the logging traffic for logs that match the filter you specify. When the configured daily quota is met inside the 24-hour rolling window, the processor can either keep or drop additional logs, or send them to a storage bucket. For example, you can configure this processor to drop new logs or trigger an alert without dropping logs after the processor has received 10 million events from a certain service in the last 24 hours.

You can also use field-based partitioning, such as by service, env, or status. Each unique field value uses a separate quota bucket with its own daily quota limit. See Partition example for more information.

Note: The pipeline uses the name of the quota to identify the same quota across multiple Remote Configuration deployments of the Worker.

Limits

- Each pipeline can have up to 1000 buckets. If you need to increase the bucket limit, contact support.

- The quota processor is synchronized across all Workers in a Datadog organization. For the synchronization, there is a default rate limit of 50 Workers per organization. When there are more than 50 Workers for an organization:

- The processor continues to run, but does not sync correctly with the other Workers, which can result in logs being sent after the quota limit has been reached.

- The Worker prints Failed to sync quota state errors.

- Contact support if you want to increase the default number of Workers per organization.

- The quota processor periodically synchronizes counts across Workers a few times per minute. The limit set on the processor can therefore be overshot, depending on the number of Workers and the logs throughput. Datadog recommends setting a limit that is at least one order of magnitude higher than the volume of logs that the processor is expected to receive per minute. You can use a throttle processor with the quota processor to control these short bursts by limiting the number of logs allowed per minute.

To set up the quota processor:

- Enter a name for the quota processor.

- Define a filter query. Only logs that match the specified filter query are counted towards the daily limit.

- Logs that match the quota filter and are within the daily quota are sent to the next step in the pipeline.

- Logs that do not match the quota filter are sent to the next step of the pipeline.

- In the Unit for quota dropdown menu, select if you want to measure the quota by the number of Events or by the Volume in bytes.

- Set the daily quota limit and select the unit of magnitude for your desired quota.

- Optional: Click Add Field if you want to set a quota on a specific service or region field.

  a. Enter the field name you want to partition by. See the Partition example for more information.

    i. Select Ignore when missing if you want the quota applied only to events that match the partition. See the Ignore when missing example for more information.

    ii. Optional: Click Overrides if you want to set different quotas for the partitioned field.

      - Click Download as CSV for an example of how to structure the CSV.

      - Drag and drop your overrides CSV to upload it. You can also click Browse to select the file to upload it. See the Overrides example for more information.

  b. Click Add Field if you want to add another partition.

- In the When quota is met dropdown menu, select if you want to drop events, keep events, or send events to overflow destination when the quota has been met.

  - If you select send events to overflow destination, an overflow destination is added with the following cloud storage options: Amazon S3, Azure Blob, and Google Cloud.

  - Select the cloud storage you want to send overflow logs to. See the setup instructions for your cloud storage: Amazon S3, Azure Blob Storage, or Google Cloud Storage.

Examples

Partition example

Use Partition by if you want to set a quota on a specific service or region. For example, if you want to set a quota for 10 events per day and group the events by the service field, enter service into the Partition by field.

Example for the “ignore when missing” option

Select Ignore when missing if you want the quota applied only to events that match the partition. For example, if the Worker receives the following set of events:

{"service":"a", "source":"foo", "message": "..."}

{"service":"b", "source":"bar", "message": "..."}

{"service":"b", "message": "..."}

{"source":"redis", "message": "..."}

{"message": "..."}

If Ignore when missing is selected, then the Worker:

- creates a set for logs with service:a and source:foo

- creates a set for logs with service:b and source:bar

- ignores the last three events

The quota is applied to the two sets of logs and not to the last three events.

If Ignore when missing is not selected, the quota is applied to all five events.
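The partitioning behavior in this example can be sketched in Python, assuming the events are partitioned by both service and source. How events with missing fields are bucketed when Ignore when missing is not selected is not described here, so the sketch assumes the missing value is represented as None in the partition key. The function names are hypothetical.

```python
from collections import Counter

# The five events from the example above.
EVENTS = [
    {"service": "a", "source": "foo", "message": "..."},
    {"service": "b", "source": "bar", "message": "..."},
    {"service": "b", "message": "..."},
    {"source": "redis", "message": "..."},
    {"message": "..."},
]


def partition_key(event, fields, ignore_when_missing):
    """Return the quota bucket key, or None if the event is ignored."""
    if ignore_when_missing and any(f not in event for f in fields):
        return None
    # Assumption: a missing field becomes None in the key.
    return tuple(event.get(f) for f in fields)


def count_by_partition(events, fields, ignore_when_missing):
    """Count events per partition and the number of ignored events."""
    counts = Counter()
    ignored = 0
    for event in events:
        key = partition_key(event, fields, ignore_when_missing)
        if key is None:
            ignored += 1
        else:
            counts[key] += 1
    return counts, ignored
```

With Ignore when missing selected, only the first two events are counted against a quota, each in its own bucket. Without it, all five events are counted.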

Overrides example

If you are partitioning by service and have two services: a and b, you can use overrides to apply different quotas for them. For example, if you want service:a to have a quota limit of 5,000 bytes and service:b to have a limit of 50 events, the override rules look like this:

| Service | Type | Limit |

|---|---|---|

| a | Bytes | 5,000 |

| b | Events | 50 |

The reduce processor groups multiple log events into a single log, based on the fields specified and the merge strategies selected. Logs are grouped at 10-second intervals. After the interval has elapsed for the group, the reduced log for that group is sent to the next step in the pipeline.

To set up the reduce processor:

- Define a filter query. Only logs that match the specified filter query are processed. Reduced logs and logs that do not match the filter query are sent to the next step in the pipeline.

- In the Group By section, enter the field you want to group the logs by.

- Click Add Group by Field to add additional fields.

- In the Merge Strategy section:

- In On Field, enter the name of the field you want to merge the logs on.

- Select the merge strategy in the Apply dropdown menu. This is the strategy used to combine events. See the following Merge strategies section for descriptions of the available strategies.

- Click Add Merge Strategy to add additional strategies.

Merge strategies

These are the available merge strategies for combining log events.

| Name | Description |

|---|---|

| Array | Appends each value to an array. |

| Concat | Concatenates each string value, delimited with a space. |

| Concat newline | Concatenates each string value, delimited with a newline. |

| Concat raw | Concatenates each string value, without a delimiter. |

| Discard | Discards all values except the first value that was received. |

| Flat unique | Creates a flattened array of all unique values that were received. |

| Longest array | Keeps the longest array that was received. |

| Max | Keeps the maximum numeric value that was received. |

| Min | Keeps the minimum numeric value that was received. |

| Retain | Discards all values except the last value that was received. Works as a way to coalesce by not retaining `null`. |

| Shortest array | Keeps the shortest array that was received. |

| Sum | Sums all numeric values that were received. |
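The merge strategies in the table can be sketched in Python as functions over the list of values received for a field. This illustration ignores the 10-second grouping window and assumes that fields without a merge strategy keep the value from the first log in the group, which this document does not specify. The strategy identifiers such as `concat_newline` and the function names are hypothetical.

```python
def _flat_unique(values):
    """Flatten arrays and keep each distinct value once, in arrival order."""
    out = []
    for value in values:
        for item in (value if isinstance(value, list) else [value]):
            if item not in out:
                out.append(item)
    return out


def _retain(values):
    """Keep the last non-null value."""
    non_null = [v for v in values if v is not None]
    return non_null[-1] if non_null else None


MERGE_STRATEGIES = {
    "array": list,
    "concat": " ".join,
    "concat_newline": "\n".join,
    "concat_raw": "".join,
    "discard": lambda values: values[0],
    "flat_unique": _flat_unique,
    "longest_array": lambda values: max(values, key=len),
    "max": max,
    "min": min,
    "retain": _retain,
    "shortest_array": lambda values: min(values, key=len),
    "sum": sum,
}


def reduce_logs(logs, group_by, merge):
    """Group logs by the group-by fields and merge each group into one log."""
    groups = {}
    for log in logs:
        key = tuple(log.get(field) for field in group_by)
        groups.setdefault(key, []).append(log)
    reduced = []
    for members in groups.values():
        merged = dict(members[0])  # assumption: other fields keep the first value
        for field, strategy in merge.items():
            values = [m[field] for m in members if field in m]
            if values:
                merged[field] = MERGE_STRATEGIES[strategy](values)
        reduced.append(merged)
    return reduced
```

For example, grouping by host with `{"msg": "concat", "bytes": "sum"}` produces one log per host whose msg is the space-delimited concatenation of the messages and whose bytes is their total.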

Use this processor to remap logs to Open Cybersecurity Schema Framework (OCSF) events. OCSF schema event classes are set for a specific log source and type. You can add multiple mappings to one processor. Note: Datadog recommends that the OCSF processor be the last processor in your pipeline, so that remapping is done after the logs have been processed by all the other processors.

To set up this processor:

Click Manage mappings. This opens a modal:

- If you have already added mappings, click on a mapping in the list to edit or delete it. You can use the search bar to find a mapping by its name. Click Add Mapping if you want to add another mapping. Select Library Mapping or Custom Mapping and click Continue.

- If you have not added any mappings yet, select Library Mapping or Custom Mapping. Click Continue.

Library mapping

Add a mapping

- Select the log type in the dropdown menu.

- Define a filter query. Only logs that match the specified filter query are remapped. All logs, regardless of whether they do or do not match the filter query, are sent to the next step in the pipeline.

- Review the sample source log and the resulting OCSF output.

- Click Save Mapping.

Library mappings

These are the library mappings available:

| Log Source | Log Type | OCSF Category | Supported OCSF versions |
|---|---|---|---|
| AWS CloudTrail | Type: Management, EventName: ChangePassword | Account Change (3001) | 1.3.0, 1.1.0 |
| Google Cloud Audit | SetIamPolicy | Account Change (3001) | 1.3.0, 1.1.0 |
| Google Cloud Audit | CreateSink | Account Change (3001) | 1.3.0, 1.1.0 |
| Google Cloud Audit | UpdateSync | Account Change (3001) | 1.3.0, 1.1.0 |
| Google Cloud Audit | CreateBucket | Account Change (3001) | 1.3.0, 1.1.0 |
| GitHub | Create User | Account Change (3001) | 1.1.0 |
| Google Workspace Admin | addPrivilege | User Account Management (3005) | 1.1.0 |
| Okta | User session start | Authentication (3002) | 1.1.0 |
| Microsoft 365 Defender | Incident | Incident Finding (2005) | 1.3.0, 1.1.0 |
| Palo Alto Networks | Traffic | Network Activity (4001) | 1.1.0 |

Custom mapping

When you set up a custom mapping, if you try to close or exit the modal, you are prompted to export your mapping. Datadog recommends that you export your mapping to save what you have set up so far. The exported mapping is saved as a JSON file.

To set up a custom mapping:

- Optionally, add a name for the mapping. The default name is `Custom Authentication`.

- Define a filter query. Only logs that match the specified filter query are remapped. All logs, regardless of whether they match the filter query, are sent to the next step in the pipeline.

- Select the OCSF event category from the dropdown menu.

- Select the OCSF event class from the dropdown menu.

- Enter a log sample so that you can reference it when you add fields.

- Click Continue.

- Select any OCSF profiles that you want to add. See OCSF Schema Browser for more information.

- All required fields are shown. Enter the required Source Logs Fields and Fallback Values for them. If you want to manually add additional fields, click + Field. Click the trash can icon to delete a field. Note: Required fields cannot be deleted.

- The fallback value is used for the OCSF field if the log doesn’t have the source log field.

- You can add multiple fields for Source Log Fields. For example, Okta’s `user.system.start` logs have either the `eventType` or `legacyEventType` field. You can map both fields to the same OCSF field.

- If you have your own OCSF mappings in JSON or saved a previous mapping that you want to use, click Import Configuration File.

- Click Continue.

- Some log source values must be mapped to OCSF values. For example, the values of a source log’s severity field that is mapped to the OCSF `severity_id` field must be mapped to the OCSF `severity_id` values. See `severity_id` in Authentication for a list of OCSF values. An example of mapping severity values:

| Log source value | OCSF value |
|---|---|
| `INFO` | `Informational` |
| `WARN` | `Medium` |
| `ERROR` | `High` |

- All values that are required to be mapped to an OCSF value are listed. Click + Add Row if you want to map additional values.

- Click Save Mapping.
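The field and value mapping steps above can be pictured with a short Python sketch. It is an illustration under stated assumptions, not the processor's implementation: `first_present`, `remap`, the choice of `activity_name` as the target OCSF field, and the fallback values are all hypothetical. The numeric `severity_id` values follow the OCSF schema (1 = Informational, 3 = Medium, 4 = High, 0 = Unknown).

```python
# Value mapping from the example: INFO -> Informational, WARN -> Medium, ERROR -> High,
# expressed as OCSF severity_id numbers.
SEVERITY_VALUE_MAP = {"INFO": 1, "WARN": 3, "ERROR": 4}


def first_present(log, source_fields, fallback=None):
    """Return the value of the first source field found in the log, else the fallback."""
    for field in source_fields:
        if field in log:
            return log[field]
    return fallback


def remap(log):
    """Build a partial OCSF-style event from a source log (hypothetical target fields)."""
    # Two source fields map to the same OCSF field, as in the Okta example.
    event_type = first_present(log, ["eventType", "legacyEventType"], fallback="unknown")
    raw_severity = first_present(log, ["severity"], fallback="INFO")
    return {
        "activity_name": event_type,
        # Source values without a mapping become 0 (Unknown); this is an assumption.
        "severity_id": SEVERITY_VALUE_MAP.get(raw_severity, 0),
    }
```

A log that only carries `legacyEventType` still populates the target field, and a log with neither field gets the fallback value.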

Filter query syntax

Each processor has a corresponding filter query in its fields. Processors only process logs that match their filter query. For all processors except the filter processor, logs that do not match the query are sent to the next step of the pipeline. For the filter processor, logs that do not match the query are dropped.

The following are filter query examples:

- `NOT (status:debug)`: This filters for logs that do not have the status `DEBUG`.

- `status:ok service:flask-web-app`: This filters for all logs with the status `OK` from your `flask-web-app` service. This query can also be written as: `status:ok AND service:flask-web-app`.

- `host:COMP-A9JNGYK OR host:COMP-J58KAS`: This filter query only matches logs from the labeled hosts.

- `user.status:inactive`: This filters for logs with the status `inactive` nested under the `user` attribute.

- `http.status:[200 TO 299]` or `http.status:{300 TO 399}`: These two filters represent the syntax to query a range for `http.status`. Ranges can be used across any attribute.

Learn more about writing filter queries in Observability Pipelines Search Syntax.
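To make the boolean and nested-attribute examples concrete, here is a rough Python equivalent of four of the queries, written as predicates over a log represented as a dictionary. The function names are hypothetical, the comparison is exact string equality (the Worker's case handling is not modeled), and the range queries are left out because the text does not specify endpoint behavior.

```python
def get_path(log, path):
    """Look up a dotted attribute path such as 'user.status'; None if absent."""
    current = log
    for key in path.split("."):
        if not isinstance(current, dict) or key not in current:
            return None
        current = current[key]
    return current


def not_debug(log):
    # NOT (status:debug)
    return get_path(log, "status") != "debug"


def ok_flask(log):
    # status:ok service:flask-web-app (implicit AND)
    return get_path(log, "status") == "ok" and get_path(log, "service") == "flask-web-app"


def labeled_hosts(log):
    # host:COMP-A9JNGYK OR host:COMP-J58KAS
    return get_path(log, "host") in {"COMP-A9JNGYK", "COMP-J58KAS"}


def inactive_user(log):
    # user.status:inactive (status nested under the user attribute)
    return get_path(log, "user.status") == "inactive"
```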

This processor samples your logging traffic for a representative subset at the rate that you define, dropping the remaining logs. As an example, you can use this processor to sample 20% of logs from a noisy non-critical service.

The sampling only applies to logs that match your filter query and does not impact other logs. If a log is dropped at this processor, none of the processors below receives that log.

To set up the sample processor:

- Define a filter query. Only logs that match the specified filter query are sampled at the specified retention rate below. The sampled logs and the logs that do not match the filter query are sent to the next step in the pipeline.

- Enter your desired sampling rate in the Retain field. For example, entering `2` means 2% of logs are retained out of all the logs that match the filter query.

- Optionally, enter a Group By field to create separate sampling groups for each unique value for that field. For example, `status:error` and `status:info` are two unique field values. Each bucket of events with the same field value is sampled independently. Click Add Field if you want to add more fields to partition by. See the group-by example.

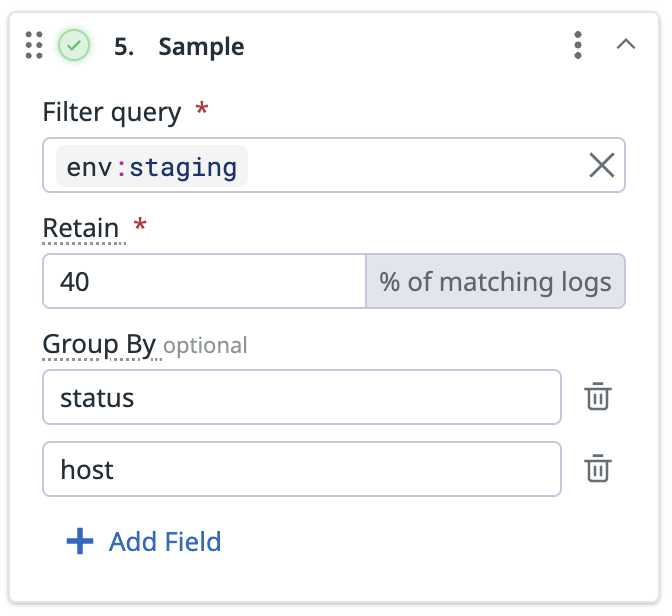

Group-by example

If you have the following setup for the sample processor:

- Filter query: `env:staging`

- Retain: `40%` of matching logs

- Group by: `status` and `service`

Then, 40% of logs for each unique combination of `status` and `service` from `env:staging` are retained. For example:

- 40% of logs with `status:info` and `service:networks` are retained.

- 40% of logs with `status:info` and `service:core-web` are retained.

- 40% of logs with `status:error` and `service:networks` are retained.

- 40% of logs with `status:error` and `service:core-web` are retained.
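The group-by behavior can be sketched in Python. This is a minimal illustration, not the Worker's algorithm (which is not described here): the `sample` function is hypothetical, it uses a deterministic per-group counter rather than random selection, and it assumes an integer retain percentage. Logs that do not match the filter query (`env:staging`) pass through untouched.

```python
from collections import defaultdict


def sample(logs, retain_percent=40, group_by=("status", "service")):
    """Yield logs, keeping retain_percent% of matching logs within each group."""
    seen = defaultdict(int)  # number of matching logs seen so far, per group
    for log in logs:
        if log.get("env") != "staging":
            # Does not match the filter query: sent on without sampling.
            yield log
            continue
        key = tuple(log.get(field) for field in group_by)
        n = seen[key]
        seen[key] = n + 1
        # Keep the log whenever the running quota for this group increases.
        if ((n + 1) * retain_percent) // 100 > (n * retain_percent) // 100:
            yield log
```

Because each unique `(status, service)` combination has its own counter, a quiet group is sampled at the same rate as a noisy one instead of being crowded out.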

The Sensitive Data Scanner processor scans logs to detect and redact or hash sensitive information such as PII, PCI, and custom sensitive data. You can pick from Datadog’s library of predefined rules, or input custom Regex rules to scan for sensitive data.

To set up the processor:

- Define a filter query. Only logs that match the specified filter query are scanned and processed. All logs are sent to the next step in the pipeline, regardless of whether they match the filter query.

- Click Add Scanning Rule.

- Select one of the following:

Add rules from the library

- In the dropdown menu, select the library rule you want to use.

- Recommended keywords are automatically added based on the library rule selected. After the scanning rule has been added, you can add additional keywords or remove recommended keywords.

- In the Define rule target and action section, select if you want to scan the Entire Event, Specific Attributes, or Exclude Attributes in the dropdown menu.

- If you are scanning the entire event, you can optionally exclude specific attributes from getting scanned. Use path notation (`outer_key.inner_key`) to access nested keys. For specified attributes with nested data, all nested data is excluded.

- If you are scanning specific attributes, specify which attributes you want to scan. Use path notation (`outer_key.inner_key`) to access nested keys. For specified attributes with nested data, all nested data is scanned.

- For Define actions on match, select the action you want to take for the matched information. Note: Redaction, partial redaction, and hashing are all irreversible actions.

- Redact: Replaces all matching values with the text you specify in the Replacement text field.

- Partially Redact: Replaces a specified portion of all matched data. In the Redact section, specify the number of characters you want to redact and which part of the matched data to redact.

- Hash: Replaces all matched data with a unique identifier. The UTF-8 bytes of the match are hashed with the 64-bit fingerprint of FarmHash.

- Optionally, click Add Field to add tags you want to associate with the matched events.

- Add a name for the scanning rule.

- Optionally, add a description for the rule.

- Click Save.
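The three actions on match can be compared side by side with a small Python sketch. The function names, the `*` mask character, and the default replacement text are hypothetical. Note in particular that the hash below uses BLAKE2b truncated to 64 bits from the standard library as a stand-in; it is not FarmHash and does not produce the same identifiers as the processor.

```python
import hashlib


def redact(match, replacement="[REDACTED]"):
    """Redact: replace the whole match with the replacement text."""
    return replacement


def partially_redact(match, count, from_start=True, mask="*"):
    """Partially Redact: mask `count` characters at the start or end of the match."""
    count = min(count, len(match))
    if from_start:
        return mask * count + match[count:]
    return match[: len(match) - count] + mask * count


def hash_match(match):
    """Hash: replace the match with a 64-bit identifier of its UTF-8 bytes.

    Stand-in only: BLAKE2b with an 8-byte digest, not FarmHash.
    """
    return hashlib.blake2b(match.encode("utf-8"), digest_size=8).hexdigest()
```

All three functions discard information from the original value, which is why the actions are irreversible.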

Path notation example

For the following message structure:

    {
        "outer_key": {
            "inner_key": "inner_value",
            "a": {
                "double_inner_key": "double_inner_value",
                "b": "b value"
            },
            "c": "c value"
        },
        "d": "d value"
    }

- Use `outer_key.inner_key` to refer to the key with the value `inner_value`.

- Use `outer_key.a.double_inner_key` to refer to the key with the value `double_inner_value`.
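As a quick check of how path notation walks the structure, the following Python sketch resolves a dotted path against the message above. The `resolve` helper is hypothetical and only illustrates the lookup; it is not part of the product.

```python
def resolve(event, path):
    """Follow a dotted path (for example 'outer_key.inner_key') through nested dicts."""
    current = event
    for key in path.split("."):
        if not isinstance(current, dict) or key not in current:
            raise KeyError(path)
        current = current[key]
    return current


event = {
    "outer_key": {
        "inner_key": "inner_value",
        "a": {"double_inner_key": "double_inner_value", "b": "b value"},
        "c": "c value",
    },
    "d": "d value",
}
```

Resolving `outer_key.a` returns the whole nested object, which matches the rule that specifying an attribute with nested data covers all of its nested data.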

Add additional keywords

After adding scanning rules from the library, you can edit each rule separately and add additional keywords to the keyword dictionary.

- Navigate to your pipeline.

- In the Sensitive Data Scanner processor with the rule you want to edit, click Manage Scanning Rules.

- Toggle Use recommended keywords if you want the rule to use them. Otherwise, add your own keywords to the Create keyword dictionary field. You can also require that these keywords be within a specified number of characters of a match. By default, keywords must be within 30 characters before a matched value.

- Click Update.
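The keyword proximity check described above can be approximated in Python as follows. This is a sketch under assumptions: `find_matches` is a hypothetical helper, keywords are compared case-insensitively, a keyword counts only if it lies entirely within the window before the match, and Python's `re` module stands in for the scanner's regex engine.

```python
import re


def find_matches(text, pattern, keywords, window=30):
    """Return regex matches that have a keyword within `window` characters before them."""
    results = []
    lowered = text.lower()
    for match in re.finditer(pattern, text):
        # Only the characters immediately preceding the match are inspected.
        preceding = lowered[max(0, match.start() - window) : match.start()]
        if any(keyword.lower() in preceding for keyword in keywords):
            results.append(match.group())
    return results
```

For a sixteen-digit pattern with the keywords `visa`, `credit`, and `card`, the text `visa card: 4111111111111111` produces a match, while the same number after `order id` does not.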

Add a custom rule

- In the Define match conditions section, specify the regex pattern to use for matching against events in the Define the regex field. Enter sample data in the Add sample data field to verify that your regex pattern is valid.

Sensitive Data Scanner supports Perl Compatible Regular Expressions (PCRE), but the following patterns are not supported:

- Backreferences and capturing sub-expressions (lookarounds)

- Arbitrary zero-width assertions

- Subroutine references and recursive patterns

- Conditional patterns

- Backtracking control verbs

- The `\C` “single-byte” directive (which breaks UTF-8 sequences)

- The `\R` newline match

- The `\K` start of match reset directive

- Callouts and embedded code

- Atomic grouping and possessive quantifiers

- For Create keyword dictionary, add keywords to refine detection accuracy when matching regex conditions. For example, if you are scanning for a sixteen-digit Visa credit card number, you can add keywords like `visa`, `credit`, and `card`. You can also require that these keywords be within a specified number of characters of a match. By default, keywords must be within 30 characters before a matched value.

- In the Define rule target and action section, select if you want to scan the Entire Event, Specific Attributes, or Exclude Attributes in the dropdown menu.

- If you are scanning the entire event, you can optionally exclude specific attributes from getting scanned. Use path notation (`outer_key.inner_key`) to access nested keys. For specified attributes with nested data, all nested data is excluded.

- If you are scanning specific attributes, specify which attributes you want to scan. Use path notation (`outer_key.inner_key`) to access nested keys. For specified attributes with nested data, all nested data is scanned.

- For Define actions on match, select the action you want to take for the matched information. Note: Redaction, partial redaction, and hashing are all irreversible actions.

- Redact: Replaces all matching values with the text you specify in the Replacement text field.