(LEGACY) Advanced Configurations

This guide is for large-scale production-level deployments.

Multiple aggregator deployments

As covered in Networking, Datadog recommends starting with one Observability Pipelines Worker aggregator per region. This keeps your initial deployment of Observability Pipelines Worker simple, but there are circumstances where starting with multiple deployments is ideal:

Prevent sending data over the public internet. If you have multiple clouds and regions, deploy the Observability Pipelines Worker aggregator in each of them to prevent sending large amounts of data over the internet. Your Observability Pipelines Worker aggregator should receive internal data and serve as the single point of egress for your network.

Independent management. You have teams that can operate and manage an Observability Pipelines Worker aggregator independently for their use case. For example, your Data Science team may be responsible for operating their own infrastructure and has the means to independently operate their own Observability Pipelines Worker aggregator.

Multiple cloud accounts

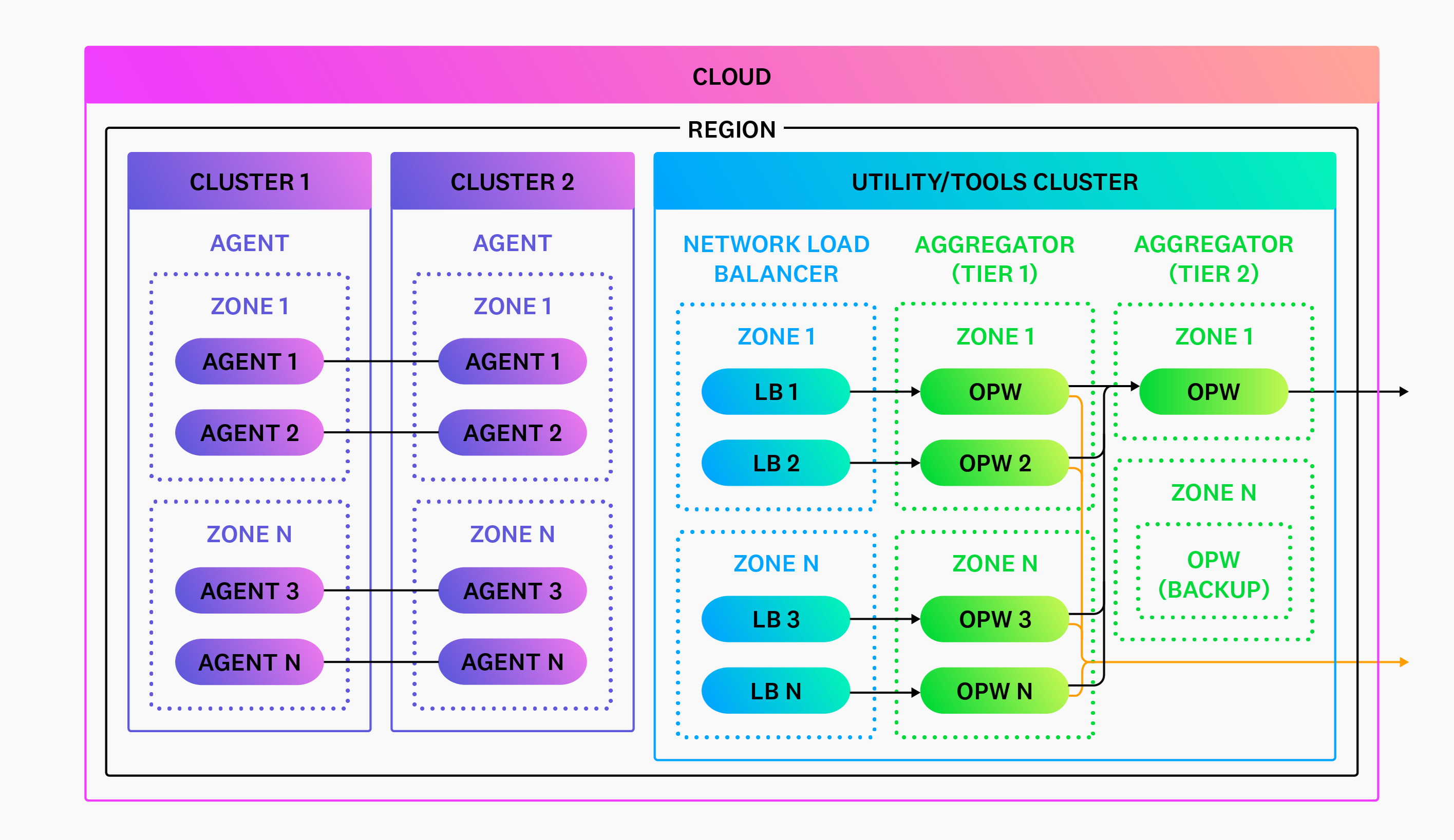

Many users have multiple cloud accounts with VPCs and clusters inside. In this case, Datadog still recommends deploying one Observability Pipelines Worker aggregator per region. Deploy Observability Pipelines Worker into your utility or tools cluster and configure all your cloud accounts to send data to this cluster. See Networking for more information.

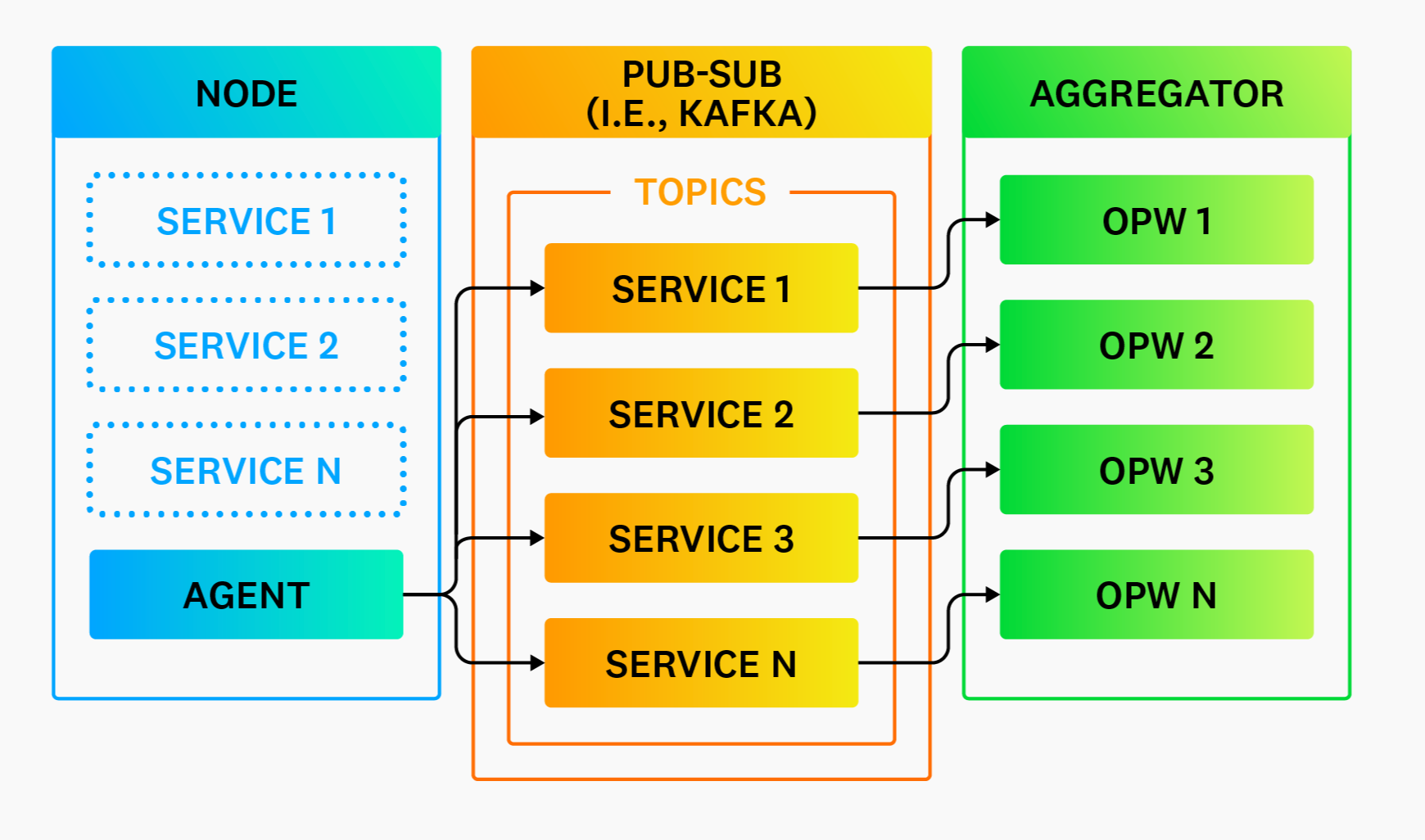

Pub-sub systems

A publish-subscribe (pub-sub) system such as Kafka is not required to make your architecture highly available or highly durable (see High Availability and Disaster Recovery), but pub-sub systems do offer the following advantages:

Improved reliability. Pub-sub systems are designed to be highly reliable and durable systems that change infrequently. They are especially reliable if you are using a managed option. The Observability Pipelines Worker is likely to change more often because of its purpose. Isolate Observability Pipelines Worker downtime behind a pub-sub system to increase availability as perceived by your clients and to make recovery simpler.

Load balancer not required. Pub-sub systems eliminate the need for a load balancer. Your pub-sub system handles the coordination of consumers, making it easy to scale Observability Pipelines Worker horizontally.

Pub-sub partitioning

Partitioning, or “topics” in Kafka terminology, refers to separating data in your pub-sub systems. You should partition along data origin lines, such as the service or host that generated the data.
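As a minimal sketch of partitioning along data origin lines, the following Python derives a stable partition index from the service name. The function names, the `service` field, and the partition count are hypothetical and chosen for illustration; whether the key selects a topic or a partition within a topic depends on your pub-sub system.

```python
import zlib


def partition_for(origin: str, num_partitions: int) -> int:
    """Map a data origin (service or host name) to a stable partition index."""
    # crc32 is deterministic across processes, unlike Python's built-in hash().
    return zlib.crc32(origin.encode("utf-8")) % num_partitions


def assign(events, num_partitions):
    """Group events by the partition derived from their originating service."""
    partitions = {}
    for event in events:
        index = partition_for(event["service"], num_partitions)
        partitions.setdefault(index, []).append(event)
    return partitions


# Hypothetical events; only the "service" field is used as the partition key.
events = [
    {"service": "checkout", "message": "order placed"},
    {"service": "search", "message": "query served"},
    {"service": "checkout", "message": "payment captured"},
]
by_partition = assign(events, num_partitions=8)
```

Because the key is the origin, every event from the same service lands in the same partition, so a single consumer sees that service's data in order.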

Pub-sub configuration

When using a pub-sub system, Datadog recommends the following configuration changes for Observability Pipelines Worker:

- Enable end-to-end acknowledgments for all sinks. This setting ensures that the pub-sub checkpoint is not advanced until data is successfully written.

- Use memory buffers. There is no need to use Observability Pipelines Worker’s disk buffers when it sits behind a pub-sub system. Your pub-sub system is designed for long-term buffering with high durability. Observability Pipelines Worker should only be responsible for reading, processing, and routing the data (not durability).
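The effect of end-to-end acknowledgments can be illustrated with a small simulation. This is a sketch of the idea only, not the Observability Pipelines Worker implementation: `InMemoryLog`, `drain`, and the `write` callback are hypothetical stand-ins for a pub-sub partition, the worker, and a sink.

```python
class InMemoryLog:
    """Stand-in for a pub-sub partition: records plus a committed checkpoint."""

    def __init__(self, records):
        self.records = list(records)
        self.committed = 0  # index of the next record to deliver

    def poll(self):
        """Return (offset, record) for the next uncommitted record, or None."""
        if self.committed < len(self.records):
            return self.committed, self.records[self.committed]
        return None

    def commit(self, offset):
        """Advance the checkpoint past the given offset."""
        self.committed = offset + 1


def drain(log, write, max_attempts=10):
    """Deliver records in order, committing only after write() succeeds."""
    attempts = 0
    while attempts < max_attempts:
        item = log.poll()
        if item is None:
            break
        offset, record = item
        attempts += 1
        try:
            write(record)
        except IOError:
            # No commit: the same record is polled again on the next pass.
            continue
        log.commit(offset)
    return log.committed
```

Because the checkpoint only moves after a successful write, a failed or restarted worker resumes from the last acknowledged record. The pub-sub system holds the data in the meantime, which is why an in-memory buffer in the worker is sufficient.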

Global aggregation

This section provides recommendations for performing global calculations for legacy destinations. Modern destinations already support global calculations. For example, Datadog supports distributions (such as DDSketch) that allow global observations to be computed from your metrics data.

Global aggregation refers to the ability to aggregate data for an entire region, for example, computing global quantiles for CPU load averages. To achieve this, a single Observability Pipelines Worker instance must have access to every node’s CPU load average statistics. This is not possible with horizontal scaling alone, because each individual Observability Pipelines Worker instance only has access to a slice of the overall data. Therefore, aggregation should be tiered.

In the above diagram, the tier two aggregators receive an aggregated sub-stream of the overall data from the tier one aggregators. This allows a single instance to get a global view without processing the entire stream and introducing a single point of failure.
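Tiered aggregation can be sketched in Python with mergeable histograms. This is an illustration under stated assumptions, not how Observability Pipelines Worker computes aggregates: the fixed `BUCKET_WIDTH` and all function names are hypothetical, and the quantile is only accurate to within one bucket.

```python
from collections import Counter

BUCKET_WIDTH = 0.5  # hypothetical bucket size for CPU load averages


def local_histogram(values):
    """Tier one: reduce raw observations to bucket counts."""
    return Counter(int(v // BUCKET_WIDTH) for v in values)


def merge(histograms):
    """Tier two: sum bucket counts received from every tier-one aggregator."""
    total = Counter()
    for histogram in histograms:
        total.update(histogram)
    return total


def quantile(histogram, q):
    """Return the upper bound of the bucket that contains the q-th quantile."""
    count = sum(histogram.values())
    target = q * count
    seen = 0
    for bucket in sorted(histogram):
        seen += histogram[bucket]
        if seen >= target:
            return (bucket + 1) * BUCKET_WIDTH
    raise ValueError("empty histogram")


# Each tier-one aggregator sees only its own slice of the load averages.
slice_a = [0.2, 0.4, 1.1]
slice_b = [2.6, 0.3, 3.9]
global_view = merge([local_histogram(slice_a), local_histogram(slice_b)])
median_upper_bound = quantile(global_view, 0.5)
```

The tier-two instance receives only bucket counts, not raw events, yet the merged histogram is identical to one built from the full stream. That is the property that lets a single instance hold a global view without processing all of the data.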

Recommendations

- Limit global aggregation to tasks that can reduce data, such as computing global histograms. Never send all data to your global aggregators.

- Continue to use your local aggregators to process and deliver most data so that you do not introduce a single point of failure.