(LEGACY) Observability Pipelines Documentation


Upgrading OP Workers from version 1.8 or below to version 2.0 or above breaks your existing pipelines. To keep using OP Worker 1.8 or below, do not upgrade your OP Workers. To use OP Worker 2.0 or above, you must migrate your pipelines from OP Worker 1.8 or earlier to OP Worker 2.x.

Datadog recommends that you update to OP Worker version 2.0 or above. Upgrading to a major OP Worker version and keeping it updated is the only supported way to get the latest OP Worker functionality, fixes, and security updates.

The following documents are for the Observability Pipelines Worker 1.8 and older.

- Deployment

- Working with Data

- Monitoring

- Reference: Configurations

- Guides

- Architecture

Legacy Observability Pipelines

Overview

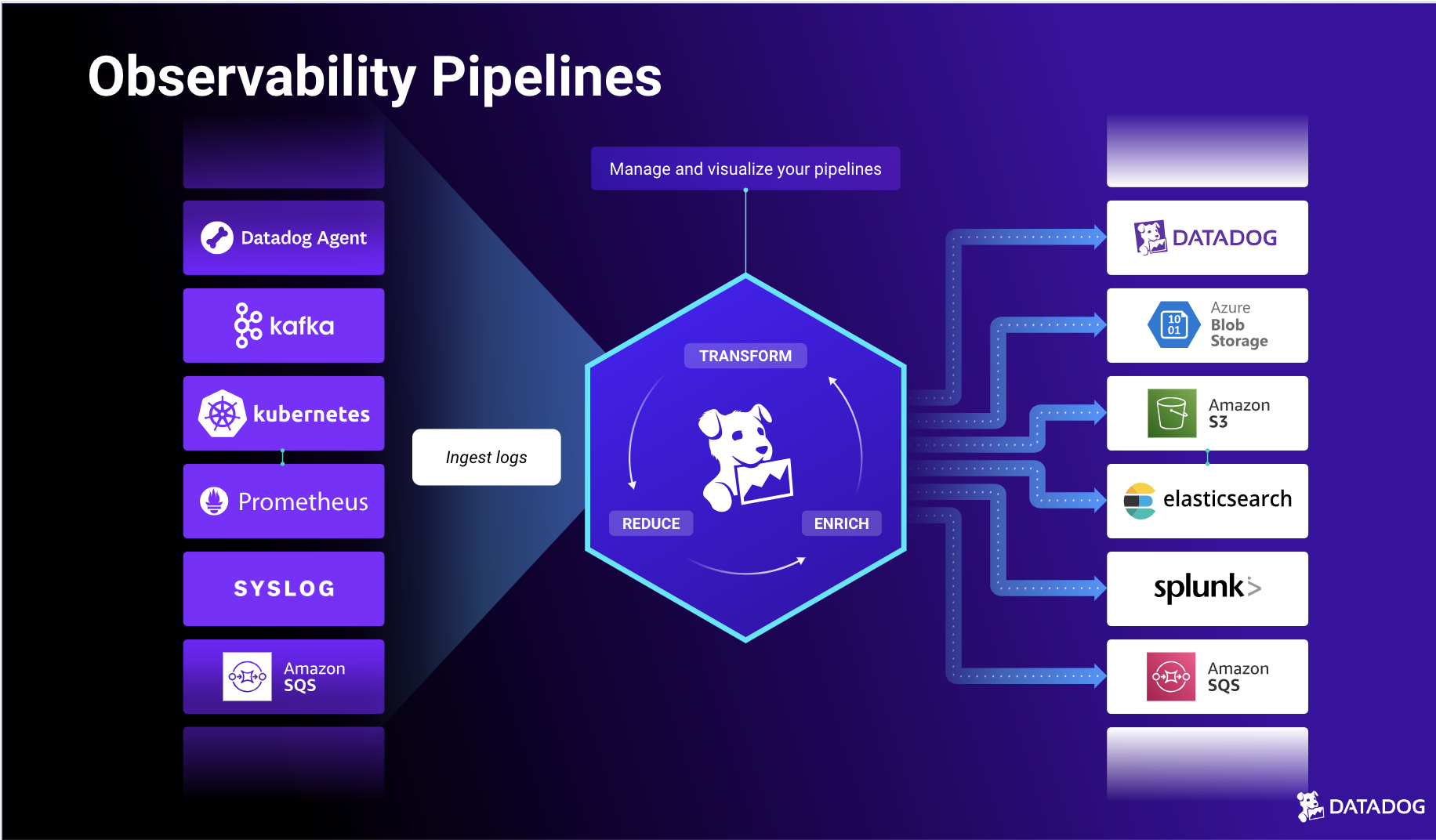

Observability Pipelines allows you to collect, process, and route logs from any source to any destination in infrastructure that you own or manage.

With Observability Pipelines, you can:

- Control your data volume before routing to manage costs.

- Route data anywhere to reduce vendor lock-in and simplify migrations.

- Transform logs by adding, parsing, enriching, and removing fields and tags.

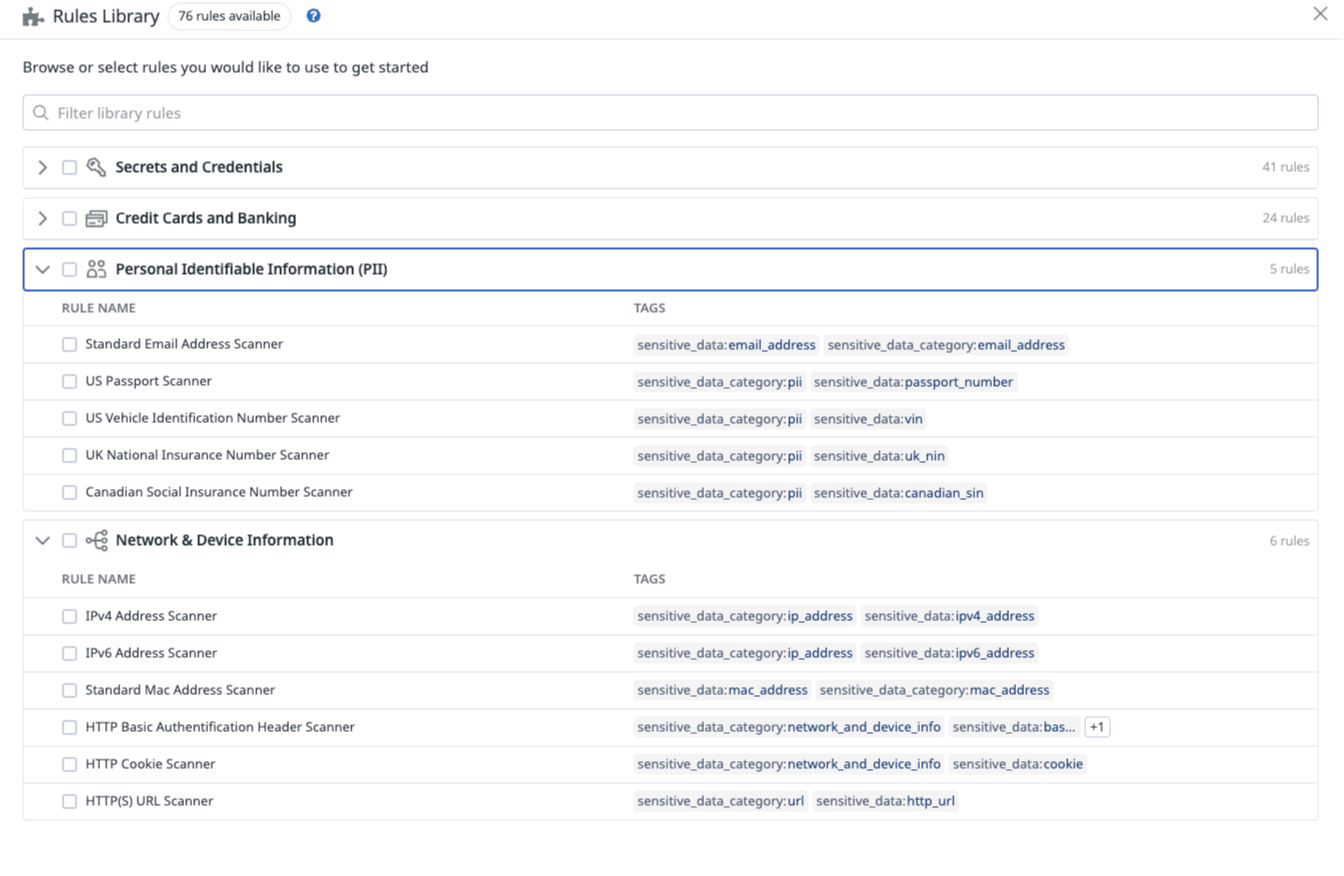

- Redact sensitive data from your telemetry data.

The Observability Pipelines Worker is the software that runs in your infrastructure. It aggregates, centrally processes, and routes your data. More specifically, the Worker can:

- Receive or pull all your observability data collected by your agents, collectors, or forwarders.

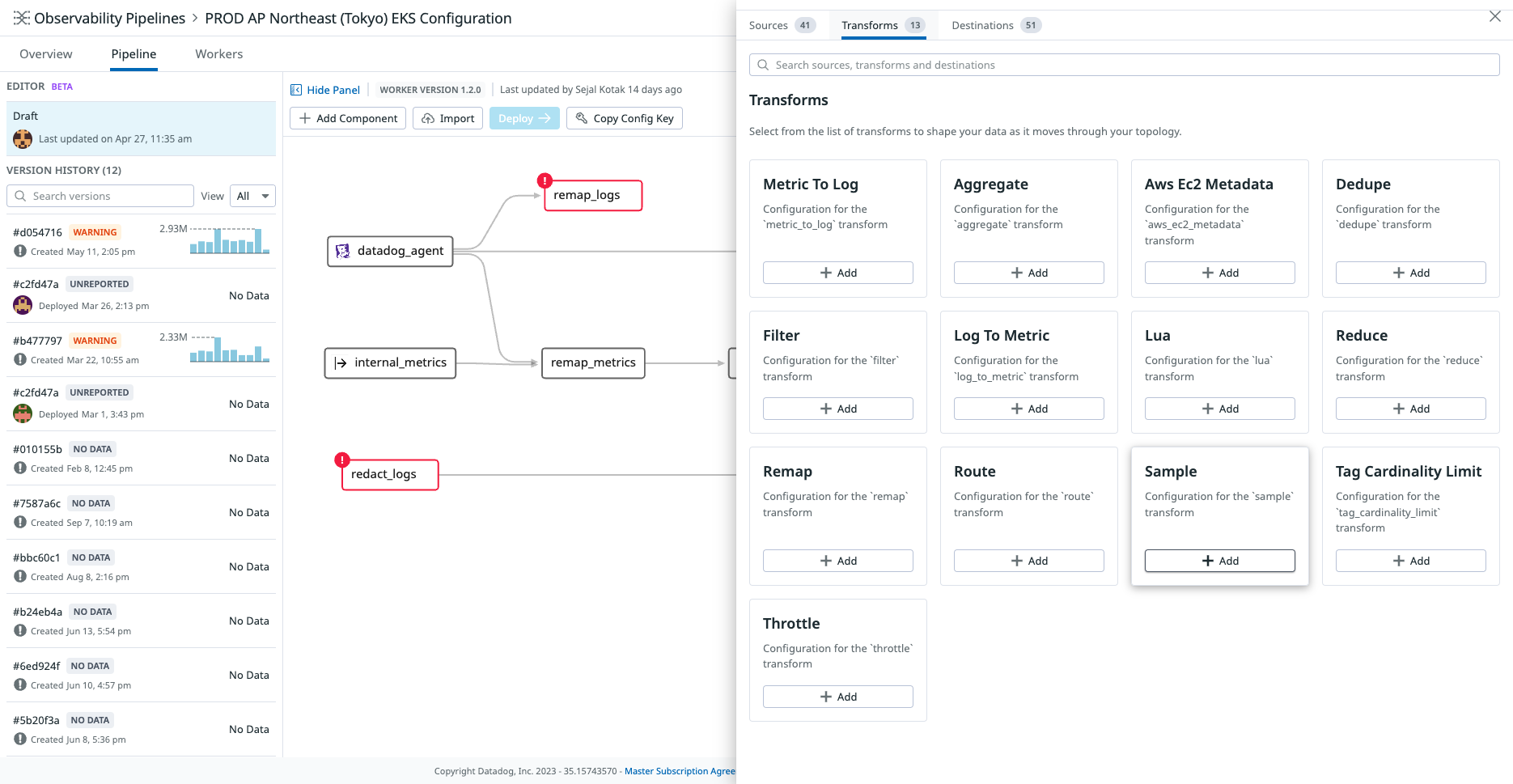

- Transform ingested data (for example: parse, filter, sample, enrich, and more).

- Route the processed data to any destination.
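The receive, transform, and route stages listed above can be pictured with a minimal Python sketch. This is an illustration of the general pattern only, not the Worker's implementation or configuration format. The function names, the `level` and `env` fields, and the `archive` and `alerts` destinations are all hypothetical.

```python
import json
from typing import Callable, Dict, Iterable, Iterator, List

Event = dict  # hypothetical event shape: a flat dictionary of log fields


def parse(lines: Iterable[str]) -> Iterator[Event]:
    """Receive stage: turn raw lines into events, keeping non-JSON lines as plain messages."""
    for line in lines:
        try:
            event = json.loads(line)
        except json.JSONDecodeError:
            event = {"message": line}
        if not isinstance(event, dict):
            event = {"message": line}
        yield event


def drop_debug(events: Iterable[Event]) -> Iterator[Event]:
    """Transform stage (filter): discard debug-level events."""
    for event in events:
        if event.get("level") != "debug":
            yield event


def enrich(events: Iterable[Event], tags: Dict[str, str]) -> Iterator[Event]:
    """Transform stage (enrich): add static tags to every event."""
    for event in events:
        yield {**event, **tags}


def route(
    events: Iterable[Event],
    destinations: Dict[str, Callable[[Event], bool]],
) -> Dict[str, List[Event]]:
    """Route stage: copy each event to every destination whose predicate accepts it."""
    delivered: Dict[str, List[Event]] = {name: [] for name in destinations}
    for event in events:
        for name, accepts in destinations.items():
            if accepts(event):
                delivered[name].append(event)
    return delivered


def run_pipeline(lines: Iterable[str]) -> Dict[str, List[Event]]:
    """Chain the stages: receive -> transform -> route."""
    events = enrich(drop_debug(parse(lines)), {"env": "prod"})
    return route(
        events,
        {
            "archive": lambda e: True,                      # everything that survives filtering
            "alerts": lambda e: e.get("level") == "error",  # only errors
        },
    )
```

Because each stage is a generator, events flow through one at a time, and a single event can reach more than one destination.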

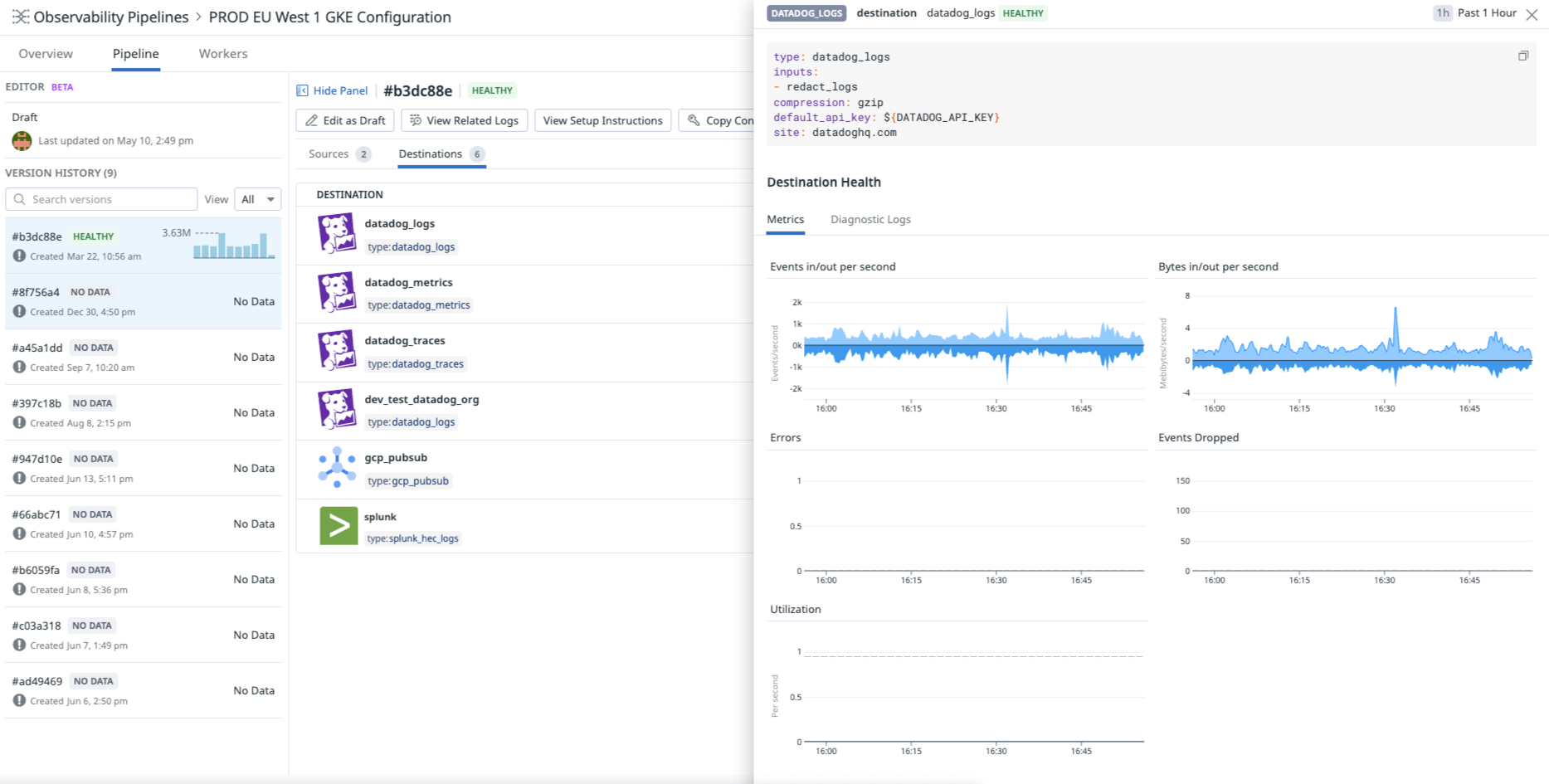

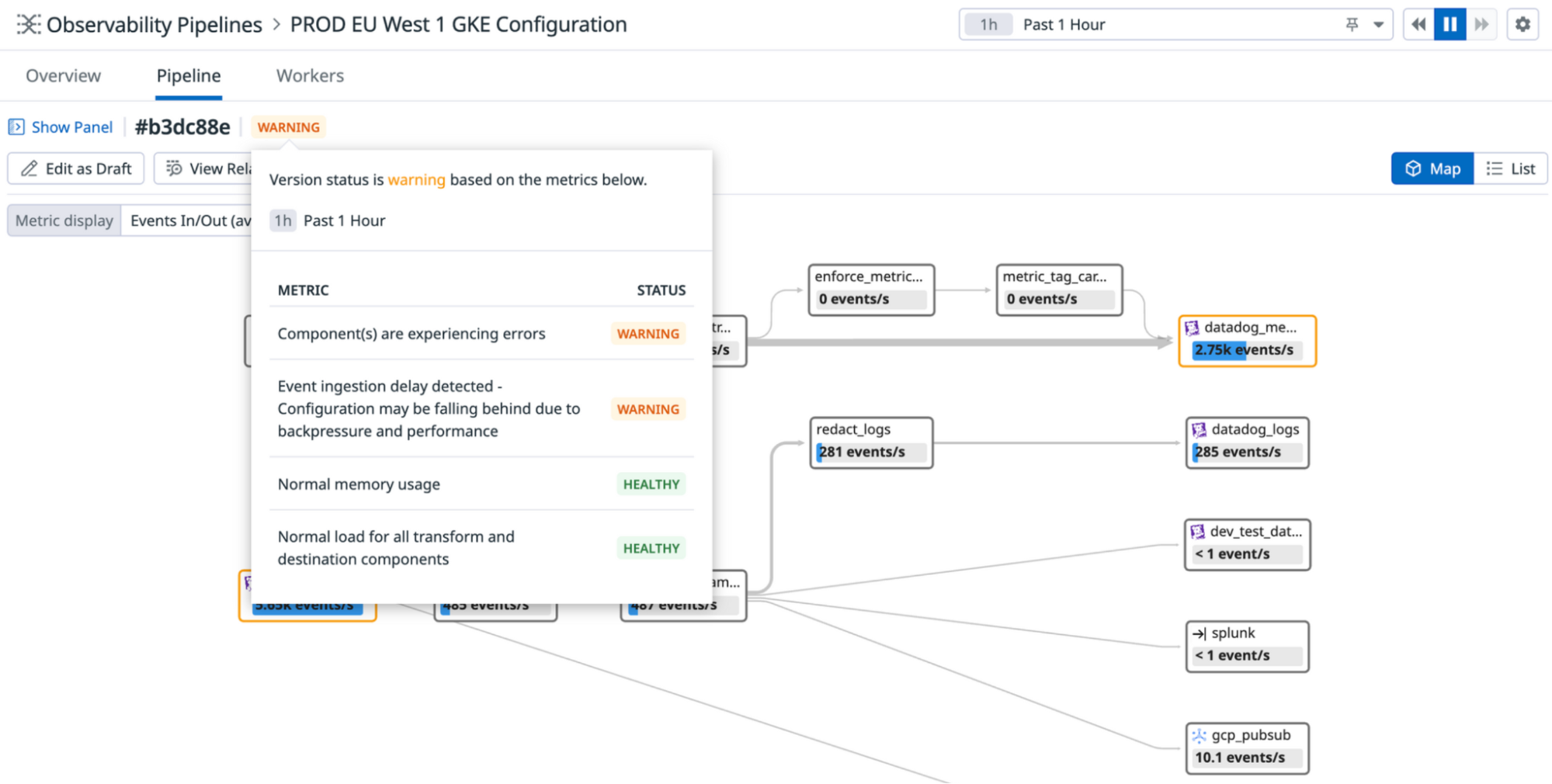

The Datadog UI provides a control plane to manage your Observability Pipelines Workers. You can monitor your pipelines to understand their health, identify bottlenecks and latencies, fine-tune performance, validate data delivery, and investigate your largest volume contributors. You can also build or edit pipelines, for example to route a subset of data to a new destination or to introduce a new sensitive data redaction rule, and roll out these changes to your active pipelines from the Datadog UI.

Get started

- Set up the Observability Pipelines Worker.

- Create pipelines to collect, transform and route your data.

- Discover how to deploy Observability Pipelines at production scale:

  - See Deployment Design and Principles for information on what to consider when designing your Observability Pipelines architecture.

  - See Best Practices for OP Worker Aggregator Architecture.

Explore Observability Pipelines

Start getting insights into your Observability Pipelines:

Collect data from any source and route data to any destination

Collect data from any source and route it to any destination to reduce vendor lock-in and simplify migrations.

Control your data volume before it gets routed

Optimize volume and reduce the size of your observability data by sampling, filtering, deduplicating, and aggregating your logs.
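As a rough illustration of how filtering, deduplicating, and sampling combine to reduce volume, consider the sketch below. It is not the Worker's implementation. The `level` and `message` fields, the rule that errors and warnings bypass sampling, and the hash-based sampling scheme are all assumptions made for the example.

```python
import hashlib
from typing import Iterable, Iterator


def keep_sampled(key: str, rate: float) -> bool:
    """Deterministic sampling: map the key to a bucket in [0, 1) and keep it if below the rate."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") / 2**32
    return bucket < rate


def reduce_volume(events: Iterable[dict], sample_rate: float = 0.1) -> Iterator[dict]:
    """Filter, deduplicate, then sample a stream of log events."""
    seen = set()  # grows without bound; a real deployment would cap or expire it
    for event in events:
        level = event.get("level", "info")
        message = event.get("message", "")

        # Filter: drop debug logs entirely.
        if level == "debug":
            continue

        # Deduplicate: skip events whose (level, message) pair was already seen.
        fingerprint = (level, message)
        if fingerprint in seen:
            continue
        seen.add(fingerprint)

        # Sample: always keep errors and warnings, keep a fraction of the rest.
        if level in ("error", "warn") or keep_sampled(message, sample_rate):
            yield event
```

Hashing the message instead of drawing a random number makes the sampling decision repeatable, so the same log line is kept or dropped consistently across runs.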

Redact sensitive data from your telemetry data

Redact sensitive data before it is routed outside of your infrastructure, using out-of-the-box patterns to scan for PII, PCI, private keys, and more.
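Pattern-based redaction of this kind can be sketched with regular expressions. The patterns below are simplified, hypothetical stand-ins written for this example. They are not the out-of-the-box patterns that Observability Pipelines ships with, and they would produce both false positives and false negatives on real data.

```python
import re

# Hypothetical, simplified patterns for illustration only.
PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    # 13 to 19 digits, optionally separated by single spaces or hyphens.
    "card_number": re.compile(r"\b(?:\d[ -]?){12,18}\d\b"),
    "private_key": re.compile(
        r"-----BEGIN [A-Z ]*PRIVATE KEY-----.*?-----END [A-Z ]*PRIVATE KEY-----",
        re.DOTALL,
    ),
}


def redact(text: str) -> str:
    """Replace every match of every pattern with a labeled placeholder."""
    for name, pattern in PATTERNS.items():
        text = pattern.sub(f"[REDACTED:{name}]", text)
    return text


if __name__ == "__main__":
    print(redact("user alice@example.com paid with 4111 1111 1111 1111"))
    # prints: user [REDACTED:email] paid with [REDACTED:card_number]
```

Labeling the placeholder with the pattern name keeps the redacted log useful for debugging without exposing the original value.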

Monitor the health of your pipelines

Get a holistic view of all of your pipelines’ topologies and monitor key performance indicators, such as average load, error rate, and throughput for each of your flows.
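To make indicators such as error rate and throughput concrete, the sketch below derives them from per-flow counters. The `FlowStats` fields, the flow names, and the 1% threshold are hypothetical choices for this example and do not reflect the metrics the Worker actually reports.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class FlowStats:
    """Hypothetical counters collected for one flow over a time window."""
    events_in: int
    events_out: int
    errors: int
    window_seconds: float

    @property
    def throughput(self) -> float:
        """Events delivered per second over the window."""
        return self.events_out / self.window_seconds

    @property
    def error_rate(self) -> float:
        """Fraction of received events that resulted in an error."""
        return self.errors / self.events_in if self.events_in else 0.0


def flag_unhealthy(flows: Dict[str, FlowStats], max_error_rate: float = 0.01) -> List[str]:
    """Return the names of flows whose error rate exceeds the threshold, sorted by name."""
    return sorted(name for name, stats in flows.items() if stats.error_rate > max_error_rate)
```

For example, a flow that received 200 events with 50 errors has an error rate of 0.25 and would be flagged under the default threshold.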