
Collect Google Cloud Logs with a Pub/Sub Push Subscription



This page describes a deprecated feature and documents the configuration of legacy Pub/Sub Push subscriptions. Pub/Sub Push subscriptions are being deprecated for the following reasons:

- For Google Cloud VPC, new Push subscriptions cannot be configured with external endpoints (see Google Cloud’s Supported products and limitations page for more information)

- The Push subscription does not provide compression or batching of events

Documentation for the Push subscription is only maintained for troubleshooting or modifying legacy setups.

Use a Pull subscription with the Datadog Dataflow template to forward your Google Cloud logs to Datadog instead. See Log collection on the Google Cloud integration page for instructions.

Overview

This guide describes how to forward logs from your Google Cloud services to Datadog through a Push subscription to a Google Cloud Pub/Sub topic.

To collect logs from applications running in GCE or GKE, you can also use the Datadog Agent.

Note: If you have a Google Cloud VPC in your Google Cloud environment, the Push subscription cannot access endpoints outside the VPC.

Setup

Prerequisites

The Google Cloud Platform integration is successfully installed.

Create a Cloud Pub/Sub topic

Go to the Cloud Pub/Sub console and create a new topic.

Give that topic an explicit name, such as export-logs-to-datadog, and click Create.
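If you script this step instead of using the console, the topic ID has to satisfy Pub/Sub's resource naming rules. The Python sketch below checks an ID against those rules as we understand them from Google Cloud's resource-naming documentation (3 to 255 characters, starting with a letter, not starting with goog) and builds the fully qualified projects/PROJECT/topics/TOPIC name that the Pub/Sub API expects. The helper names and the project ID my-project are hypothetical.

```python
import re

# Pub/Sub topic ID rules (paraphrased from Google Cloud's naming docs):
# 3-255 characters, must start with a letter, may contain letters, digits,
# dashes, periods, underscores, tildes, percent signs and plus signs,
# and must not start with "goog".
_TOPIC_ID_RE = re.compile(r"[A-Za-z][A-Za-z0-9\-._~%+]{2,254}")


def is_valid_topic_id(topic_id: str) -> bool:
    """Return True if topic_id satisfies the naming rules above."""
    if topic_id.startswith("goog"):
        return False
    return _TOPIC_ID_RE.fullmatch(topic_id) is not None


def topic_path(project_id: str, topic_id: str) -> str:
    """Build the fully qualified topic name used by the Pub/Sub API."""
    if not is_valid_topic_id(topic_id):
        raise ValueError(f"invalid Pub/Sub topic ID: {topic_id!r}")
    return f"projects/{project_id}/topics/{topic_id}"


if __name__ == "__main__":
    # "my-project" is a hypothetical project ID.
    print(topic_path("my-project", "export-logs-to-datadog"))
```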

Warning: Pub/Sub topics are subject to Google Cloud quotas and limitations. If your log volume exceeds those limits, Datadog recommends splitting your logs over several topics. See the Monitor the log forwarding section for information on setting up monitor notifications when you approach those limits.
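As a rough way to decide how many topics to use, divide your peak log throughput by the per-topic limit that applies to your project, keeping some headroom. The sketch below does that arithmetic. The limit is a parameter you look up in your own Google Cloud quotas (no real quota value is assumed here), both rates must be in the same unit, and the 80% headroom default and the function name are illustrative choices.

```python
import math


def topics_needed(peak_rate: float, per_topic_limit: float,
                  headroom: float = 0.8) -> int:
    """Estimate how many Pub/Sub topics to split logs across.

    peak_rate and per_topic_limit must use the same unit (for example
    messages per second). headroom keeps each topic below the limit:
    0.8 targets at most 80% of it.
    """
    if peak_rate < 0 or per_topic_limit <= 0:
        raise ValueError("peak_rate must be >= 0 and per_topic_limit > 0")
    if not 0 < headroom <= 1:
        raise ValueError("headroom must be in (0, 1]")
    usable_per_topic = per_topic_limit * headroom
    # Always at least one topic, even with no traffic.
    return max(1, math.ceil(peak_rate / usable_per_topic))


if __name__ == "__main__":
    # Hypothetical numbers: 25,000 messages/s peak, 10,000 messages/s per topic.
    print(topics_needed(25_000, 10_000))
```

With those hypothetical numbers, 80% headroom leaves 8,000 messages per second per topic, and 25,000 / 8,000 = 3.125 rounds up to four topics.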

Forward logs to Datadog with a Cloud Pub/Sub subscription

- In the Cloud Pub/Sub console, select Subscriptions in the left-hand navigation, then click Create Subscription.

- Create a subscription ID and select the topic you previously created.

- Select the Push method and enter the following endpoint URL, replacing <DATADOG_API_KEY> with the value of a valid Datadog API key:

https://gcp-intake.logs.

Note: Ensure that the Datadog site selector on the right of the page is set to your Datadog site before copying the URL above.

- Configure any additional options, such as Subscription expiration, Acknowledgment deadline, Message retention duration, or Dead lettering.

- Under Retry policy, select Retry after exponential backoff delay.

- Click Create at the bottom.
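The console steps above can also be expressed as a Subscription resource for the Pub/Sub REST API (v1), sent with PUT to https://pubsub.googleapis.com/v1/projects/PROJECT/subscriptions/SUBSCRIPTION. The sketch below only builds that request body. It assumes you pass in the full Datadog intake URL for your site (with your API key) as push_endpoint rather than hard-coding it, and it uses Pub/Sub's default ack deadline of 10 seconds and default backoff bounds of 10 and 600 seconds. The helper name and placeholder values are hypothetical.

```python
import json


def push_subscription_body(project_id: str, topic_id: str, push_endpoint: str,
                           ack_deadline_seconds: int = 10,
                           min_backoff_s: int = 10,
                           max_backoff_s: int = 600) -> dict:
    """Build a Subscription resource body for the Pub/Sub REST API (v1).

    push_endpoint is the Datadog intake URL for your site with your API key
    substituted; it is passed in, not hard-coded.
    """
    if not 10 <= ack_deadline_seconds <= 600:
        raise ValueError("ackDeadlineSeconds must be between 10 and 600")
    if not 0 <= min_backoff_s <= max_backoff_s <= 600:
        raise ValueError("backoff bounds must satisfy 0 <= min <= max <= 600")
    return {
        "topic": f"projects/{project_id}/topics/{topic_id}",
        "pushConfig": {"pushEndpoint": push_endpoint},
        "ackDeadlineSeconds": ack_deadline_seconds,
        # Setting retryPolicy corresponds to "Retry after exponential
        # backoff delay" in the console; durations are strings in seconds.
        "retryPolicy": {
            "minimumBackoff": f"{min_backoff_s}s",
            "maximumBackoff": f"{max_backoff_s}s",
        },
    }


if __name__ == "__main__":
    body = push_subscription_body(
        "my-project",                    # hypothetical project ID
        "export-logs-to-datadog",
        "https://example.invalid/push",  # placeholder, not the real intake URL
    )
    print(json.dumps(body, indent=2))
```

Because the real push endpoint contains your Datadog API key, avoid printing or committing the body once it holds the actual URL.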

The Pub/Sub subscription is now ready to receive logs from Google Cloud Logging and forward them to Datadog.

Export logs from Google Cloud

Go to the Google Cloud Logs Explorer page and filter the logs that need to be exported.

From the Log Router tab, select Create Sink.

Provide a name for the sink.

Choose Cloud Pub/Sub as the destination and select the Pub/Sub topic you created for this purpose. Note: The topic can be located in a different project.

Click Create Sink and wait for the confirmation message to appear.

Note: You can create several exports from Google Cloud Logging to the same Pub/Sub topic by using different sinks.
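A sink created through the Cloud Logging API (v2) is described by a LogSink resource whose Pub/Sub destination has the form pubsub.googleapis.com/projects/PROJECT/topics/TOPIC. The sketch below builds two such bodies with different filters that target the same topic; the sink names, project ID, and filters are hypothetical. When the topic lives in another project, the sink's writer identity also needs permission to publish to it (for example the Pub/Sub Publisher role), which this sketch does not handle.

```python
def pubsub_sink_body(sink_name: str, topic_project_id: str, topic_id: str,
                     log_filter: str) -> dict:
    """Build a LogSink resource body (Cloud Logging API v2) for a Pub/Sub topic.

    topic_project_id may differ from the project that owns the sink, which
    is how a sink exports to a topic in another project.
    """
    return {
        "name": sink_name,
        "destination": (
            f"pubsub.googleapis.com/projects/{topic_project_id}/topics/{topic_id}"
        ),
        "filter": log_filter,
    }


if __name__ == "__main__":
    # Two hypothetical sinks with different filters, exporting to one topic.
    sinks = [
        pubsub_sink_body("datadog-gce", "my-project", "export-logs-to-datadog",
                         'resource.type="gce_instance"'),
        pubsub_sink_body("datadog-gke", "my-project", "export-logs-to-datadog",
                         'resource.type="k8s_container"'),
    ]
    for sink in sinks:
        print(sink["name"], "->", sink["destination"])
```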

Monitor the log forwarding

Pub/Sub topics are subject to Google Cloud quotas and limitations. If your log volume exceeds those limits, Datadog recommends splitting your logs over several topics, using different filters.
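One way to split logs across topics without duplicating or dropping entries is to give each topic's sink a filter on a different resource.type, plus a catch-all filter that excludes all of them. The sketch below generates such a set of Logging query filters; the resource types in the example and the catch_all key are hypothetical, and grouping by resource.type is only one possible way to split.

```python
def split_filters(resource_types: list[str]) -> dict[str, str]:
    """Return one Logging query per topic so each entry matches exactly one.

    Each listed resource type gets its own filter, and a final "catch_all"
    filter matches every entry not covered by the others.
    """
    if not resource_types:
        raise ValueError("need at least one resource type")
    clauses = [f'resource.type="{rt}"' for rt in resource_types]
    filters = dict(zip(resource_types, clauses))
    # Boolean operators in the Logging query language must be uppercase.
    filters["catch_all"] = "NOT (" + " OR ".join(clauses) + ")"
    return filters


if __name__ == "__main__":
    for key, query in split_filters(["gce_instance", "k8s_container"]).items():
        print(f"{key}: {query}")
```

Each filter would then go on its own sink, with each sink pointing at a different topic.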

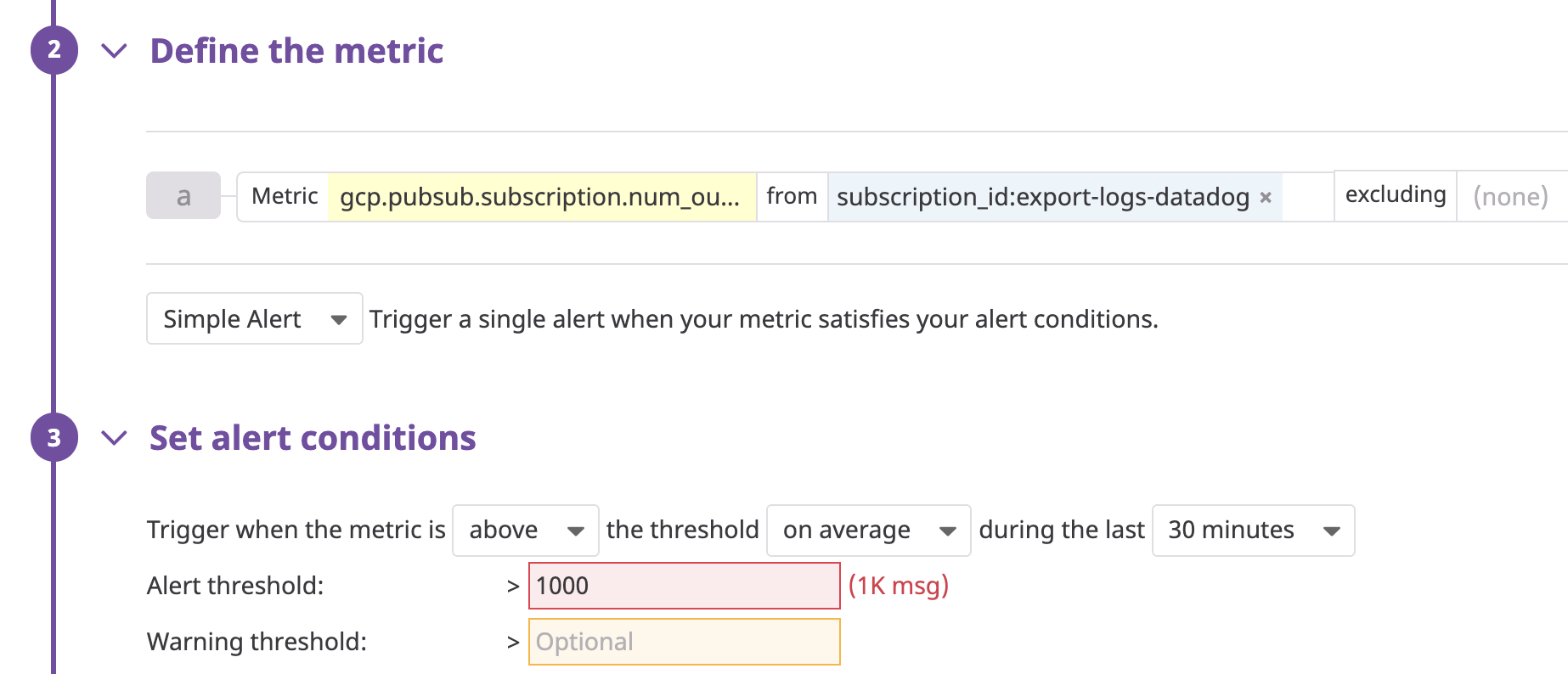

To be automatically notified when you reach this quota, activate the Pub/Sub metric integration and set up a monitor on the metric gcp.pubsub.subscription.num_outstanding_messages. Filter this monitor on the subscription that exports logs to Datadog to make sure it never goes above 1000, as per the below example:
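The same monitor can also be created through the Datadog Monitors API (v1) with a metric alert definition like the one this sketch builds. It assumes the Google Cloud integration tags the metric with subscription_id and uses a 5-minute max() window; check the tag names on the metric in your account before relying on the query. The function name and the subscription ID are hypothetical.

```python
import json


def outstanding_messages_monitor(subscription_id: str,
                                 threshold: int = 1000,
                                 window: str = "last_5m") -> dict:
    """Build a metric alert definition for the Datadog Monitors API (v1)."""
    # Assumption: the metric carries a subscription_id tag.
    scope = f"subscription_id:{subscription_id}"
    query = (
        f"max({window}):max:gcp.pubsub.subscription.num_outstanding_messages"
        f"{{{scope}}} > {threshold}"
    )
    return {
        "name": f"Pub/Sub backlog for {subscription_id} is above {threshold}",
        "type": "metric alert",
        "query": query,
        "message": "Log forwarding to Datadog is falling behind.",
        # The critical threshold must match the value used in the query.
        "options": {"thresholds": {"critical": threshold}},
    }


if __name__ == "__main__":
    print(json.dumps(outstanding_messages_monitor("datadog-logs-push"), indent=2))
```

Add your notification handles to the message before sending the definition.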

Sampling logs

You can optionally sample logs while querying by using the sample function. For example, to include only 10% of the logs, use sample(insertId, 0.1).
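To combine sampling with a filter you already use for the sink, append the sample() clause with AND. The sketch below does this, wrapping the existing filter in parentheses so any OR clauses inside it still bind correctly; the function name and example filter are hypothetical. Sampling keeps approximately, not exactly, the requested fraction of entries.

```python
def with_sampling(base_filter: str, fraction: float) -> str:
    """Append a sample(insertId, fraction) clause to a Cloud Logging query."""
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    clause = f"sample(insertId, {fraction})"
    if not base_filter.strip():
        # No existing filter: sampling alone selects the entries.
        return clause
    # Parenthesize the base filter so its OR/NOT clauses bind correctly.
    return f"({base_filter}) AND {clause}"


if __name__ == "__main__":
    print(with_sampling('resource.type="gce_instance"', 0.1))
```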