
How to investigate a log parsing issue


Integration Pipelines support the default log format for a given technology. If you have customized the log format, or written a custom parser that is not working, your logs might not be parsed properly. The guidelines below help you find the root cause of the issue and correct the parser.

Before troubleshooting your parser, read the Datadog log processors and parsing documentation, and the parsing best practices article.

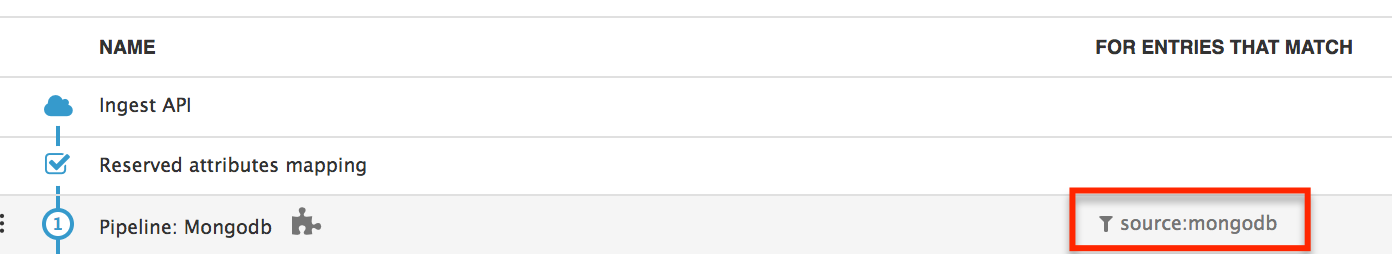

Identify your log's pipeline: Use the Pipeline filters to find the processing Pipeline that your log went through. Integration Pipelines use the source as their filter, so check that your log source is set correctly.

For an Integration Pipeline, clone it and troubleshoot on the clone.

Spot obvious differences, if any: In most cases, your parsers include examples or log samples. Compare your log with the sample to find simple differences such as a missing element, a different order, or extra elements. Also check the timestamp format, as it is often the culprit.

You can illustrate this with an Apache log. In your integration parser you have examples such as:

127.0.0.1 - frank [13/Jul/2016:10:55:36 +0000] "GET /apache_pb.gif HTTP/1.0" 200 2326

Say that your log is:

[13/Jul/2016:10:55:36 +0000] 127.0.0.1 - frank "GET /apache_pb.gif HTTP/1.0" 200 2326

The timestamp is not in the same place. Therefore, you need to change the parsing rule to reflect that difference.
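The comparison above can be sketched outside Datadog with ordinary regular expressions. This is only an illustration: `SAMPLE_LAYOUT` and `REORDERED_LAYOUT` are hypothetical Python approximations of the two field orders, not the Grok rules used by the integration pipeline.

```python
import re

# Hypothetical approximation of the sample layout:
# client, identity, user, [timestamp], "request", status, size.
SAMPLE_LAYOUT = re.compile(
    r'^(?P<client>\S+) (?P<ident>\S+) (?P<user>\S+) '
    r'\[(?P<timestamp>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<size>\d+)$'
)

# Same fields, but with the timestamp moved to the front of the line.
REORDERED_LAYOUT = re.compile(
    r'^\[(?P<timestamp>[^\]]+)\] '
    r'(?P<client>\S+) (?P<ident>\S+) (?P<user>\S+) '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<size>\d+)$'
)

sample = '127.0.0.1 - frank [13/Jul/2016:10:55:36 +0000] "GET /apache_pb.gif HTTP/1.0" 200 2326'
your_log = '[13/Jul/2016:10:55:36 +0000] 127.0.0.1 - frank "GET /apache_pb.gif HTTP/1.0" 200 2326'


def matches(layout: re.Pattern, line: str) -> bool:
    """Return True when the whole line fits the given layout."""
    return layout.match(line) is not None


print(matches(SAMPLE_LAYOUT, sample))       # the sample fits the original layout
print(matches(SAMPLE_LAYOUT, your_log))     # the customized log does not
print(matches(REORDERED_LAYOUT, your_log))  # it fits once the order is adjusted
```

The same idea applies to the actual parsing rule: the elements stay the same, only their order in the rule has to follow the order in the log.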

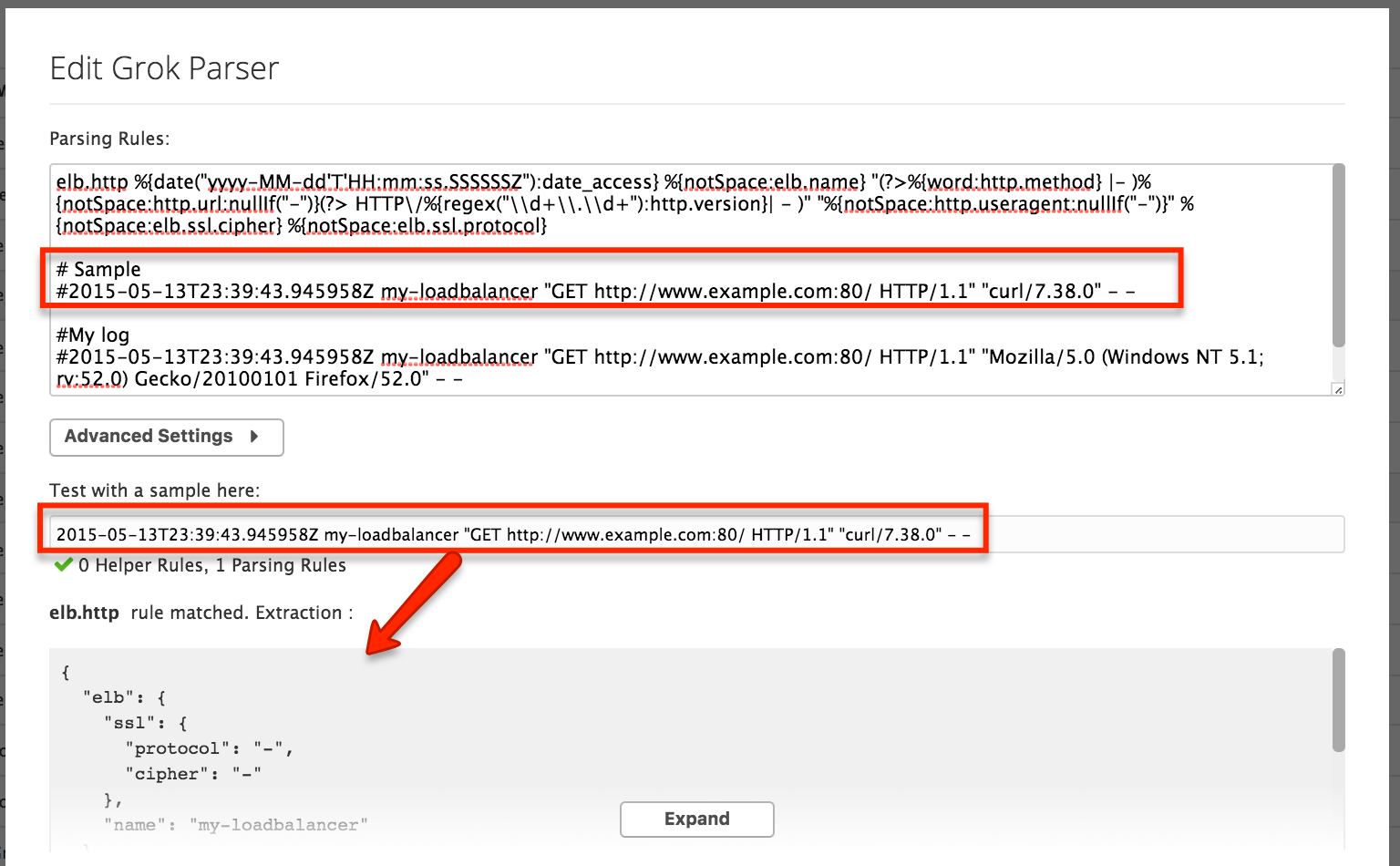

Find the culprit attribute: If there are no obvious differences in the format, start troubleshooting the parsing rule itself. Here is a shortened format of an ELB log to illustrate a real case.

The parsing rule is the following:

elb.http %{date("yyyy-MM-dd'T'HH:mm:ss.SSSSSSZ"):date_access} %{notSpace:elb.name} "(?>%{word:http.method} |- )%{notSpace:http.url:nullIf("-")}(?> HTTP\/%{regex("\\d+\\.\\d+"):http.version}| - )" "%{notSpace:http.useragent:nullIf("-")}" %{notSpace:elb.ssl.cipher} %{notSpace:elb.ssl.protocol}

The log to parse is:

2015-05-13T23:39:43.945958Z my-loadbalancer "GET http://www.example.com:80/ HTTP/1.1" "Mozilla/5.0 (Windows NT 5.1; rv:52.0) Gecko/20100101 Firefox/52.0" - -

Compared with the sample provided in the parser, there are no obvious differences, and the parser works fine for the sample:

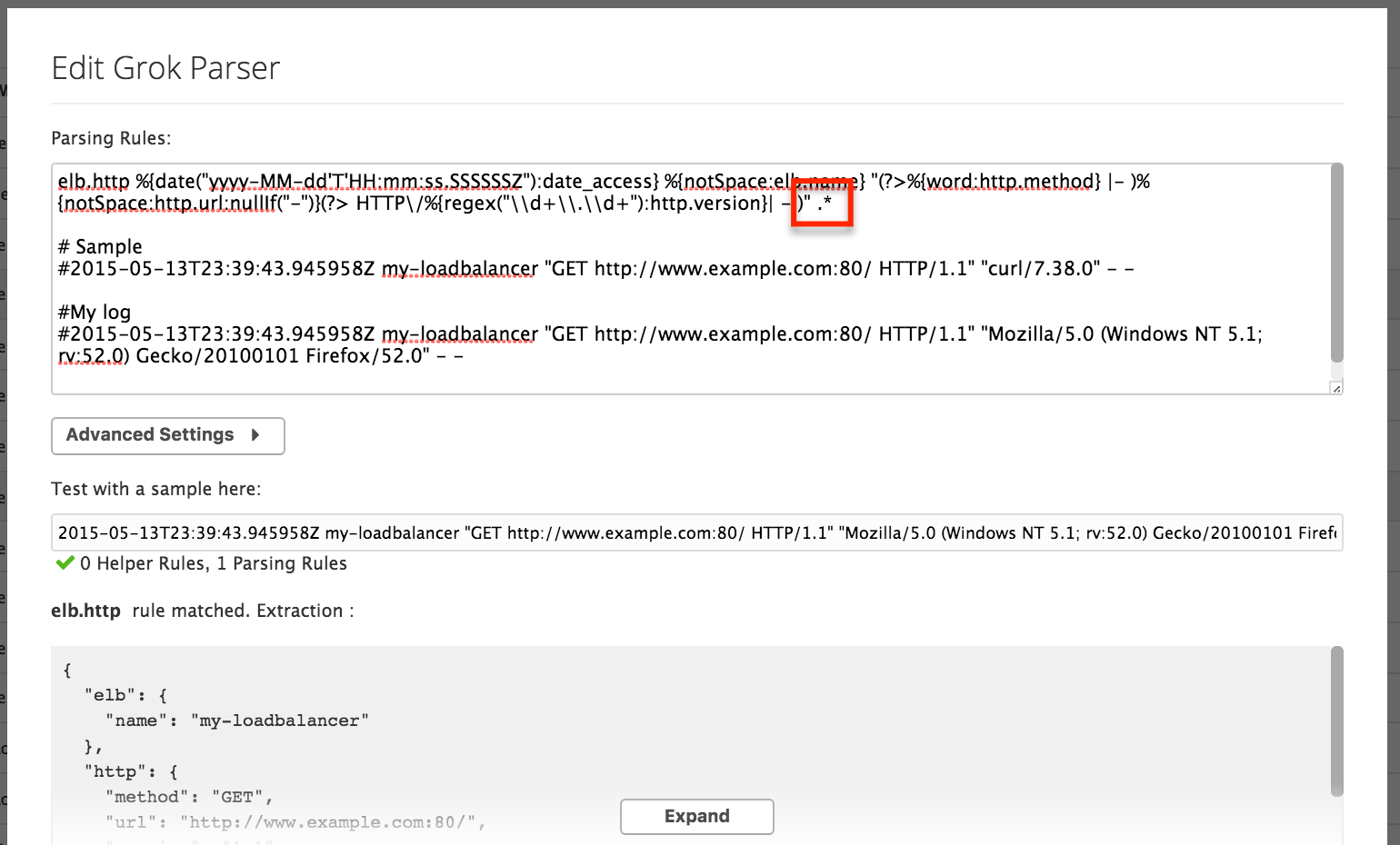

But when tested with the log, the rule does not work. The next step is to remove attributes one by one from the end of the rule until you find the culprit. To do so, add .* at the end of the rule and then remove the attributes.

In this example, the rule starts to work once you have removed everything up to the user agent. This means that the issue is in the user agent attribute.
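The elimination procedure can be sketched in Python. This is a rough model under stated assumptions: each entry in `ATTRIBUTES` is a hypothetical regular-expression stand-in for one attribute of the ELB rule (it is not Grok syntax), and `find_culprit` is an illustrative helper, not a Datadog function.

```python
import re

# Hypothetical Python approximations of the attributes in the ELB rule.
ATTRIBUTES = [
    ("date_access", r"\S+Z"),
    ("elb.name", r" \S+"),
    ("http.request", r' "(?:\w+ |- )\S+(?: HTTP/\d+\.\d+| - )"'),
    ("http.useragent", r' "\S+"'),  # mirrors the notSpace matcher
    ("elb.ssl.cipher", r" \S+"),
    ("elb.ssl.protocol", r" \S+"),
]

LOG = (
    '2015-05-13T23:39:43.945958Z my-loadbalancer '
    '"GET http://www.example.com:80/ HTTP/1.1" '
    '"Mozilla/5.0 (Windows NT 5.1; rv:52.0) Gecko/20100101 Firefox/52.0" - -'
)


def find_culprit(attributes, log):
    """Drop attributes from the end until the truncated rule plus '.*' matches.

    Returns the name of the first failing attribute, or None when the
    full rule already matches.
    """
    for kept in range(len(attributes), 0, -1):
        pattern = "".join(regex for _, regex in attributes[:kept]) + ".*"
        if re.fullmatch(pattern, log):
            # The attribute removed just before this success is the culprit.
            return attributes[kept][0] if kept < len(attributes) else None
    # Even the first attribute alone fails to match.
    return attributes[0][0]


print(find_culprit(ATTRIBUTES, LOG))
```

In the Datadog parser editor the same steps are done by hand: keep the trailing .* in place and delete one attribute at a time until the rule matches again.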

Fix the issue: After identifying the culprit attribute, look at it more closely.

The user agent in the log is:

- Mozilla/5.0 (Windows NT 5.1; rv:52.0) Gecko/20100101 Firefox/52.0

And the parsing rule uses:

%{notSpace:http.useragent:nullIf("-")}

The first thing to check is the matcher (as a reminder, a matcher describes what element the rule expects, such as integer, notSpace, or regex). Here, it expects notSpace. But the user agent contains spaces and even special characters. Therefore notSpace is not going to work here.

The matcher to use is: regex("[^\"]*")
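To see why the matcher matters, the two character classes can be compared in Python. This is a sketch under assumptions: `NOT_SPACE` and `NOT_QUOTE` are rough regular-expression analogues of notSpace and regex("[^\"]*"), and `extract` is a hypothetical helper that also imitates the effect of nullIf("-").

```python
import re
from typing import Optional, Tuple

USER_AGENT_FIELD = '"Mozilla/5.0 (Windows NT 5.1; rv:52.0) Gecko/20100101 Firefox/52.0"'

NOT_SPACE = re.compile(r'"(\S+)"')    # rough analogue of notSpace
NOT_QUOTE = re.compile(r'"([^"]*)"')  # same character class as regex("[^\"]*")


def extract(pattern: re.Pattern, field: str) -> Tuple[bool, Optional[str]]:
    """Return (matched, value); a value of "-" becomes None, like nullIf("-")."""
    match = pattern.fullmatch(field)
    if match is None:
        return (False, None)
    value = match.group(1)
    return (True, None if value == "-" else value)


print(extract(NOT_SPACE, USER_AGENT_FIELD))  # no match: the value contains spaces
print(extract(NOT_QUOTE, USER_AGENT_FIELD))  # match: everything up to the closing quote
print(extract(NOT_QUOTE, '"-"'))             # match, but the value is treated as empty
```

Matching "anything except a double quote" works here because the user agent is wrapped in double quotes in the log, so the closing quote marks the end of the value.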

In other situations, the rule might expect an "integer" whereas the values are doubles, in which case the matcher should be changed to "number".
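The integer versus number mismatch can be illustrated the same way. The patterns below are simplified, assumed approximations of the two matchers, and the value 0.123 is a made-up example rather than a field from the logs above.

```python
import re

# Simplified stand-ins for the "integer" and "number" matchers (assumptions).
INTEGER = re.compile(r"[-+]?\d+")
NUMBER = re.compile(r"[-+]?\d+(?:\.\d+)?")


def fits(pattern: re.Pattern, value: str) -> bool:
    """Return True when the whole value is accepted by the pattern."""
    return pattern.fullmatch(value) is not None


print(fits(INTEGER, "0.123"))  # a decimal value is rejected by the integer pattern
print(fits(NUMBER, "0.123"))   # the number pattern accepts it
print(fits(NUMBER, "42"))      # and still accepts whole numbers
```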

Ask for help: Datadog is always here to help you! If you did not manage to find the cause of the parsing error, contact the support team.