
Configure Containers View

This page lists configuration options for the Containers page in Datadog. To learn more about the Containers page and its capabilities, see the Containers View documentation.

Configuration options

Include or exclude containers

Include and exclude containers from real-time collection:

- Exclude containers either by passing the environment variable DD_CONTAINER_EXCLUDE or by adding container_exclude: in your datadog.yaml main configuration file.
- Include containers either by passing the environment variable DD_CONTAINER_INCLUDE or by adding container_include: in your datadog.yaml main configuration file.

Both options take a list of filters, such as image:<IMAGE_NAME> or name:<CONTAINER_NAME>. Filter values also support regular expressions.

For example, to exclude all Debian images except containers with a name starting with frontend, add these two configuration lines in your datadog.yaml file:

```yaml
container_exclude: ["image:debian"]
container_include: ["name:frontend.*"]
```

Note: For Agent 5, instead of including the above in the datadog.conf main configuration file, explicitly add a datadog.yaml file to /etc/datadog-agent/, because the Process Agent expects all of its configuration options there. This configuration only excludes containers from real-time collection, not from Autodiscovery.
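The include/exclude example above can be modeled in a few lines of Python to make the evaluation order concrete. This is a minimal, hypothetical sketch, not the Agent's implementation. It assumes that a container matching any include filter is always collected (which is what the Debian/frontend example implies), that each filter uses the prefix:pattern form shown in the example, and that patterns match anywhere in the value (re.search). The Container type and all function names are illustrative.

```python
import re
from dataclasses import dataclass


@dataclass
class Container:
    # Hypothetical stand-in for the container metadata the filters inspect.
    name: str
    image: str


def parse_filters(entries: list[str]) -> list[tuple[str, re.Pattern]]:
    """Split entries like "image:debian" into (prefix, compiled pattern)."""
    filters = []
    for entry in entries:
        prefix, _, pattern = entry.partition(":")
        filters.append((prefix, re.compile(pattern)))
    return filters


def matches_any(container: Container, filters: list[tuple[str, re.Pattern]]) -> bool:
    """Return True if any filter's pattern is found in the corresponding field."""
    fields = {"name": container.name, "image": container.image}
    for prefix, regex in filters:
        value = fields.get(prefix)  # this sketch ignores prefixes other than name/image
        if value is not None and regex.search(value):
            return True
    return False


def is_collected(container: Container, include, exclude) -> bool:
    """Assumption: include filters take precedence over exclude filters."""
    if matches_any(container, include):
        return True
    return not matches_any(container, exclude)


if __name__ == "__main__":
    include = parse_filters(["name:frontend.*"])
    exclude = parse_filters(["image:debian"])
    for c in (Container("frontend-web", "debian:12"), Container("worker", "debian:12")):
        print(c.name, c.image, is_collected(c, include, exclude))
```

With these filters, the Debian-based frontend-web container is still collected because its name matches the include filter, while the Debian-based worker container is skipped.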

Scrubbing sensitive information

To prevent sensitive data from leaking, you can scrub sensitive words in container YAML files. Container scrubbing is enabled by default for Helm charts, and the following default sensitive words are provided:

- password
- passwd
- mysql_pwd
- access_token
- auth_token
- api_key
- apikey
- pwd
- secret
- credentials
- stripetoken

You can set additional sensitive words by providing a space-separated list of words in the environment variable DD_ORCHESTRATOR_EXPLORER_CUSTOM_SENSITIVE_WORDS. These words are added to the default words; they do not replace them.

Note: Additional sensitive words must be lowercase, because the Agent lowercases the text before comparing it with each pattern. For example, the word password scrubs MY_PASSWORD to MY_*******, while the word PASSWORD does not.

Set this environment variable on both of the following Agents:

- process-agent
- cluster-agent

```yaml
env:
  - name: DD_ORCHESTRATOR_EXPLORER_CUSTOM_SENSITIVE_WORDS
    value: "customword1 customword2 customword3"
```
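Because custom words extend the defaults rather than replacing them, and must be lowercase to ever match, it can help to check the value before deploying it. The following Python sketch is a hypothetical pre-flight helper, not part of the Agent. It assumes the variable holds space-separated words, as in the example above, and it raises an error for words containing uppercase letters instead of letting them silently never match.

```python
# Default sensitive words listed on this page.
DEFAULT_SENSITIVE_WORDS = (
    "password", "passwd", "mysql_pwd", "access_token", "auth_token",
    "api_key", "apikey", "pwd", "secret", "credentials", "stripetoken",
)


def effective_sensitive_words(env_value: str) -> list[str]:
    """Return the defaults plus the custom words from a space-separated string.

    Hypothetical helper: raises ValueError for words that are not lowercase,
    because such words would never match the lowercased text.
    """
    custom = env_value.split()
    not_lowercase = [word for word in custom if word != word.lower()]
    if not_lowercase:
        raise ValueError(f"sensitive words must be lowercase: {not_lowercase}")
    words = list(DEFAULT_SENSITIVE_WORDS)
    for word in custom:
        if word not in words:  # custom words extend the defaults, never replace them
            words.append(word)
    return words


if __name__ == "__main__":
    print(effective_sensitive_words("customword1 customword2 customword3"))
```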

For example, because password is a sensitive word, the scrubber changes <MY_PASSWORD> in any of the following to a string of asterisks, ***********:

```text
password <MY_PASSWORD>
password=<MY_PASSWORD>
password: <MY_PASSWORD>
password::::== <MY_PASSWORD>
```

However, the scrubber does not scrub paths that contain sensitive words. For example, it does not overwrite /etc/vaultd/secret/haproxy-crt.pem with /etc/vaultd/******/haproxy-crt.pem even though secret is a sensitive word.
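The scrubbing rules above can be illustrated with a short Python sketch. It is a simplified model, not the Agent's scrubber. It assumes that a value is masked only when it follows a sensitive word and one or more spaces, = or : characters; that patterns are compared against the lowercased text; and that each character of the value is replaced with an asterisk (the Agent's replacement string may differ in length). It also assumes ASCII input, so positions in the lowercased copy line up with the original text.

```python
import re


def scrub(text: str, sensitive_words: list[str]) -> str:
    """Mask the value that follows any sensitive word (simplified model)."""
    lowered = text.lower()  # words are compared against the lowercased text
    chars = list(text)  # assumes ASCII, so indexes in `lowered` match `text`
    for word in sensitive_words:
        # The word, then one or more spaces, '=' or ':' characters, then the value.
        pattern = re.compile(re.escape(word) + r"[\s=:]+(\S+)")
        for match in pattern.finditer(lowered):
            start, end = match.span(1)
            chars[start:end] = "*" * (end - start)  # same length, so indexes stay valid
    return "".join(chars)


if __name__ == "__main__":
    for line in ("password=hunter2", "password::::== hunter2", "/etc/vaultd/secret/haproxy-crt.pem"):
        print(scrub(line, ["password", "secret"]))
```

In this sketch the path /etc/vaultd/secret/haproxy-crt.pem is left alone because the / after secret is not one of the separators, which mirrors the documented behavior for paths.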

Configure Orchestrator Explorer

Resource collection compatibility matrix

The following table lists the collected resources and the minimum Agent, Cluster Agent, Helm chart, and Kubernetes versions required for each.

| Resource | Minimum Agent version | Minimum Cluster Agent version* | Minimum Helm chart version | Minimum Kubernetes version |
|---|---|---|---|---|
| ClusterRoleBindings | 7.33.0 | 1.19.0 | 2.30.9 | 1.14.0 |
| ClusterRoles | 7.33.0 | 1.19.0 | 2.30.9 | 1.14.0 |
| Clusters | 7.33.0 | 1.18.0 | 2.10.0 | 1.17.0 |
| CronJobs | 7.33.0 | 7.40.0 | 2.15.5 | 1.16.0 |
| CustomResourceDefinitions | 7.51.0 | 7.51.0 | 3.39.2 | 1.16.0 |
| CustomResources | 7.51.0 | 7.51.0 | 3.39.2 | 1.16.0 |
| DaemonSets | 7.33.0 | 1.18.0 | 2.16.3 | 1.16.0 |
| Deployments | 7.33.0 | 1.18.0 | 2.10.0 | 1.16.0 |
| HorizontalPodAutoscalers | 7.33.0 | 7.51.0 | 2.10.0 | 1.1.1 |
| Ingresses | 7.33.0 | 1.22.0 | 2.30.7 | 1.21.0 |
| Jobs | 7.33.0 | 1.18.0 | 2.15.5 | 1.16.0 |
| Namespaces | 7.33.0 | 7.41.0 | 2.30.9 | 1.17.0 |
| NetworkPolicies | 7.33.0 | 7.56.0 | 3.57.2 | 1.14.0 |
| Nodes | 7.33.0 | 1.18.0 | 2.10.0 | 1.17.0 |
| PersistentVolumeClaims | 7.33.0 | 1.18.0 | 2.30.4 | 1.17.0 |
| PersistentVolumes | 7.33.0 | 1.18.0 | 2.30.4 | 1.17.0 |
| Pods | 7.33.0 | 1.18.0 | 3.9.0 | 1.17.0 |
| ReplicaSets | 7.33.0 | 1.18.0 | 2.10.0 | 1.16.0 |
| RoleBindings | 7.33.0 | 1.19.0 | 2.30.9 | 1.14.0 |
| Roles | 7.33.0 | 1.19.0 | 2.30.9 | 1.14.0 |
| ServiceAccounts | 7.33.0 | 1.19.0 | 2.30.9 | 1.17.0 |
| Services | 7.33.0 | 1.18.0 | 2.10.0 | 1.17.0 |
| StatefulSets | 7.33.0 | 1.15.0 | 2.20.1 | 1.16.0 |
| VerticalPodAutoscalers | 7.33.0 | 7.46.0 | 3.6.8 | 1.16.0 |

Note: After version 1.22, Cluster Agent version numbering follows Agent release numbering, starting with version 7.39.0.
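To check a cluster against the matrix, you can compare dotted version numbers component by component. The Python sketch below copies three rows from the table (with any leading v dropped) and reports which minimums are not met. It is illustrative only: the installed-version dictionary and the function names are hypothetical, and it assumes plain numeric versions without suffixes such as -rc. Because the Cluster Agent moved from 1.x to 7.39.0 after version 1.22, ordinary numeric ordering still ranks every 7.x release above every 1.x release.

```python
# Three rows copied from the table above: Agent, Cluster Agent, Helm chart, Kubernetes.
MINIMUM_VERSIONS = {
    "Pods": ("7.33.0", "1.18.0", "3.9.0", "1.17.0"),
    "CronJobs": ("7.33.0", "7.40.0", "2.15.5", "1.16.0"),
    "CustomResources": ("7.51.0", "7.51.0", "3.39.2", "1.16.0"),
}
COMPONENTS = ("agent", "cluster_agent", "helm_chart", "kubernetes")


def parse_version(version: str) -> tuple[int, ...]:
    """Turn "v1.28" or "7.52.0" into a comparable tuple, padded to three parts."""
    parts = [int(part) for part in version.lstrip("v").split(".")]
    return tuple(parts + [0] * (3 - len(parts)))


def unmet_minimums(resource: str, installed: dict[str, str]) -> list[str]:
    """List the components whose installed version is below the table minimum."""
    return [
        f"{component} >= {minimum}"
        for component, minimum in zip(COMPONENTS, MINIMUM_VERSIONS[resource])
        if parse_version(installed[component]) < parse_version(minimum)
    ]


if __name__ == "__main__":
    installed = {  # hypothetical cluster
        "agent": "7.52.0",
        "cluster_agent": "1.22.0",
        "helm_chart": "3.40.0",
        "kubernetes": "v1.28.3",
    }
    for resource in MINIMUM_VERSIONS:
        print(resource, unmet_minimums(resource, installed) or "supported")
```

With these hypothetical versions, Pods are supported, while CronJobs and CustomResources both need a newer Cluster Agent.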

Add custom tags to resources

You can add custom tags to Kubernetes resources to make filtering easier in the Kubernetes resources view.

Additional tags are added through the DD_ORCHESTRATOR_EXPLORER_EXTRA_TAGS environment variable.

Note: These tags only show up in the Kubernetes resources view.

If you are using the Datadog Operator, add the environment variable on both the Process Agent and the Cluster Agent by setting override.nodeAgent.containers.process-agent.env and override.clusterAgent.env in datadog-agent.yaml.

```yaml
apiVersion: datadoghq.com/v2alpha1
kind: DatadogAgent
metadata:
  name: datadog
spec:
  global:
    credentials:
      apiKey: <DATADOG_API_KEY>
      appKey: <DATADOG_APP_KEY>
  features:
    liveContainerCollection:
      enabled: true
    orchestratorExplorer:
      enabled: true
  override:
    nodeAgent:
      containers:
        process-agent:
          env:
            - name: "DD_ORCHESTRATOR_EXPLORER_EXTRA_TAGS"
              value: "tag1:value1 tag2:value2"
    clusterAgent:
      env:
        - name: "DD_ORCHESTRATOR_EXPLORER_EXTRA_TAGS"
          value: "tag1:value1 tag2:value2"
```

Then, apply the new configuration:

```shell
kubectl apply -n $DD_NAMESPACE -f datadog-agent.yaml
```

If you are using the official Helm chart, add the environment variable on both the Process Agent and the Cluster Agent by setting agents.containers.processAgent.env and clusterAgent.env in values.yaml.

```yaml
agents:
  containers:
    processAgent:
      env:
        - name: "DD_ORCHESTRATOR_EXPLORER_EXTRA_TAGS"
          value: "tag1:value1 tag2:value2"
clusterAgent:
  env:
    - name: "DD_ORCHESTRATOR_EXPLORER_EXTRA_TAGS"
      value: "tag1:value1 tag2:value2"
```

Then, upgrade your Helm chart.

For other installation methods, set the environment variable on both the Process Agent and Cluster Agent containers:

```yaml
- name: DD_ORCHESTRATOR_EXPLORER_EXTRA_TAGS
  value: "tag1:value1 tag2:value2"
```
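DD_ORCHESTRATOR_EXPLORER_EXTRA_TAGS holds the tags as a single space-separated string of key:value pairs, as in the examples above, and the same string is set on both the Process Agent and the Cluster Agent. The hypothetical Python helper below builds that string from a dictionary. It assumes the value is split on whitespace, so it rejects empty keys and any key or value that contains whitespace; it is a convenience sketch for generating configuration, not a Datadog tool.

```python
def format_extra_tags(tags: dict[str, str]) -> str:
    """Join tags into the "key:value key:value" form used in the examples above.

    Assumption: the value is split on whitespace, so keys and values that
    contain whitespace (and empty keys) are rejected here.
    """
    parts = []
    for key, value in tags.items():
        if not key or any(ch.isspace() for ch in key + value):
            raise ValueError(f"invalid tag {key!r}:{value!r}")
        parts.append(f"{key}:{value}")
    return " ".join(parts)


if __name__ == "__main__":
    # Use the printed string as the value on both the Process Agent and the Cluster Agent.
    print(format_extra_tags({"tag1": "value1", "tag2": "value2"}))
```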

Collect custom resources

The Kubernetes Explorer collects CustomResourceDefinitions (CRDs) by default.

Follow these steps to collect the custom resources that these CRDs define:

In Datadog, open Kubernetes Explorer. On the left panel, under Select Resources, select Kubernetes > Custom Resources > Resource Definitions.

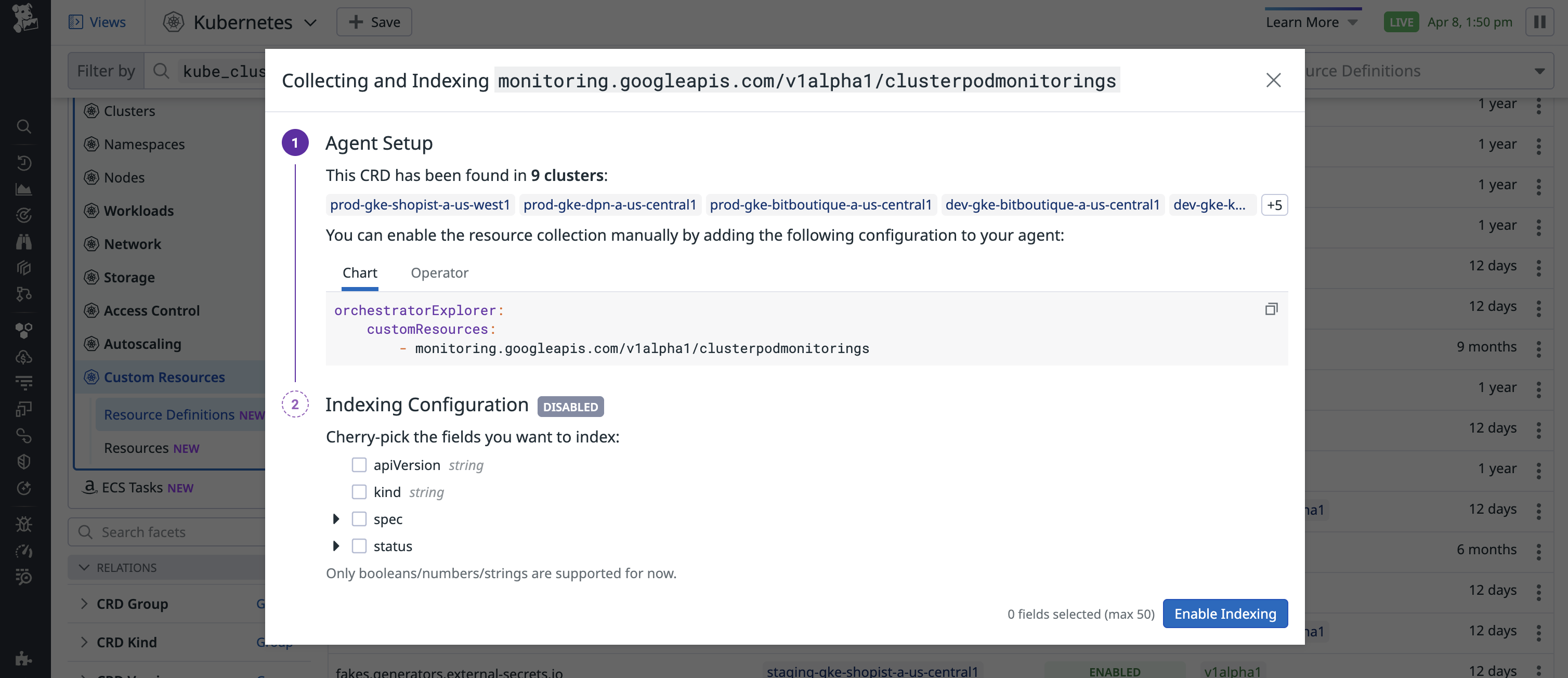

Locate the CRD that defines the custom resource you want to visualize in the explorer. Under the Indexing column, click ENABLED or DISABLED.

If your CRD has multiple versions, you are prompted to select which version you want to configure indexing for. A modal appears.

Follow the instructions in the modal’s Agent Setup section to update your Datadog Agent configuration:

If you are using the official Helm chart, add the following configuration to datadog-values.yaml:

```yaml
datadog:
  #(...)
  orchestratorExplorer:
    customResources:
      - <CUSTOM_RESOURCE_NAME>
```

Upgrade your Helm chart:

```shell
helm upgrade -f datadog-values.yaml <RELEASE_NAME> datadog/datadog
```

If you are using the Datadog Operator, install it with an option that grants the Datadog Agent permission to collect custom resources:

```shell
helm install datadog-operator datadog/datadog-operator --set clusterRole.allowReadAllResources=true
```

Add the following configuration to your DatadogAgent manifest, datadog-agent.yaml:

```yaml
apiVersion: datadoghq.com/v2alpha1
kind: DatadogAgent
metadata:
  name: datadog
spec:
  #(...)
  features:
    orchestratorExplorer:
      customResources:
        - <CUSTOM_RESOURCE_NAME>
```

Apply your new configuration:

```shell
kubectl apply -n $DD_NAMESPACE -f datadog-agent.yaml
```

Each <CUSTOM_RESOURCE_NAME> must use the format group/version/kind.

On the modal, under Indexing Configuration, select the fields you want to index from the custom resource.

Select Enable Indexing to save.

You can select a maximum of 50 fields for each resource.

After the fields are indexed, you can add them as columns in the explorer or as part of Saved Views.
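Before enabling indexing for several custom resources, you might check a planned selection against the two constraints stated above: each <CUSTOM_RESOURCE_NAME> uses the group/version/kind format, and at most 50 fields are indexed per resource. The Python sketch below is a hypothetical planning helper (the function name and the example.com/v1/widgets resource are made up). It only checks the shape of each name and the field count; it does not verify that the resource exists in your cluster.

```python
MAX_INDEXED_FIELDS = 50  # per-resource limit stated above


def validate_selection(selection: dict[str, list[str]]) -> list[str]:
    """Return a list of problems with a {resource name: fields to index} plan."""
    problems = []
    for name, fields in selection.items():
        parts = name.split("/")
        # Expect exactly three non-empty segments: group/version/kind.
        if len(parts) != 3 or not all(parts):
            problems.append(f"{name}: expected group/version/kind")
        unique_fields = set(fields)
        if len(unique_fields) > MAX_INDEXED_FIELDS:
            problems.append(
                f"{name}: {len(unique_fields)} fields selected, maximum is {MAX_INDEXED_FIELDS}"
            )
    return problems


if __name__ == "__main__":
    plan = {"example.com/v1/widgets": ["spec.size", "status.phase"]}  # hypothetical resource
    print(validate_selection(plan) or "selection looks valid")
```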
