
Destinations

Overview

Use the Observability Pipelines Worker to send your processed logs and metrics (Preview) to different destinations. Most Observability Pipelines destinations send events to the downstream integration in batches. See Event batching for more information. Some destinations also have fields that support template syntax, so you can set the values of those fields dynamically based on specific log fields. See Template syntax for more information.

Select a destination in the left navigation menu to see more information about it.

Destinations

These are the available destinations:

- Amazon OpenSearch

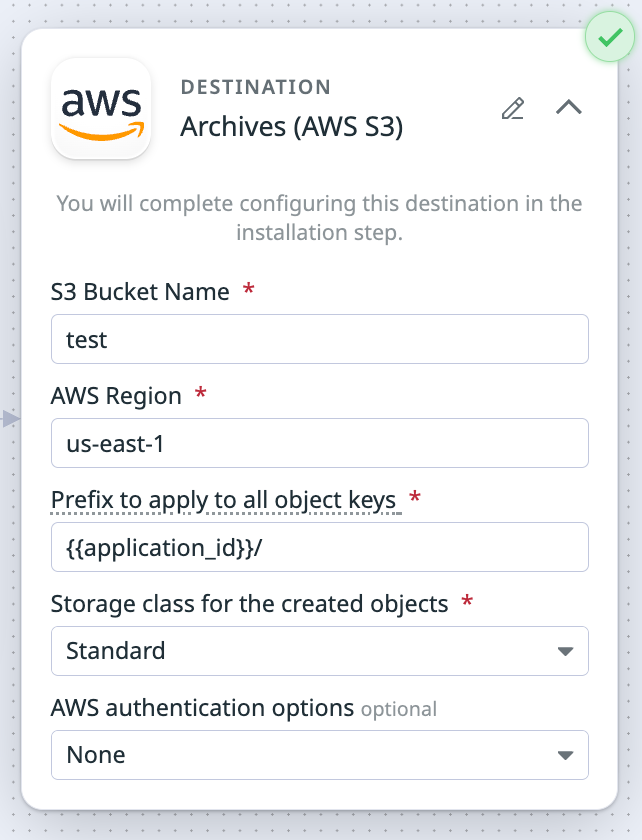

- Amazon S3

- Amazon Security Lake

- Azure Storage

- Datadog CloudPrem

- CrowdStrike Next-Gen SIEM

- Datadog Logs

- Elasticsearch

- Google Cloud Storage

- Google Pub/Sub

- Google SecOps

- HTTP Client

- Kafka

- Microsoft Sentinel

- New Relic

- OpenSearch

- SentinelOne

- Socket

- Splunk HTTP Event Collector (HEC)

- Sumo Logic Hosted Collector

- Syslog

Template syntax

Logs are often stored in separate indexes based on their content, such as the service or environment the logs come from, or another log attribute. In Observability Pipelines, you can use template syntax to route your logs to different indexes based on specific log fields.

When the Observability Pipelines Worker cannot resolve a templated field (for example, because the referenced field is missing from the log), it falls back to a default behavior specific to that destination. For example, if you use the template {{application_id}} in the Amazon S3 destination's Prefix field and a log has no application_id field, the Worker creates a folder called OP_UNRESOLVED_TEMPLATE_LOGS/ and writes the log there.
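The following Python sketch illustrates that fallback for the Amazon S3 Prefix field. It is not the Worker's implementation: the `resolve_prefix` helper, the flat-dictionary event model, and the regular expression used to find `{{ field }}` placeholders are all assumptions made for illustration. Only the `OP_UNRESOLVED_TEMPLATE_LOGS/` folder name comes from this page.

```python
import re

# Assumption: placeholders look like {{ name }} (spaces optional) and the
# event is a flat dict mapping field names to values.
FIELD_PATTERN = re.compile(r"\{\{\s*(\w+)\s*\}\}")

# Fallback folder documented for the Amazon S3 Prefix field.
UNRESOLVED_PREFIX = "OP_UNRESOLVED_TEMPLATE_LOGS/"


def resolve_prefix(template: str, event: dict) -> str:
    """Hypothetical helper: render a Prefix template, or return the
    fallback folder if any referenced field is missing from the event."""
    missing = []

    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in event:
            missing.append(name)  # remember the unresolved field
            return ""
        return str(event[name])

    rendered = FIELD_PATTERN.sub(substitute, template)
    return UNRESOLVED_PREFIX if missing else rendered


print(resolve_prefix("{{ application_id }}/", {"application_id": "checkout"}))  # checkout/
print(resolve_prefix("{{ application_id }}/", {"service": "web"}))  # OP_UNRESOLVED_TEMPLATE_LOGS/
```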

The following table lists the destinations and fields that support template syntax, and what happens when the Worker cannot resolve the field:

| Destination | Fields that support template syntax | Behavior when the field cannot be resolved |
|---|---|---|
| Amazon OpenSearch | Index | The Worker writes logs to the datadog-op index. |
| Amazon S3 | Prefix | The Worker creates a folder named OP_UNRESOLVED_TEMPLATE_LOGS/ and writes the logs there. |
| Azure Storage | Prefix | The Worker creates a folder named OP_UNRESOLVED_TEMPLATE_LOGS/ and writes the logs there. |
| Elasticsearch | Index | The Worker writes logs to the datadog-op index. |
| Google Chronicle | Log type | The Worker defaults to the DATADOG log type. |
| Google Cloud Storage | Prefix | The Worker creates a folder named OP_UNRESOLVED_TEMPLATE_LOGS/ and writes the logs there. |
| OpenSearch | Index | The Worker writes logs to the datadog-op index. |
| Splunk HEC | Index, Source type | Index: the Worker sends the logs to the default index configured in Splunk. Source type: the Worker defaults to the httpevent sourcetype. |

Example

To route logs to the Amazon S3 destination based on the log's application ID field (for example, application_id), enter the event field syntax for that field in the destination's "Prefix to apply to all object keys" field.

Syntax

Event fields

Use {{ <field_name> }} to access individual log event fields. For example:

`{{ application_id }}`

Strftime specifiers

Use strftime specifiers for the date and time. For example:

`year=%Y/month=%m/day=%d`

Escape characters

Prefix a character with `\` to escape it. This example escapes the event field syntax:

`\{{ field_name }}`

This example escapes the strftime specifiers:

`year=\%Y/month=\%m/day=\%d/`
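The three syntax rules above can be combined into a single renderer. This is a hypothetical Python sketch, not the Worker's parser: the `render` function, the `UnresolvedField` exception, the flat-dictionary event, and passing the timestamp in as an explicit argument are all assumptions. It scans the template once, treating `\x` as a literal `x`, `{{ name }}` as an event field, and `%x` as a strftime specifier, so the escaped examples above come out as literal text.

```python
import re
from datetime import datetime, timezone

# One token per match; the alternatives are tried in order: an escaped
# character (\x), an event field ({{ name }}), or a strftime specifier (%x).
TOKEN = re.compile(r"\\(.)|\{\{\s*(\w+)\s*\}\}|(%[A-Za-z%])", re.DOTALL)


class UnresolvedField(KeyError):
    """Hypothetical error for a field the event does not contain."""


def render(template: str, event: dict, ts: datetime) -> str:
    """Expand a template against a flat event dict and a timestamp (sketch)."""

    def substitute(m: re.Match) -> str:
        escaped, field, spec = m.groups()
        if escaped is not None:
            return escaped  # \x becomes a literal x
        if field is not None:
            if field not in event:
                raise UnresolvedField(field)  # caller applies the destination's fallback
            return str(event[field])
        return ts.strftime(spec)  # expand one specifier, e.g. %Y -> 2024

    return TOKEN.sub(substitute, template)


ts = datetime(2024, 3, 5, tzinfo=timezone.utc)
print(render("{{ application_id }}/year=%Y/month=%m/day=%d", {"application_id": "app1"}, ts))
# app1/year=2024/month=03/day=05
print(render(r"\{{ field_name }}", {}, ts))
# {{ field_name }}
```

Raising an exception for a missing field, rather than substituting an empty string, leaves the choice of fallback (an index, a folder, or a log type) to the caller, which mirrors how the fallback differs per destination in the table above.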

Event batching

Observability Pipelines destinations send events to the downstream integration in batches. A batch is flushed as soon as any one of the following limits is reached:

- Maximum number of events

- Maximum number of bytes

- Timeout (seconds)

For example, suppose a destination's parameters are:

- Maximum number of events = 2

- Maximum number of bytes = 100,000

- Timeout (seconds) = 5

If the destination receives 1 event in a 5-second window, it flushes that batch when the 5-second timeout expires.

If the destination receives 3 events within 2 seconds, it flushes a batch with 2 events and then flushes a second batch with the remaining event after 5 seconds. If the destination receives 1 event that is larger than 100,000 bytes, it flushes a batch containing only that event.
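To make the flush rules concrete, here is a toy Python model that replays the example above. It is a sketch under stated assumptions, not how the Worker is built: the `Batcher` class is hypothetical, event sizes are given directly in bytes, and the timeout is assumed to be measured from the arrival of the first event in the current batch.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Batcher:
    """Hypothetical model of count/bytes/timeout batching."""
    max_events: Optional[int]  # None means no event-count limit
    max_bytes: int
    timeout_s: float
    pending: List[int] = field(default_factory=list)  # sizes of buffered events
    opened_at: Optional[float] = None  # assumption: timeout starts at first buffered event
    flushes: List[Tuple[float, int, str]] = field(default_factory=list)  # (time, count, reason)

    def _flush(self, now: float, reason: str) -> None:
        if self.pending:
            self.flushes.append((now, len(self.pending), reason))
        self.pending = []
        self.opened_at = None

    def advance(self, now: float) -> None:
        # If the timeout expired before `now`, flush at the deadline.
        if self.opened_at is not None and now >= self.opened_at + self.timeout_s:
            self._flush(self.opened_at + self.timeout_s, "timeout")

    def receive(self, now: float, size: int) -> None:
        self.advance(now)
        # Flush first if this event would push the current batch over the byte limit.
        if self.pending and sum(self.pending) + size > self.max_bytes:
            self._flush(now, "bytes")
        if self.opened_at is None:
            self.opened_at = now
        self.pending.append(size)
        if self.max_events is not None and len(self.pending) >= self.max_events:
            self._flush(now, "events")
        elif sum(self.pending) >= self.max_bytes:
            self._flush(now, "bytes")  # e.g. a single oversized event


# 3 small events within 2 seconds, with the example's parameters.
b = Batcher(max_events=2, max_bytes=100_000, timeout_s=5)
for t in (0.0, 1.0, 2.0):
    b.receive(t, 500)
b.advance(10.0)
print(b.flushes)  # [(1.0, 2, 'events'), (7.0, 1, 'timeout')]
```

Under this model's assumption, the leftover third event is flushed 5 seconds after it arrived (t = 7.0). The page does not state which event the timeout is measured from, so treat that detail as illustrative.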

| Destination | Maximum Events | Maximum Bytes | Timeout (seconds) |
|---|---|---|---|
| Amazon OpenSearch | None | 10,000,000 | 1 |
| Amazon S3 (Datadog Log Archives) | None | 100,000,000 | 900 |
| Amazon Security Lake | None | 256,000,000 | 300 |
| Azure Storage (Datadog Log Archives) | None | 100,000,000 | 900 |
| CrowdStrike | None | 1,000,000 | 1 |
| Datadog Logs | 1,000 | 4,250,000 | 5 |
| Elasticsearch | None | 10,000,000 | 1 |
| Google Chronicle | None | 1,000,000 | 15 |
| Google Cloud Storage (Datadog Log Archives) | None | 100,000,000 | 900 |
| HTTP Client | 1,000 | 1,000,000 | 1 |
| Microsoft Sentinel | None | 10,000,000 | 1 |
| New Relic | 100 | 1,000,000 | 1 |
| OpenSearch | None | 10,000,000 | 1 |
| SentinelOne | None | 1,000,000 | 1 |
| Socket* | N/A | N/A | N/A |
| Splunk HTTP Event Collector (HEC) | None | 1,000,000 | 1 |
| Sumo Logic Hosted Collector | None | 10,000,000 | 1 |
| Syslog* | N/A | N/A | N/A |

*These destinations do not batch events.