Managed Evaluations

Overview

Managed evaluations are built-in tools to assess your LLM application on dimensions like quality, security, and safety. By creating them, you can assess the effectiveness of your application’s responses, including detection of sentiment, topic relevancy, toxicity, failure to answer, and hallucination.

LLM Observability associates evaluations with individual spans so you can view the inputs and outputs that led to a specific evaluation.

LLM Observability managed evaluations leverage LLMs. To connect your LLM provider to Datadog, you need a key from the provider.

Learn more about the compatibility requirements.

Connect your LLM provider account

Configure the LLM provider you would like to use for bring-your-own-key (BYOK) evaluations. You only have to complete this step once.

If you are subject to HIPAA, you are responsible for ensuring that you connect only to an OpenAI account that is subject to a business associate agreement (BAA) and meets all requirements for HIPAA compliance.

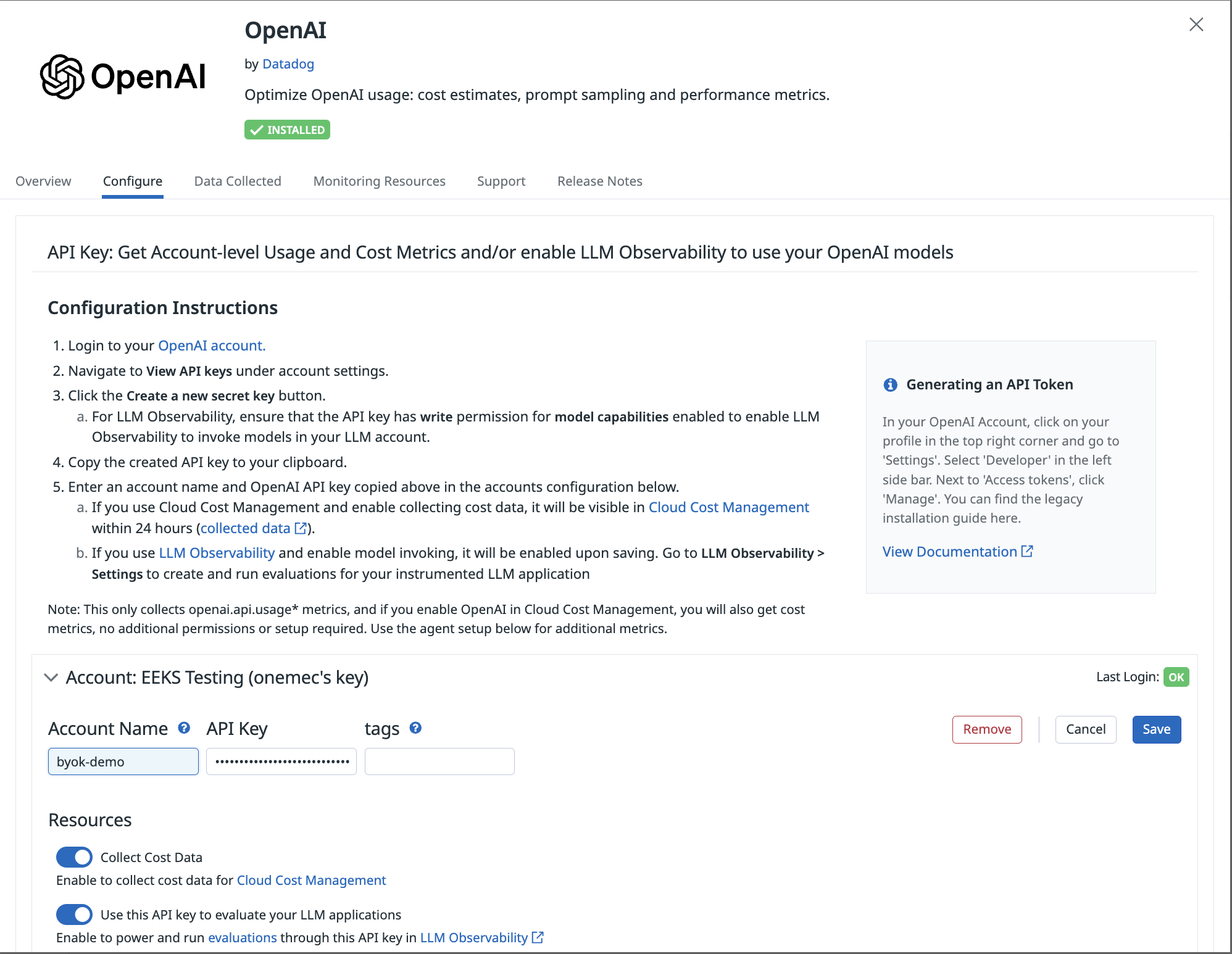

Connect your OpenAI account to LLM Observability with your OpenAI API key. LLM Observability uses the GPT-4o mini model for evaluations.

- In Datadog, navigate to LLM Observability > Settings > Integrations.

- Select Connect on the OpenAI tile.

- Follow the instructions on the tile.

- Provide your OpenAI API key. Ensure that this key has write permission for model capabilities.

- Enable Use this API key to evaluate your LLM applications.

LLM Observability does not support data residency for OpenAI.

If you are subject to HIPAA, you are responsible for ensuring that you connect only to an Azure OpenAI account that is subject to a business associate agreement (BAA) and meets all requirements for HIPAA compliance.

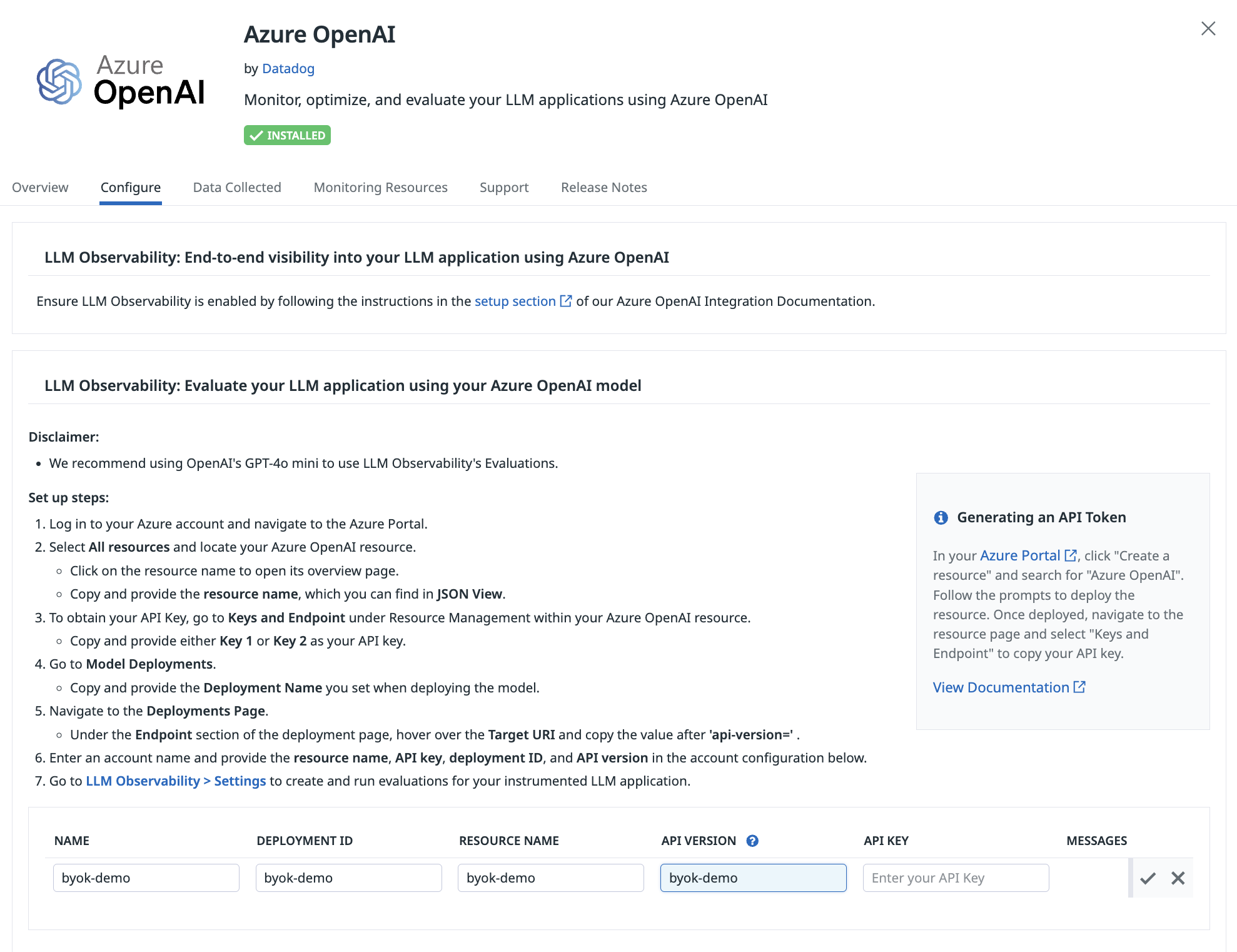

Connect your Azure OpenAI account to LLM Observability with your Azure OpenAI API key. Datadog strongly recommends using the GPT-4o mini model for evaluations. The selected model version must support structured output.

- In Datadog, navigate to LLM Observability > Settings > Integrations.

- Select Connect on the Azure OpenAI tile.

- Follow the instructions on the tile.

- Provide your Azure OpenAI API key. Ensure that this key has write permission for model capabilities.

- Provide the Resource Name, Deployment ID, and API version to complete integration.

If you are subject to HIPAA, you are responsible for ensuring that you connect only to an Anthropic account that is subject to a business associate agreement (BAA) and meets all requirements for HIPAA compliance.

Connect your Anthropic account to LLM Observability with your Anthropic API key. LLM Observability uses the Haiku model for evaluations.

- In Datadog, navigate to LLM Observability > Settings > Integrations.

- Select Connect on the Anthropic tile.

- Follow the instructions on the tile.

- Provide your Anthropic API key. Ensure that this key has write permission for model capabilities.

If you are subject to HIPAA, you are responsible for ensuring that you connect only to an Amazon Bedrock account that is subject to a business associate agreement (BAA) and meets all requirements for HIPAA compliance.

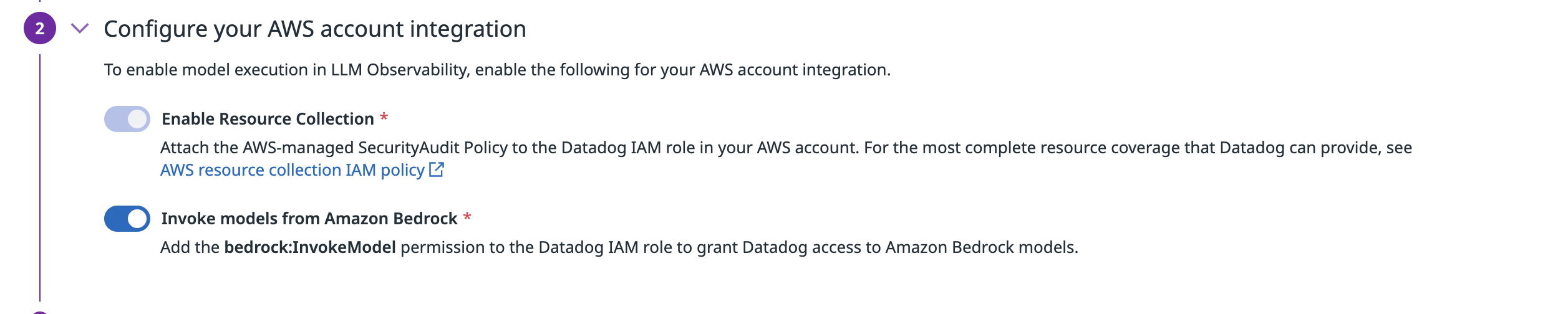

Connect your Amazon Bedrock account to LLM Observability with your AWS Account. LLM Observability uses the Haiku model for evaluations.

- In Datadog, navigate to LLM Observability > Settings > Integrations.

- Select Connect on the Amazon Bedrock tile.

- Follow the instructions on the tile.

- Be sure to configure the Invoke models from Amazon Bedrock role to run evaluations. More details about the InvokeModel action can be found in the Amazon Bedrock API reference documentation.
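As a rough illustration of what permission for the InvokeModel action looks like, the sketch below builds a minimal IAM policy document in Python. The `bedrock:InvokeModel` action name comes from the Amazon Bedrock API; the function name and the wildcard resource are assumptions made for this example, and the instructions on the integration tile remain the authoritative source for the exact role setup.

```python
import json


def build_invoke_model_policy(resource_arn: str = "*") -> dict:
    """Return a minimal IAM policy document that allows bedrock:InvokeModel.

    The wildcard default is an assumption for illustration only; scope the
    resource to specific model ARNs where your setup allows it.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["bedrock:InvokeModel"],
                "Resource": resource_arn,
            }
        ],
    }


if __name__ == "__main__":
    # Print the policy as JSON so it can be reviewed before use.
    print(json.dumps(build_invoke_model_policy(), indent=2))
```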

If you are subject to HIPAA, you are responsible for ensuring that you connect only to a Google Cloud Platform account that is subject to a business associate agreement (BAA) and meets all requirements for HIPAA compliance.

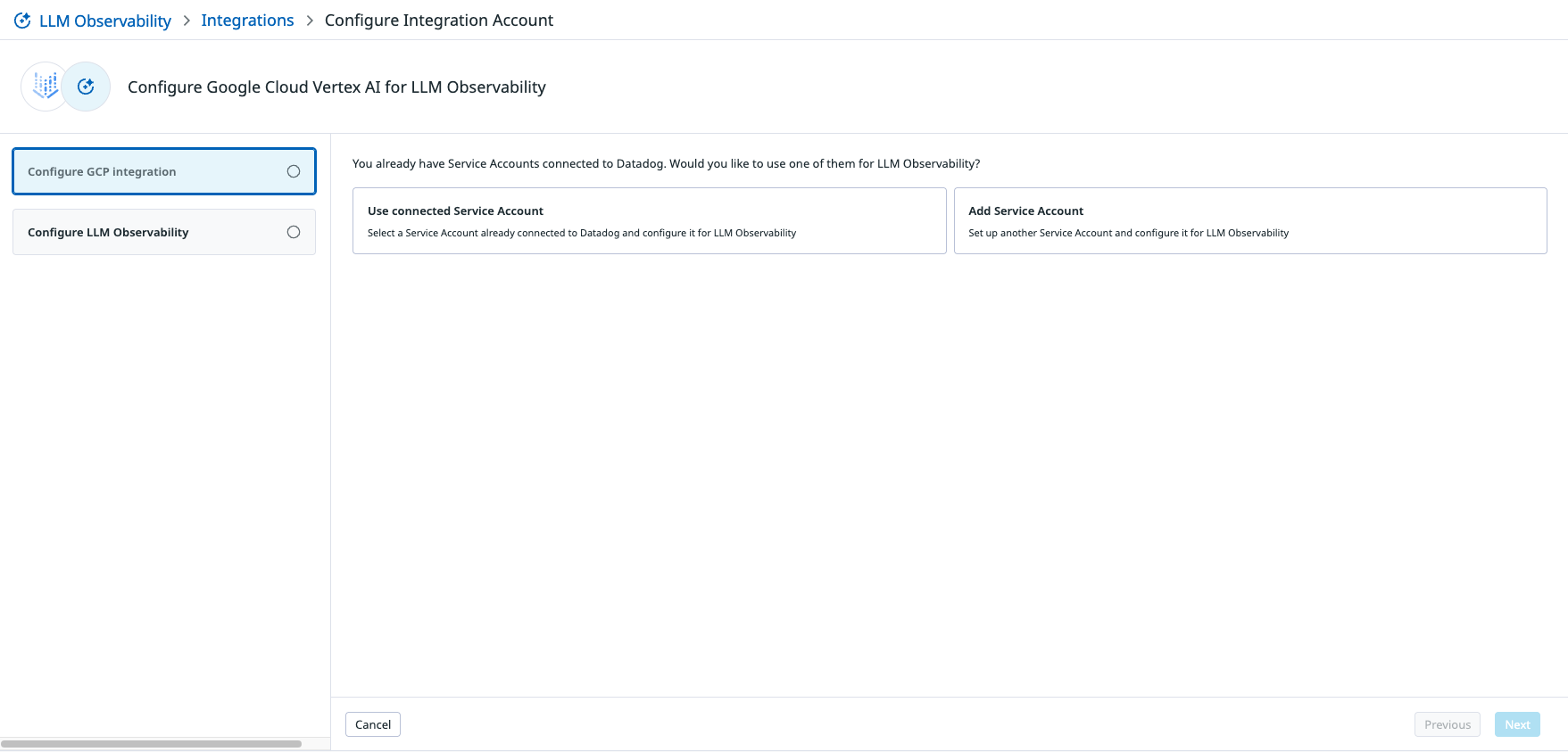

Connect Vertex AI to LLM Observability with your Google Cloud Platform account. LLM Observability uses the gemini-2.5-flash model for evaluations.

- In Datadog, navigate to LLM Observability > Settings > Integrations.

- On the Google Cloud Vertex AI tile, click Connect to add a new GCP account, or click Configure next to where your existing accounts are listed to begin the onboarding process.

- All GCP accounts connected to Datadog are listed on this page. However, you must still go through the onboarding process for an account before you can use it in LLM Observability.

- Follow the onboarding instructions to configure your account.

- Add the Vertex AI User role to your account and enable the Vertex AI API.

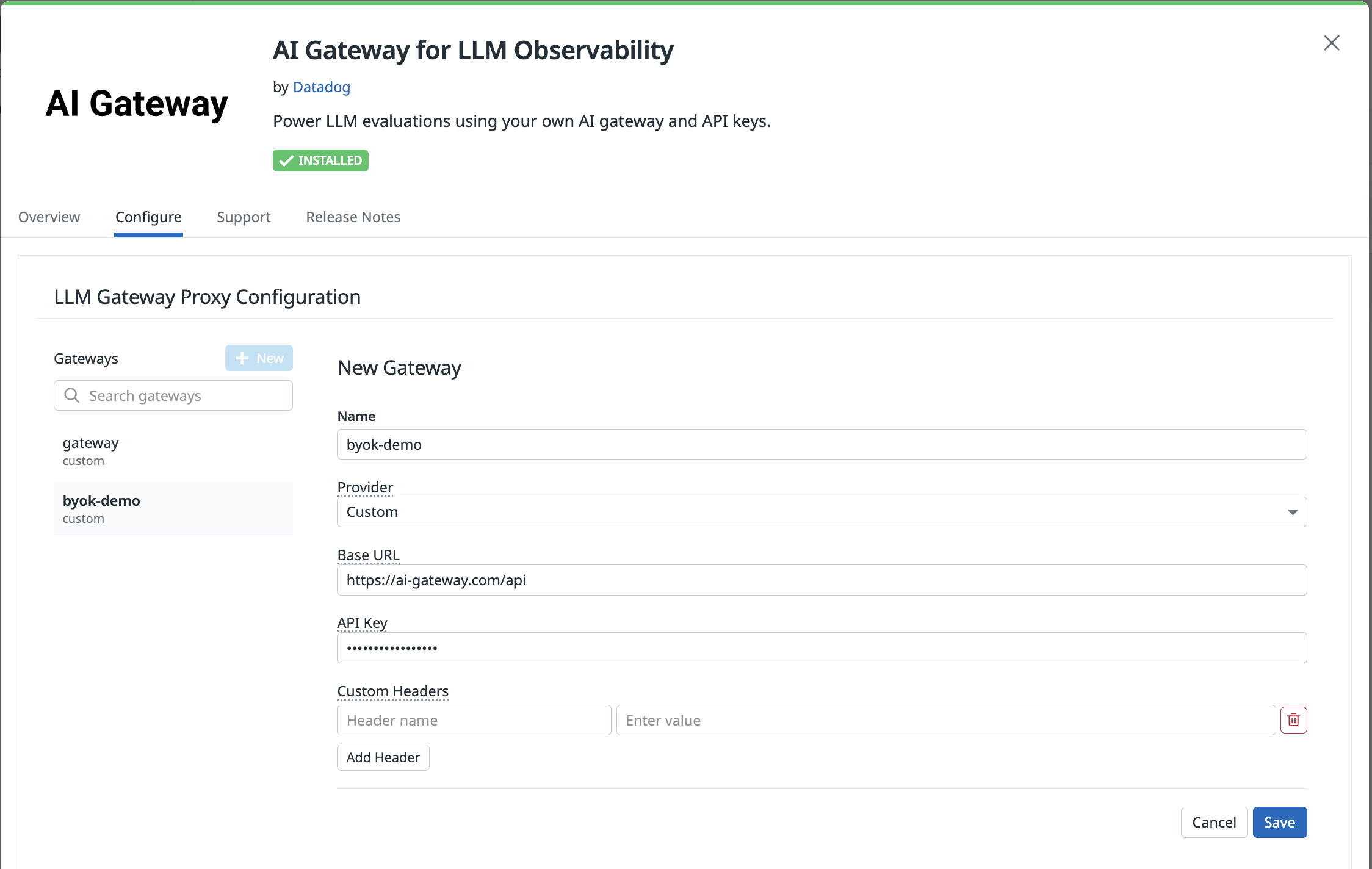

If you are subject to HIPAA, you are responsible for ensuring that you only connect to an AI Gateway that is subject to a business associate agreement (BAA) and meets all requirements for HIPAA compliance.

Your AI Gateway must be compatible with the OpenAI API specification.

Connect your AI Gateway to LLM Observability with your base URL, API key, and headers.

- In Datadog, navigate to LLM Observability > Settings > Integrations.

- Click the Configure tab, then click New to create a new gateway.

- Follow the instructions on the tile.

- Provide a name for your gateway.

- Select your provider.

- Provide your base URL.

- Provide your API key and optionally any headers.
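In practice, compatibility with the OpenAI API specification means the gateway accepts chat completion requests in the OpenAI shape. The sketch below builds such a request without sending it, which can help when checking that a base URL, key, and headers fit together. The gateway URL, key, model name, and extra header are hypothetical, and the `/chat/completions` path assumes a base URL that already includes the version prefix; how Datadog itself calls your gateway is not described here.

```python
import json
import urllib.request


def build_chat_request(base_url, api_key, model, prompt, extra_headers=None):
    """Build (but do not send) an OpenAI-style chat completion request."""
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }
    # Optional gateway-specific headers override or extend the defaults.
    headers.update(extra_headers or {})
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )


if __name__ == "__main__":
    # All values below are placeholders for illustration.
    req = build_chat_request(
        "https://gateway.example.com/v1",
        "my-api-key",
        "my-model",
        "ping",
        extra_headers={"X-Team": "observability"},
    )
    print(req.get_method(), req.full_url)
```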

If your LLM provider restricts IP addresses, you can obtain the required IP ranges by visiting Datadog’s IP ranges documentation, selecting your Datadog Site, pasting the GET URL into your browser, and copying the webhooks section.
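The copy step above can also be scripted. The following sketch pulls the webhooks prefixes out of an already-downloaded IP ranges document. It assumes the JSON has a top-level `webhooks` object containing `prefixes_ipv4` and `prefixes_ipv6` lists; the sample data uses reserved documentation addresses, not real Datadog ranges, and the function name is made up for this example.

```python
import json


def webhook_prefixes(ip_ranges: dict) -> list:
    """Return the IPv4 and IPv6 CIDR prefixes from the webhooks section.

    Assumes the shape {"webhooks": {"prefixes_ipv4": [...], "prefixes_ipv6": [...]}};
    missing keys yield an empty list rather than an error.
    """
    section = ip_ranges.get("webhooks", {})
    return list(section.get("prefixes_ipv4", [])) + list(section.get("prefixes_ipv6", []))


# Placeholder document using reserved documentation ranges.
SAMPLE = json.loads("""
{
  "webhooks": {
    "prefixes_ipv4": ["192.0.2.0/24"],
    "prefixes_ipv6": ["2001:db8::/32"]
  }
}
""")

if __name__ == "__main__":
    for prefix in webhook_prefixes(SAMPLE):
        print(prefix)
```

To use it with real data, load the JSON returned by the GET URL for your Datadog site and pass the parsed result to `webhook_prefixes`.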

Create new evaluations

- Navigate to AI Observability > Evaluations.

- Click the Create Evaluation button in the top-right corner.

- Select a specific managed evaluation. This opens the evaluation editor window.

- Select the LLM application(s) you want to configure your evaluation for.

- Select the LLM provider and corresponding account.

- If you select an Amazon Bedrock account, choose the region the account is configured for.

- If you select a Vertex account, choose a project and location.

- Configure the data to run the evaluation on:

- Select Traces (filtering for the root span of each trace) or All Spans (no filtering).

- (Optional) Specify any or all tags you want this evaluation to run on.

- (Optional) Select what percentage of spans you would like this evaluation to run on by configuring the sampling percentage. This number must be greater than 0 and less than or equal to 100 (sampling all spans).

- (Optional) Configure evaluation options by selecting what subcategories should be flagged. Only available on some evaluations.
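To make the data selection options concrete, here is a minimal sketch of how scope, tag filters, and sampling could combine. It is not Datadog's implementation: the `Span` type, the function names, and the choice to require all listed tags to match are assumptions for illustration. Only the sampling bounds (greater than 0, at most 100) and the root-span behaviour of Traces come from the steps above.

```python
import random
from dataclasses import dataclass, field
from typing import Optional, Set


@dataclass
class Span:
    """Hypothetical, simplified span: a root span has no parent."""
    span_id: str
    parent_id: Optional[str] = None
    tags: Set[str] = field(default_factory=set)


def validate_sampling_percentage(pct: float) -> float:
    """Enforce the documented range: greater than 0, at most 100."""
    if not (0 < pct <= 100):
        raise ValueError("sampling percentage must be > 0 and <= 100")
    return pct


def select_spans(spans, scope="traces", required_tags=frozenset(), pct=100.0, rng=None):
    """Return the spans an evaluation would run on under these assumptions."""
    validate_sampling_percentage(pct)
    rng = rng or random.Random()
    selected = []
    for span in spans:
        # "traces" keeps only root spans; any other scope keeps every span.
        if scope == "traces" and span.parent_id is not None:
            continue
        # Assumption: a span must carry every requested tag.
        if not set(required_tags) <= span.tags:
            continue
        # random() is in [0, 1), so pct=100 always keeps the span.
        if rng.random() * 100 < pct:
            selected.append(span)
    return selected
```

For example, `select_spans(spans, scope="all_spans", required_tags={"env:prod"}, pct=25)` would keep roughly a quarter of the spans tagged `env:prod`, regardless of whether they are root spans.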

After you click Save and Publish, LLM Observability uses the LLM account you connected to power the evaluation you enabled. Alternatively, you can Save as Draft and edit or enable the evaluation later.

Edit existing evaluations

- Navigate to AI Observability > Evaluations.

- Hover over the evaluation you want to edit and click the Edit button.

Estimated token usage

You can monitor the token usage of your managed LLM evaluations using this dashboard.

If you need more details, the following metrics allow you to track the LLM resources consumed to power evaluations:

- ml_obs.estimated_usage.llm.input.tokens

- ml_obs.estimated_usage.llm.output.tokens

- ml_obs.estimated_usage.llm.total.tokens

Each of these metrics has ml_app, model_server, model_provider, model_name, and evaluation_name tags, allowing you to pinpoint specific applications, models, and evaluations contributing to your usage.
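As an example of using these tags, the sketch below assembles a metric query string that sums token usage for one application and groups it by evaluation. The helper name and the `my-app` value are hypothetical; the query follows the general Datadog `aggregator:metric{scope} by {tags}` form, so treat the exact string as a starting point to adjust in the metrics explorer or a dashboard widget.

```python
# Tags listed in this section for the estimated usage metrics.
KNOWN_TAGS = {"ml_app", "model_server", "model_provider", "model_name", "evaluation_name"}


def usage_query(metric, filters=None, group_by=("evaluation_name",)):
    """Build a sum query over `metric`, filtered and grouped by known tags."""
    filters = filters or {}
    unknown = (set(filters) | set(group_by)) - KNOWN_TAGS
    if unknown:
        raise ValueError(f"unknown tags: {sorted(unknown)}")
    # An empty filter set becomes "*", meaning no scope restriction.
    scope = ",".join(f"{key}:{value}" for key, value in sorted(filters.items())) or "*"
    query = f"sum:{metric}{{{scope}}}"
    if group_by:
        query += " by {" + ",".join(group_by) + "}"
    return query


if __name__ == "__main__":
    print(usage_query("ml_obs.estimated_usage.llm.total.tokens", {"ml_app": "my-app"}))
```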

Quality evaluations

Quality evaluations help ensure your LLM-powered applications generate accurate, relevant, and safe responses. Managed evaluations automatically score model outputs on key quality dimensions and attach results to traces, helping you detect issues, monitor trends, and improve response quality over time. Datadog offers the following quality evaluations:

- Topic relevancy - Measures whether the model’s response stays relevant to the user’s input or task

- Hallucination - Detects when the model generates incorrect or unsupported information presented as fact

- Failure to Answer - Identifies cases where the model does not meaningfully answer the user’s question

- Language Mismatch - Flags responses that are written in a different language than the user’s input

- Sentiment - Evaluates the emotional tone of the model’s response to ensure it aligns with expectations

Security and Safety evaluations

Security and Safety evaluations help ensure your LLM-powered applications resist malicious inputs and unsafe outputs. Managed evaluations automatically detect risks like prompt injection and toxic content by scoring model interactions and tying results to trace data for investigation. Datadog offers the following security and safety evaluations:

- Toxicity - Detects harmful, offensive, or abusive language in model inputs or outputs

- Prompt Injection - Identifies attempts to manipulate the model into ignoring instructions or revealing unintended behavior

- Sensitive Data Scanning - Flags the presence of sensitive or regulated information in model inputs or outputs

Session level evaluations

Session level evaluations help ensure your LLM-powered applications successfully achieve intended user outcomes across entire interactions. These managed evaluations analyze multi-turn sessions to assess higher-level goals and behaviors that span beyond individual spans, giving insight into overall effectiveness and user satisfaction. Datadog offers the following session-level evaluations:

- Goal Completeness - Evaluates whether the user’s intended goal was successfully achieved over the course of the entire session

Agent evaluations

Agent evaluations help ensure your LLM-powered applications are making the right tool calls and successfully resolving user requests. These checks are designed to catch common failure modes when agents interact with external tools, APIs, or workflows. Datadog offers the following agent evaluations:

- Tool selection - Verifies that the tool(s) selected by an agent are correct

- Tool argument correctness - Ensures the arguments provided to a tool by the agent are correct

Further Reading

Additional helpful documentation, links, and articles: