Create a Log Pipeline

Overview

This guide walks Technology Partners through creating a log pipeline for an integration that sends logs to Datadog. A log pipeline is required to process, structure, and enrich logs so that they are as usable as possible.

Best practices

- Use Datadog-supported log endpoints.

  - The integration must use one of the log intake endpoints supported by Datadog.

  - You can also use the HTTP log intake endpoint to send logs to Datadog.

- Ensure compatibility with all Datadog sites.

  - Let users choose the appropriate Datadog site whenever applicable.

  - See Getting Started with Datadog Sites for site-specific details.

  - The Datadog site endpoint for log intake is: `http-intake.logs.`

- Let users add custom tags.

  - Tags must be set as key-value attributes in the JSON body of the log payload.

  - If logs are sent through the API, tags can also be set with the `ddtags=<TAGS>` query parameter.

- Set the source tag of the integration's logs.

  - Set the source tag to the integration name, for example `source: okta`.

  - The source tag must:

    - Use only lowercase characters

    - Not be editable by the user (it is used in pipelines and dashboards)

- Do not send logs that contain arrays in the JSON body.

  - Although arrays are supported, they cannot be used as facets, which limits filtering options.

- Protect Datadog API keys and application keys.

  - Datadog API keys must never appear in logs. Pass them only in the request header or in the HTTP path.

  - Application keys must not be used to send logs.
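The practices above can be sketched as a small request builder. This is a minimal illustration, not Datadog's reference client: the `/api/v2/logs` path, the `DD-API-KEY` header, and the `ddsource` and `ddtags` field names are assumptions based on the HTTP log intake API and should be checked against the API reference. The function `build_log_request`, the `datadoghq.com` site, and the sample tags are hypothetical. The code only builds the request; it does not send anything.

```python
import json


def build_log_request(site, api_key, source, message, user_tags=None):
    """Build (url, headers, body) for one log event without sending it."""
    # The source tag identifies the integration and must be lowercase.
    if source != source.lower():
        raise ValueError("the source tag must be lowercase")

    # Assumed URL layout: the site chosen by the user completes the host name.
    url = f"https://http-intake.logs.{site}/api/v2/logs"

    # The API key travels in a request header, never in the log body.
    headers = {"Content-Type": "application/json", "DD-API-KEY": api_key}

    # ddsource is fixed by the integration; users only contribute custom tags.
    event = {"ddsource": source, "message": message}
    if user_tags:
        event["ddtags"] = ",".join(
            f"{key}:{value}" for key, value in sorted(user_tags.items())
        )

    # The payload holds flat key-value attributes; no arrays inside the event.
    body = json.dumps([event])
    return url, headers, body


url, headers, body = build_log_request(
    site="datadoghq.com",
    api_key="<DATADOG_API_KEY>",
    source="okta",
    message="user login succeeded",
    user_tags={"env": "staging", "team": "identity"},
)
print(url)
print(body)
```

Keeping the source as a fixed argument of the integration code, rather than a user setting, is what makes it safe to reference in pipelines and dashboards.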

Create log integration assets

Log integration assets consist of:

- Pipelines: process and structure logs.

- Facets: attributes used to filter and search logs. Integrations built by Technology Partners must follow Datadog's standard naming convention to remain compatible with out-of-the-box dashboards.

To be reviewed by Datadog's integrations team, a log integration must include assets and have pipeline processors or facets.

Pipelines overview

Logs sent to Datadog go through log pipelines whose processors parse, remap, and extract attributes, which enriches the logs and standardizes them across the platform.

Create a pipeline

- Go to the Pipelines page and select New Pipeline.

- In the Filter field, enter the unique source tag of the logs. For example, `source:okta` for the Okta integration.

- [Optional] Add tags and a description for clarity.

- Click Create.

Important: make sure that logs sent through the integration are tagged before they are ingested.

Add pipeline processors

- Review the Datadog standard attributes for best practices on structuring logs.

  Standard attributes are reserved attributes that apply across the whole platform.

- Click Add Processor and choose from the following options:

  - Attribute Remapper: maps custom log attributes to Datadog standard attributes.

  - Service Remapper: ensures logs are associated with the correct service name.

  - Date Remapper: assigns the correct timestamp to logs.

  - Status Remapper: maps log statuses to Datadog standard attributes.

  - Message Remapper: assigns logs to the correct message attribute.

- If the logs are not in JSON format, use a Grok parser processor to extract attributes. Grok processors extract attributes and enrich logs before remapping or any other processing.

For more advanced processing, you can use:

- Arithmetic Processor: performs calculations on log attributes.

- String Builder Processor: combines multiple string attributes into a single string.

Tips

- Use `preserveSource:false` to remove the source attributes after remapping, which limits conflicts and duplicates.

- To optimize Grok parsing, avoid global or wildcard matchers.

Use processors in your pipelines to enrich and restructure your data and to generate log attributes. For the full list of log processors, see the Processors documentation.
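For orientation, a pipeline with the processors listed above could be described as structured data. The sketch below builds such a definition as a Python dictionary. The field names (`filter.query`, `type`, `sources`, `target`, `preserve_source`, and so on) are assumptions modeled on the Datadog Logs Pipelines API and should be verified against the API reference. The attribute names `client_ip`, `app`, `ts`, `level`, and `msg` are hypothetical raw attributes of an imaginary integration.

```python
import json


def build_pipeline(source):
    """Return a pipeline definition for logs tagged with the given source."""
    return {
        "name": f"{source} pipeline",
        "is_enabled": True,
        # The filter selects logs by the integration's unique source tag.
        "filter": {"query": f"source:{source}"},
        "processors": [
            {
                "type": "attribute-remapper",
                "name": "Map client_ip to network.client.ip",
                "sources": ["client_ip"],
                "source_type": "attribute",
                "target": "network.client.ip",
                "target_type": "attribute",
                # Drop the original attribute to avoid duplicates.
                "preserve_source": False,
                "override_on_conflict": False,
            },
            {"type": "service-remapper", "name": "Set service", "sources": ["app"]},
            {"type": "date-remapper", "name": "Set timestamp", "sources": ["ts"]},
            {"type": "status-remapper", "name": "Set status", "sources": ["level"]},
            {"type": "message-remapper", "name": "Set message", "sources": ["msg"]},
        ],
    }


pipeline = build_pipeline("okta")
print(json.dumps(pipeline, indent=2))
```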

Requirements

- Map the application's log attributes to Datadog standard attributes.

  Use the Attribute Remapper to map attribute keys to Datadog standard attributes wherever possible. For example, an attribute that holds the client IP address of a network service must be remapped to `network.client.ip`.

- Map the log's `service` tag to the name of the service that produces the telemetry.

  Use the Service Remapper to remap the `service` attribute. When the source and the service share the same value, remap the `service` tag to the `source` tag. `service` tags must be lowercase.

- Map the log's internal timestamp to the official Datadog timestamp.

  Use the Date Remapper to define the official timestamp of the logs. If a log's timestamp does not map to a standard date attribute, Datadog uses the time of ingestion.

- Map the log's custom status attributes to the official Datadog `status` attribute.

  Use a Status Remapper to remap the status field, or a Category Processor if statuses are grouped into ranges (as with HTTP status codes).

- Map the log's custom message attribute to the official Datadog `message` attribute.

  Use the Message Remapper to define the official message field when it differs from the default field, so that users can run free-text searches.

- Assign a namespace to custom attributes in the logs.

  Generic attributes that do not map to a Datadog standard attribute must be namespaced if they are mapped to facets. For example, `file` becomes `integration_name.file`.

  Use the Attribute Remapper to set the namespaced keys.
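The effect of these requirements on a single log can be simulated locally. The sketch below is a plain-Python illustration of what remapping, namespacing, and status grouping do to a log. It is not how Datadog implements its processors. The helper names, the `my_integration` namespace, the sample attributes, and the mapping from HTTP codes to statuses are all hypothetical.

```python
def remap_attribute(log, source_key, target_key, preserve_source=False):
    """Copy log[source_key] to target_key; drop the original unless preserved."""
    result = dict(log)
    if source_key in result:
        result[target_key] = result[source_key]
        if not preserve_source and target_key != source_key:
            del result[source_key]
    return result


def http_status_category(code):
    """Group an HTTP status code into a coarse log status (illustrative ranges)."""
    if code >= 500:
        return "error"
    if code >= 400:
        return "warn"
    return "info"


raw = {"client_ip": "203.0.113.7", "file": "auth.py", "http_code": 503}

# Standard attribute: the client IP moves to network.client.ip.
log = remap_attribute(raw, "client_ip", "network.client.ip")
# Generic attribute: "file" is namespaced with the integration name.
log = remap_attribute(log, "file", "my_integration.file")
# Grouped statuses: a range of codes maps to one status value.
log["status"] = http_status_category(log["http_code"])

print(log)
```

With `preserve_source=False` the original keys disappear, so only one copy of each value remains to define facets on.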

- Expand the newly created pipeline and click Add Processor to start adding processors.

- If the integration's logs are not in JSON format, add the Grok processor to extract attribute information. Grok processors parse out attributes and enrich logs before remapping or any other processing.

- After extracting the attributes, remap them to Datadog standard attributes with Attribute Remappers.

- Set the official Datadog timestamp of the integration's logs with the Date Remapper.

- For advanced processing and data transformations, use additional processors.

  For example, the `Arithmetic Processor` can perform calculations, and the `String Builder Processor` can combine multiple attributes into a single string.

Tips

- Use `preserveSource:false` to remove the source attributes after remapping, which limits conflicts and duplicates.

- For better Grok parsing performance, avoid broad matchers such as `%{data:}` or `%{regex(".*"):}`. Prefer targeted expressions.

- Take the free course Going Deeper with Logs Processing to learn how to write processors and make use of standard attributes.
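The difference between broad and targeted matchers can be illustrated with ordinary regular expressions. The sketch below uses Python's `re` module as an analogy only: it is not Grok syntax, and the log line format (IP, HTTP method, status code) is a hypothetical example.

```python
import re

# Broad pattern: every field is ".*", comparable to a catch-all matcher.
broad = re.compile(r"^(?P<ip>.*) (?P<method>.*) (?P<code>.*)$")

# Targeted pattern: each field states exactly what it expects.
targeted = re.compile(
    r"^(?P<ip>\d{1,3}(?:\.\d{1,3}){3}) (?P<method>[A-Z]+) (?P<code>\d{3})$"
)

well_formed = "203.0.113.7 GET 200"
unrelated = "connection reset by peer"

# Both patterns extract the same fields from a well-formed line.
print(targeted.match(well_formed).groupdict())

# The broad pattern also "parses" an unrelated line into meaningless fields,
# after backtracking over every possible split; the targeted one rejects it.
print(broad.match(unrelated).groupdict())
print(targeted.match(unrelated))
```

Targeted expressions fail fast on lines that do not fit, which avoids both the backtracking cost and the wrongly populated attributes.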

Facets overview

Facets are specific qualitative or quantitative attributes used to filter and narrow down search results. Although facets are not strictly required for filtering, they play a key role in helping users understand which search dimensions are available.

Facets based on standard attributes are added automatically by Datadog when a pipeline is published. Check whether the attribute should be remapped to a Datadog standard attribute.

Not every attribute is meant to be used as a facet. The need for facets in integrations centers on two things:

- Facets provide a simple interface for filtering logs. They power the autocomplete features in Log Management, which lets users find and aggregate the key information in their logs.

- Facets make it possible to give a more readable name to attributes that are hard to interpret. For example, `@deviceCPUper` becomes `Device CPU Utilization Percentage`.

Facets can be created in the Log Explorer.

Create facets

Well-defined facets make indexed logs more useful across the analytics, monitoring, and aggregation features of Datadog's Log Management product.

They make application logs easier to find by populating the autocomplete features in Log Management.

Quantitative facets, called "Measures", let users filter logs over a range of numeric values using relational operators. For example, a measure on a latency attribute lets users search for all logs whose duration exceeds a given threshold.
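As a rough local analogy, filtering on a measure amounts to a numeric comparison on one attribute. The sketch below is plain Python, not Datadog search syntax, and the attribute name `my_integration.latency_ms` and the sample logs are hypothetical.

```python
def filter_by_measure(logs, attribute, minimum):
    """Keep logs whose numeric attribute is strictly greater than minimum."""
    return [
        log
        for log in logs
        # Non-numeric or missing values cannot be compared as a measure.
        if isinstance(log.get(attribute), (int, float)) and log[attribute] > minimum
    ]


logs = [
    {"message": "fast request", "my_integration.latency_ms": 30},
    {"message": "slow request", "my_integration.latency_ms": 950},
    {"message": "no latency recorded"},
    {"message": "latency sent as text", "my_integration.latency_ms": "950"},
]

slow = filter_by_measure(logs, "my_integration.latency_ms", 500)
print(slow)
```

The last sample log shows why the attribute must be numeric: a value sent as a string cannot take part in a range comparison.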

Requirements

- Attributes mapped to custom facets must first be namespaced.

  Generic custom attributes that do not map to a Datadog standard attribute must be namespaced when they are used with custom facets. An Attribute Remapper can be used to add a namespace that matches the integration name.

  For example, `attribute_name` becomes `integration_name.attribute_name`.

- Custom facets must not duplicate an existing Datadog facet.

  To avoid duplicating existing Datadog facets, do not create custom facets for attributes that are already mapped to Datadog standard attributes.

- Custom facets must be grouped under the source name.

  When you create a custom facet, you must assign it a group. Set the Group field to the `source` value, which is the same as the integration name.

- Custom facets must have the same data type as the mapped attribute.

  Set the facet's data type (String, Boolean, Double, or Integer) to match the type of the mapped attribute. A mismatched type prevents the facet from working as intended and can cause it to display incorrectly.
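These four requirements lend themselves to a quick self-check before submission. The sketch below is a hypothetical local validator, not a Datadog tool: the facet dictionary shape (`path`, `group`, `type`), the function `check_facet`, and the sample data are all assumptions made for illustration.

```python
FACET_TYPES = {"String": str, "Boolean": bool, "Double": float, "Integer": int}


def check_facet(facet, sample_log, source, standard_attributes):
    """Return a list of problems found in a custom facet definition."""
    problems = []
    path = facet["path"]

    if path in standard_attributes:
        problems.append("duplicates a standard attribute")
    elif not path.startswith(source + "."):
        problems.append("attribute is not namespaced with the integration name")

    if facet["group"] != source:
        problems.append("group must equal the source tag")

    value = sample_log.get(path)
    expected = FACET_TYPES[facet["type"]]
    # bool is a subclass of int in Python, so compare exact types.
    if value is not None and type(value) is not expected:
        problems.append("facet type does not match the attribute value")

    return problems


sample = {"okta.session_count": 3, "network.client.ip": "203.0.113.7"}
standard = {"network.client.ip"}

good = {"path": "okta.session_count", "group": "okta", "type": "Integer"}
bad = {"path": "okta.session_count", "group": "Okta", "type": "String"}

print(check_facet(good, sample, "okta", standard))
print(check_facet(bad, sample, "okta", standard))
```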

Add a facet or measure

- Click a log that contains the attribute you want to add a facet or measure for.

- In the log panel, click the cog icon next to the attribute.

- Select Create facet/measure for @attribute.

- For a measure, click Advanced options to set the unit. Choose the unit based on what the attribute represents.

- Specify a facet group to make the facet list easier to navigate. If the group does not exist yet, select New group, enter a name identical to the source tag, and add a description.

- To create the facet, click Add.

Configure and edit facets

- In the log panel, click the cog icon next to the attribute you want to configure or group.

- Select Edit facet/measure for @attribute. If there is no facet for the attribute yet, select Create facet/measure for @attribute.

- Click Add or Update when you are done.

Tips

- Assign a unit to measures wherever it is relevant. The available families are `TIME` and `BYTES`, with units such as `millisecond` or `gibibyte`.

- Facets can have a description. A clear description helps users understand how best to use the facet.

- If you remap an attribute and keep the original with the `preserveSource:true` option, define a facet on only one of the two.

- When you configure facets manually in a pipeline's `.yaml` files, note that each facet is assigned a `source`. This indicates where the attribute comes from, such as `log` for attributes or `tag` for tags.

Export your log pipeline

Hover over the pipeline you want to export and select export pipeline.

Exporting a log pipeline produces two YAML files:

- pipeline-name.yaml: the log pipeline, including custom facets, Attribute Remappers, and Grok parsers.

- pipeline_name_test.yaml: the raw sample logs provided and an empty result section.

Note: depending on your browser, you may need to adjust your settings to allow file downloads.

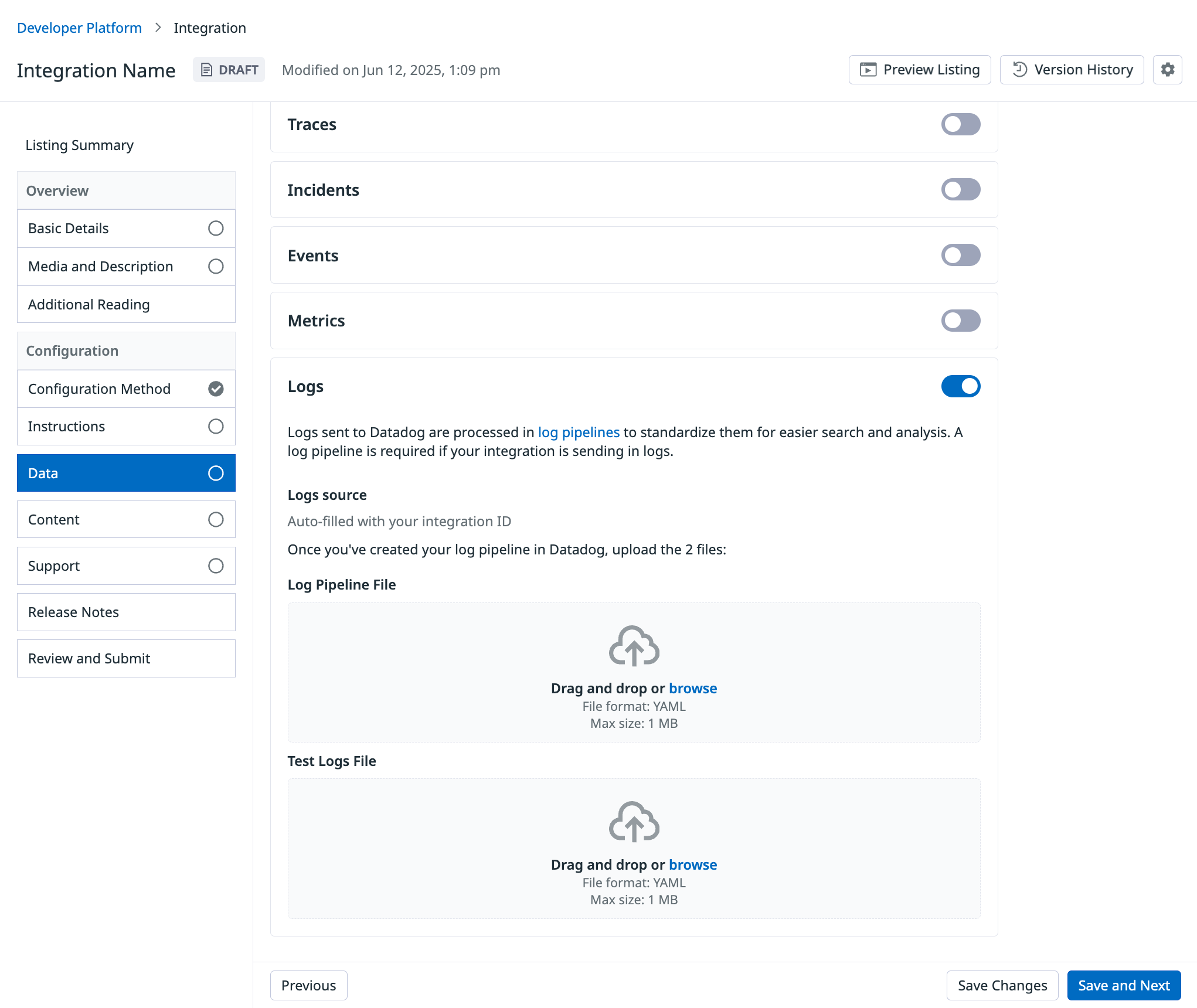

Upload your log pipeline

Go to the Integration Developer Platform, then in the Data > Submitted logs tab, specify the log source and upload the two files exported in the previous step.
