
Enable Data Jobs Monitoring for Spark on Amazon EMR


Data Jobs Monitoring gives visibility into the performance and reliability of Apache Spark applications on Amazon EMR.

If you are using EMR on EKS, follow the instructions for setting up Data Jobs Monitoring on Kubernetes instead.

Requirements

Amazon EMR Release 6.0.1 or later is required.

Setup

Follow these steps to enable Data Jobs Monitoring for Amazon EMR.

- Store your Datadog API key in AWS Secrets Manager (Recommended).

- Grant permissions to EMR EC2 instance profile.

- Create and configure your EMR cluster.

- Specify service tagging per Spark application.

Store your Datadog API key in AWS Secrets Manager (Recommended)

- Take note of your Datadog API key.

- In AWS Secrets Manager, choose Store a new secret.

- Under Secret type, select Other type of secret.

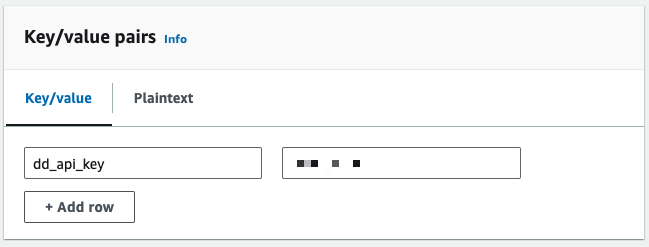

- Under Key/value pairs, add your Datadog API key as a key-value pair, where the key is dd_api_key. Then, click Next.

- On the Configure secret page, enter a Secret name. You can use datadog/dd_api_key, which is the secret name the init script below assumes. Then, click Next.

- On the Configure rotation page, you can optionally turn on automatic rotation. Then, click Next.

- On the Review page, review your secret details. Then, click Store.

- In AWS Secrets Manager, open the secret you created. Take note of the Secret ARN.
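If you prefer the command line, the console steps above can be approximated with the AWS CLI. The following is a minimal sketch, assuming AWS CLI v2 is installed and configured with permission to call secretsmanager:CreateSecret; the helper names dd_secret_payload and store_dd_secret are hypothetical, and the default secret name matches the one the init script assumes.

```shell
#!/usr/bin/env bash
# Sketch only: these helper names are hypothetical, not part of any Datadog tooling.

# Build the key/value secret payload. The key must be dd_api_key to match the init script.
dd_secret_payload() {
  printf '{"dd_api_key":"%s"}' "$1"
}

# Create the secret and print its ARN (assumes a configured AWS CLI).
# Usage: store_dd_secret <DATADOG_API_KEY> [SECRET_NAME]
store_dd_secret() {
  local api_key="$1"
  local secret_name="${2:-datadog/dd_api_key}"
  aws secretsmanager create-secret \
    --name "$secret_name" \
    --secret-string "$(dd_secret_payload "$api_key")" \
    --query ARN \
    --output text
}
```

For example, store_dd_secret "<YOUR_DATADOG_API_KEY>" prints the Secret ARN to note for the next step. Passing the key as an argument can leave it in your shell history.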

Grant permissions to EMR EC2 instance profile

The EMR EC2 instance profile is an IAM role assigned to every EC2 instance in an Amazon EMR cluster when the instance launches. Follow the Amazon EMR documentation to prepare this role based on your application’s need to interact with other AWS services. Data Jobs Monitoring may require the following additional permissions.

Permissions to get secret value using AWS Secrets Manager

These permissions are required if you are using AWS Secrets Manager.

- In your AWS IAM console, click on Access management > Roles in the left navigation bar.

- Click on the IAM role you plan to use as the instance profile for your EMR cluster.

- On the next page, under the Permissions tab, find the Permissions policies section. Click on Add permissions > Create inline policy.

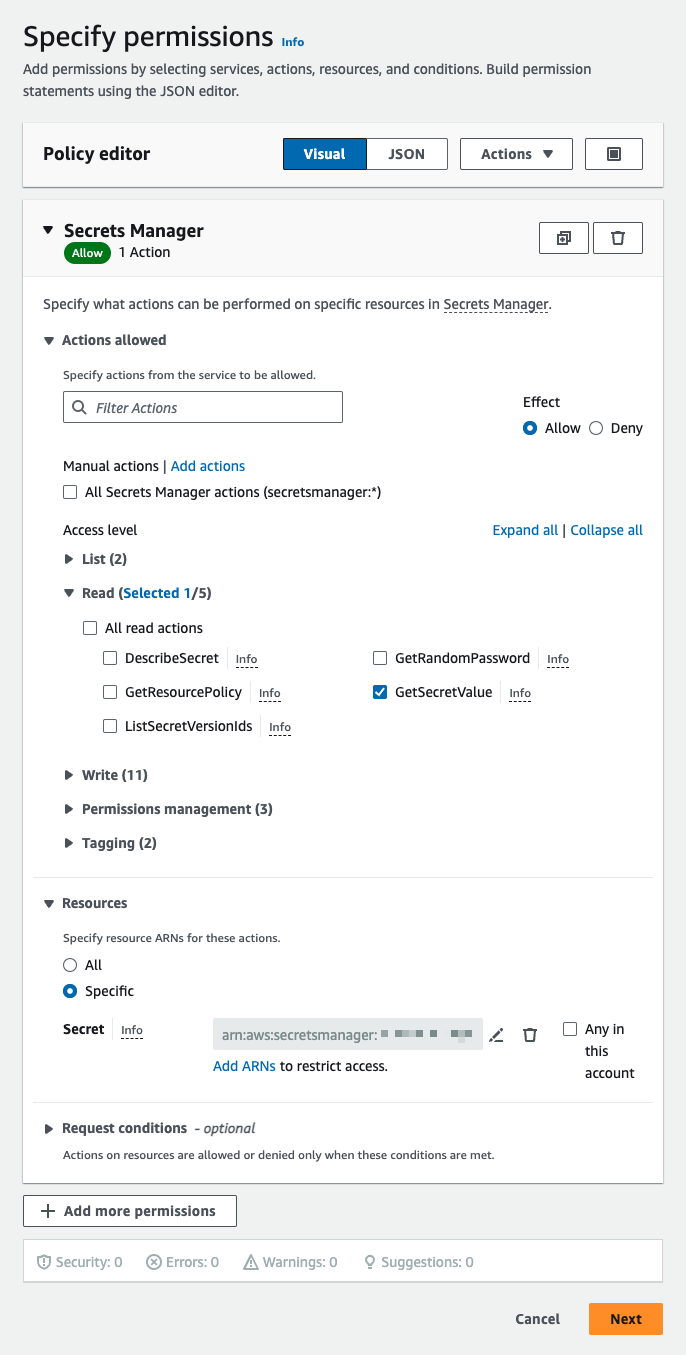

- On the Specify permissions page, find the Select a service section. Under Service, select Secrets Manager.

- Then, under Actions allowed, select GetSecretValue. This is a Read action.

- Under Resources, select Specific. Then, next to Secret, click Add ARNs and add the ARN of the secret you created in the first step on this page.

- Click Next.

- On the next page, give your policy a name. Then, click Create policy.
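For reference, the inline policy created by these steps is roughly equivalent to the document below, which grants read access to a single secret. This sketch, assuming a configured AWS CLI, prints the policy and attaches it with aws iam put-role-policy; the helper names and the default policy name datadog-djm-get-secret are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: hypothetical helpers mirroring the console steps for the GetSecretValue inline policy.

# Print an IAM policy document that allows reading one secret.
# Usage: dd_secret_policy <SECRET_ARN>
dd_secret_policy() {
  cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": "secretsmanager:GetSecretValue",
      "Resource": "$1"
    }
  ]
}
EOF
}

# Attach the policy inline to the instance profile role (assumes a configured AWS CLI).
# Usage: attach_dd_secret_policy <ROLE_NAME> <SECRET_ARN> [POLICY_NAME]
attach_dd_secret_policy() {
  aws iam put-role-policy \
    --role-name "$1" \
    --policy-name "${3:-datadog-djm-get-secret}" \
    --policy-document "$(dd_secret_policy "$2")"
}
```

Scoping Resource to the one secret ARN, rather than "*", keeps the instance profile from reading other secrets in the account.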

Permissions to describe cluster

These permissions are required if you are NOT using the default role, EMR_EC2_DefaultRole.

- In your AWS IAM console, click on Access management > Roles in the left navigation bar.

- Click on the IAM role you plan to use as the instance profile for your EMR cluster.

- On the next page, under the Permissions tab, find the Permissions policies section. Click on Add permissions > Create inline policy.

- On the Specify permissions page, switch to the JSON tab.

- Then, copy and paste the following policy into the Policy editor:

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "elasticmapreduce:ListBootstrapActions",
        "elasticmapreduce:ListInstanceFleets",
        "elasticmapreduce:DescribeCluster",
        "elasticmapreduce:ListInstanceGroups"
      ],
      "Resource": [
        "*"
      ]
    }
  ]
}

- Click Next.

- On the next page, give your policy a name. Then, click Create policy.

Take note of the name of the IAM role you plan to use as the instance profile for your EMR cluster.

Create and configure your EMR cluster

When you create a new EMR cluster in the Amazon EMR console, add a bootstrap action on the Create Cluster page:

Save the following script to an S3 bucket that your EMR cluster can read. Take note of the path to this script.

#!/bin/bash

# Set required parameter DD_SITE to your Datadog site
export DD_SITE=

# Set required parameter DD_API_KEY with Datadog API key.
# The commands below assume the API key is stored in AWS Secrets Manager, with the secret name as datadog/dd_api_key and the key as dd_api_key.
# IMPORTANT: Modify if you choose to manage and retrieve your secret differently.
SECRET_NAME=datadog/dd_api_key
export DD_API_KEY=$(aws secretsmanager get-secret-value --secret-id "$SECRET_NAME" | jq -r .SecretString | jq -r '.["dd_api_key"]')

# Optional: uncomment to send Spark driver and worker logs to Datadog
# export DD_EMR_LOGS_ENABLED=true

# Download and run the latest init script
curl -L https://install.datadoghq.com/scripts/install-emr.sh > djm-install-script; bash djm-install-script || true

The script above sets the required parameters, then downloads and runs the latest init script for Data Jobs Monitoring in EMR. To pin your script to a specific version, replace the filename in the URL with a versioned one; for example, use install-emr-0.13.5.sh to use version 0.13.5. The source code used to generate this script, and the changes between script versions, can be found in the Datadog Agent repository.

Optionally, the script can be configured by adding the following environment variables:

| Variable | Description | Default |
|---|---|---|
| DD_TAGS | Add tags to EMR cluster and Spark performance metrics. Comma- or space-separated key:value pairs. Follow Datadog tag conventions. Example: env:staging,team:data_engineering | |
| DD_ENV | Set the env environment tag on metrics, traces, and logs from this cluster. | |
| DD_EMR_LOGS_ENABLED | Send Spark driver and worker logs to Datadog. | false |
| DD_LOGS_CONFIG_PROCESSING_RULES | Filter the logs collected with processing rules. See Advanced Log Collection for more details. | |
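As an illustration, the optional variables could be exported in the init script before the line that downloads the install script. The values below are placeholders, and the processing rule is an assumed example that uses the Agent's exclude_at_match rule type to drop matching log lines; check Advanced Log Collection for the rule format before relying on it.

```shell
#!/usr/bin/env bash
# Illustrative values only: replace them with your own tags, environment, and rules.
export DD_TAGS="team:data_engineering"
export DD_ENV="staging"
export DD_EMR_LOGS_ENABLED=true
# Assumed example rule: drop any log line that contains "healthcheck".
export DD_LOGS_CONFIG_PROCESSING_RULES='[{"type": "exclude_at_match", "name": "exclude_healthchecks", "pattern": "healthcheck"}]'
```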

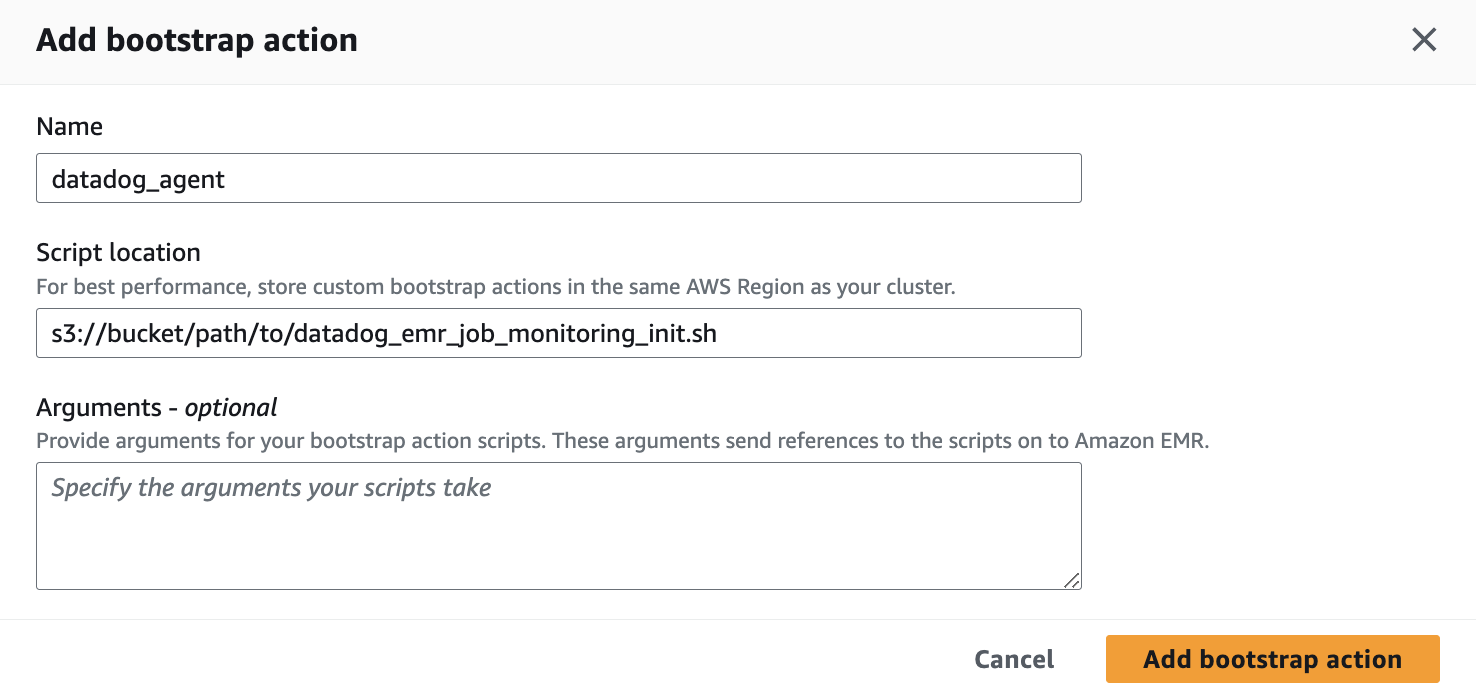

On the Create Cluster page, find the Bootstrap actions section. Click Add to bring up the Add bootstrap action dialog.

- For Name, give your bootstrap action a name. You can use datadog_agent.

- For Script location, enter the path to where you stored the init script in S3.

- Click Add bootstrap action.

On the Create Cluster page, find the Identity and Access Management (IAM) roles section. For the Instance profile dropdown, select the IAM role you granted permissions to in Grant permissions to EMR EC2 instance profile.

When your cluster is created, this bootstrap action installs the Datadog Agent and downloads the Java tracer on each node of the cluster.
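If you create clusters from the command line instead of the console, a roughly equivalent sketch with aws emr create-cluster follows. It assumes a configured AWS CLI and an existing default EMR service role (EMR_DefaultRole); the cluster name, instance type, instance count, and helper names are hypothetical placeholders, and the release label you pass must meet the Amazon EMR release requirement above.

```shell
#!/usr/bin/env bash
# Sketch: create an EMR cluster with the Datadog bootstrap action from the AWS CLI.
# Names, sizes, and helpers are placeholders (assumptions), not recommendations.

# Build the --bootstrap-actions value for the init script stored in S3.
# Usage: dd_bootstrap_action <S3_SCRIPT_PATH> [ACTION_NAME]
dd_bootstrap_action() {
  printf 'Path=%s,Name=%s' "$1" "${2:-datadog_agent}"
}

# Usage: create_djm_cluster <S3_SCRIPT_PATH> <INSTANCE_PROFILE_NAME> <RELEASE_LABEL>
create_djm_cluster() {
  aws emr create-cluster \
    --name "djm-example-cluster" \
    --release-label "$3" \
    --applications Name=Spark \
    --instance-type m5.xlarge \
    --instance-count 3 \
    --service-role EMR_DefaultRole \
    --ec2-attributes InstanceProfile="$2" \
    --bootstrap-actions "$(dd_bootstrap_action "$1")"
}
```

For example, upload the script with aws s3 cp djm-init.sh s3://<BUCKET>/<PREFIX>/djm-init.sh, then run create_djm_cluster s3://<BUCKET>/<PREFIX>/djm-init.sh <INSTANCE_PROFILE_NAME> <RELEASE_LABEL>. Roles created for EC2 in the IAM console usually come with an instance profile of the same name.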

Specify service tagging per Spark application

Tagging enables you to better filter, aggregate, and compare your telemetry in Datadog. You can configure tags by passing -Ddd.service, -Ddd.env, -Ddd.version, and -Ddd.tags options to your Spark driver and executor extraJavaOptions properties.

In Datadog, each job’s name corresponds to the value you set for -Ddd.service.

spark-submit \
  --conf spark.driver.extraJavaOptions="-Ddd.service=<JOB_NAME> -Ddd.env=<ENV> -Ddd.version=<VERSION> -Ddd.tags=<KEY_1>:<VALUE_1>,<KEY_2>:<VALUE_2>" \
  --conf spark.executor.extraJavaOptions="-Ddd.service=<JOB_NAME> -Ddd.env=<ENV> -Ddd.version=<VERSION> -Ddd.tags=<KEY_1>:<VALUE_1>,<KEY_2>:<VALUE_2>" \
  application.jar
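Because the driver and executor options are identical, one way to keep them from drifting apart is to build the string once and reuse it. A minimal sketch; the helper name dd_java_opts and the example values are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: build the Datadog -D options once for both the driver and the executors.
# Usage: dd_java_opts <SERVICE> <ENV> <VERSION> [TAGS]
dd_java_opts() {
  local opts="-Ddd.service=$1 -Ddd.env=$2 -Ddd.version=$3"
  # Only add -Ddd.tags when tags were supplied.
  if [ -n "${4:-}" ]; then
    opts="$opts -Ddd.tags=$4"
  fi
  printf '%s' "$opts"
}

# Example invocation (placeholder values):
# DD_OPTS="$(dd_java_opts my-etl-job staging 1.2.0 team:data_engineering)"
# spark-submit \
#   --conf spark.driver.extraJavaOptions="$DD_OPTS" \
#   --conf spark.executor.extraJavaOptions="$DD_OPTS" \
#   application.jar
```

Setting extraJavaOptions this way replaces any value already configured for the job, so if your job already passes JVM options, append the Datadog options to the existing string instead.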

Validation

In Datadog, view the Data Jobs Monitoring page to see a list of all your data processing jobs.

Troubleshooting

If you don’t see any data in DJM after installing the product, follow these steps.

The init script installs the Datadog Agent. To make sure it is properly installed, SSH into a node of the cluster and run the Agent status command:

sudo datadog-agent status
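To check every node rather than one, a sketch like the following lists the running instances with the AWS CLI and runs the status command on each over SSH. It assumes a configured AWS CLI, network access to the nodes' private DNS names, and the key pair the cluster was launched with; the helper name is hypothetical, and hadoop is the default SSH user on EMR nodes.

```shell
#!/usr/bin/env bash
# Sketch: run the Agent status command on every running node of a cluster.
# Usage: check_agent_on_nodes <CLUSTER_ID> <PATH_TO_PRIVATE_KEY>
check_agent_on_nodes() {
  local cluster_id="$1" key_file="$2" hosts host
  # Private DNS names of running instances, tab-separated by --output text.
  hosts="$(aws emr list-instances \
    --cluster-id "$cluster_id" \
    --instance-states RUNNING \
    --query 'Instances[].PrivateDnsName' \
    --output text)"
  for host in $hosts; do
    echo "=== $host ==="
    ssh -i "$key_file" "hadoop@$host" 'sudo datadog-agent status' \
      || echo "Agent status failed on $host" >&2
  done
}
```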

Advanced Configuration

Tag spans at runtime

You can set tags on Spark spans at runtime. These tags are applied only to spans that start after the tag is added.

// Add tag for all subsequent Spark computations
sparkContext.setLocalProperty("spark.datadog.tags.key", "value")
spark.read.parquet(...)

To remove a runtime tag:

// Remove tag for all subsequent Spark computations
sparkContext.setLocalProperty("spark.datadog.tags.key", null)
