
Enable Data Jobs Monitoring for Databricks


Data Jobs Monitoring gives visibility into the performance and reliability of your Apache Spark and Databricks jobs.

Join the Preview: Databricks Serverless Job Monitoring

Databricks Serverless Job Monitoring helps you detect issues with jobs running on Serverless or SQL Warehouse compute. Complete the form to request access.

Setup

Databricks Networking Restrictions can block some Datadog functions. Add the following Datadog IP ranges to your allow-list: webhook IPs, API IPs.

Follow these steps to enable Data Jobs Monitoring for Databricks.

- Configure the Datadog-Databricks integration for a Databricks workspace.

- Install the Datadog Agent on your Databricks cluster(s) in the workspace.

Configure the Datadog-Databricks integration

New workspaces must authenticate using OAuth. Workspaces integrated with a Personal Access Token continue to function and can switch to OAuth at any time. After a workspace starts using OAuth, it cannot revert to a Personal Access Token.

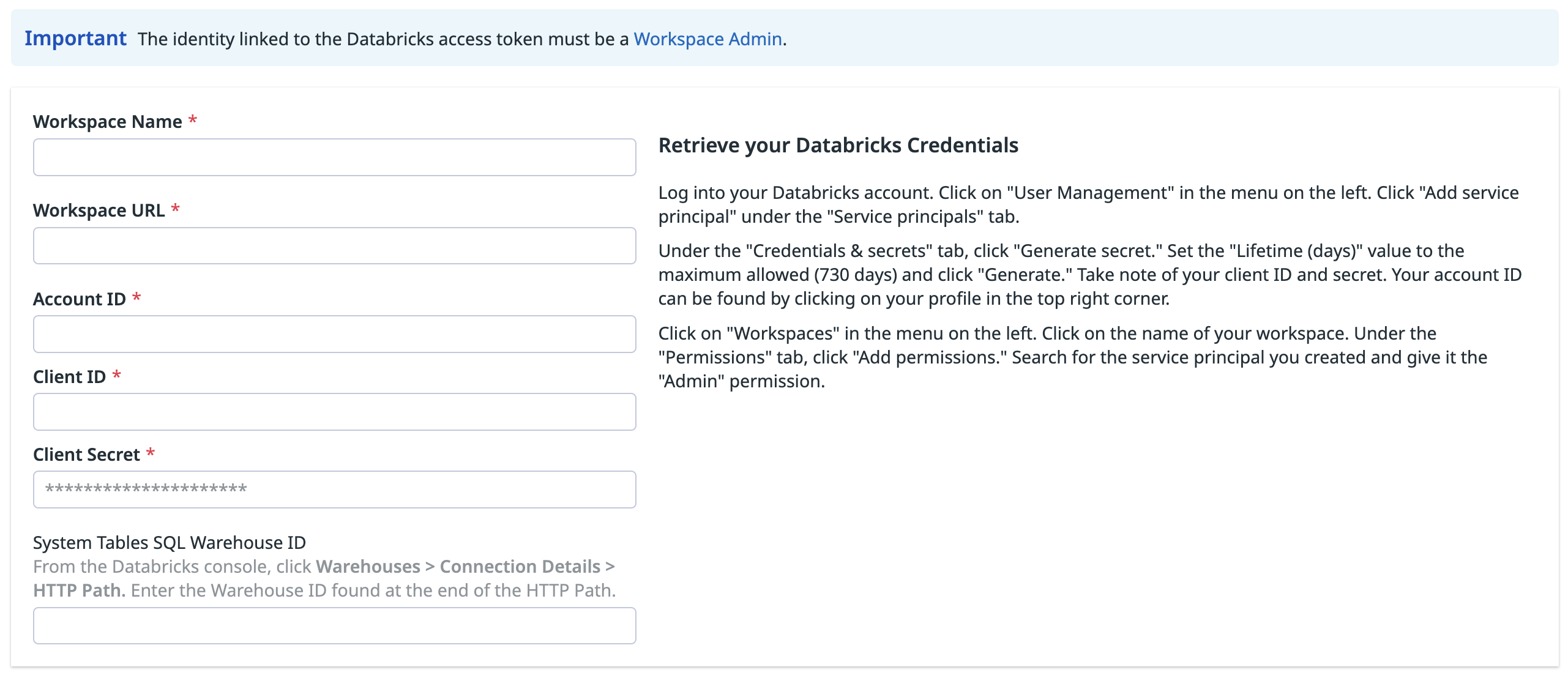

In your Databricks account, click User Management in the left menu. Then, on the Service principals tab, click Add service principal.

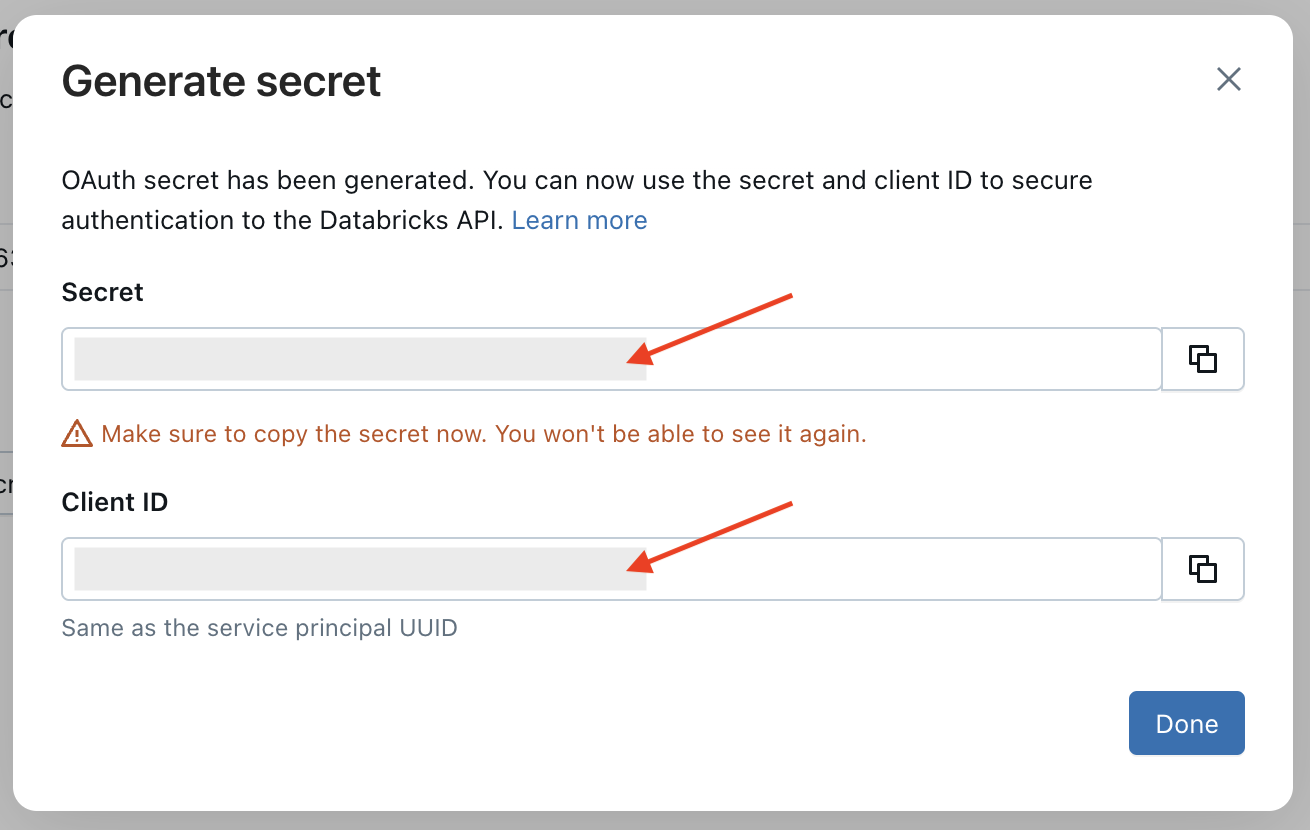

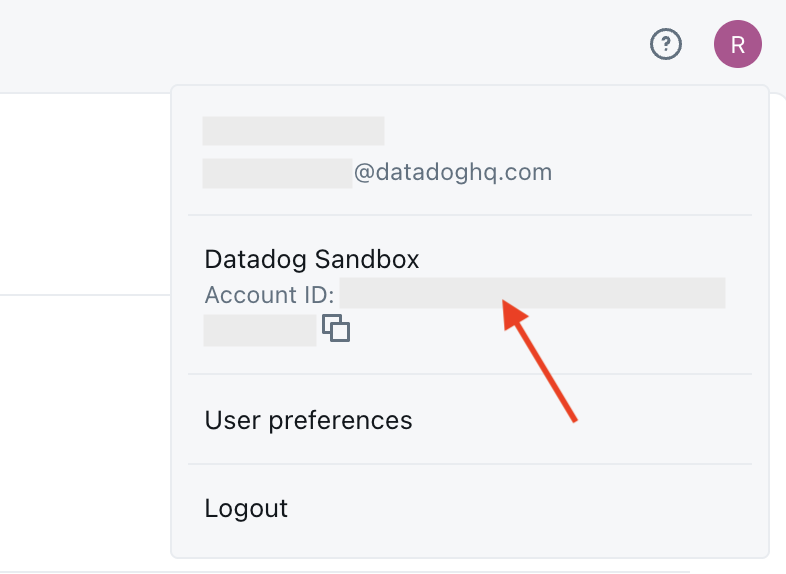

Under the Credentials & secrets tab, click Generate secret. Set Lifetime (days) to the maximum value allowed (730), then click Generate. Take note of your client ID and client secret. Also take note of your account ID, which can be found by clicking on your profile in the upper-right corner.

Click Workspaces in the left menu, then select the name of your workspace.

Go to the Permissions tab and click Add permissions.

Search for the service principal you created and assign it the Admin permission.

In Datadog, open the Databricks integration tile.

On the Configure tab, click Add Databricks Workspace.

Enter a workspace name, your Databricks workspace URL, account ID, and the client ID and secret you generated.

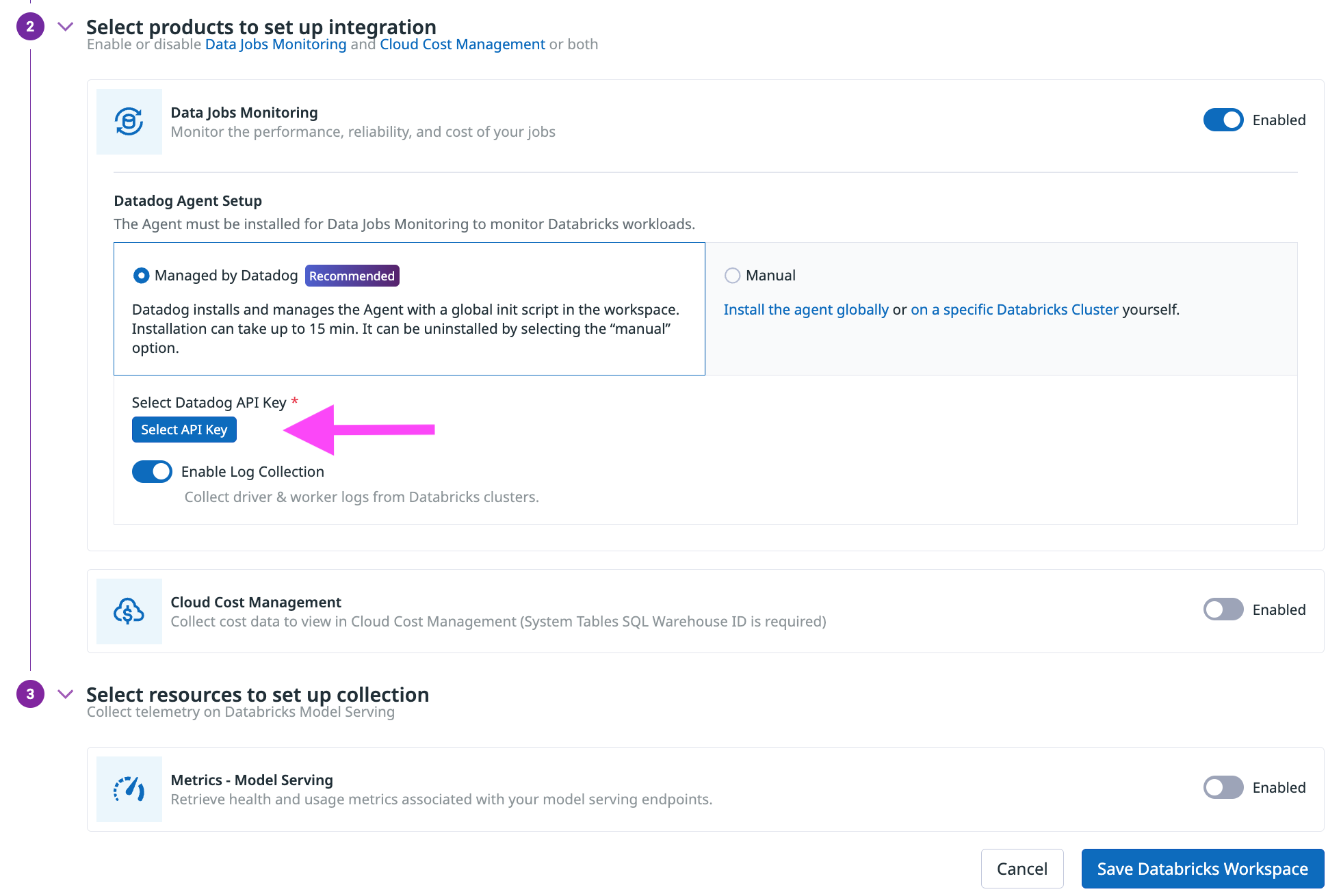

In the Select products to set up integration section, ensure that Data Jobs Monitoring is Enabled.

In the Datadog Agent Setup section, choose one of the following options:

- Managed by Datadog (recommended): Datadog installs and manages the Agent with a global init script in the workspace.

- Manually: Follow the instructions below to install and manage the init script for installing the Agent globally or on specific Databricks clusters.
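Before saving the workspace in Datadog, you may want to confirm that the service principal's client ID and secret work. The following is a minimal sketch, assuming a Bash shell with `curl`, that requests a workspace-level token from the Databricks OAuth machine-to-machine endpoint (`/oidc/v1/token`). The function name `check_databricks_oauth` and the variables `DATABRICKS_HOST`, `CLIENT_ID`, and `CLIENT_SECRET` are hypothetical names chosen for this example.

```shell
#!/bin/bash
# Hypothetical helper: request a workspace-level OAuth token for the service
# principal. A JSON response containing "access_token" indicates that the
# client ID and secret are accepted by this workspace.
# Assumes DATABRICKS_HOST is the workspace URL (https://<workspace-host>).
check_databricks_oauth() {
  if [ -z "${DATABRICKS_HOST:-}" ] || [ -z "${CLIENT_ID:-}" ] || [ -z "${CLIENT_SECRET:-}" ]; then
    echo "DATABRICKS_HOST, CLIENT_ID, and CLIENT_SECRET must be set" >&2
    return 1
  fi
  # --fail makes curl exit non-zero on HTTP errors such as 401.
  curl --silent --show-error --fail \
    --request POST "${DATABRICKS_HOST%/}/oidc/v1/token" \
    --user "${CLIENT_ID}:${CLIENT_SECRET}" \
    --data 'grant_type=client_credentials&scope=all-apis'
}
```

Because of `--fail`, wrong credentials make the function return a non-zero status instead of printing an error body.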

Personal Access Token

This option is only available for workspaces created before July 7, 2025. New workspaces must authenticate using OAuth.

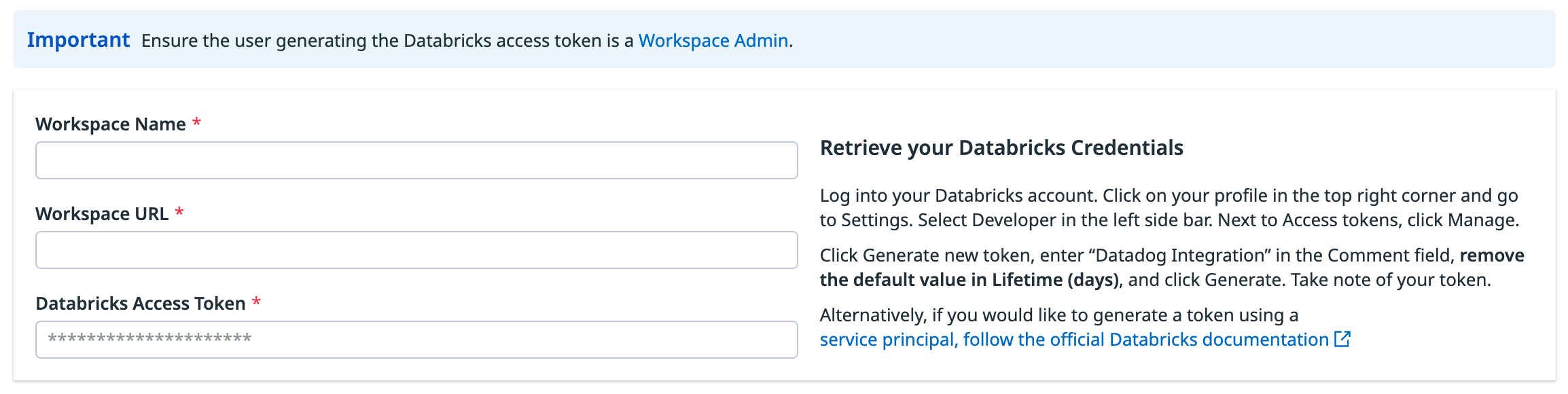

In your Databricks workspace, click your profile in the top-right corner and go to Settings. Select Developer in the left sidebar. Next to Access tokens, click Manage.

Click Generate new token, enter “Datadog Integration” in the Comment field, set the Lifetime (days) value to the maximum allowed (730 days), and create a reminder to update the token before it expires. Then click Generate. Take note of your token.

Important:

- For the Datadog-managed init script installation (recommended), ensure the token’s principal is a Workspace Admin.

- For manual init script installation, ensure the token’s Principal has CAN VIEW access for the Databricks jobs and clusters you want to monitor.

As an alternative, follow the official Databricks documentation to generate an access token for a service principal. The service principal must have the Workspace access entitlement enabled and the Workspace Admin or CAN VIEW access permissions as described above.
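To check that the token authenticates before you enter it in Datadog, you could call the Databricks Clusters API list endpoint (`GET /api/2.0/clusters/list`). This is a minimal sketch, assuming Bash and `curl`; `check_databricks_token`, `DATABRICKS_HOST`, and `DATABRICKS_TOKEN` are hypothetical names. A successful response confirms authentication, and the returned clusters give only a rough view of what the token's principal can see; it does not verify every permission listed above.

```shell
#!/bin/bash
# Hypothetical helper: list the clusters visible to the token's principal.
# An HTTP error (for example 401 or 403) makes curl exit non-zero because of --fail.
check_databricks_token() {
  if [ -z "${DATABRICKS_HOST:-}" ] || [ -z "${DATABRICKS_TOKEN:-}" ]; then
    echo "DATABRICKS_HOST and DATABRICKS_TOKEN must be set" >&2
    return 1
  fi
  curl --silent --show-error --fail \
    --header "Authorization: Bearer ${DATABRICKS_TOKEN}" \
    "${DATABRICKS_HOST%/}/api/2.0/clusters/list"
}
```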

In Datadog, open the Databricks integration tile.

On the Configure tab, click Add Databricks Workspace.

Enter a workspace name, your Databricks workspace URL, and the Databricks token you generated.

In the Select products to set up integration section, make sure the Data Jobs Monitoring product is Enabled.

In the Datadog Agent Setup section, choose one of the following options:

- Managed by Datadog (recommended): Datadog installs and manages the Agent with a global init script in the workspace.

- Manually: Follow the instructions below to install and manage the init script for installing the Agent globally or on specific Databricks clusters.

Install the Datadog Agent

The Datadog Agent must be installed on Databricks clusters to monitor Databricks jobs that run on all-purpose or job clusters.

Datadog managed global init script (recommended)

Datadog can install and manage a global init script in the Databricks workspace. The Datadog Agent is then installed on every cluster in the workspace when the cluster starts.

- This setup does not work on Databricks clusters in Standard (formerly Shared) access mode, because global init scripts cannot be installed on those clusters. For clusters in Standard access mode, follow the instructions in Manually install on a specific cluster instead.

- This install option, in which Datadog installs and manages your Datadog global init script, requires a Databricks Access Token with Workspace Admin permissions. A token with CAN VIEW access does not allow Datadog to manage the global init script of your Databricks account.

When integrating a workspace with Datadog

In the Select products to set up integration section, make sure the Data Jobs Monitoring product is Enabled.

In the Datadog Agent Setup section, select the Managed by Datadog toggle button.

Click Select API Key to either select an existing Datadog API key or create a new Datadog API key.

(Optional) Turn off Enable Log Collection if you do not want to collect driver and worker logs for correlation with jobs.

Click Save Databricks Workspace.

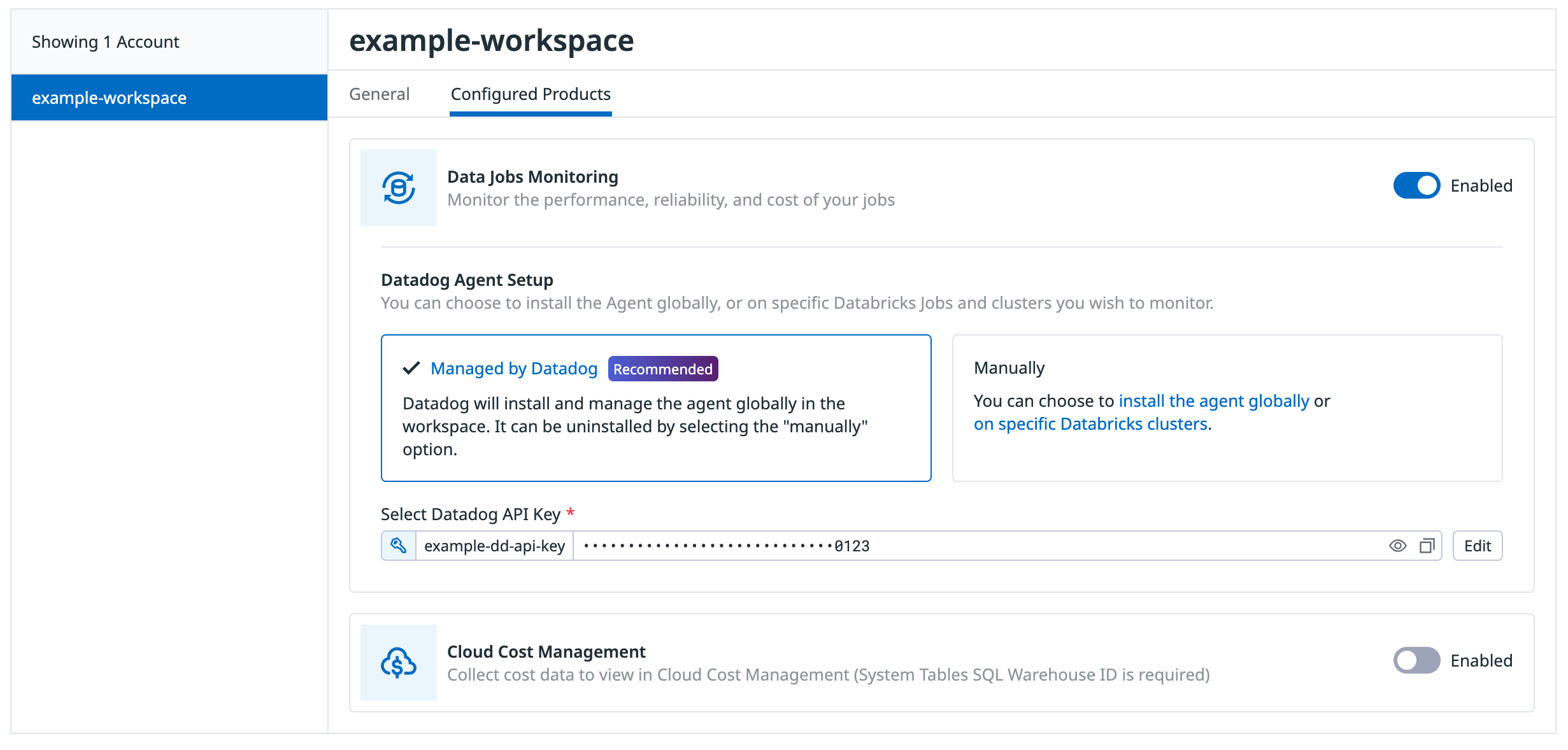

When adding the init script to a Databricks workspace already integrated with Datadog

On the Configure tab, click the workspace in the list of workspaces.

Click the Configured Products tab.

Make sure the Data Jobs Monitoring product is Enabled.

In the Datadog Agent Setup section, select the Managed by Datadog toggle button.

Click Select API Key to either select an existing Datadog API key or create a new Datadog API key.

(Optional) Turn off Enable Log Collection if you do not want to collect driver and worker logs for correlation with jobs.

Click Save Databricks Workspace at the bottom of the browser window.

Optionally, you can add tags to your Databricks cluster and Spark performance metrics, and set related options, by configuring the following environment variables in the Advanced Configuration section of your cluster in the Databricks UI or as Spark environment variables with the Databricks API:

| Variable | Description |
|---|---|
| DD_TAGS | Add tags to Databricks cluster and Spark performance metrics. Comma or space separated key:value pairs. Follow Datadog tag conventions. Example: env:staging,team:data_engineering |
| DD_ENV | Set the env environment tag on metrics, traces, and logs from this cluster. |
| DD_LOGS_CONFIG_PROCESSING_RULES | Filter the logs collected with processing rules. See Advanced Log Collection for more details. |
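Because a malformed DD_TAGS value is easy to miss, you might check it before pasting it into the cluster configuration. This is a minimal sketch, assuming Bash; the function `validate_dd_tags` is hypothetical and checks only the comma- or space-separated key:value form described in the table, not the full Datadog tag conventions.

```shell
#!/bin/bash
# Hypothetical helper: check that a DD_TAGS value is a comma- or
# space-separated list of key:value pairs.
validate_dd_tags() {
  local tags="${1:-}" tag
  local -a parts
  if [ -z "$tags" ]; then
    echo "DD_TAGS is empty" >&2
    return 1
  fi
  # Split on commas and spaces.
  IFS=', ' read -r -a parts <<< "$tags"
  for tag in "${parts[@]}"; do
    # Require a non-empty key, a colon, and a non-empty value.
    if [[ ! "$tag" =~ ^[^:]+:.+$ ]]; then
      echo "invalid tag: '$tag' (expected key:value)" >&2
      return 1
    fi
  done
  return 0
}
```

For example, `validate_dd_tags "env:staging,team:data_engineering"` succeeds, while `validate_dd_tags "env staging"` reports `env` as invalid.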

Manually install a global init script

This setup does not work on Databricks clusters in Standard (formerly Shared) access mode, because global init scripts cannot be installed on those clusters. For clusters in Standard access mode, follow the instructions in Manually install on a specific cluster instead.

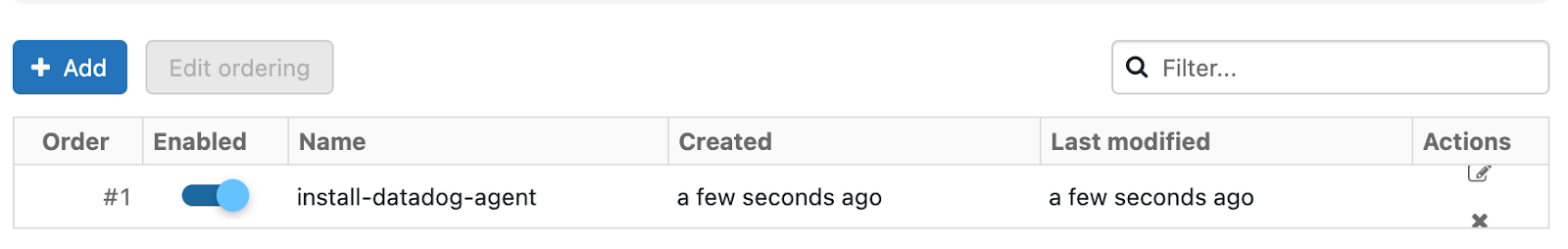

In Databricks, click your display name (email address) in the upper right corner of the page.

Select Settings and click the Compute tab.

In the All purpose clusters section, next to Global init scripts, click Manage.

Click Add. Name your script. Then, in the Script field, copy and paste the following script, remembering to replace the placeholders with your parameter values.

```shell
#!/bin/bash

# Required parameters
export DD_API_KEY=<YOUR API KEY>
export DD_SITE=<YOUR DATADOG SITE>
export DATABRICKS_WORKSPACE="<YOUR WORKSPACE NAME>"

# Download and run the latest init script
curl -L https://install.datadoghq.com/scripts/install-databricks.sh > djm-install-script
bash djm-install-script || true
```

The script above sets the required parameters, and downloads and runs the latest init script for Data Jobs Monitoring in Databricks. If you want to pin your script to a specific version, you can replace the filename in the URL with `install-databricks-0.13.5.sh` to use version `0.13.5`, for example. The source code used to generate this script, and the changes between script versions, can be found on the Datadog Agent repository.

To enable the script for all new and restarted clusters, toggle Enabled.

Click Add.
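If you switch between the latest installer and a pinned version, a small helper could build the download URL in one place. This is a sketch, assuming Bash; the function `djm_install_url` is hypothetical and only applies the filename pattern described above (`install-databricks-<version>.sh`).

```shell
#!/bin/bash
# Hypothetical helper: build the Data Jobs Monitoring installer URL.
# With no argument it returns the latest script; with a version such as
# 0.13.5 it returns the pinned install-databricks-<version>.sh file.
djm_install_url() {
  local version="${1:-}"
  local base="https://install.datadoghq.com/scripts"
  if [ -n "$version" ]; then
    echo "${base}/install-databricks-${version}.sh"
  else
    echo "${base}/install-databricks.sh"
  fi
}
```

Inside the init script, the download line would then read `curl -L "$(djm_install_url 0.13.5)" > djm-install-script`.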

Set the required init script parameters

Provide the values for the init script parameters at the beginning of the global init script.

```shell
export DD_API_KEY=<YOUR API KEY>
export DD_SITE=<YOUR DATADOG SITE>
export DATABRICKS_WORKSPACE="<YOUR WORKSPACE NAME>"
```

Optionally, you can also set other init script parameters and Datadog environment variables here, such as DD_ENV and DD_SERVICE. The script can be configured using the following parameters:

| Variable | Description | Default |
|---|---|---|
| DD_API_KEY | Your Datadog API key. | |
| DD_SITE | Your Datadog site. | |
| DATABRICKS_WORKSPACE | Name of your Databricks Workspace. It should match the name provided in the Datadog-Databricks integration step. Enclose the name in double quotes if it contains whitespace. | |
| DRIVER_LOGS_ENABLED | Collect Spark driver logs in Datadog. | false |
| WORKER_LOGS_ENABLED | Collect Spark worker logs in Datadog. | false |
| DD_TAGS | Add tags to Databricks cluster and Spark performance metrics. Comma or space separated key:value pairs. Follow Datadog tag conventions. Example: env:staging,team:data_engineering | |
| DD_ENV | Set the env environment tag on metrics, traces, and logs from this cluster. | |
| DD_LOGS_CONFIG_PROCESSING_RULES | Filter the logs collected with processing rules. See Advanced Log Collection for more details. | |
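For example, the parameter section at the top of a global init script that also turns on driver and worker log collection and sets an environment and a tag might look like the following sketch. The values `staging` and `team:data_engineering` are illustrative only, and the download and run lines of the script stay unchanged below these exports.

```shell
#!/bin/bash
# Required parameters (replace the placeholders with your values)
export DD_API_KEY="<YOUR API KEY>"
export DD_SITE="<YOUR DATADOG SITE>"
export DATABRICKS_WORKSPACE="<YOUR WORKSPACE NAME>"

# Optional parameters (example values; adjust or omit)
export DRIVER_LOGS_ENABLED=true    # collect Spark driver logs (default: false)
export WORKER_LOGS_ENABLED=true    # collect Spark worker logs (default: false)
export DD_ENV=staging              # env tag for metrics, traces, and logs
export DD_TAGS="team:data_engineering"
```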

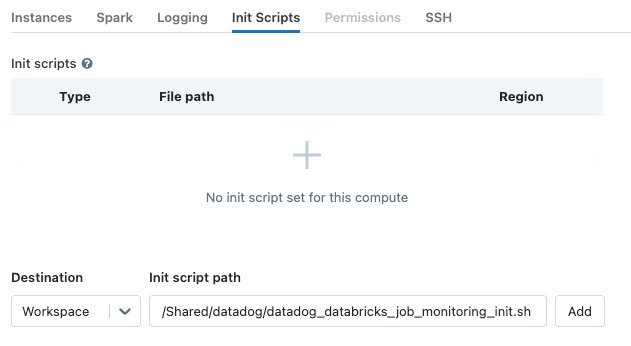

In Databricks, create an init script file in your Workspace with the following content. Note the file path; you need it when you attach the script to the cluster.

```shell
#!/bin/bash

# Download and run the latest init script
curl -L https://install.datadoghq.com/scripts/install-databricks.sh > djm-install-script
bash djm-install-script || true
```

The script above downloads and runs the latest init script for Data Jobs Monitoring in Databricks. If you want to pin your script to a specific version, you can replace the filename in the URL with `install-databricks-0.13.5.sh` to use version `0.13.5`, for example. The source code used to generate this script, and the changes between script versions, can be found on the Datadog Agent repository.

On the cluster configuration page, click the Advanced options toggle.

At the bottom of the page, go to the Init Scripts tab.

- Under the Destination drop-down, select Workspace.

- Under Init script path, enter the path to your init script.

- Click Add.

Set the required init script parameters

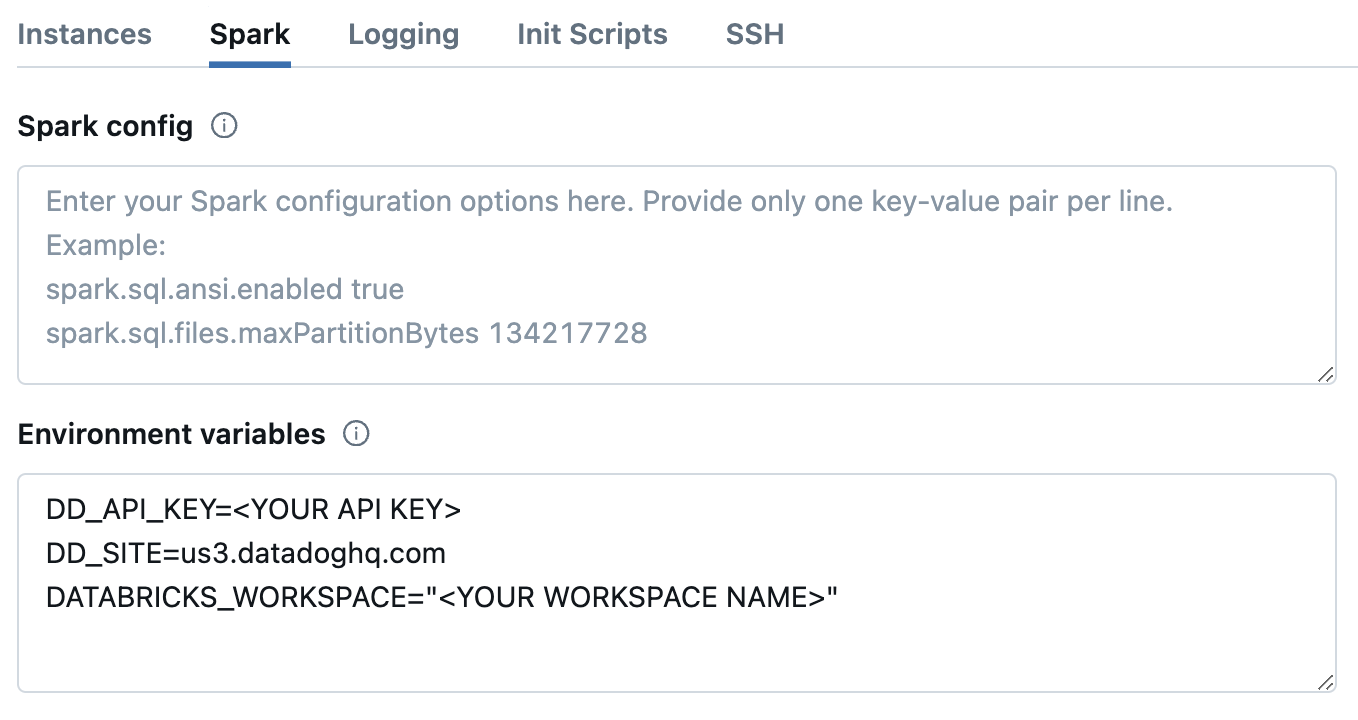

In Databricks, on the cluster configuration page, click the Advanced options toggle.

At the bottom of the page, go to the Spark tab.

In the Environment variables textbox, provide the values for the init script parameters.

```shell
DD_API_KEY=<YOUR API KEY>
DD_SITE=<YOUR DATADOG SITE>
DATABRICKS_WORKSPACE="<YOUR WORKSPACE NAME>"
```

Optionally, you can also set other init script parameters and Datadog environment variables here, such as `DD_ENV` and `DD_SERVICE`. The script can be configured using the following parameters:

| Variable | Description | Default |
|---|---|---|
| DD_API_KEY | Your Datadog API key. | |
| DD_SITE | Your Datadog site. | |
| DATABRICKS_WORKSPACE | Name of your Databricks Workspace. It should match the name provided in the Datadog-Databricks integration step. Enclose the name in double quotes if it contains whitespace. | |
| DRIVER_LOGS_ENABLED | Collect Spark driver logs in Datadog. | false |
| WORKER_LOGS_ENABLED | Collect Spark worker logs in Datadog. | false |
| DD_TAGS | Add tags to Databricks cluster and Spark performance metrics. Comma or space separated key:value pairs. Follow Datadog tag conventions. Example: env:staging,team:data_engineering | |
| DD_ENV | Set the env environment tag on metrics, traces, and logs from this cluster. | |
| DD_LOGS_CONFIG_PROCESSING_RULES | Filter the logs collected with processing rules. See Advanced Log Collection for more details. | |

- Click Confirm.

Restart already-running clusters

The init script installs the Agent when clusters start.

Already-running all-purpose clusters or long-lived job clusters must be manually restarted for the init script to install the Datadog Agent.

For scheduled jobs that run on job clusters, the init script installs the Datadog Agent automatically on the next run.
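If you have several long-running clusters to restart, the Databricks Clusters API restart endpoint (`POST /api/2.0/clusters/restart`) can do it without the UI. This is a minimal sketch, assuming Bash, `curl`, and a token allowed to restart the cluster; `restart_databricks_cluster`, `DATABRICKS_HOST`, and `DATABRICKS_TOKEN` are hypothetical names.

```shell
#!/bin/bash
# Hypothetical helper: restart one running cluster through the Databricks
# Clusters API so the init script runs and installs the Datadog Agent.
restart_databricks_cluster() {
  local cluster_id="${1:-}"
  if [ -z "$cluster_id" ] || [ -z "${DATABRICKS_HOST:-}" ] || [ -z "${DATABRICKS_TOKEN:-}" ]; then
    echo "usage: DATABRICKS_HOST=... DATABRICKS_TOKEN=... restart_databricks_cluster <cluster-id>" >&2
    return 1
  fi
  curl --silent --show-error --fail \
    --request POST \
    --header "Authorization: Bearer ${DATABRICKS_TOKEN}" \
    --header "Content-Type: application/json" \
    --data "{\"cluster_id\": \"${cluster_id}\"}" \
    "${DATABRICKS_HOST%/}/api/2.0/clusters/restart"
}
```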

Validation

In Datadog, view the Data Jobs Monitoring page to see a list of all your Databricks jobs.

If some jobs are not visible, navigate to the Configuration page to understand why. This page lists all your Databricks jobs not yet configured with the Agent on their clusters, along with guidance for completing setup.

Troubleshooting

If you don’t see any data in DJM after installing the product, follow these steps.

The init script installs the Datadog Agent. To make sure it is properly installed, SSH into the cluster and run the Agent status command:

```shell
sudo datadog-agent status
```
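When checking many nodes, it can help to distinguish "the Agent is not installed" from "the Agent is installed but unhealthy". This is a minimal sketch, assuming Bash on the cluster node; the function `check_datadog_agent` is hypothetical and simply wraps the status command above.

```shell
#!/bin/bash
# Hypothetical helper: run on a cluster node (for example over SSH).
# Reports when the datadog-agent binary is missing; otherwise prints the
# Agent status.
check_datadog_agent() {
  if ! command -v datadog-agent >/dev/null 2>&1; then
    echo "datadog-agent not found on PATH; the init script may not have installed the Agent" >&2
    return 1
  fi
  sudo datadog-agent status
}
```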

Advanced Configuration

Filter log collection on clusters

Exclude all log collection from an individual cluster

Configure the following environment variable in the Advanced Configuration section of your cluster in the Databricks UI or as a Spark environment variable in the Databricks API.

```shell
DD_LOGS_CONFIG_PROCESSING_RULES=[{\"type\": \"exclude_at_match\",\"name\": \"drop_all_logs\",\"pattern\": \".*\"}]
```

Tag spans at runtime

You can set tags on Spark spans at runtime. These tags are applied only to spans that start after the tag is added.

```scala
// Add a tag to all subsequent Spark computations
sparkContext.setLocalProperty("spark.datadog.tags.key", "value")
spark.read.parquet(...)
```

To remove a runtime tag:

```scala
// Remove the tag from all subsequent Spark computations
sparkContext.setLocalProperty("spark.datadog.tags.key", null)
```

Aggregate cluster metrics from one-time job runs

This configuration applies if you want cluster resource utilization data about your jobs and you create a new job and cluster for each run through the one-time run API endpoint. This pattern is common when using orchestration tools outside of Databricks, such as Airflow or Azure Data Factory.

If you are submitting Databricks Jobs through the one-time run API endpoint, each job run has a unique job ID. This can make it difficult to group and analyze cluster metrics for jobs that use ephemeral clusters. To aggregate cluster utilization from the same job and assess performance across multiple runs, you must set the DD_JOB_NAME variable inside the spark_env_vars of every new_cluster to the same value as your request payload’s run_name.
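One way to keep `DD_JOB_NAME` and `run_name` from drifting apart is to generate both from a single variable when calling the one-time run endpoint (`POST /api/2.1/jobs/runs/submit`). This is a minimal sketch, assuming Bash, `curl`, and run names and notebook paths without double quotes or backslashes; `submit_one_time_run`, `DATABRICKS_HOST`, and `DATABRICKS_TOKEN` are hypothetical names, and the cluster settings are placeholders copied from the example request body.

```shell
#!/bin/bash
# Hypothetical helper: submit a one-time run whose run_name and DD_JOB_NAME
# come from the same variable, so cluster metrics from every run of this job
# can be grouped under one name. Adjust the cluster settings for your workspace.
submit_one_time_run() {
  local job_name="${1:-}" notebook_path="${2:-}" payload
  if [ -z "$job_name" ] || [ -z "$notebook_path" ] || [ -z "${DATABRICKS_HOST:-}" ] || [ -z "${DATABRICKS_TOKEN:-}" ]; then
    echo "usage: submit_one_time_run <run-name> <notebook-path>" >&2
    return 1
  fi
  payload=$(cat <<EOF
{
  "run_name": "${job_name}",
  "tasks": [
    {
      "task_key": "main",
      "notebook_task": { "notebook_path": "${notebook_path}", "source": "WORKSPACE" },
      "new_cluster": {
        "num_workers": 1,
        "spark_version": "13.3.x-scala2.12",
        "node_type_id": "i3.xlarge",
        "spark_env_vars": { "DD_JOB_NAME": "${job_name}" }
      }
    }
  ]
}
EOF
)
  curl --silent --show-error --fail \
    --request POST \
    --header "Authorization: Bearer ${DATABRICKS_TOKEN}" \
    --header "Content-Type: application/json" \
    --data "$payload" \
    "${DATABRICKS_HOST%/}/api/2.1/jobs/runs/submit"
}
```

An orchestrator would call, for example, `submit_one_time_run "Example Job" "/Path/to/example/task/notebook"` for every run.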

Here’s an example of a one-time job run request body:

```json
{
  "run_name": "Example Job",
  "idempotency_token": "8f018174-4792-40d5-bcbc-3e6a527352c8",
  "tasks": [
    {
      "task_key": "Example Task",
      "description": "Description of task",
      "depends_on": [],
      "notebook_task": {
        "notebook_path": "/Path/to/example/task/notebook",
        "source": "WORKSPACE"
      },
      "new_cluster": {
        "num_workers": 1,
        "spark_version": "13.3.x-scala2.12",
        "node_type_id": "i3.xlarge",
        "spark_env_vars": {
          "DD_JOB_NAME": "Example Job"
        }
      }
    }
  ]
}
```

Set up Data Jobs Monitoring with Databricks Networking Restrictions

With Databricks Networking Restrictions, Datadog may not have access to your Databricks APIs, which it requires to collect traces for Databricks job executions along with tags and other metadata.

If you are controlling Databricks API access with IP access lists, allow-listing Datadog’s specific webhook IP addresses allows Datadog to connect to the Databricks APIs in your workspace. See Databricks’s documentation for configuring IP access lists for workspaces to give Datadog API access.

If you are using Databricks Private Connectivity, reach out to the Datadog support team to discuss potential options.
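If you manage IP access lists through the Databricks API, an allow list for the Datadog webhook IP ranges could be created with `POST /api/2.0/ip-access-lists`. This is a minimal sketch, assuming Bash, `curl`, an admin token, and that IP access lists are already enabled and in use for the workspace; `allow_datadog_webhook_ips`, the label `datadog-webhooks`, `DATABRICKS_HOST`, and `DATABRICKS_TOKEN` are hypothetical. The function does not fetch Datadog's IP ranges; pass the webhook ranges published for your Datadog site as arguments. Check the Databricks IP access list documentation first, because once a workspace has an allow list, addresses outside all allow lists are blocked.

```shell
#!/bin/bash
# Hypothetical helper: create an ALLOW list containing the Datadog webhook
# IP ranges passed as arguments (CIDR blocks or single addresses).
# Assumes IP access lists are already enabled for the workspace.
allow_datadog_webhook_ips() {
  if [ "$#" -eq 0 ] || [ -z "${DATABRICKS_HOST:-}" ] || [ -z "${DATABRICKS_TOKEN:-}" ]; then
    echo "usage: allow_datadog_webhook_ips <cidr> [<cidr> ...]" >&2
    return 1
  fi
  # Build a JSON array such as ["<cidr-1>","<cidr-2>"] from the arguments.
  local ips
  ips=$(printf '"%s",' "$@")
  ips="[${ips%,}]"
  curl --silent --show-error --fail \
    --request POST \
    --header "Authorization: Bearer ${DATABRICKS_TOKEN}" \
    --header "Content-Type: application/json" \
    --data "{\"label\": \"datadog-webhooks\", \"list_type\": \"ALLOW\", \"ip_addresses\": ${ips}}" \
    "${DATABRICKS_HOST%/}/api/2.0/ip-access-lists"
}
```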
