
Send OpenTelemetry logs with Observability Pipelines


CloudPrem is in Preview

Join the CloudPrem Preview to access new self-hosted log management features.

Overview

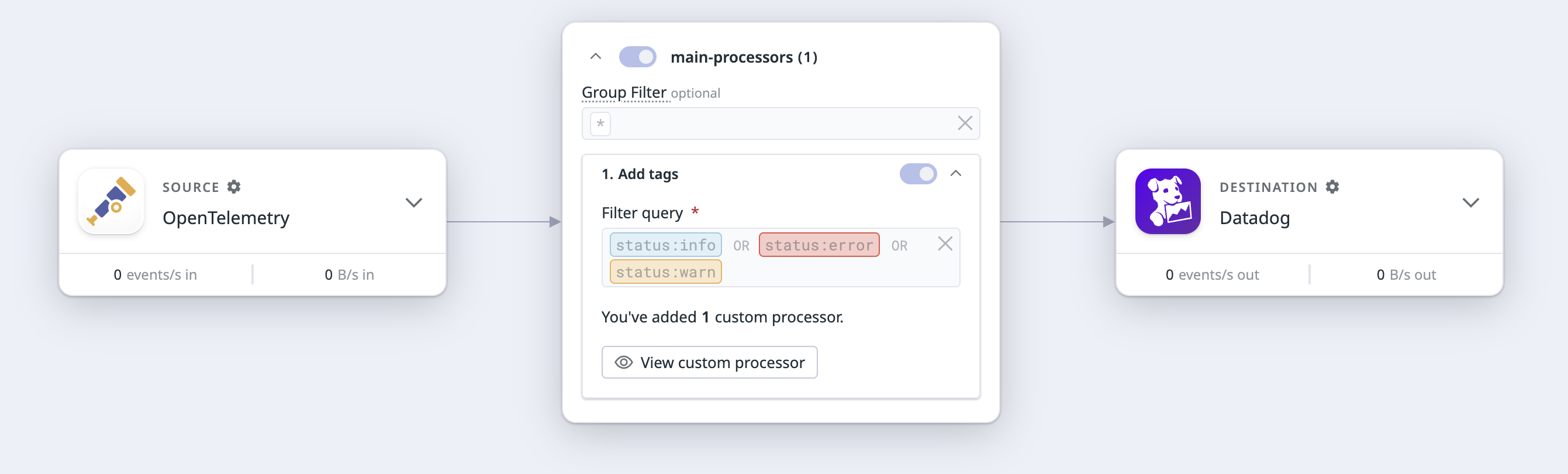

CloudPrem supports log ingestion from OpenTelemetry (OTEL) Collectors by using Observability Pipelines as the ingestion layer. This guide provides step-by-step instructions for sending OTEL logs to CloudPrem without disrupting your existing OTEL configuration.

By the end of this guide, you will be able to:

- Start CloudPrem locally.

- Create an Observability Pipeline with a custom processor to add tags.

- Run the Observability Pipelines Worker.

- Send OpenTelemetry logs using the Python SDK.

- View tagged logs in Datadog.

Prerequisites

- CloudPrem Preview access.

- Datadog API Key: Get your API key.

- Datadog Application Key: Get your application key.

- Docker: Install Docker.

- Python 3 and pip: For sending test OTLP logs.

- jq: For extracting the pipeline ID from the API response in Step 2.

Step 1: Start CloudPrem

Start a local CloudPrem instance. Replace <YOUR_API_KEY> with your Datadog API Key:

export DD_API_KEY="<YOUR_API_KEY>"
export DD_SITE="datadoghq.com"

docker run -d \
  --name cloudprem \
  -v $(pwd)/qwdata:/quickwit/qwdata \
  -e DD_SITE=${DD_SITE} \
  -e DD_API_KEY=${DD_API_KEY} \
  -p 127.0.0.1:7280:7280 \
  datadog/cloudprem run
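CloudPrem may take a few seconds to start accepting connections. If you script the remaining steps, a small Python sketch like the following can wait until something is listening on the port published above (127.0.0.1:7280) before continuing. It only checks that the TCP port accepts connections, not that CloudPrem has finished initializing; the wait_for_port helper and its timeout values are illustrative assumptions.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 60.0, interval: float = 1.0) -> bool:
    """Poll until a TCP connection to host:port succeeds or the timeout expires.

    This only proves that a process accepts connections on the port; it does
    not confirm that the service behind it is fully ready.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            # create_connection raises OSError (e.g. ConnectionRefusedError)
            # while nothing is listening on the port yet.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

For example, wait_for_port("127.0.0.1", 7280) returns True once the published CloudPrem port opens, and False if it is still closed after 60 seconds.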

Step 2: Create an Observability Pipeline with the API

Create a pipeline with an OpenTelemetry source, a custom processor that adds tags, and a CloudPrem destination. Replace <YOUR_APP_KEY> with your Datadog Application Key:

export DD_APP_KEY="<YOUR_APP_KEY>"

curl -s -X POST "https://api.${DD_SITE}/api/v2/obs-pipelines/pipelines" \
  -H "Content-Type: application/json" \
  -H "DD-API-KEY: ${DD_API_KEY}" \
  -H "DD-APPLICATION-KEY: ${DD_APP_KEY}" \
  -d '{
  "data": {
    "attributes": {
      "name": "OTEL to CloudPrem Pipeline",
      "config": {
        "sources": [
          {
            "id": "otel-source",
            "type": "opentelemetry"
          }
        ],
        "processor_groups": [
          {
            "id": "main-processors",
            "enabled": true,
            "include": "*",
            "inputs": ["otel-source"],
            "processors": [
              {
                "id": "add-tags",
                "display_name": "Add tags",
                "enabled": true,
                "type": "custom_processor",
                "include": "*",
                "remaps": [
                  {
                    "drop_on_error": false,
                    "enabled": true,
                    "include": "*",
                    "name": "ddtags",
                    "source": ".ddtags = [\"pipeline:observability-pipelines\", \"source:opentelemetry\"]"
                  }
                ]
              }
            ]
          }
        ],
        "destinations": [
          {
            "id": "cloudprem-dest",
            "type": "cloud_prem",
            "inputs": ["main-processors"]
          }
        ]
      }
    },
    "type": "pipelines"
  }
}' | jq -r '.data.id'

This command returns the pipeline_id. Save it for the next step.
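If you prefer to script this step, a minimal Python sketch using only the standard library can send the same request body as the curl command above. It assumes the endpoint, headers, and response shape (data.id) exactly as the curl command and its jq filter use them; build_pipeline_payload and create_pipeline are hypothetical helper names.

```python
import json
import urllib.request


def build_pipeline_payload(name: str = "OTEL to CloudPrem Pipeline") -> dict:
    """Return the same request body that the curl command sends."""
    return {
        "data": {
            "attributes": {
                "name": name,
                "config": {
                    "sources": [{"id": "otel-source", "type": "opentelemetry"}],
                    "processor_groups": [
                        {
                            "id": "main-processors",
                            "enabled": True,
                            "include": "*",
                            "inputs": ["otel-source"],
                            "processors": [
                                {
                                    "id": "add-tags",
                                    "display_name": "Add tags",
                                    "enabled": True,
                                    "type": "custom_processor",
                                    "include": "*",
                                    "remaps": [
                                        {
                                            "drop_on_error": False,
                                            "enabled": True,
                                            "include": "*",
                                            "name": "ddtags",
                                            "source": '.ddtags = ["pipeline:observability-pipelines", "source:opentelemetry"]',
                                        }
                                    ],
                                }
                            ],
                        }
                    ],
                    "destinations": [
                        {"id": "cloudprem-dest", "type": "cloud_prem", "inputs": ["main-processors"]}
                    ],
                },
            },
            "type": "pipelines",
        }
    }


def create_pipeline(api_key: str, app_key: str, site: str = "datadoghq.com") -> str:
    """POST the payload to the pipelines endpoint and return the new pipeline ID (data.id)."""
    request = urllib.request.Request(
        f"https://api.{site}/api/v2/obs-pipelines/pipelines",
        data=json.dumps(build_pipeline_payload()).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "DD-API-KEY": api_key,
            "DD-APPLICATION-KEY": app_key,
        },
        method="POST",
    )
    # urlopen raises urllib.error.HTTPError for 4xx/5xx responses.
    with urllib.request.urlopen(request) as response:
        return json.load(response)["data"]["id"]
```

For example, create_pipeline(os.environ["DD_API_KEY"], os.environ["DD_APP_KEY"], os.environ.get("DD_SITE", "datadoghq.com")) returns the same ID that the jq filter prints (after import os).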

Note: The custom processor adds a ddtags field with custom tags to all logs through the remaps configuration.
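The remap source is a Vector Remap Language (VRL) assignment. To make its effect concrete, the following Python sketch mimics what that statement does to a single event; it is an illustration only, not how the Worker evaluates VRL, and apply_add_tags_remap is a hypothetical name. Because the statement is a plain assignment, any ddtags value already present on the event is replaced rather than extended.

```python
from typing import Any, Dict, List

# Same tags as the remap source: .ddtags = ["pipeline:observability-pipelines", "source:opentelemetry"]
PIPELINE_TAGS: List[str] = ["pipeline:observability-pipelines", "source:opentelemetry"]


def apply_add_tags_remap(event: Dict[str, Any]) -> Dict[str, Any]:
    """Mimic the VRL assignment: set ddtags, overwriting any previous value."""
    tagged = dict(event)  # shallow copy, so the input event is left unchanged
    tagged["ddtags"] = list(PIPELINE_TAGS)
    return tagged
```

For example, an event that arrives with ddtags set to ["env:dev"] leaves this function with only the two pipeline tags; keeping existing tags would require merging them in the remap source instead.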

Step 3: Run the Observability Pipelines Worker

Start the Observability Pipelines Worker using Docker. Replace <PIPELINE_ID> with the ID from Step 2:

export PIPELINE_ID="<PIPELINE_ID>"

docker run -d \
  --name opw \
  -p 4317:4317 \
  -p 4318:4318 \
  -e DD_API_KEY=${DD_API_KEY} \
  -e DD_SITE=${DD_SITE} \
  -e DD_OP_PIPELINE_ID=${PIPELINE_ID} \
  -e DD_OP_SOURCE_OTEL_GRPC_ADDRESS="0.0.0.0:4317" \
  -e DD_OP_SOURCE_OTEL_HTTP_ADDRESS="0.0.0.0:4318" \
  -e DD_OP_DESTINATION_CLOUDPREM_ENDPOINT_URL="http://host.docker.internal:7280" \
  datadog/observability-pipelines-worker run

Notes:

- The Worker exposes port 4318 for HTTP and 4317 for gRPC.

- On macOS/Windows, use host.docker.internal to connect to CloudPrem on the host machine.

- On Linux, use --network host instead of the -p flags, and use http://localhost:7280 for the endpoint.
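If you launch the Worker from a script that must run on both Linux and macOS/Windows, the platform notes above can be encoded in a small Python sketch that builds the docker run arguments. The opw_docker_args helper is hypothetical. One deliberate difference from the command above: it passes -e DD_API_KEY and -e DD_SITE by name only, so Docker copies their values from your environment and the API key does not appear in the argument list.

```python
import platform
from typing import List, Optional


def opw_docker_args(pipeline_id: str, system: Optional[str] = None) -> List[str]:
    """Build docker run arguments for the Worker, following the platform notes."""
    system = system or platform.system()  # "Linux", "Darwin" (macOS), or "Windows"
    args = ["docker", "run", "-d", "--name", "opw"]
    if system == "Linux":
        # Linux: share the host network instead of publishing ports with -p.
        args += ["--network", "host"]
        cloudprem_url = "http://localhost:7280"
    else:
        # macOS/Windows: publish the OTLP ports and reach the host via host.docker.internal.
        args += ["-p", "4317:4317", "-p", "4318:4318"]
        cloudprem_url = "http://host.docker.internal:7280"
    args += [
        # Name-only -e flags make Docker read the values from the calling environment.
        "-e", "DD_API_KEY",
        "-e", "DD_SITE",
        "-e", f"DD_OP_PIPELINE_ID={pipeline_id}",
        "-e", "DD_OP_SOURCE_OTEL_GRPC_ADDRESS=0.0.0.0:4317",
        "-e", "DD_OP_SOURCE_OTEL_HTTP_ADDRESS=0.0.0.0:4318",
        "-e", f"DD_OP_DESTINATION_CLOUDPREM_ENDPOINT_URL={cloudprem_url}",
        "datadog/observability-pipelines-worker", "run",
    ]
    return args
```

For example, subprocess.run(opw_docker_args(os.environ["PIPELINE_ID"]), check=True) starts the Worker with the flags for the current platform (after import os, subprocess).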

Step 4: Send logs through Observability Pipelines

Install the OpenTelemetry SDK and send a test log to the Observability Pipelines Worker:

pip install opentelemetry-api opentelemetry-sdk opentelemetry-exporter-otlp-proto-http

python3 -c "
import time, logging
from opentelemetry.sdk._logs import LoggerProvider, LoggingHandler
from opentelemetry.sdk._logs.export import BatchLogRecordProcessor
from opentelemetry.exporter.otlp.proto.http._log_exporter import OTLPLogExporter
from opentelemetry.sdk.resources import Resource

# Export logs over OTLP/HTTP to the Worker started in Step 3
exporter = OTLPLogExporter(endpoint='http://localhost:4318/v1/logs')
resource = Resource.create({'service.name': 'otel-demo'})
log_provider = LoggerProvider(resource=resource)
log_provider.add_log_record_processor(BatchLogRecordProcessor(exporter))

# Route standard library logging records through the OpenTelemetry handler
handler = LoggingHandler(logger_provider=log_provider)
logging.getLogger().addHandler(handler)
logging.getLogger().setLevel(logging.INFO)

logging.info('Hello from OpenTelemetry via Observability Pipelines')
time.sleep(2)
# shutdown() flushes any batched records before the process exits
log_provider.shutdown()
print('✓ Log sent successfully')
"

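For production, configure your OpenTelemetry Collector to forward logs to the Worker with the otlphttp exporter. This snippet assumes your Collector already defines an otlp receiver; replace localhost with the Worker's address if the Collector runs on a different host: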

exporters:
  otlphttp:
    endpoint: http://localhost:4318

service:
  pipelines:
    logs:
      receivers: [otlp]
      exporters: [otlphttp]
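To test the Worker without installing the SDK, you can also post a hand-built OTLP/HTTP request. The following Python sketch uses only the standard library and the JSON encoding of OTLP (resourceLogs, scopeLogs, logRecords). It assumes the Worker's OpenTelemetry source accepts JSON-encoded OTLP on /v1/logs, which this guide does not confirm; if the request is rejected, use the SDK example, which sends binary protobuf. The helper names and the scope name are hypothetical.

```python
import json
import time
import urllib.request
from typing import Any, Dict


def build_otlp_json_logs(service_name: str, message: str) -> Dict[str, Any]:
    """Build one log record in the OTLP/JSON encoding of an ExportLogsServiceRequest."""
    return {
        "resourceLogs": [
            {
                "resource": {
                    "attributes": [
                        {"key": "service.name", "value": {"stringValue": service_name}}
                    ]
                },
                "scopeLogs": [
                    {
                        "scope": {"name": "manual-otlp-json"},  # hypothetical scope name
                        "logRecords": [
                            {
                                # OTLP/JSON encodes 64-bit integers as decimal strings.
                                "timeUnixNano": str(time.time_ns()),
                                "severityNumber": 9,  # SEVERITY_NUMBER_INFO
                                "severityText": "INFO",
                                "body": {"stringValue": message},
                            }
                        ],
                    }
                ],
            }
        ]
    }


def send_otlp_json(payload: Dict[str, Any], endpoint: str = "http://localhost:4318/v1/logs") -> int:
    """POST the payload to the Worker's OTLP/HTTP port and return the HTTP status code."""
    request = urllib.request.Request(
        endpoint,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # urlopen raises urllib.error.HTTPError if the Worker rejects the request.
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status
```

For example, send_otlp_json(build_otlp_json_logs("otel-demo", "Hello from OTLP/JSON")) returns the HTTP status code, typically 200, when the Worker accepts the request.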

Verify the pipeline and CloudPrem

Check that all components are running:

# Check CloudPrem status
docker logs cloudprem --tail 20

# Check Observability Pipelines Worker status
docker logs opw --tail 20

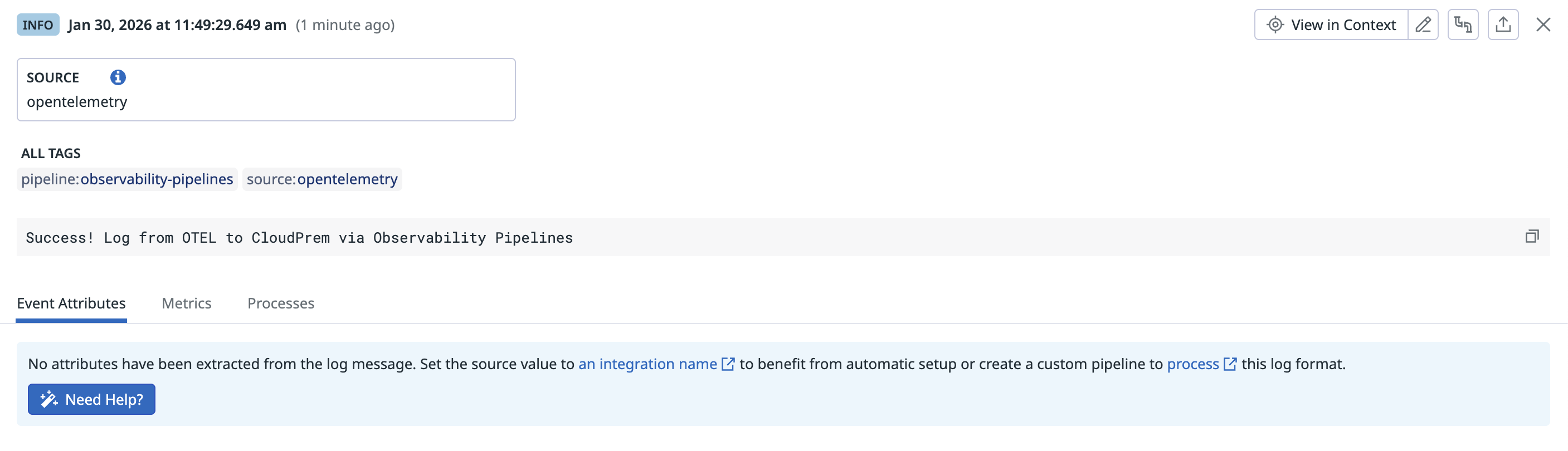
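Instead of reading the logs by eye, a Python sketch can confirm that both containers from this guide are still running. It calls docker inspect --format '{{.State.Running}}' for each container name; the containers_running helper and its injectable run parameter (which lets the function be exercised without Docker) are illustrative choices, not part of the guide.

```python
import subprocess
from typing import Callable, Dict, Iterable


def containers_running(
    names: Iterable[str],
    run: Callable[..., subprocess.CompletedProcess] = subprocess.run,
) -> Dict[str, bool]:
    """Report whether each named container is running, using docker inspect."""
    status: Dict[str, bool] = {}
    for name in names:
        result = run(
            ["docker", "inspect", "--format", "{{.State.Running}}", name],
            capture_output=True,
            text=True,
        )
        # A non-zero exit code usually means the container does not exist
        # (or the Docker daemon is unreachable); treat both as not running.
        status[name] = result.returncode == 0 and result.stdout.strip() == "true"
    return status
```

For example, containers_running(["cloudprem", "opw"]) returns {"cloudprem": True, "opw": True} when both containers are up.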

Step 5: View logs in Datadog

- Go to the Datadog Log Explorer.

- In the left facet panel, select your CloudPrem index under CLOUDPREM INDEXES.

- You should see your OpenTelemetry logs from the otel-demo service with the custom tags pipeline:observability-pipelines and source:opentelemetry.

Next steps

- Configure your OpenTelemetry Collector or instrumented applications to send logs to the Worker.

- Add more processors to your pipeline (sampling, enrichment, transformation).

- Scale the Worker deployment for production workloads.

- See the Observability Pipelines documentation for advanced configurations.

Cleanup

To stop and remove the containers:

docker stop cloudprem opw
docker rm cloudprem opw
