(LEGACY) Set Up Observability Pipelines in Datadog

If you upgrade OP Workers from version 1.8 or below to version 2.0 or above, your existing pipelines will break. Do not upgrade if you want to keep using OP Worker version 1.8 or below. To use OP Worker 2.0 or above, you must migrate your OP Worker 1.8 or earlier pipelines to OP Worker 2.x.

Datadog recommends that you update to OP Worker versions 2.0 or above. Upgrading to a major OP Worker version and keeping it updated is the only supported way to get the latest OP Worker functionality, fixes, and security updates.

Overview

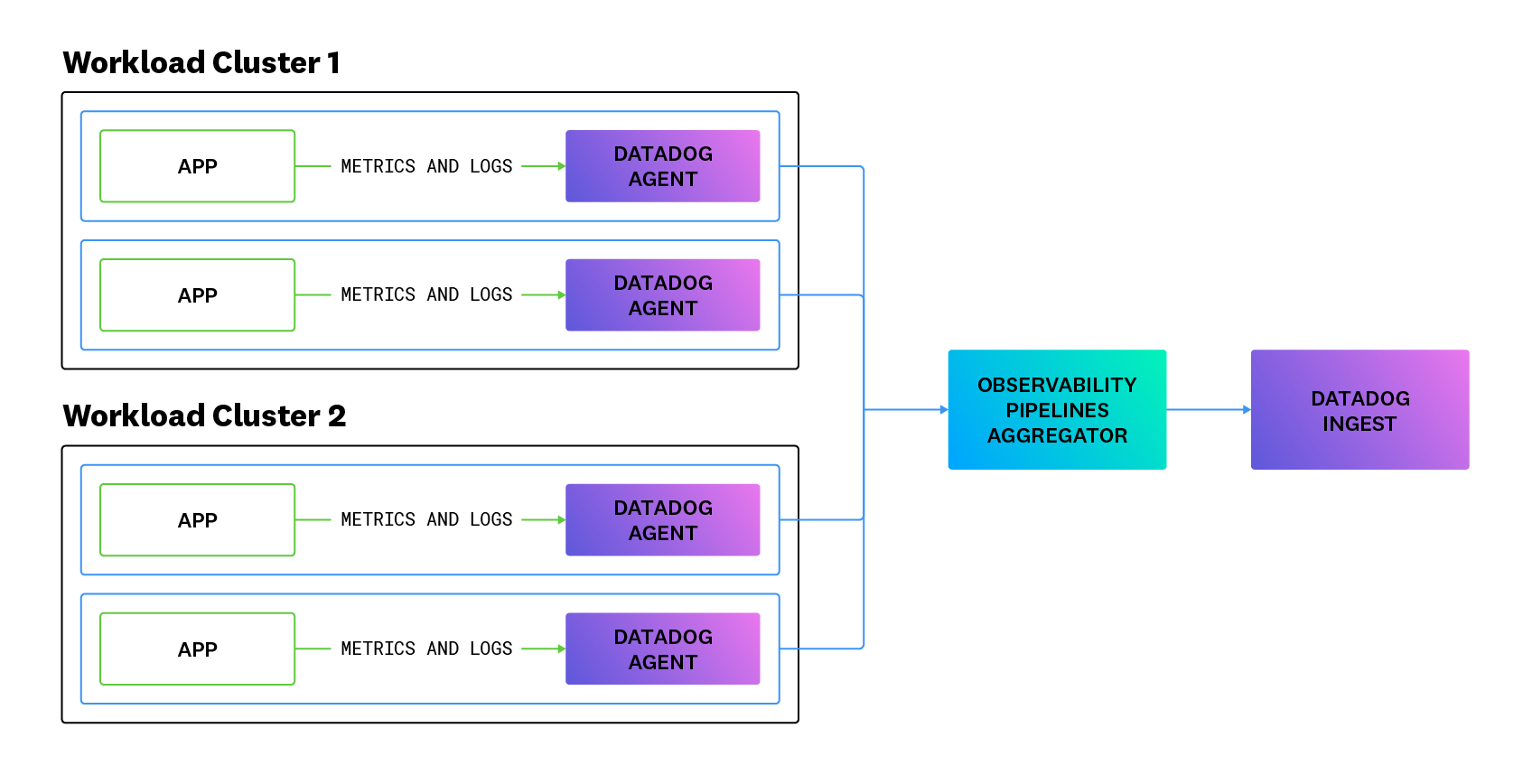

The Observability Pipelines Worker can collect, process, and route logs from any source to any destination. Using Datadog, you can build and manage all of your Observability Pipelines Worker deployments at scale.

This guide walks you through deploying the Worker in your common tools cluster and configuring the Datadog Agent to send logs and metrics to the Worker.

Deployment Modes

Remote configuration for Observability Pipelines is in private beta. Contact Datadog support or your Customer Success Manager for access.

If you are enrolled in the private beta of Remote Configuration, you can roll out changes to your Workers remotely from the Datadog UI, rather than updating your pipeline configuration in a text editor and then rolling out the changes manually. Choose your deployment method when you create a pipeline and install your Workers.

See Updating deployment modes for how to change the deployment mode after a pipeline is deployed.

Assumptions

- You are already using Datadog and want to use Observability Pipelines.

- You have administrative access to the clusters where the Observability Pipelines Worker is going to be deployed, as well as to the workloads that are going to be aggregated.

- You have a common tools or security cluster for your environment to which all other clusters are connected.

Prerequisites

Before installing, make sure you have:

- A valid Datadog API key.

- A Pipeline ID.

You can generate both of these in Observability Pipelines.
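Before running any of the install commands, it can help to confirm that both values are actually available in your shell. The following is a minimal sketch, assuming you export them under the variable names DD_API_KEY and DD_OP_PIPELINE_ID used later in this guide; the function name check_opw_prereqs is hypothetical.

```shell
# Report whether the two prerequisite values are present in the environment.
# DD_API_KEY and DD_OP_PIPELINE_ID are the variable names used by the
# install commands in this guide; check_opw_prereqs is an illustrative name.
check_opw_prereqs() {
  missing=""
  [ -n "${DD_API_KEY:-}" ] || missing="$missing DD_API_KEY"
  [ -n "${DD_OP_PIPELINE_ID:-}" ] || missing="$missing DD_OP_PIPELINE_ID"
  if [ -n "$missing" ]; then
    echo "Missing:$missing" >&2
    return 1
  fi
  echo "Prerequisites present"
}
```

Calling check_opw_prereqs prints a confirmation when both variables are set and returns a non-zero status otherwise, so it can gate the rest of an install script.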

Provider-specific requirements

Ensure that your machine is configured to run Docker.

To run the Worker on your Kubernetes nodes, you need a minimum of two nodes with one CPU and 512 MB RAM available. Datadog recommends creating a separate node pool for the Workers, which is also the recommended configuration for production deployments.

- The EBS CSI driver is required. To see if it is installed, run the following command and look for ebs-csi-controller in the list:

kubectl get pods -n kube-system

- A StorageClass is required for the Workers to provision the correct EBS drives. To see if it is installed already, run the following command and look for io2 in the list:

kubectl get storageclass

If io2 is not present, download the StorageClass YAML and kubectl apply it.

- The AWS Load Balancer controller is required. To see if it is installed, run the following command and look for aws-load-balancer-controller in the list:

helm list -A

- Datadog recommends using Amazon EKS >= 1.16.

See Best Practices for OPW Aggregator Architecture for production-level requirements.
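The EBS CSI driver, io2 StorageClass, and AWS Load Balancer controller checks described above all follow the same pattern: list resources, then look for a name. A small helper can make that pattern scriptable. This is only a sketch; has_component is a hypothetical name, and the commented commands need access to your cluster.

```shell
# Succeed if the listing read from standard input mentions the given name.
# -F treats the name as a fixed string rather than a regular expression.
has_component() {
  grep -qF -- "$1"
}

# Example usage (requires cluster access, so it is left commented out):
#   kubectl get pods -n kube-system | has_component ebs-csi-controller
#   kubectl get storageclass        | has_component io2
#   helm list -A                    | has_component aws-load-balancer-controller
```

Each pipeline returns a zero exit status when the name appears in the listing, which makes the checks easy to combine with && in a preflight script.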

There are no provider-specific requirements for APT-based Linux.

There are no provider-specific requirements for RPM-based Linux.

In order to run the Worker in your AWS account, you need administrative access to that account. Collect the following pieces of information to run the Worker instances:

- The VPC ID your instances will run in.

- The subnet IDs your instances will run in.

- The AWS region your VPC is located in.

CloudFormation installs only support Remote Configuration.

Only use CloudFormation installs for non-production-level workloads.

Installing the Observability Pipelines Worker

The Observability Pipelines Worker Docker image is published to Docker Hub.

- Download the sample pipeline configuration file.

- Run the following command to start the Observability Pipelines Worker with Docker:

docker run -i -e DD_API_KEY=<API_KEY> \
  -e DD_OP_PIPELINE_ID=<PIPELINE_ID> \
  -e DD_SITE=<SITE> \
  -p 8282:8282 \
  -v ./pipeline.yaml:/etc/observability-pipelines-worker/pipeline.yaml:ro \
  datadog/observability-pipelines-worker run

Replace <API_KEY> with your Datadog API key, <PIPELINE_ID> with your Observability Pipelines configuration ID, and <SITE> with your Datadog site. ./pipeline.yaml must be the relative or absolute path to the configuration you downloaded in the first step.

- Download the Helm chart values file for AWS EKS.

- In the Helm chart, replace the datadog.apiKey and datadog.pipelineId values to match your pipeline and use your Datadog site for the site value. Then, install it in your cluster with the following commands:

helm repo add datadog https://helm.datadoghq.com
helm repo update
helm upgrade --install \
  opw datadog/observability-pipelines-worker \
  -f aws_eks.yaml

- Download the Helm chart values file for Azure AKS.

- In the Helm chart, replace the datadog.apiKey and datadog.pipelineId values to match your pipeline and use your Datadog site for the site value. Then, install it in your cluster with the following commands:

helm repo add datadog https://helm.datadoghq.com
helm repo update
helm upgrade --install \
  opw datadog/observability-pipelines-worker \
  -f azure_aks.yaml

- Download the Helm chart values file for Google GKE.

- In the Helm chart, replace the datadog.apiKey and datadog.pipelineId values to match your pipeline and use your Datadog site for the site value. Then, install it in your cluster with the following commands:

helm repo add datadog https://helm.datadoghq.com
helm repo update
helm upgrade --install \
  opw datadog/observability-pipelines-worker \
  -f google_gke.yaml

- Run the following commands to set up APT to download through HTTPS:

sudo apt-get update
sudo apt-get install apt-transport-https curl gnupg

- Run the following commands to set up the Datadog deb repo on your system and create a Datadog archive keyring:

sudo sh -c "echo 'deb [signed-by=/usr/share/keyrings/datadog-archive-keyring.gpg] https://apt.datadoghq.com/ stable observability-pipelines-worker-1' > /etc/apt/sources.list.d/datadog-observability-pipelines-worker.list"
sudo touch /usr/share/keyrings/datadog-archive-keyring.gpg
sudo chmod a+r /usr/share/keyrings/datadog-archive-keyring.gpg
curl https://keys.datadoghq.com/DATADOG_APT_KEY_CURRENT.public | sudo gpg --no-default-keyring --keyring /usr/share/keyrings/datadog-archive-keyring.gpg --import --batch
curl https://keys.datadoghq.com/DATADOG_APT_KEY_06462314.public | sudo gpg --no-default-keyring --keyring /usr/share/keyrings/datadog-archive-keyring.gpg --import --batch
curl https://keys.datadoghq.com/DATADOG_APT_KEY_F14F620E.public | sudo gpg --no-default-keyring --keyring /usr/share/keyrings/datadog-archive-keyring.gpg --import --batch
curl https://keys.datadoghq.com/DATADOG_APT_KEY_C0962C7D.public | sudo gpg --no-default-keyring --keyring /usr/share/keyrings/datadog-archive-keyring.gpg --import --batch

- Run the following commands to update your local apt repo and install the Worker:

sudo apt-get update
sudo apt-get install observability-pipelines-worker datadog-signing-keys

- Add your keys and the site to the Worker’s environment variables. The command uses sudo tee so that the file is written with root privileges:

sudo tee /etc/default/observability-pipelines-worker > /dev/null <<-EOF
DD_API_KEY=<API_KEY>
DD_OP_PIPELINE_ID=<PIPELINE_ID>
DD_SITE=<SITE>
EOF

- Download the sample configuration file to /etc/observability-pipelines-worker/pipeline.yaml on the host.

- Start the Worker:

sudo systemctl restart observability-pipelines-worker
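If you script the environment-file step, it may be worth refusing to write the file when any of the three values is empty, since a blank value would otherwise only surface when the Worker starts. The sketch below assumes the values are already exported in the calling shell; write_opw_env is a hypothetical helper name, and the destination path is passed in so the function can be tried against a scratch file first.

```shell
# Write the Worker's environment file from variables in the current shell.
# The guide's destination is /etc/default/observability-pipelines-worker,
# which needs root privileges; write_opw_env is an illustrative name.
write_opw_env() {
  dest="$1"
  if [ -z "${DD_API_KEY:-}" ] || [ -z "${DD_OP_PIPELINE_ID:-}" ] || [ -z "${DD_SITE:-}" ]; then
    echo "DD_API_KEY, DD_OP_PIPELINE_ID, and DD_SITE must all be set" >&2
    return 1
  fi
  cat > "$dest" <<EOF
DD_API_KEY=${DD_API_KEY}
DD_OP_PIPELINE_ID=${DD_OP_PIPELINE_ID}
DD_SITE=${DD_SITE}
EOF
}
```

For example, write_opw_env /tmp/opw.env produces a file you can inspect before copying it into place with root privileges.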

- Run the following commands to set up the Datadog rpm repo on your system:

cat <<EOF > /etc/yum.repos.d/datadog-observability-pipelines-worker.repo
[observability-pipelines-worker]
name = Observability Pipelines Worker
baseurl = https://yum.datadoghq.com/stable/observability-pipelines-worker-1/\$basearch/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://keys.datadoghq.com/DATADOG_RPM_KEY_CURRENT.public
       https://keys.datadoghq.com/DATADOG_RPM_KEY_4F09D16B.public
       https://keys.datadoghq.com/DATADOG_RPM_KEY_B01082D3.public
       https://keys.datadoghq.com/DATADOG_RPM_KEY_FD4BF915.public
       https://keys.datadoghq.com/DATADOG_RPM_KEY_E09422B3.public
EOF

Note: If you are running RHEL 8.1 or CentOS 8.1, use repo_gpgcheck=0 instead of repo_gpgcheck=1 in the configuration above.

- Update your packages and install the Worker:

sudo yum makecache
sudo yum install observability-pipelines-worker

- Add your keys and the site to the Worker’s environment variables. The command uses sudo tee so that the file is written with root privileges:

sudo tee /etc/default/observability-pipelines-worker > /dev/null <<-EOF
DD_API_KEY=<API_KEY>
DD_OP_PIPELINE_ID=<PIPELINE_ID>
DD_SITE=<SITE>
EOF

- Download the sample configuration file to /etc/observability-pipelines-worker/pipeline.yaml on the host.

- Start the Worker:

sudo systemctl restart observability-pipelines-worker

- Download the sample configuration.

- Set up the Worker module in your existing Terraform using the sample configuration. Make sure to update the values in vpc-id, subnet-ids, and region to match your AWS deployment in the configuration. Also, update the values in datadog-api-key and pipeline-id to match your pipeline.

Only use CloudFormation installs for non-production-level workloads.

To install the Worker in your AWS Account, use the CloudFormation template to create a Stack:

Download the CloudFormation template for the Worker.

In the CloudFormation console, click Create stack, and select the With new resources (standard) option.

Make sure that the Template is ready option is selected, and select Upload a template file. Click Choose file and add the CloudFormation template file you downloaded earlier. Click Next.

Enter a name for the stack in Specify stack details.

Fill in the parameters for the CloudFormation template. A few require special attention:

- For APIKey and PipelineID, provide the key and ID that you gathered earlier in the Prerequisites section.

- For the VPCID and SubnetIDs, provide the subnets and VPC you chose earlier.

- All other parameters are set to reasonable defaults for a Worker deployment, but you can adjust them for your use case as needed.

Click Next.

Review and make sure the parameters are as expected. Click the necessary permissions checkboxes for IAM, and click Submit to create the Stack.

CloudFormation handles the installation at this point; the Worker instances are launched, download the necessary software, and start running automatically.

See Configurations for more information about the source, transform, and sink used in the sample configuration. See Working with Data for more information on transforming your data.

Load balancing

Production-oriented setup is not included in the Docker instructions. Instead, refer to your company’s standards for load balancing in containerized environments. If you are testing on your local machine, configuring a load balancer is unnecessary.

Use the load balancers provided by your cloud provider. They adjust based on autoscaling events that the default Helm setup is configured for. The load balancers are internal-facing, so they are only accessible inside your network.

Use the load balancer URL given to you by Helm when you configure the Datadog Agent.

The load balancers are NLBs provisioned by the AWS Load Balancer Controller.

See Capacity Planning and Scaling for load balancer recommendations when scaling the Worker.

Cross-availability-zone load balancing

The provided Helm configuration tries to simplify load balancing, but you must take into consideration the potential price implications of cross-AZ traffic. Wherever possible, the samples try to avoid creating situations where multiple cross-AZ hops can happen.

The sample configurations do not enable the cross-zone load balancing feature available in this controller. To enable it, add the following annotation to the service block:

service.beta.kubernetes.io/aws-load-balancer-attributes: load_balancing.cross_zone.enabled=true

See AWS Load Balancer Controller for more details.

On Google GKE, Global Access is enabled by default because it is likely required for use in a shared tools cluster.

No built-in support for load balancing is provided, given the single-machine nature of the installation. Provision your own load balancers according to your company’s standards.

An NLB is provisioned by the Terraform module, and configured to point at the instances. Its DNS address is returned in the lb-dns output in Terraform.

Only use CloudFormation installs for non-production-level workloads.

An NLB is provisioned by the CloudFormation template, and is configured to point at the AutoScaling Group. Its DNS address is returned in the LoadBalancerDNS CloudFormation output.

Buffering

Observability Pipelines includes multiple buffering strategies that allow you to increase the resilience of your cluster to downstream faults. The provided sample configurations use disk buffers, the capacities of which are rated for approximately 10 minutes of data at 10Mbps/core for Observability Pipelines deployments. That is often enough time for transient issues to resolve themselves, or for incident responders to decide what needs to be done with the observability data.
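To get a feel for what a "minutes of data at a given rate per core" figure means in bytes, the arithmetic can be sketched as below. This is an illustration only: it reads 10Mbps as 10 megabits per second (if the figure is meant as megabytes, the result is eight times larger), and buffer_bytes is a hypothetical helper, not part of the Worker.

```shell
# Bytes needed to hold MINUTES of data arriving at RATE_MBPS megabits per
# second per core, across CORES cores. Uses integer arithmetic only.
# Assumption: 1 megabit = 1,000,000 bits, and 8 bits per byte.
buffer_bytes() {
  rate_mbps="$1"; cores="$2"; minutes="$3"
  echo $(( rate_mbps * 1000000 / 8 * cores * minutes * 60 ))
}
```

Under that assumption, buffer_bytes 10 1 10 prints 750000000, that is, roughly 750 MB per core for a 10-minute window.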

By default, the Observability Pipelines Worker’s data directory is set to /var/lib/observability-pipelines-worker. Make sure that your host machine has a sufficient amount of storage capacity allocated to the container’s mountpoint.

For AWS, Datadog recommends using the io2 EBS drive family. Alternatively, gp3 drives can also be used.

For Azure AKS, Datadog recommends using the default (also known as managed-csi) disks.

For Google GKE, Datadog recommends using the premium-rwo drive class because it is backed by SSDs. The HDD-backed class, standard-rwo, might not provide enough write performance for the buffers to be useful.

By default, the Observability Pipelines Worker’s data directory is set to /var/lib/observability-pipelines-worker. If you are using the sample configuration, ensure that this directory has at least 288GB of space available for buffering.

Where possible, mount a separate SSD at that location.
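A quick way to confirm the space requirement on a host is to ask df how much is free on the filesystem that holds the data directory. The following is a sketch, assuming a POSIX df and awk are available; has_free_space is a hypothetical name, and it treats a gigabyte as 1024 x 1024 kilobytes.

```shell
# Succeed if the filesystem holding DIR has at least MIN_GB gigabytes free.
has_free_space() {
  dir="$1"; min_gb="$2"
  # df -Pk prints POSIX-format output in 1024-byte blocks;
  # the fourth column of the second line is the available space.
  avail_kb="$(df -Pk "$dir" | awk 'NR == 2 { print $4 }')"
  [ -n "$avail_kb" ] && [ "$avail_kb" -ge $(( min_gb * 1024 * 1024 )) ]
}
```

For the sample configuration, the check would be has_free_space /var/lib/observability-pipelines-worker 288, which returns a non-zero status when the directory is missing or too small.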

By default, a 288GB EBS drive is allocated to each instance, and the sample configuration above is set to use that for buffering.

EBS drives created by this CloudFormation template have their lifecycle tied to the instance they are created with. This leads to data loss if an instance is terminated, for example by the AutoScaling Group. For this reason, only use CloudFormation installs for non-production-level workloads.

By default, a 288GB EBS drive is allocated to each instance, and is auto-mounted and formatted upon instance boot.

Connect the Datadog Agent to the Observability Pipelines Worker

To send Datadog Agent logs to the Observability Pipelines Worker, update your Agent configuration with the following:

observability_pipelines_worker:
  logs:
    enabled: true
    url: "http://<OPW_HOST>:8282"

OPW_HOST is the IP of the load balancer or machine you set up earlier. For single-host Docker-based installs, this is the IP address of the underlying host. For Kubernetes-based installs, you can retrieve it by running the following command and copying the EXTERNAL-IP:

kubectl get svc opw-observability-pipelines-worker

For Terraform installs, the lb-dns output provides the necessary value. For CloudFormation installs, the LoadBalancerDNS CloudFormation output has the correct URL to use.
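Once you know the host, the Agent configuration fragment can be generated rather than edited by hand, which helps when the same change is rolled out to many Agents. The sketch below only prints the fragment shown earlier with the host filled in; opw_agent_config is a hypothetical helper, and port 8282 is taken from the examples in this guide.

```shell
# Print the Agent configuration fragment for a given Worker host.
# The host may be an IP address or a load balancer DNS name.
opw_agent_config() {
  host="$1"
  cat <<EOF
observability_pipelines_worker:
  logs:
    enabled: true
    url: "http://${host}:8282"
EOF
}
```

For example, opw_agent_config 10.0.0.5 prints a fragment whose url is "http://10.0.0.5:8282". On Kubernetes, the host can also be read non-interactively with kubectl get svc opw-observability-pipelines-worker -o jsonpath='{.status.loadBalancer.ingress[0].hostname}' (or .ip instead of .hostname, depending on what your provider reports).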

At this point, your observability data should be flowing to the Worker and available for processing.

Updating deployment modes

After deploying a pipeline, you can also switch deployment methods, such as going from a manually managed pipeline to a remote configuration enabled pipeline or vice versa.

If you want to switch from a remote configuration deployment to a manually managed deployment:

- Navigate to Observability Pipelines and select the pipeline.

- Click the settings cog.

- In Deployment Mode, select Manual to enable it.

- Set the DD_OP_REMOTE_CONFIGURATION_ENABLED flag to false and restart the Worker. Workers that are not restarted with this flag continue to be remote configuration enabled, which means that the Workers are not updated manually through a local configuration file.

If you want to switch from manually managed deployment to a remote configuration deployment:

- Navigate to Observability Pipelines and select the pipeline.

- Click the settings cog.

- In Deployment Mode, select Remote Configuration to enable it.

- Set the DD_OP_REMOTE_CONFIGURATION_ENABLED flag to true and restart the Worker. Workers that are not restarted with this flag do not poll for configurations deployed in the UI.

- Deploy a version in your version history, so that the Workers receive the new version configuration. Click on a version. Click Edit as Draft and then click Deploy.
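How you set the flag depends on how the Worker was installed. As one illustration, the sketch below assumes the flag lives in a KEY=VALUE environment file such as the /etc/default/observability-pipelines-worker file used by the Linux package installs; that placement is an assumption, and set_env_flag is a hypothetical helper.

```shell
# Set KEY=VALUE in an environment file, replacing any existing KEY line.
set_env_flag() {
  file="$1"; key="$2"; value="$3"
  tmp="$(mktemp)" || return 1
  # Keep every line except an existing assignment of KEY.
  # grep exits non-zero when nothing is left to print, which is fine here.
  if [ -f "$file" ]; then
    grep -v "^${key}=" "$file" > "$tmp" || true
  fi
  printf '%s=%s\n' "$key" "$value" >> "$tmp"
  # Copy the contents back instead of moving the temp file,
  # so the original file keeps its owner and permissions.
  cat "$tmp" > "$file"
  rm -f "$tmp"
}
```

For example, set_env_flag /etc/default/observability-pipelines-worker DD_OP_REMOTE_CONFIGURATION_ENABLED false (run with root privileges) followed by sudo systemctl restart observability-pipelines-worker would apply the change on a package-based install.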

Working with data

The sample configuration includes example processing steps that demonstrate Observability Pipelines tools and ensure that data sent to Datadog is in the correct format.

Processing logs

The sample Observability Pipelines configuration does the following:

- Collects logs sent from the Datadog Agent to the Observability Pipelines Worker.

- Tags logs coming through the Observability Pipelines Worker. This helps determine what traffic still needs to be shifted over to the Worker as you update your clusters. These tags also show you how logs are being routed through the load balancer, in case there are imbalances.

- Corrects the status of logs coming through the Worker. Due to how the Datadog Agent collects logs from containers, the provided .status attribute does not properly reflect the actual level of the message. It is removed to prevent issues with parsing rules in the backend, where logs are received from the Worker.

The following are two important components in the example configuration:

- logs_parse_ddtags: Parses the tags that are stored in a string into structured data.

- logs_finish_ddtags: Re-encodes the tags so that they are in the same format the Datadog Agent would send.

Internally, the Datadog Agent represents log tags as a CSV in a single string. To effectively manipulate these tags, they must be parsed, modified, and then re-encoded before they are sent to the ingest endpoint. These steps are written to automatically perform those actions for you. Any modifications you make to the pipeline, especially for manipulating tags, should be in between these two steps.
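The parse, modify, and re-encode sequence can be pictured with a small shell analogy. This is not how the Worker implements logs_parse_ddtags or logs_finish_ddtags; it only mimics the idea on a comma-separated tag string, and the function names and the via:opw tag are hypothetical.

```shell
# Split a comma-separated tag string into one tag per line.
# An empty string yields no output at all.
parse_ddtags() {
  [ -n "$1" ] || return 0
  printf '%s\n' "$1" | tr ',' '\n'
}

# Join one-tag-per-line input back into a comma-separated string.
encode_ddtags() {
  paste -sd ',' -
}

# Parse the tag string, append one tag, and re-encode the result.
add_tag() {
  { parse_ddtags "$1"; printf '%s\n' "$2"; } | encode_ddtags
}
```

Calling add_tag 'env:prod,service:web' 'via:opw' prints env:prod,service:web,via:opw. The modification happens between the parse and encode steps, which mirrors where pipeline changes belong relative to the two components.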

At this point, your environment is configured for Observability Pipelines with data flowing through it. Further configuration is likely required for your specific use cases, but the tools provided give you a starting point.
